import daft

import matplotlib.pyplot as plt

import warnings

import logging

# Suppress font warnings

warnings.filterwarnings("ignore", category=UserWarning, module="matplotlib.font_manager")

# Also lower logging level for matplotlib font manager

logging.getLogger('matplotlib.font_manager').setLevel(logging.ERROR)

pgm = daft.PGM([6.5, 4.5], node_unit=1.2)

# Hyperparameters

pgm.add_node(daft.Node("alpha", r"$\alpha$", 2, 4));

pgm.add_node(daft.Node("beta", r"$\beta$", 4, 4));

pgm.add_node(daft.Node("lambda_1", r"$\lambda_1$", 1, 3));

pgm.add_node(daft.Node("lambda_2", r"$\lambda_2$", 2, 3));

pgm.add_node(daft.Node("lambda_3", r"$\lambda_3$", 3, 3));

pgm.add_node(daft.Node("lambda_4", r"$\lambda_4$", 4, 3));

pgm.add_node(daft.Node("lambda_5", r"$\lambda_5$", 5, 3));

# Observed data y_i, grouped under lambda_l

pgm.add_node(daft.Node("y_1", r"$y_i$", 1, 2, observed=True));

pgm.add_node(daft.Node("y_2", r"$y_i$", 2, 2, observed=True));

pgm.add_node(daft.Node("y_3", r"$y_i$", 3, 2, observed=True));

pgm.add_node(daft.Node("y_4", r"$y_i$", 4, 2, observed=True));

pgm.add_node(daft.Node("y_5", r"$y_i$", 5, 2, observed=True));

# Edges

pgm.add_edge("alpha", "lambda_1");

pgm.add_edge("alpha", "lambda_2");

pgm.add_edge("alpha", "lambda_3");

pgm.add_edge("alpha", "lambda_4");

pgm.add_edge("alpha", "lambda_5");

pgm.add_edge("beta", "lambda_1");

pgm.add_edge("beta", "lambda_2");

pgm.add_edge("beta", "lambda_3");

pgm.add_edge("beta", "lambda_4");

pgm.add_edge("beta", "lambda_5");

pgm.add_edge("lambda_1", "y_1");

pgm.add_edge("lambda_2", "y_2");

pgm.add_edge("lambda_3", "y_3");

pgm.add_edge("lambda_4", "y_4");

pgm.add_edge("lambda_5", "y_5");

# Plates

pgm.add_plate(daft.Plate([0.6, 1.5, 0.8, 1], label=r"$i=l_i=1$", shift=-0.1));

pgm.add_plate(daft.Plate([1.6, 1.5, 0.8, 1], label=r"$i=l_i=2$", shift=-0.1));

pgm.add_plate(daft.Plate([2.6, 1.5, 0.8, 1], label=r"$i=l_i=3$", shift=-0.1));

pgm.add_plate(daft.Plate([3.6, 1.5, 0.8, 1], label=r"$i=l_i=4$", shift=-0.1));

pgm.add_plate(daft.Plate([4.6, 1.5, 0.8, 1], label=r"$i=l_i=5$", shift=-0.1));

pgm.render()

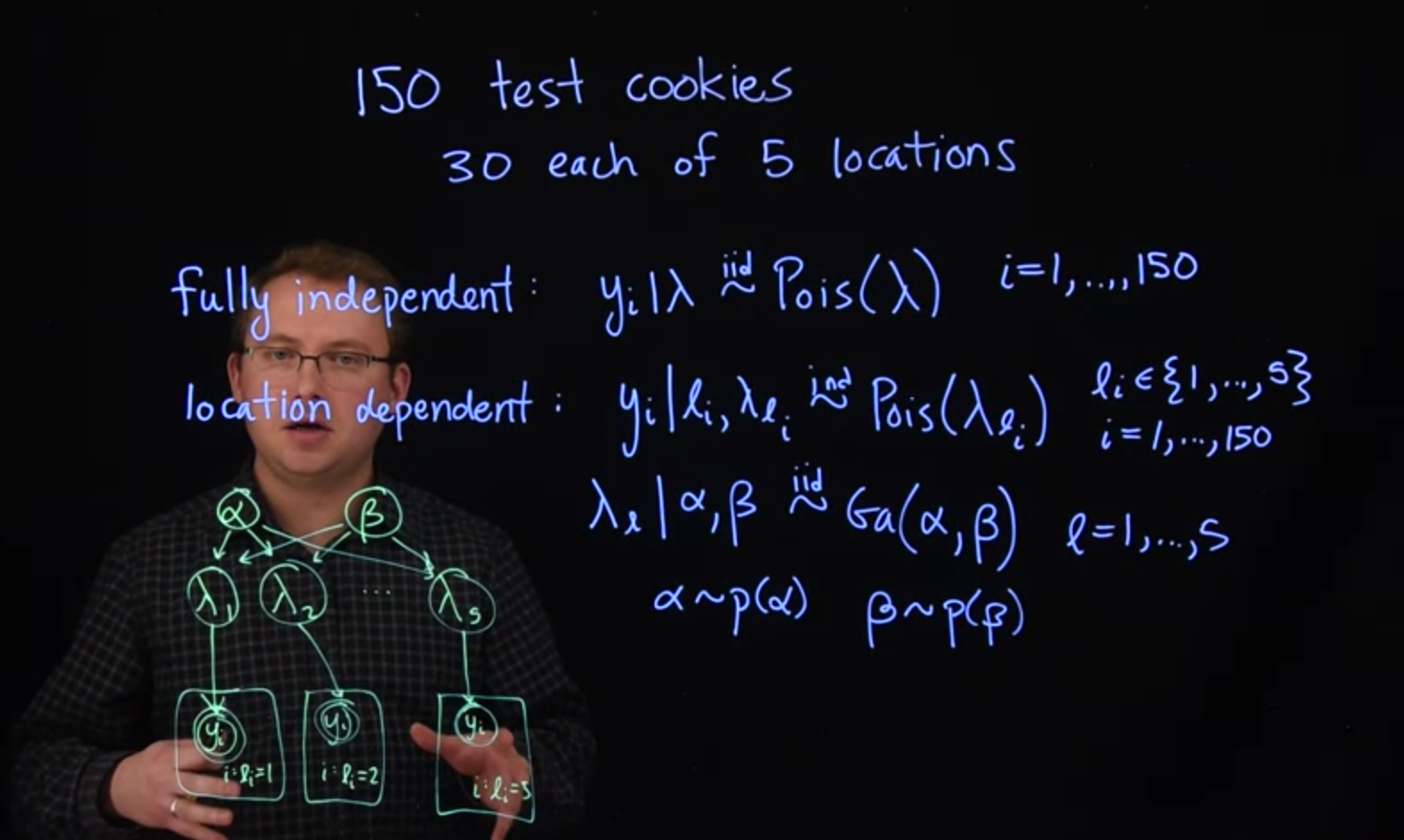

plt.show()50.1 Introduction to Hierarchical Modeling

While the section shows the presence of correlation within groups, I wonder if we really need the iid assumptions below, particularly as we are told in (McElreath 2015) that hierarchical models can handle correlated data.

Throughout the last few lessons, we have assumed that all the observations were independent. But more often than not, this is too strong an assumption to make about our data. There is often a natural grouping to our data points, which leads us to believe that some observation pairs should be more similar to each other than to others.

Let’s look at an example. In the previous course, we talked about using a Poisson model to count chocolate chips in cookies.

Let’s suppose that you own a company that produces chocolate chip cookies. In an experiment, you’re going to produce 150 test cookies. Let’s say you’ll do 30 from your location and then 30 from four other factory locations. So let’s write this as 30 from each of 5 locations. We’re going to assume that all the locations use the same recipe when they make their chocolate chip cookies.

I’d say probably.

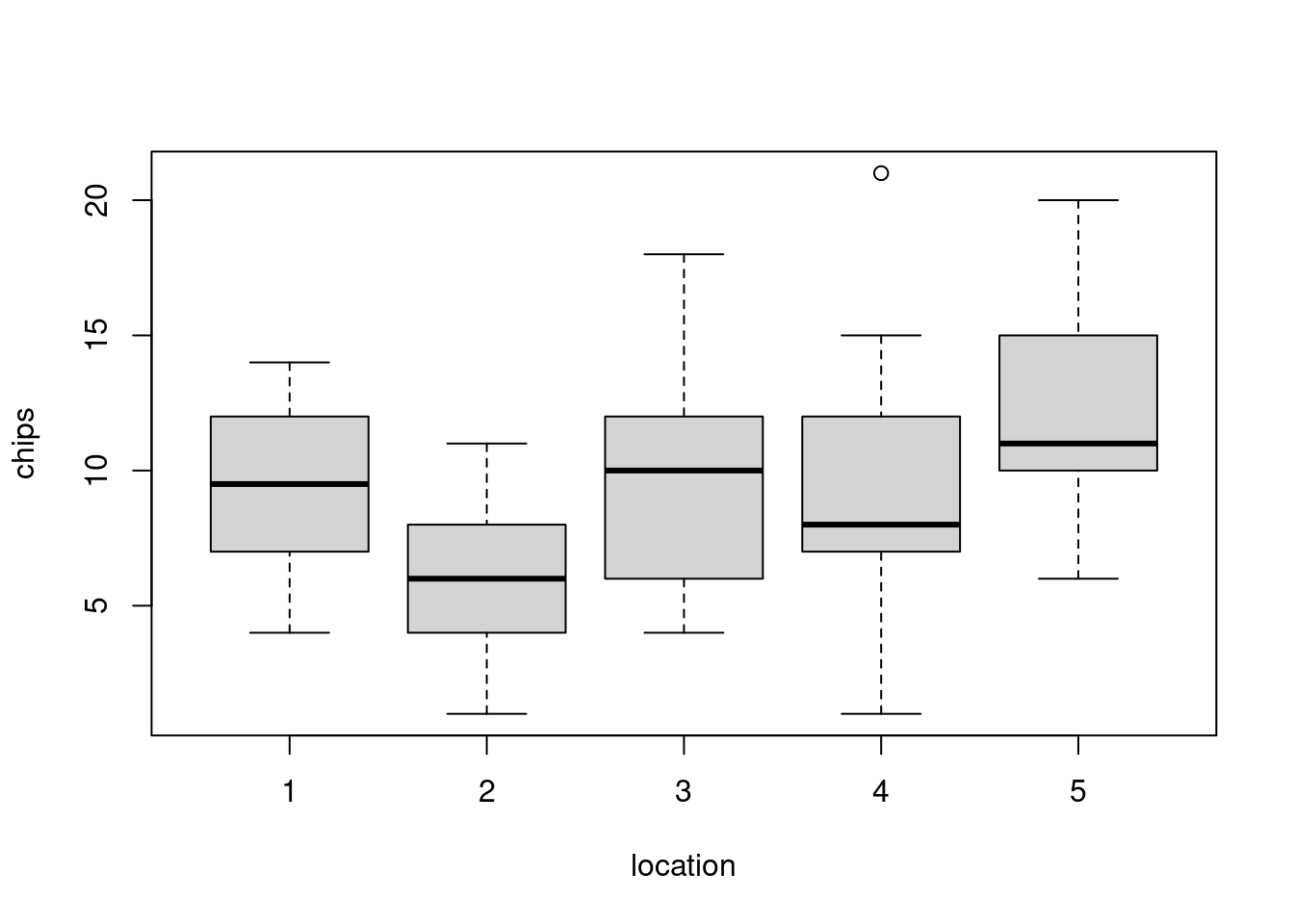

There’s a natural grouping to the cookies. By making it a hierarchical model, we can account for the likely differences between locations in our Poisson model.

The original fully independent model for model for Poisson, the number of chips in the cookies would have looked like this.

N=150 \quad \text{number of cookies}

B=30 \quad \text{from each locations}

50.1.1 Fully independent model

Fitting a single model to all data: This approach treats all observations as independent, ignoring any potential differences or relationships between groups. In the cookie example, fitting a single Poisson model to all 150 cookies would “be ignoring potential differences between locations and the fact that cookies from the same location are likely to be more similar to each other.”

This kind of model is called fully independent model or an completely pooled model as we consider all the cookies belonging to a single group

y_i \mid \lambda \stackrel{iid}{\sim} \mathrm{Pois}(\lambda) \qquad i=1, \ldots, N \qquad \tag{50.1}

Where \lambda is the expected number of chips per cookie.

At the other extreme we can think of fitting a model for each group separately. This kind of model is called a unpooled model.

50.1.2 Location-dependent model

Hierarchical models offer a powerful solution, acting as a “good compromise” between the two extreme approaches described above. They allow for the acknowledgment of natural groupings in data while still leveraging information across those groups. This is also called a partially pooled model, as it allows for some sharing of information between groups while still allowing for group-specific parameters.

In (Gelman et al. 2013, secs. 4.5,5.5,7.3) the authors describe the trade-offs between these types of options and how hierarchical models provide a good compromise. Hierarchical models, can incorporate a sufficient number of parameters to fit data well, while simultaneously using a population distribution to introduce dependence among parameters, thereby avoiding overfitting. This approach allows the parameters of a prior, or population, distribution to be estimated from the data itself

Also In (McElreath 2015), the author describes how shrinkage estimators can be used in hierarchical models to improve parameter estimates. But to keep things simple, we can say that shrinkage is how parameters for groups with less data are “pulled” towards the overall mean, while groups with more data are less affected by this pull.

\begin{aligned} y_i \mid l_i, \lambda_{l_i} & \stackrel{iid}{\sim} \mathrm{Pois}(\lambda_{l_i}) & i=1, \ldots, N \\ \lambda_{l_i} |\alpha, \beta & \sim \mathrm{Gamma}(\alpha, \beta) & l_i = 1, \ldots, B \\ \alpha &\sim \mathrm{Prior}(\alpha) & \text {(hyperparameters)} \\ \beta &\sim \mathrm{Prior}(\beta) & \text {(hyperparameters)} \end{aligned} \tag{50.2}

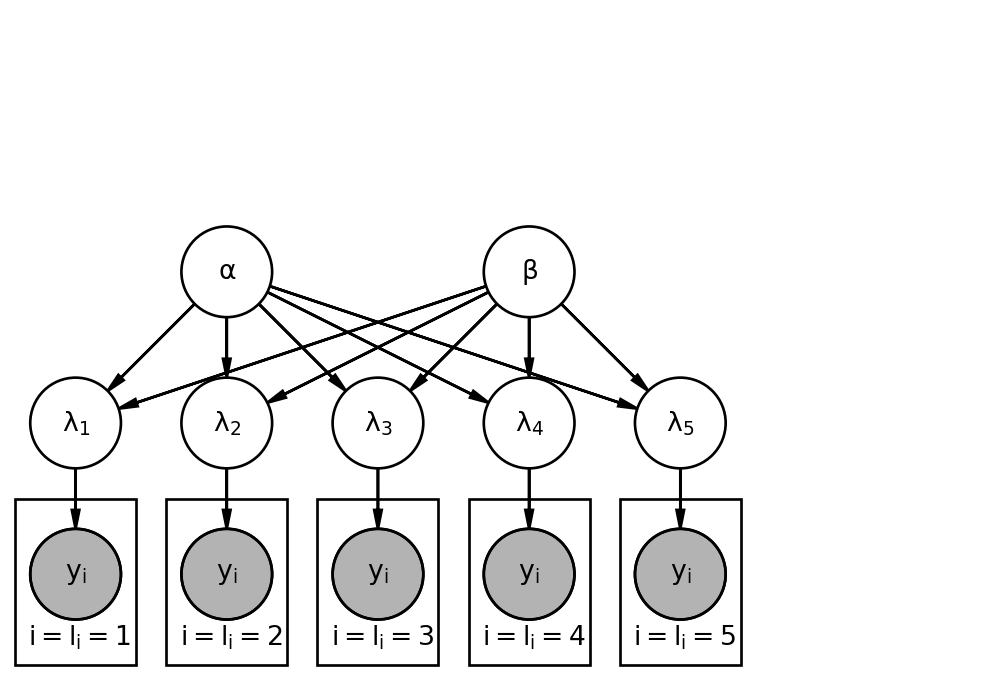

50.1.3 Graphical Model

The structure of a hierarchical model can be visualized as follows:

- Top Level (Hyperparameters): Independent parameters like \alpha and \beta.

- Second Level (Group Parameters): Parameters for each group (e.g., \lambda_1, \lambda_2, \dots, \lambda_5) that are dependent on the hyperparameters.

- Lowest Level (Observations): Individual observations (e.g., cookies) are grouped by their respective locations, and their distributions depend on the specific group parameter.

This multi-level structure allows for the estimation of the hyperparameters from the group-specific parameters.

A primary advantage of hierarchical models is their ability to share information, or borrow strength, from all the data.

- Indirect Information Sharing: Even though groups might have their own specific parameters (e.g., your location’s \lambda_1), the common distribution from which these parameters are drawn means that “information about another location’s cookies might provide information about your cookies, at least indirectly.”

- Improved Parameter Estimation: This shared information leads to more robust and accurate parameter estimates. In the cookie example, “your lambda is not only estimated directly from your 30 cookies, but also indirectly from the other 120 cookies leveraging this hierarchical structure.”

The overarching benefit of hierarchical models is their capacity for “Being able to account for relationships in the data while estimating everything with the single model is a primary advantage of using hierarchical models.” This provides a flexible and powerful framework for analyzing data with inherent group structures, leading to more realistic and informative inferences.

50.2 Correlations within the Normal hierarchical model 🗒️

This section is based on the handout titled Correlation from hierarchical models which deep dives into aspects of correlated quantities within hierarchical models by considering the special case of a Normal model.

50.2.2 Normal hierarchical model

Suppose our data come from a normal distribution, where each group has its own mean.

In the second stage, we assume that all the group means come from a common normal distribution.

Let’s write this hierarchical model. We y_{i,j} denote the i th observation in group j, with mean \theta_j. Thus we get: \begin{aligned} y_{i,j} \mid \theta_j &\stackrel{iid}{\sim} \mathcal{N}(\theta_j, \sigma^2) \\ \theta_j &\stackrel{iid}{\sim} \mathcal{N}(\mu, \tau^2) \end{aligned}

where \sigma^2, \tau^2, and \mu are known constants. To get the marginal distribution of y_{i,j} only, we must compute:

\begin{aligned} \mathbb{P}r(y_{i,j}) &= \int \mathbb{P}r(y_{i,j}, \theta_j) d\theta_j \\ &= \int \mathbb{P}r(y_{i,j} \mid \theta_j) \mathbb{P}r(\theta_j) d\theta_j \end{aligned}

With normally distributed variables, it is often easier to work with the following equivalent formulation of the model

\begin{aligned} \theta_j &= \mu + \nu_j, & \nu_j &\stackrel{iid}{\sim} \mathcal{N}(0, \tau^2) \\ y_{i,j} &= \theta_j + \varepsilon_{i,j}, & \varepsilon_{i,j} &\stackrel{iid}{\sim} \mathcal{N}(0, \sigma^2) \end{aligned}

with all \nu_j and \varepsilon_{i,j} independent. This allows us to substitute \theta_j into the expression for y_{i,j} to get y_{i,j} = \mu + \nu_j + \varepsilon_{i,j}.

One nice property of normal random variables is that if X and Y are both normally distributed (correlated or uncorrelated), then the new variable X + Y will also be normally distributed. Hence \mathbb{P}r(y_{i,j}) is a normal distribution with mean

\begin{aligned} \mathbb{E}(y_{i,j}) &= \mathbb{E}(\mu + \nu_j + \varepsilon_{i,j}) \\ &= E(\mu) + E(\nu_j) + E(\varepsilon_{i,j}) \\ &= \mu + 0 + 0 \\ &= \mu \end{aligned}

and variance

\begin{aligned} \mathbb{V}ar(y_{i,j}) =& \mathbb{V}ar(\mu + \nu_j + \varepsilon_{i,j} ) \\ =& \mathbb{C}ov(\mu + \nu_j + \varepsilon_{i,j} , \mu + \nu_j + \varepsilon_{i,j} ) \\ =& \mathbb{C}ov(\mu,\mu) + \mathbb{C}ov(\nu_j ,\nu_j ) + \mathbb{C}ov(\varepsilon_{i,j} ,\varepsilon_{i,j} ) \\ & + 2 \cdot \mathbb{C}ov(\mu,\nu_j ) + 2 \cdot \mathbb{C}ov(\mu,\varepsilon_{i,j} ) \\ & + 2 \cdot \mathbb{C}ov(\nu_j ,\varepsilon_{i,j} ) \\ =& 0 + \mathbb{V}ar(\nu_j ) + \mathbb{V}ar(\varepsilon_{i,j} ) + 0 + 0 + 0 &&& \text{(since } \nu_j \text{ and } \varepsilon_{i,j} \text{ are independent)} \\ =& \tau^2 + \sigma^2 . \end{aligned}

Now, we want to show that observations in the same group are correlated under this hierarchical model.

Let’s take, for example, observations 1 and 2 from group j, y_{1,j} and y_{2,j}. It does not matter which two you select, as long as they are from the same group. We know that \mathbb{V}ar(y_{1,j}) = \mathbb{V}ar(y_{2,j}) = \tau^2 + \sigma^2. What about their covariance?

\begin{aligned} \mathbb{C}ov(y_{1,j},y_{2,j}) &= \mathbb{C}ov(\mu + \nu_j + \varepsilon_{2,j}, \mu + \nu_j + \varepsilon_{2,j}) \\ &= \mathbb{C}ov(\mu,\mu) + \mathbb{C}ov(\nu_j,\nu_j) + \mathbb{C}ov(\varepsilon_{1,j},\varepsilon_{2,j}) + 2 \cdot \mathbb{C}ov(\mu,\nu_j) + \mathbb{C}ov(\mu,\varepsilon_{1,j}) + \mathbb{C}ov(\mu,\varepsilon_{2,j}) + \mathbb{C}ov(\nu_j,\varepsilon_{1,j}) + \mathbb{C}ov(\nu_j,\varepsilon_{2,j}) \\ &= 0 + \mathbb{V}ar(\nu_j) + 0 + 2 \cdot 0 + 0 + 0 + 0 + 0 \qquad \text{(as } \varepsilon_{1,j} \text{ and } \varepsilon_{2,j} \text{ are independent)} &= \tau^2 \end{aligned}

which gives us correlation:

\begin{aligned} \mathbb{C}or(y_{1,j},y_{2,j}) &= \mathbb{C}ov(y_{1,j},y_{2,j})/\sqrt{\mathbb{V}ar(y_{1,j}) \cdot \mathbb{V}ar(y_{2,j})} \\ &= \tau^2/\sqrt{(\tau^2 + \sigma^2) \cdot (\tau^2 + \sigma^2)} \\ &= \tau^2/\sqrt{(\tau^2 + \sigma^2) \cdot (\tau^2 + \sigma^2)} \\ &= \tau^2/\sqrt{(\tau^2 + \sigma^2)^2} \\ &= \frac{\tau^2}{\tau^2 + \sigma^2}. \end{aligned}

Finally, let’s check the covariance between observations in different groups. Let’s take observation i from groups 1 and 2 (again, our choices do not matter), y_{i,1} and y_{i,2}. Their covariance is

\begin{aligned} \mathbb{C}ov(y_{i,1},y_{i,2}) &= \mathbb{C}ov(\mu + \nu_1 + \varepsilon_{i,1}, \mu + \nu_2 + \varepsilon_{i,2}) \\ &= \mathbb{C}ov(\mu,\mu) + \mathbb{C}ov(\nu_1,\nu_2) + \mathbb{C}ov(\varepsilon_{i,1},\varepsilon_{i,2}) \\ &\quad + \mathbb{C}ov(\mu,\nu_1) + \mathbb{C}ov(\mu,\nu_2) + \mathbb{C}ov(\mu,\varepsilon_{i,1}) + \mathbb{C}ov(\mu,\varepsilon_{i,2}) \\ &\quad + \mathbb{C}ov(\nu_1,\varepsilon_{i,1}) + \mathbb{C}ov(\nu_2,\varepsilon_{i,2}) \\ &= 0 + 0 + 0 + 0 + 0 + 0 + 0 + 0 + 0 \qquad \text{(as all } \varepsilon \text{ and } \nu \text{ are independent)} \\ &= 0, \end{aligned}

which obviously yields correlation 0.

Thus, we have demonstrated that observations from the same group are correlated while observations from different groups are uncorrelated in the marginal distribution for observations.

50.3 Applications of hierarchical modeling

Handout: Common applications of Bayesian hierarchical models

50.4 Prior predictive simulation

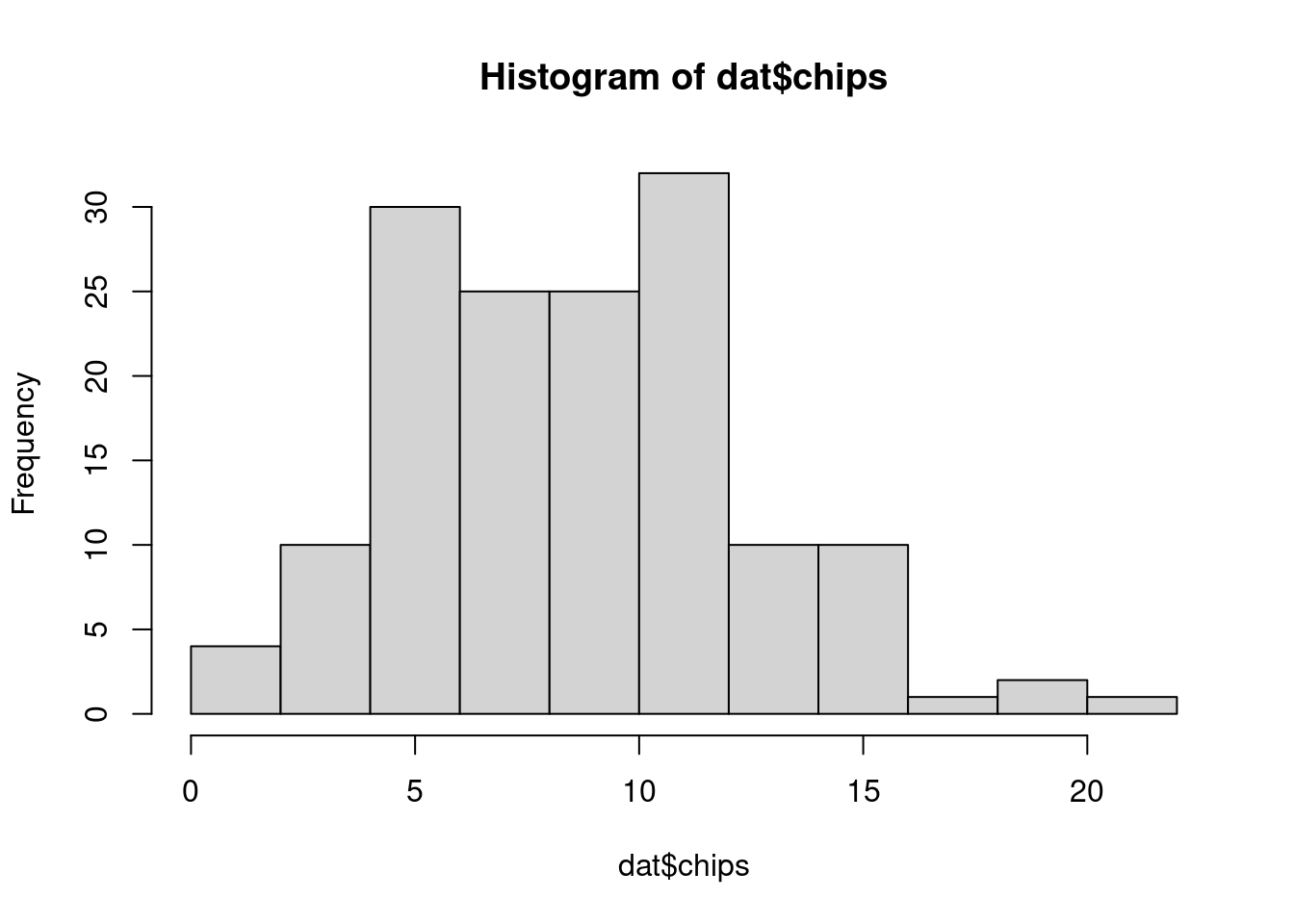

50.4.1 Data

Let’s fit our hierarchical model for counts of chocolate chips. The data can be found in cookies.dat.

dat = read.table(file="data/cookies.dat", header=TRUE)

head(dat) chips location

1 12 1

2 12 1

3 6 1

4 13 1

5 12 1

6 12 1table(dat$location)

1 2 3 4 5

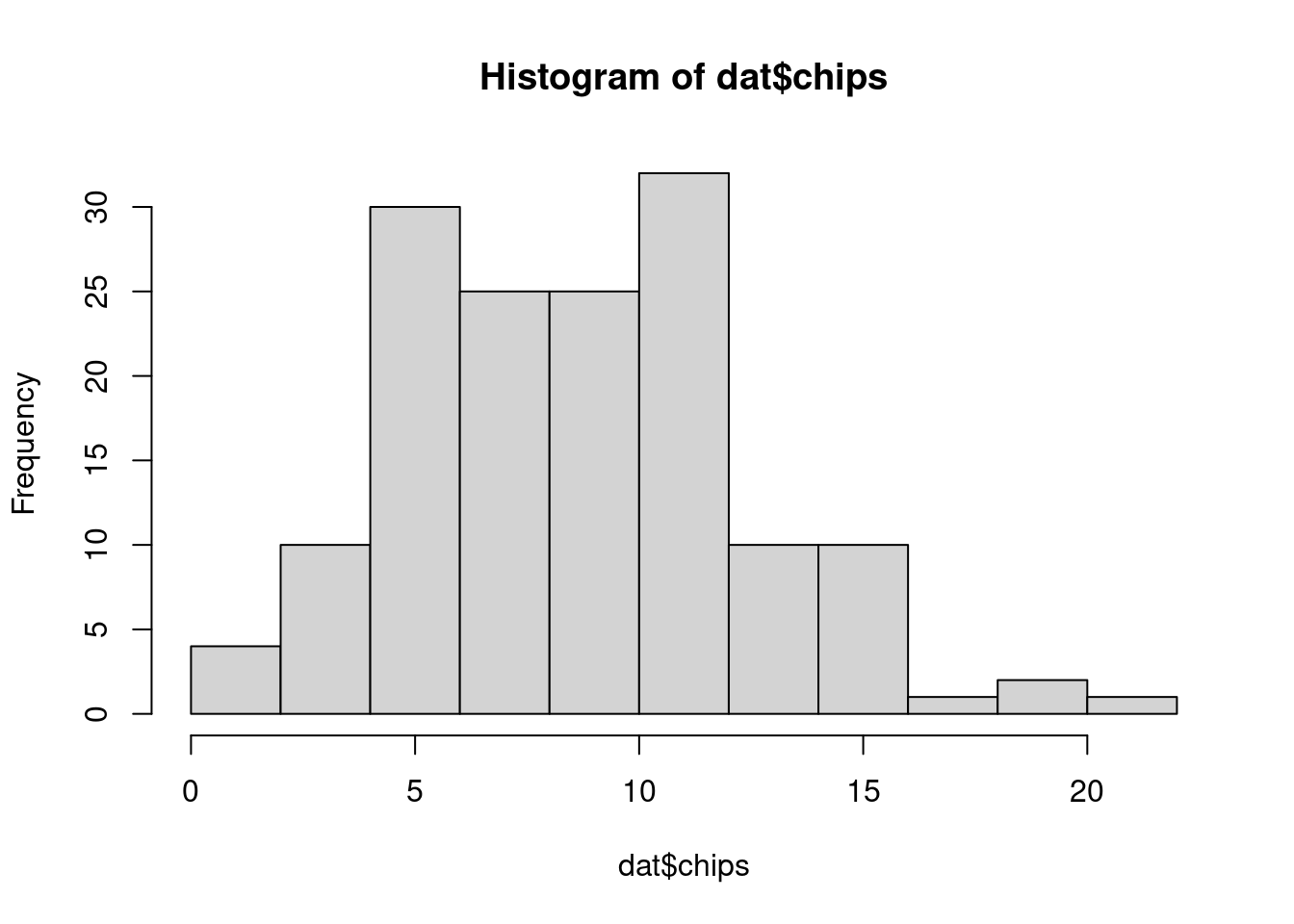

30 30 30 30 30 We can also visualize the distribution of chips by location.

hist(dat$chips)boxplot(chips ~ location, data=dat)50.4.2 Prior predictive checks

Before implementing the model, we need to select prior distributions for \alpha and \beta, the hyperparameters governing the gamma distribution for the \lambda parameters. First, think about what the \lambda’s represent. For location j, \lambda_j is the expected number of chocolate chips per cookie. Hence, \alpha and \beta control the distribution of these means between locations. The mean of this gamma distribution will represent the overall mean of number of chips for all cookies. The variance of this gamma distribution controls the variability between locations. If this is high, the mean number of chips will vary widely from location to location. If it is small, the mean number of chips will be nearly the same from location to location.

To see the effects of different priors on the distribution of \lambda’s, we can simulate. Suppose we try independent exponential priors for \alpha and \beta.

set.seed(112)

n_sim = 500

alpha_pri = rexp(n_sim, rate=1.0/2.0)

beta_pri = rexp(n_sim, rate=5.0)

mu_pri = alpha_pri/beta_pri

sig_pri = sqrt(alpha_pri/beta_pri^2)

summary(mu_pri) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0213 2.9829 9.8522 61.1271 29.9801 4858.7861 summary(sig_pri) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.1834 3.3663 8.5488 41.8137 22.2219 2865.6461 After simulating from the priors for \alpha and \beta, we can use those samples to simulate further down the hierarchy:

lam_pri = rgamma(n=n_sim, shape=alpha_pri, rate=beta_pri)

summary(lam_pri) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.000 1.171 7.668 83.062 28.621 11005.331 Or for a prior predictive reconstruction of the original data set:

(lam_pri = rgamma(n=5, shape=alpha_pri[1:5], rate=beta_pri[1:5]))[1] 66.444084 9.946688 6.028319 15.922568 47.978587(y_pri = rpois(n=150, lambda=rep(lam_pri, each=30))) [1] 63 58 64 63 70 62 61 48 71 73 70 77 66 60 72 77 69 62 66 71 49 80 66 75 74

[26] 55 62 90 65 57 12 9 7 10 12 10 11 7 14 13 9 6 6 13 7 10 12 9 9 10

[51] 7 8 6 9 7 10 13 13 8 12 6 10 3 6 7 4 6 7 5 5 4 3 6 2 8

[76] 4 8 4 5 7 1 4 5 3 8 8 3 1 7 3 16 14 13 17 17 12 13 13 16 16

[101] 15 14 11 10 13 17 16 19 16 17 15 16 7 17 21 16 12 15 14 13 52 44 51 46 39

[126] 40 40 44 46 59 45 49 58 42 31 52 43 47 53 41 48 57 35 60 51 58 36 34 41 59Because these priors have high variance and are somewhat noninformative, they produce unrealistic predictive distributions. Still, enough data would overwhelm the prior, resulting in useful posterior distributions. Alternatively, we could tweak and simulate from these prior distributions until they adequately represent our prior beliefs. Yet another approach would be to re-parameterize the gamma prior, which we’ll demonstrate as we fit the model.

50.5 JAGS Model

library("rjags")Loading required package: codaLinked to JAGS 4.3.2Loaded modules: basemod,bugsmod_string = " model {

for (i in 1:length(chips)) {

chips[i] ~ dpois(lam[location[i]])

}

for (j in 1:max(location)) {

lam[j] ~ dgamma(alpha, beta)

}

alpha = mu^2 / sig^2

beta = mu / sig^2

mu ~ dgamma(2.0, 1.0/5.0)

sig ~ dexp(1.0)

} "

set.seed(113)

data_jags = as.list(dat)

params = c("lam", "mu", "sig")

mod = jags.model(textConnection(mod_string), data=data_jags, n.chains=3)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 150

Unobserved stochastic nodes: 7

Total graph size: 315

Initializing modelupdate(mod, 1e3)

mod_sim = coda.samples(model=mod,

variable.names=params,

n.iter=5e3)

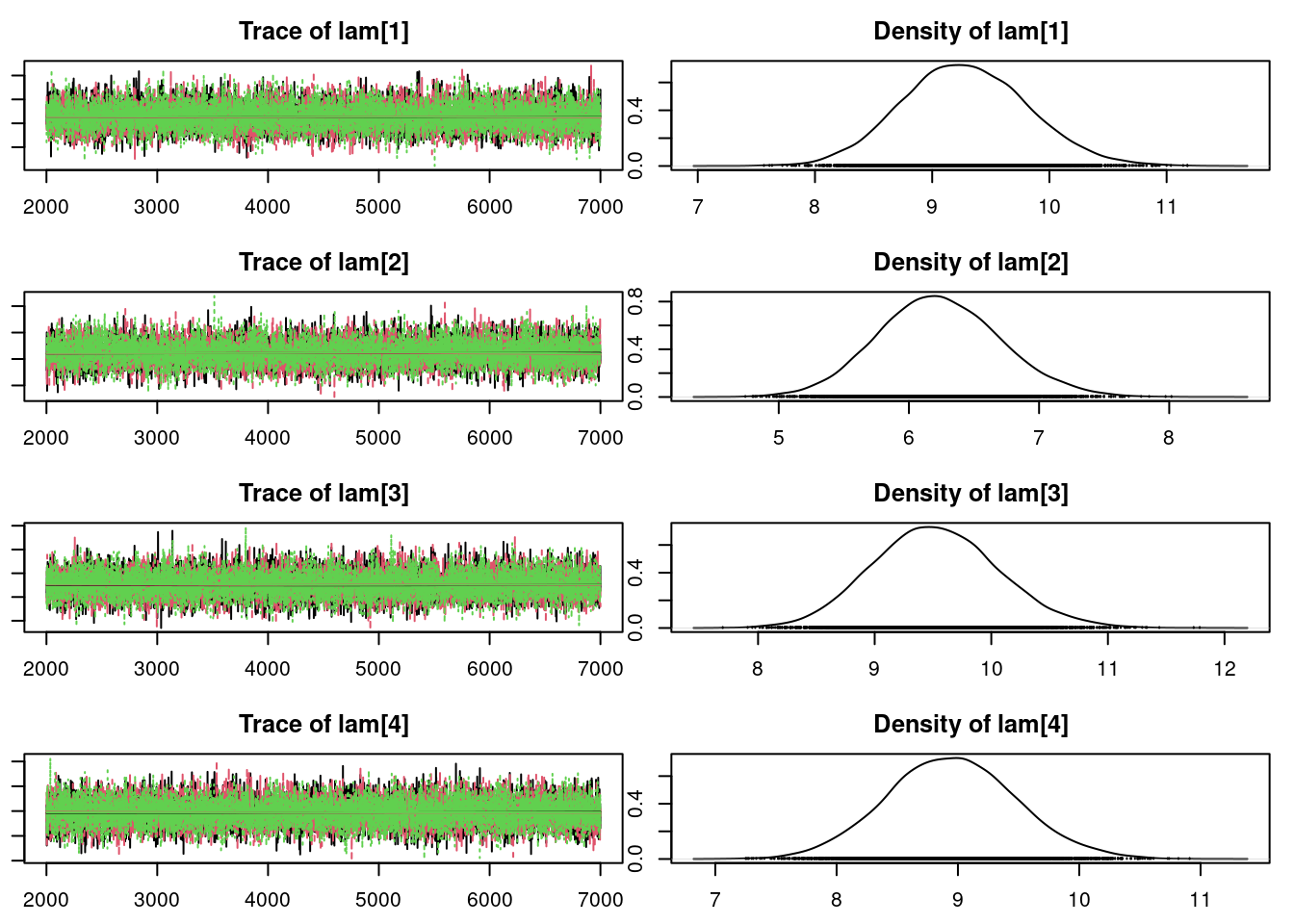

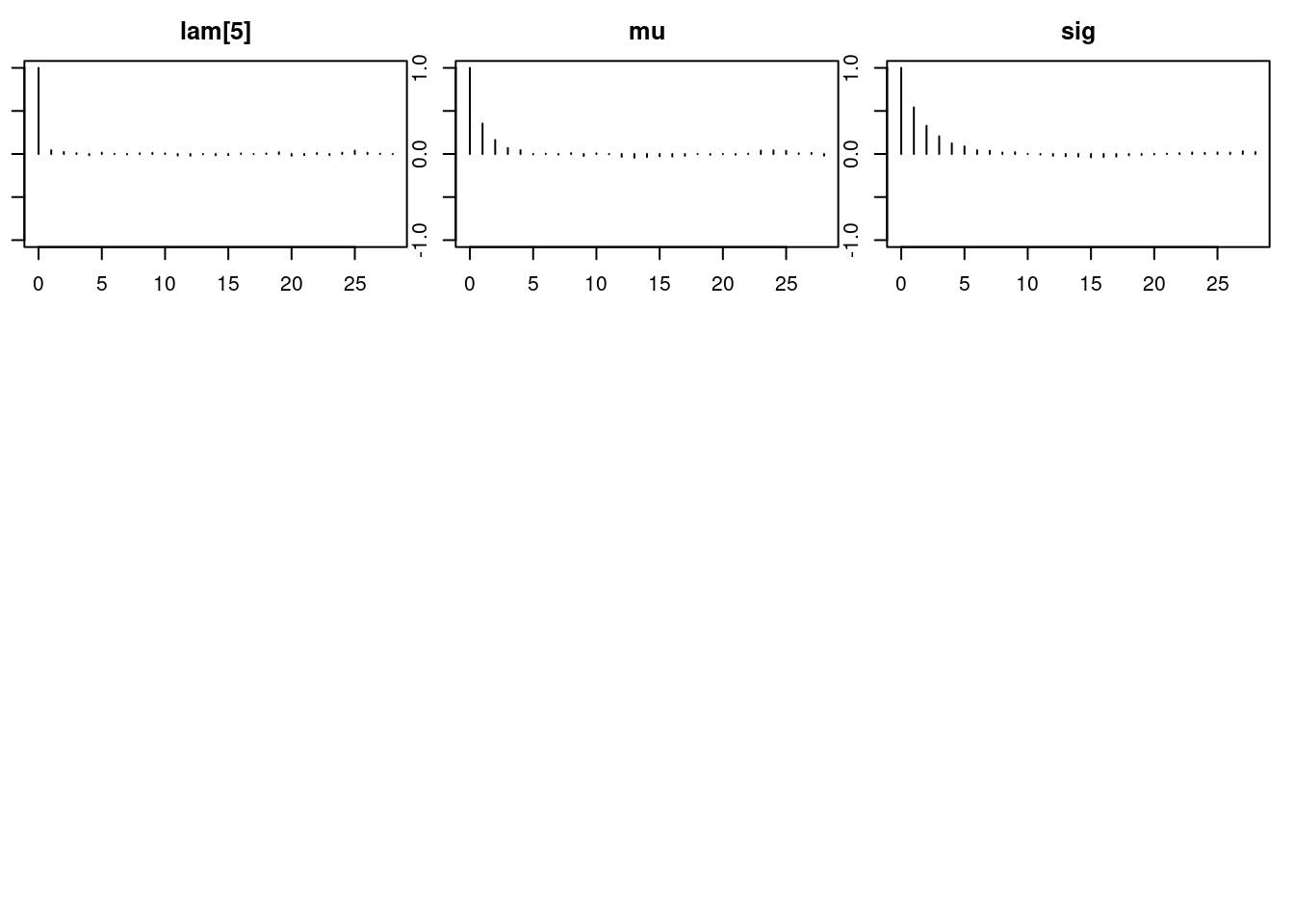

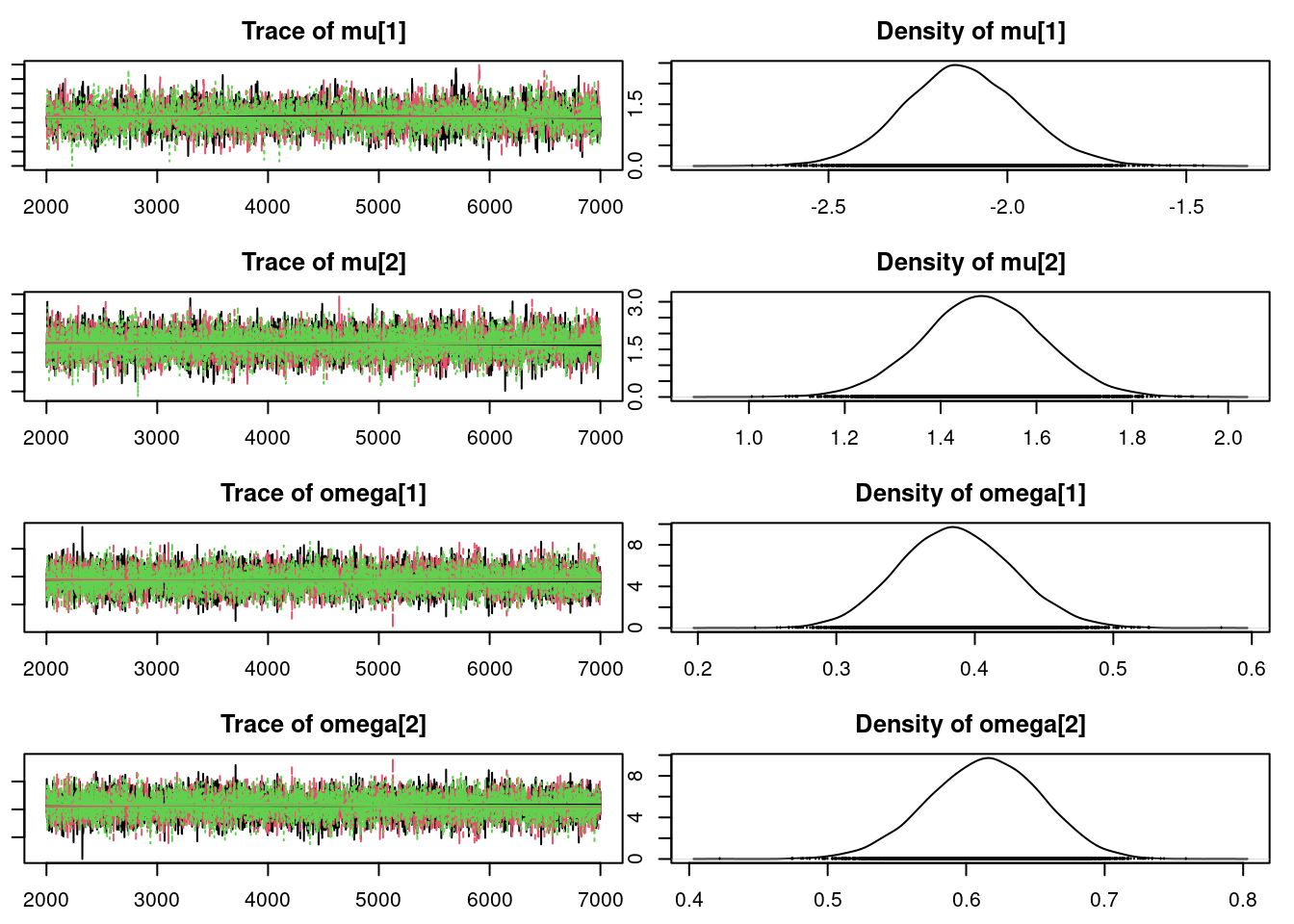

mod_csim = as.mcmc(do.call(rbind, mod_sim))## convergence diagnostics

par(mar = c(2.5, 1, 2.5, 1))

plot(mod_sim)gelman.diag(mod_sim)Potential scale reduction factors:

Point est. Upper C.I.

lam[1] 1 1

lam[2] 1 1

lam[3] 1 1

lam[4] 1 1

lam[5] 1 1

mu 1 1

sig 1 1

Multivariate psrf

1autocorr.diag(mod_sim) lam[1] lam[2] lam[3] lam[4] lam[5]

Lag 0 1.0000000000 1.000000000 1.0000000000 1.0000000000 1.0000000000

Lag 1 0.0174185696 0.116283760 0.0295679140 0.0290422245 0.0578491174

Lag 5 0.0002037479 0.011095694 0.0037110066 0.0031607041 -0.0004427888

Lag 10 -0.0063691431 0.003423878 -0.0001691807 0.0044943636 0.0071217609

Lag 50 0.0036160825 -0.004624143 -0.0063170413 -0.0001712697 -0.0113479246

mu sig

Lag 0 1.000000000 1.000000000

Lag 1 0.359530359 0.546087719

Lag 5 0.021240847 0.116360432

Lag 10 0.011775703 0.031940152

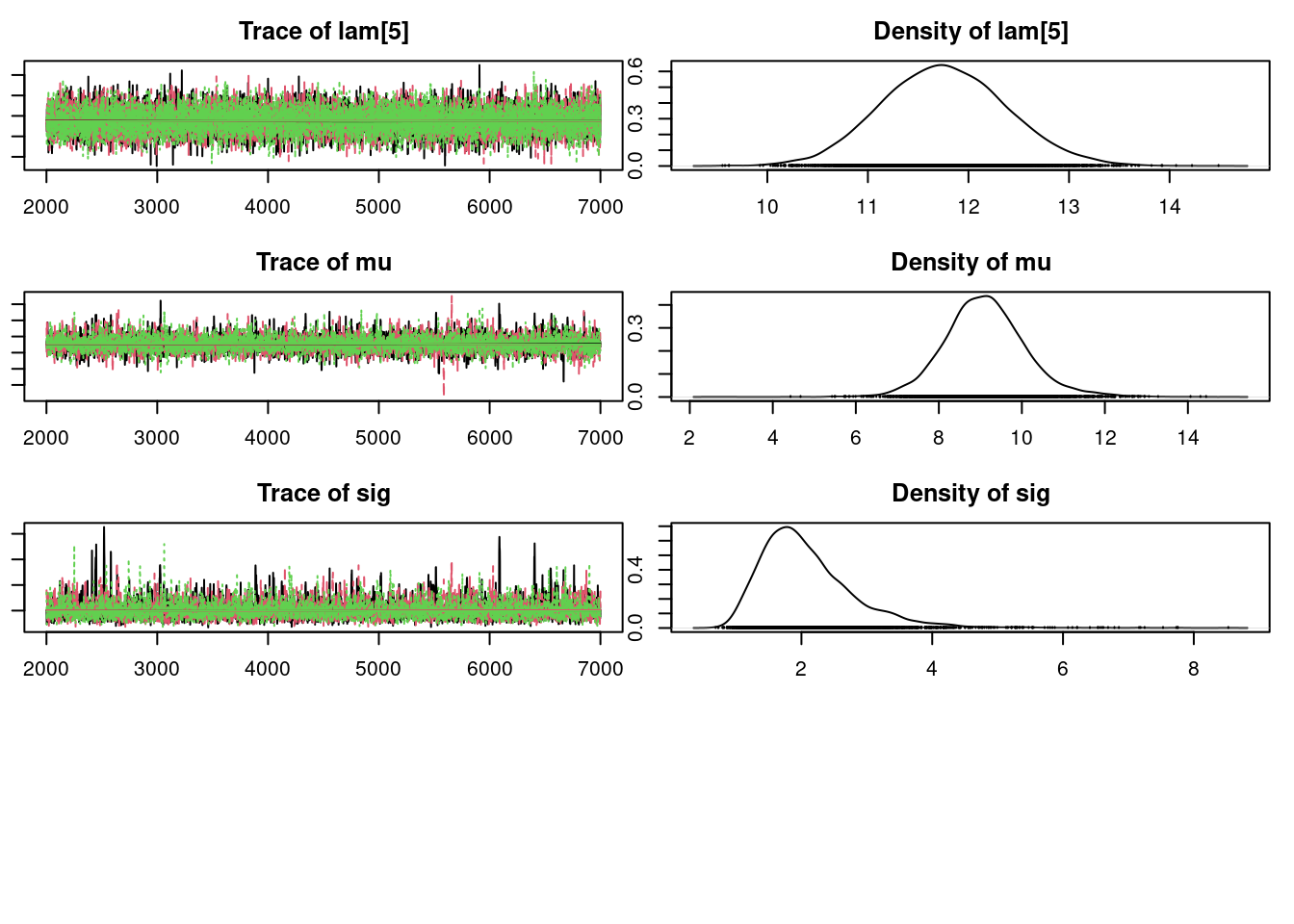

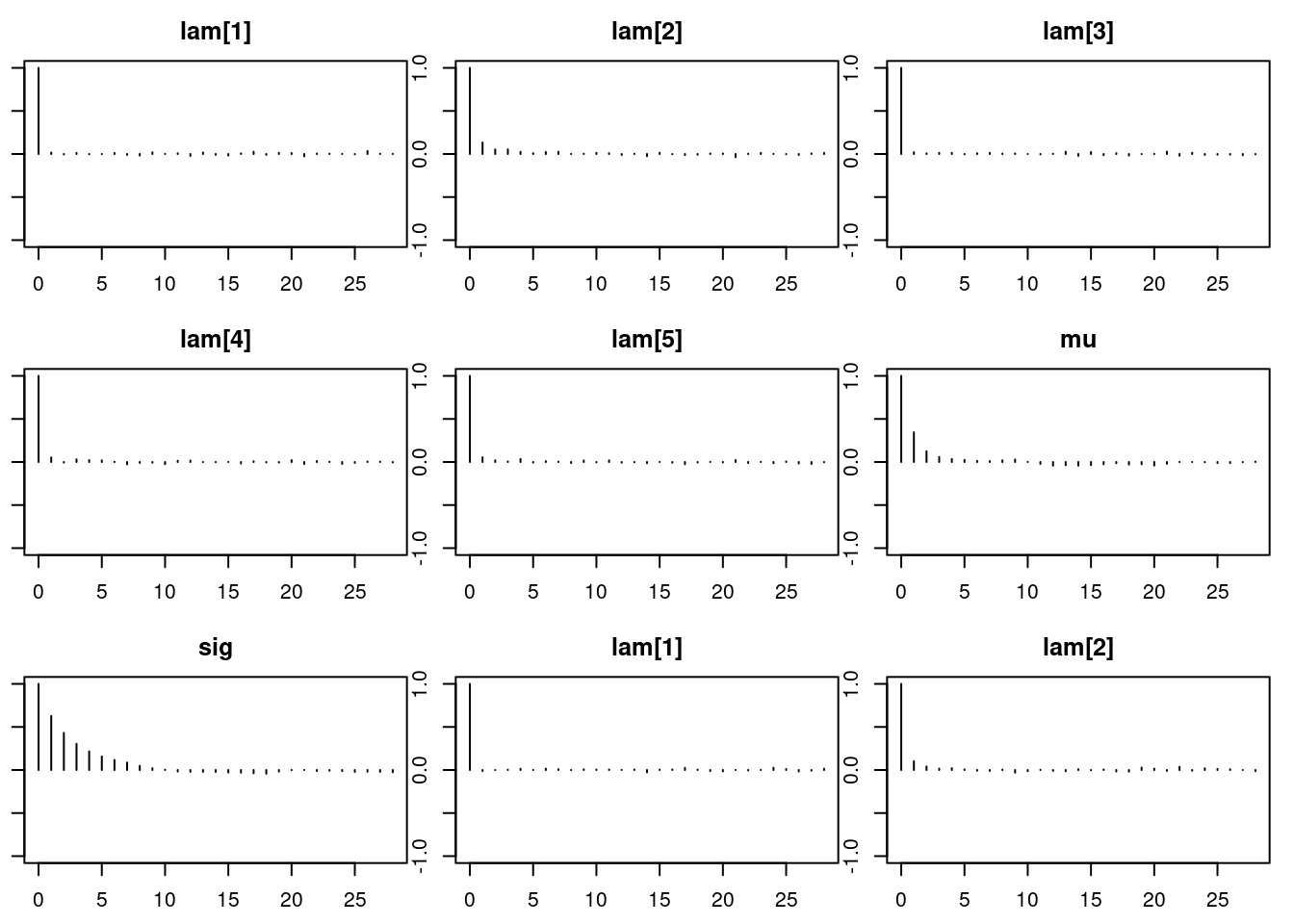

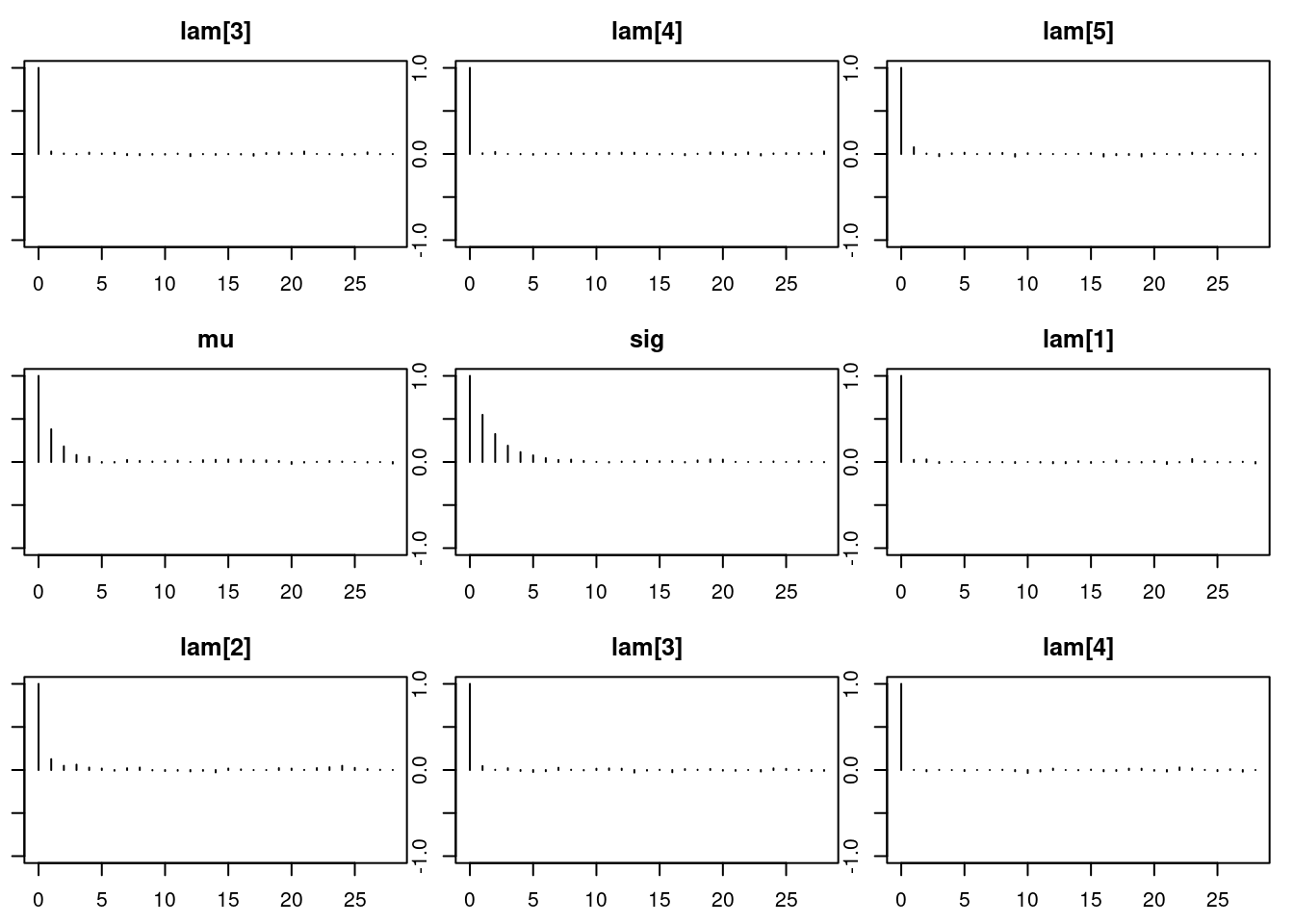

Lag 50 0.006769759 0.004487672par(mar = c(2.5, 1, 2.5, 1))

autocorr.plot(mod_sim)effectiveSize(mod_sim) lam[1] lam[2] lam[3] lam[4] lam[5] mu sig

14574.988 11068.555 14136.525 14340.022 12893.218 6633.699 3636.220 ## compute DIC

dic = dic.samples(mod, n.iter=1e3)50.5.1 Model checking

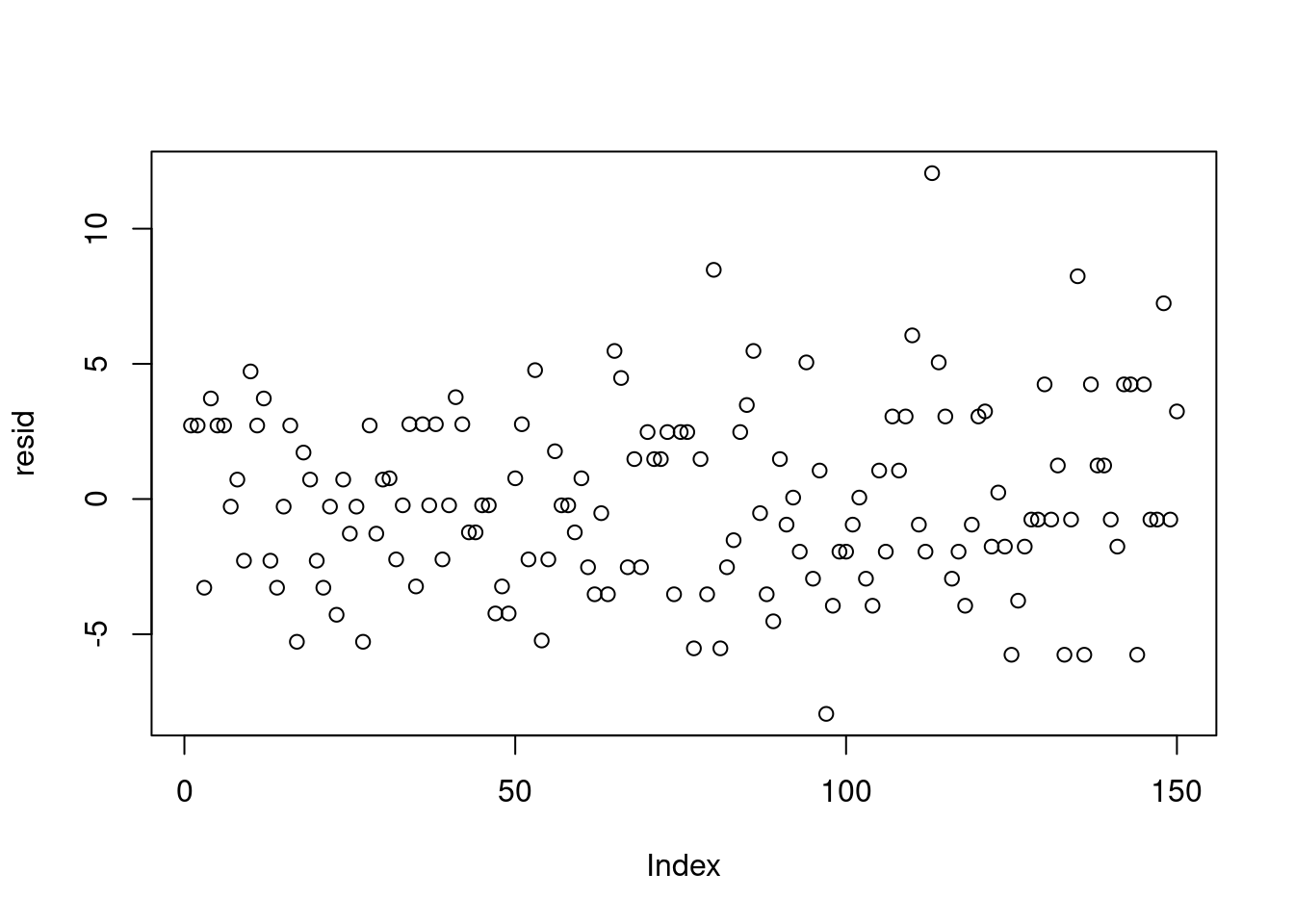

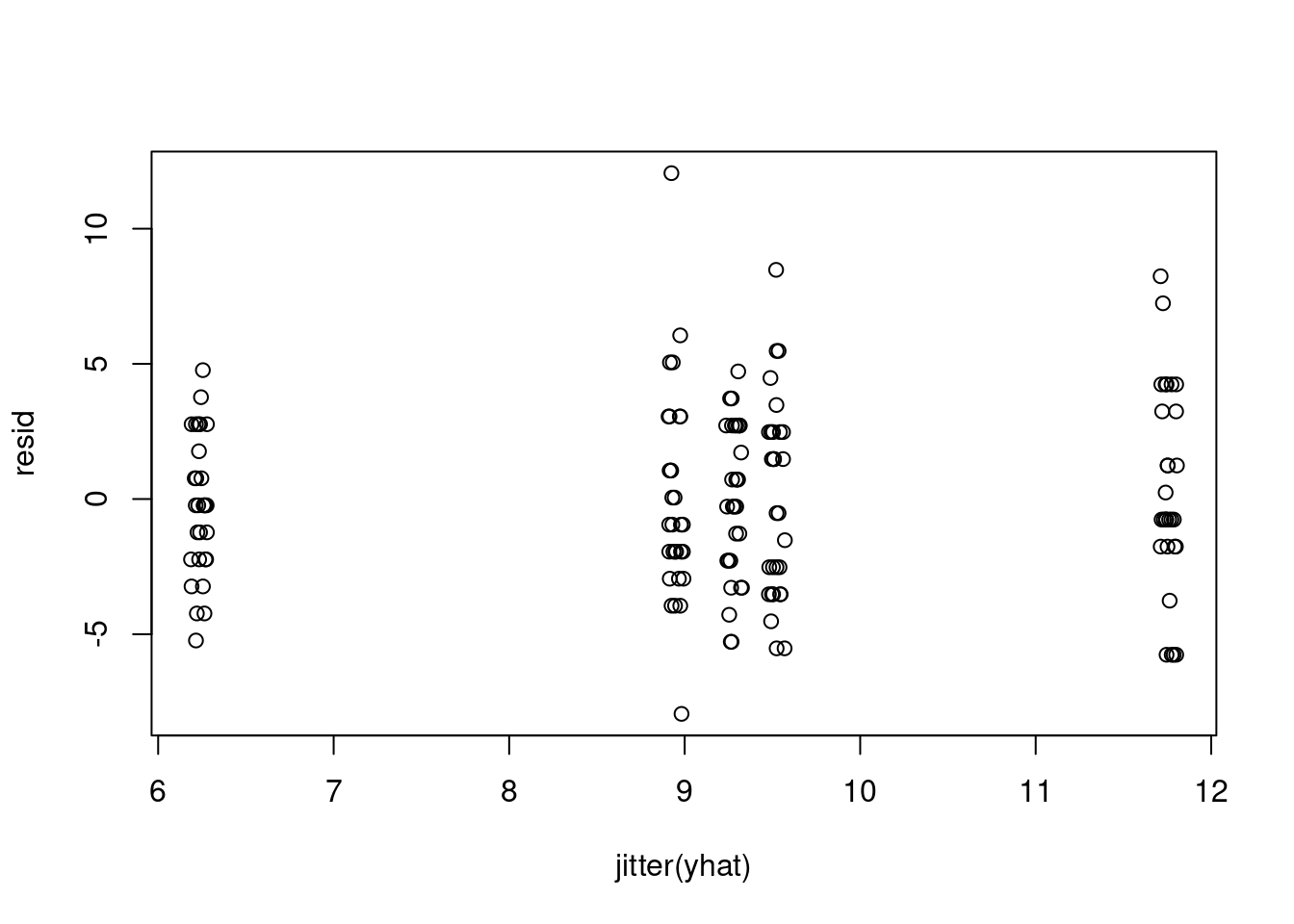

After assessing convergence, we can check the fit via residuals. With a hierarchical model, there are now two levels of residuals: the observation level and the location mean level. To simplify, we’ll look at the residuals associated with the posterior means of the parameters.

First, we have observation residuals, based on the estimates of location means.

## observation level residuals

(pm_params = colMeans(mod_csim)) lam[1] lam[2] lam[3] lam[4] lam[5] mu sig

9.284198 6.226422 9.520563 8.949620 11.752824 9.096351 2.082016 yhat = rep(pm_params[1:5], each=30)

resid = dat$chips - yhat

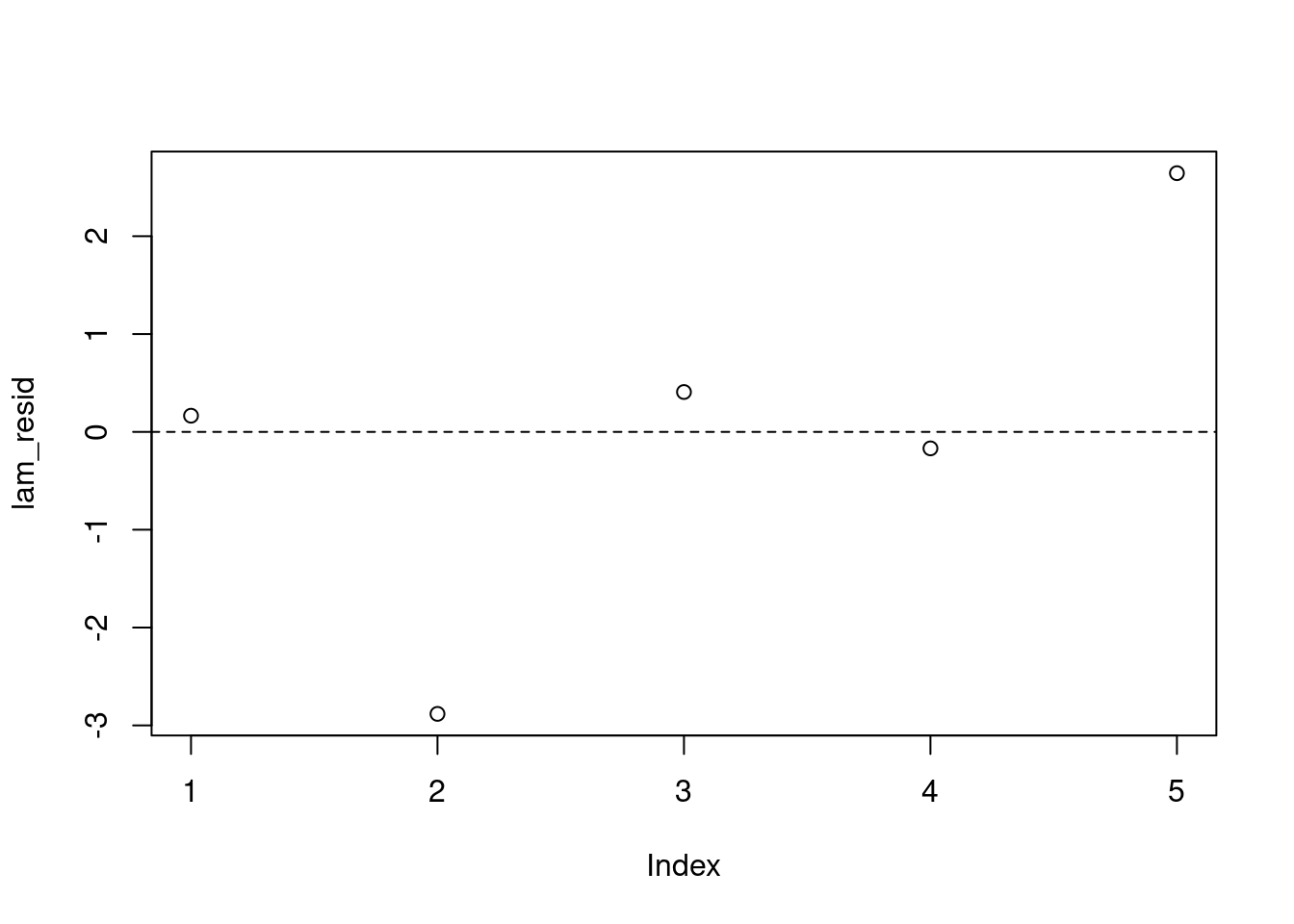

plot(resid)plot(jitter(yhat), resid)var(resid[yhat<7])[1] 6.447126var(resid[yhat>11])[1] 13.72414Also, we can look at how the location means differ from the overall mean \mu.

## location level residuals

lam_resid = pm_params[1:5] - pm_params["mu"]

plot(lam_resid)

abline(h=0, lty=2)We don’t see any obvious violations of our model assumptions.

50.5.2 Results

summary(mod_sim)

Iterations = 2001:7000

Thinning interval = 1

Number of chains = 3

Sample size per chain = 5000

1. Empirical mean and standard deviation for each variable,

plus standard error of the mean:

Mean SD Naive SE Time-series SE

lam[1] 9.284 0.5359 0.004375 0.004443

lam[2] 6.226 0.4647 0.003794 0.004416

lam[3] 9.521 0.5441 0.004443 0.004577

lam[4] 8.950 0.5268 0.004301 0.004400

lam[5] 11.753 0.6219 0.005078 0.005485

mu 9.096 0.9781 0.007986 0.012009

sig 2.082 0.7019 0.005731 0.011998

2. Quantiles for each variable:

2.5% 25% 50% 75% 97.5%

lam[1] 8.260 8.921 9.275 9.642 10.358

lam[2] 5.349 5.906 6.214 6.533 7.177

lam[3] 8.471 9.148 9.510 9.880 10.619

lam[4] 7.959 8.592 8.942 9.304 9.994

lam[5] 10.562 11.328 11.745 12.165 13.010

mu 7.223 8.478 9.068 9.682 11.154

sig 1.089 1.584 1.952 2.445 3.79550.6 Posterior predictive simulation

Just as we did with the prior distribution, we can use these posterior samples to get Monte Carlo estimates that interest us from the posterior predictive distribution.

For example, we can use draws from the posterior distribution of \mu and \sigma to simulate the posterior predictive distribution of the mean for a new location.

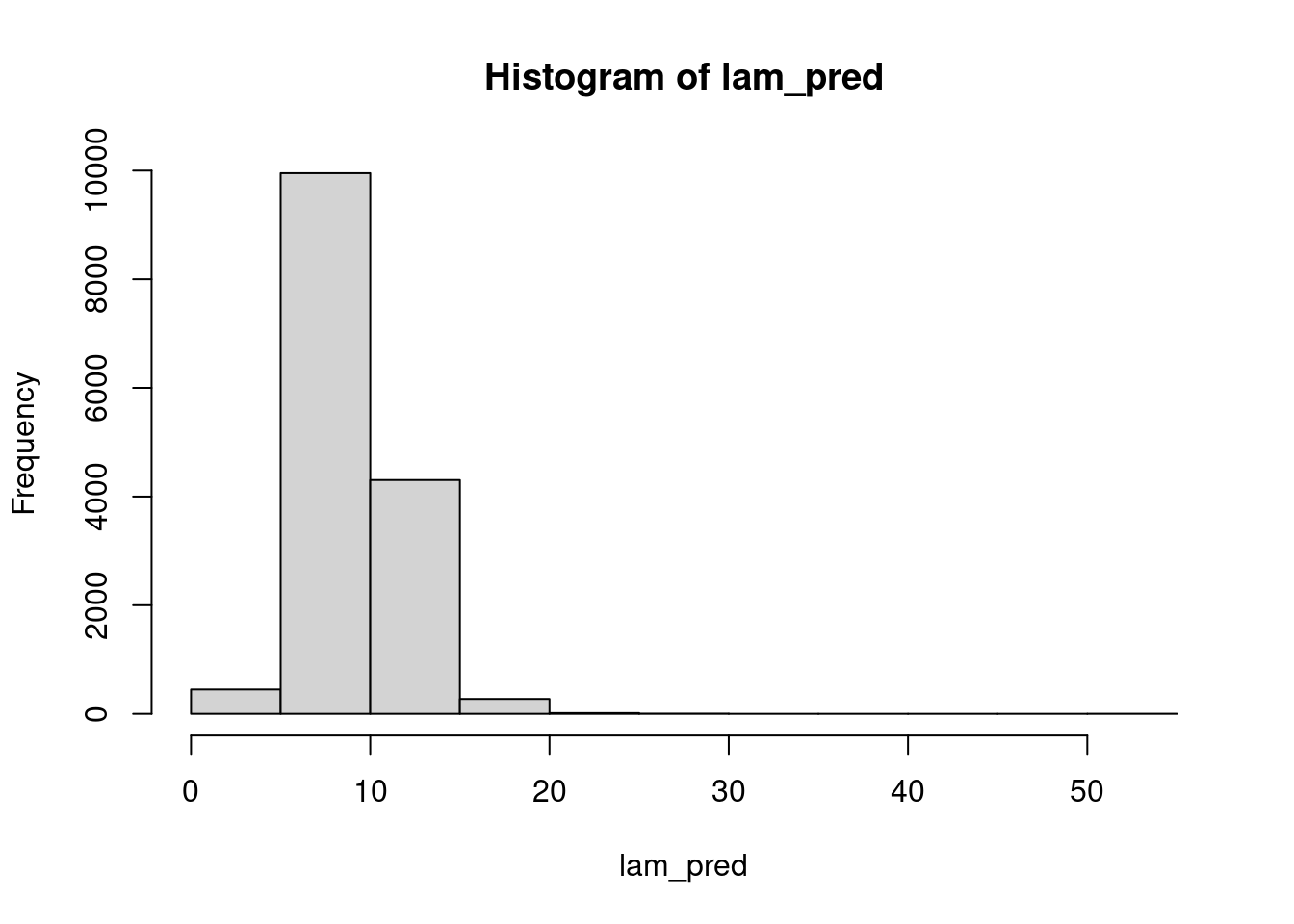

(n_sim = nrow(mod_csim))[1] 15000lam_pred = rgamma(n=n_sim, shape=mod_csim[,"mu"]^2/mod_csim[,"sig"]^2,

rate=mod_csim[,"mu"]/mod_csim[,"sig"]^2)

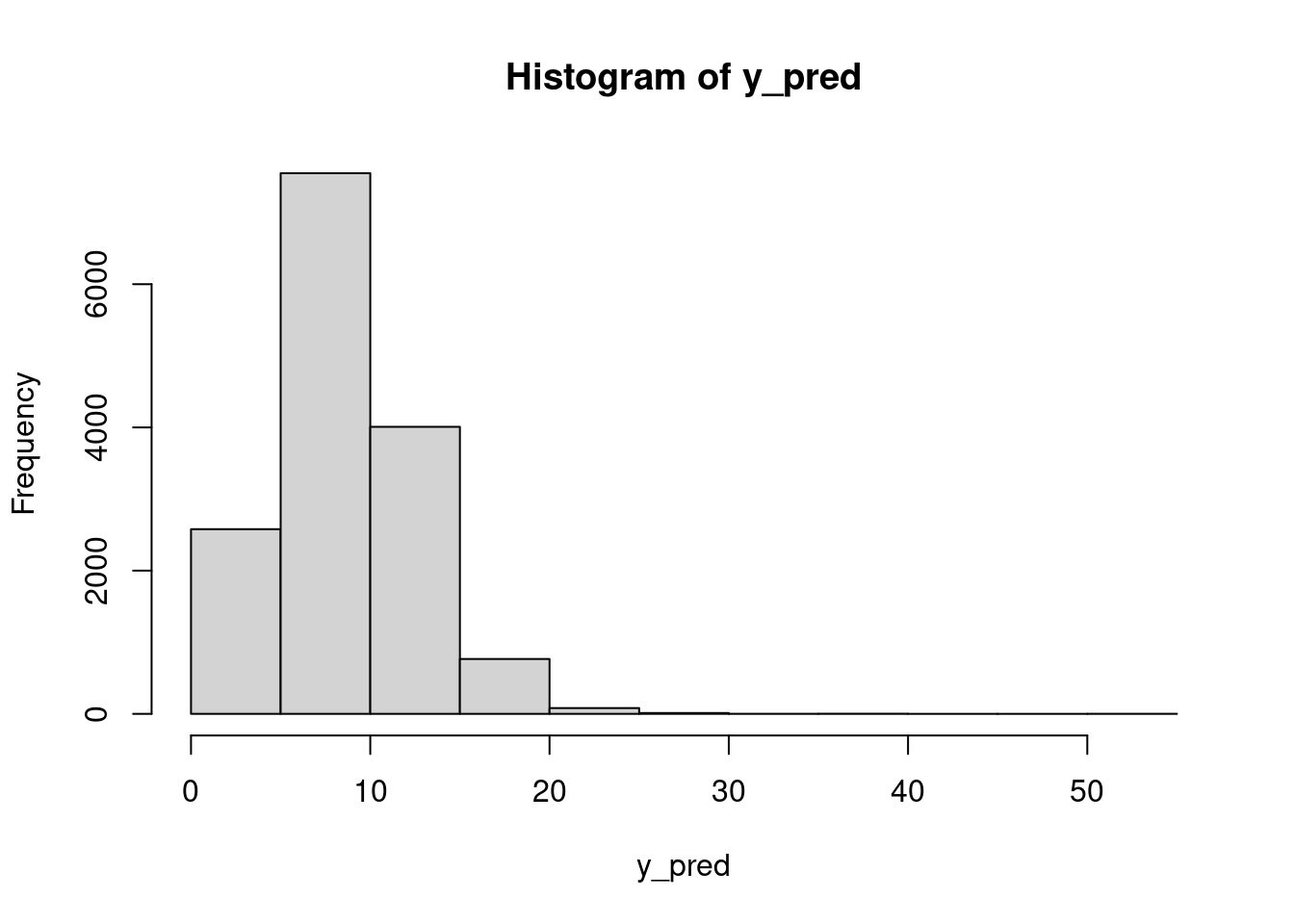

hist(lam_pred)mean(lam_pred > 15)[1] 0.01793333Using these \lambda draws, we can go to the observation level and simulate the number of chips per cookie, which takes into account the uncertainty in \lambda:

mean(y_pred > 15)[1] 0.0576hist(dat$chips)Finally, we could answer questions like: what is the posterior probability that the next cookie produced in Location 1 will have fewer than seven chips?

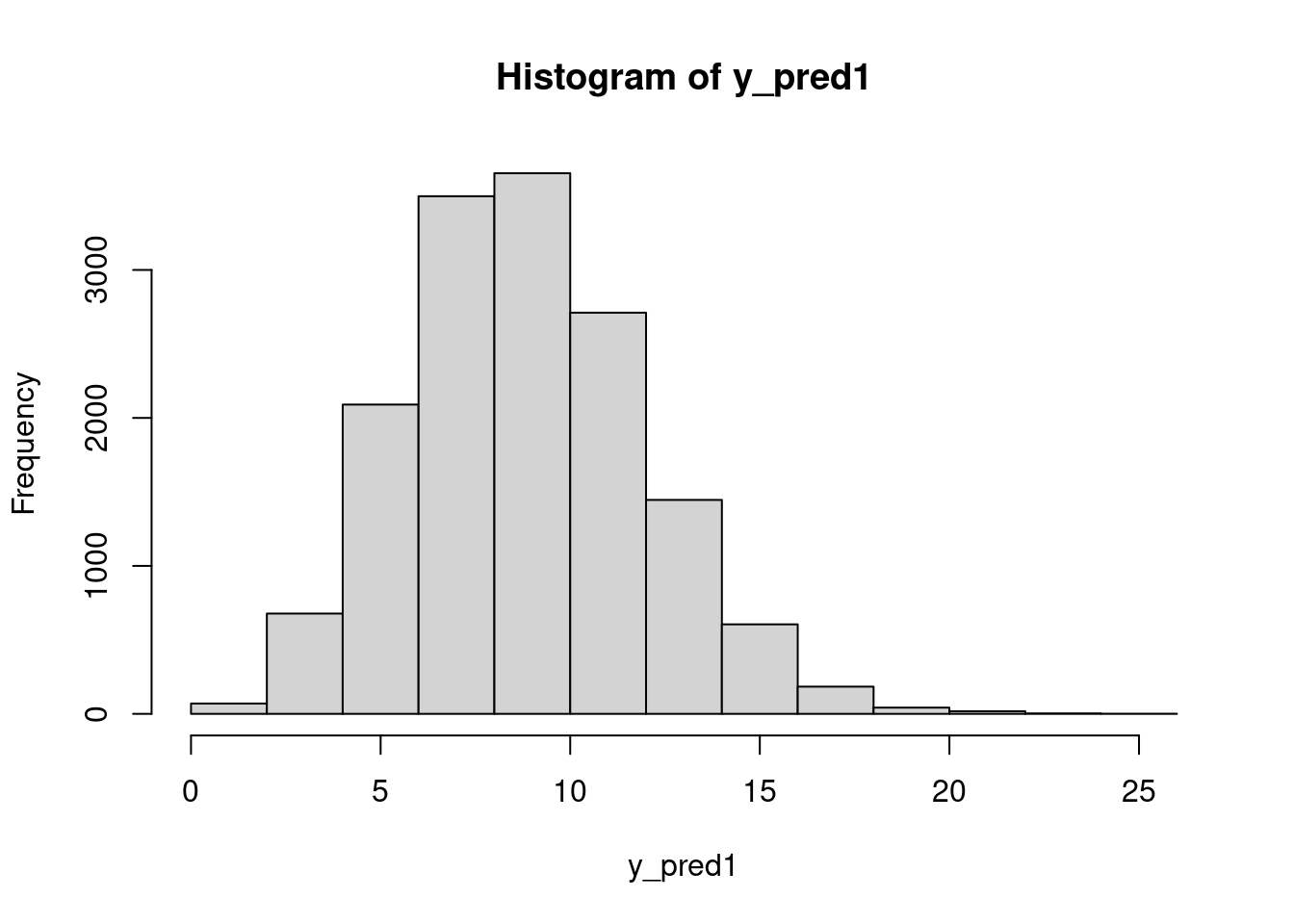

y_pred1 = rpois(n=n_sim, lambda=mod_csim[,"lam[1]"])

hist(y_pred1)mean(y_pred1 < 7)[1] 0.190333350.7 Random intercept linear model

We can extend the linear model for the Leinhardt data on infant mortality by incorporating the region variable. We’ll do this with a hierarchical model, where each region has its own intercept.

library("car")Loading required package: carDatadata("Leinhardt")

?Leinhardt

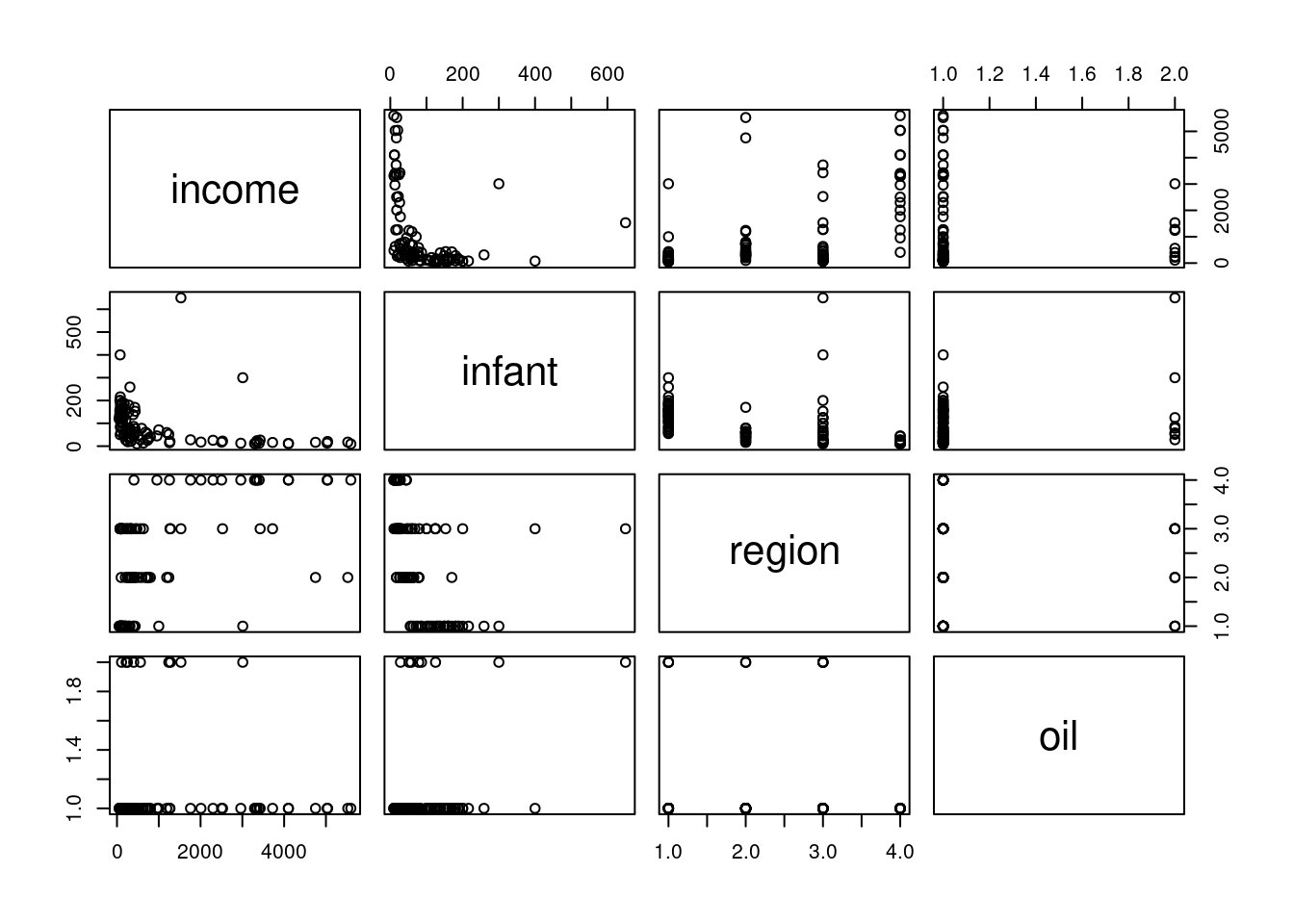

str(Leinhardt)'data.frame': 105 obs. of 4 variables:

$ income: int 3426 3350 3346 4751 5029 3312 3403 5040 2009 2298 ...

$ infant: num 26.7 23.7 17 16.8 13.5 10.1 12.9 20.4 17.8 25.7 ...

$ region: Factor w/ 4 levels "Africa","Americas",..: 3 4 4 2 4 4 4 4 4 4 ...

$ oil : Factor w/ 2 levels "no","yes": 1 1 1 1 1 1 1 1 1 1 ...head(Leinhardt) income infant region oil

Australia 3426 26.7 Asia no

Austria 3350 23.7 Europe no

Belgium 3346 17.0 Europe no

Canada 4751 16.8 Americas no

Denmark 5029 13.5 Europe no

Finland 3312 10.1 Europe noPreviously, we worked with infant mortality and income on the logarithmic scale. Recall also that we had to remove some missing data.

dat = na.omit(Leinhardt)

dat$logincome = log(dat$income)

dat$loginfant = log(dat$infant)

str(dat)'data.frame': 101 obs. of 6 variables:

$ income : int 3426 3350 3346 4751 5029 3312 3403 5040 2009 2298 ...

$ infant : num 26.7 23.7 17 16.8 13.5 10.1 12.9 20.4 17.8 25.7 ...

$ region : Factor w/ 4 levels "Africa","Americas",..: 3 4 4 2 4 4 4 4 4 4 ...

$ oil : Factor w/ 2 levels "no","yes": 1 1 1 1 1 1 1 1 1 1 ...

$ logincome: num 8.14 8.12 8.12 8.47 8.52 ...

$ loginfant: num 3.28 3.17 2.83 2.82 2.6 ...

- attr(*, "na.action")= 'omit' Named int [1:4] 24 83 86 91

..- attr(*, "names")= chr [1:4] "Iran" "Haiti" "Laos" "Nepal"Now we can fit the proposed model:

library("rjags")mod_string = " model {

for (i in 1:length(y)) {

y[i] ~ dnorm(mu[i], prec)

mu[i] = a[region[i]] + b[1]*log_income[i] + b[2]*is_oil[i]

}

for (j in 1:max(region)) {

a[j] ~ dnorm(a0, prec_a)

}

a0 ~ dnorm(0.0, 1.0/1.0e6)

prec_a ~ dgamma(1/2.0, 1*10.0/2.0)

tau = sqrt( 1.0 / prec_a )

for (j in 1:2) {

b[j] ~ dnorm(0.0, 1.0/1.0e6)

}

prec ~ dgamma(5/2.0, 5*10.0/2.0)

sig = sqrt( 1.0 / prec )

} "set.seed(116)

data_jags = list(y=dat$loginfant, log_income=dat$logincome,

is_oil=as.numeric(dat$oil=="yes"), region=as.numeric(dat$region))

data_jags$is_oil [1] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0

[38] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

[75] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0table(data_jags$is_oil, data_jags$region)

1 2 3 4

0 31 20 24 18

1 3 2 3 0params = c("a0", "a", "b", "sig", "tau")

mod = jags.model(textConnection(mod_string), data=data_jags, n.chains=3)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 101

Unobserved stochastic nodes: 9

Total graph size: 622

Initializing modelupdate(mod, 1e3) # burn-in

mod_sim = coda.samples(model=mod,

variable.names=params,

n.iter=5e3)

mod_csim = as.mcmc(do.call(rbind, mod_sim)) # combine multiple chains

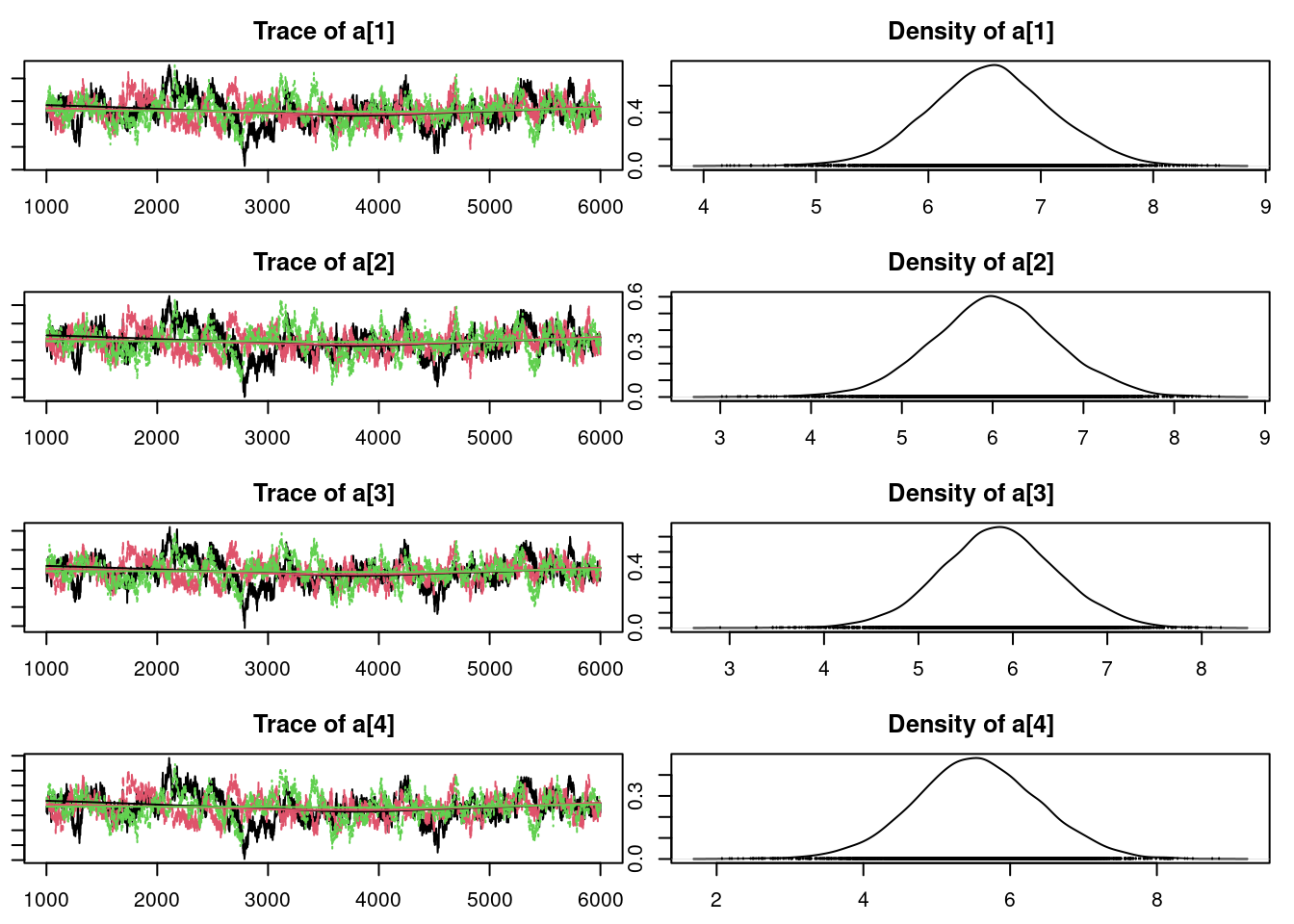

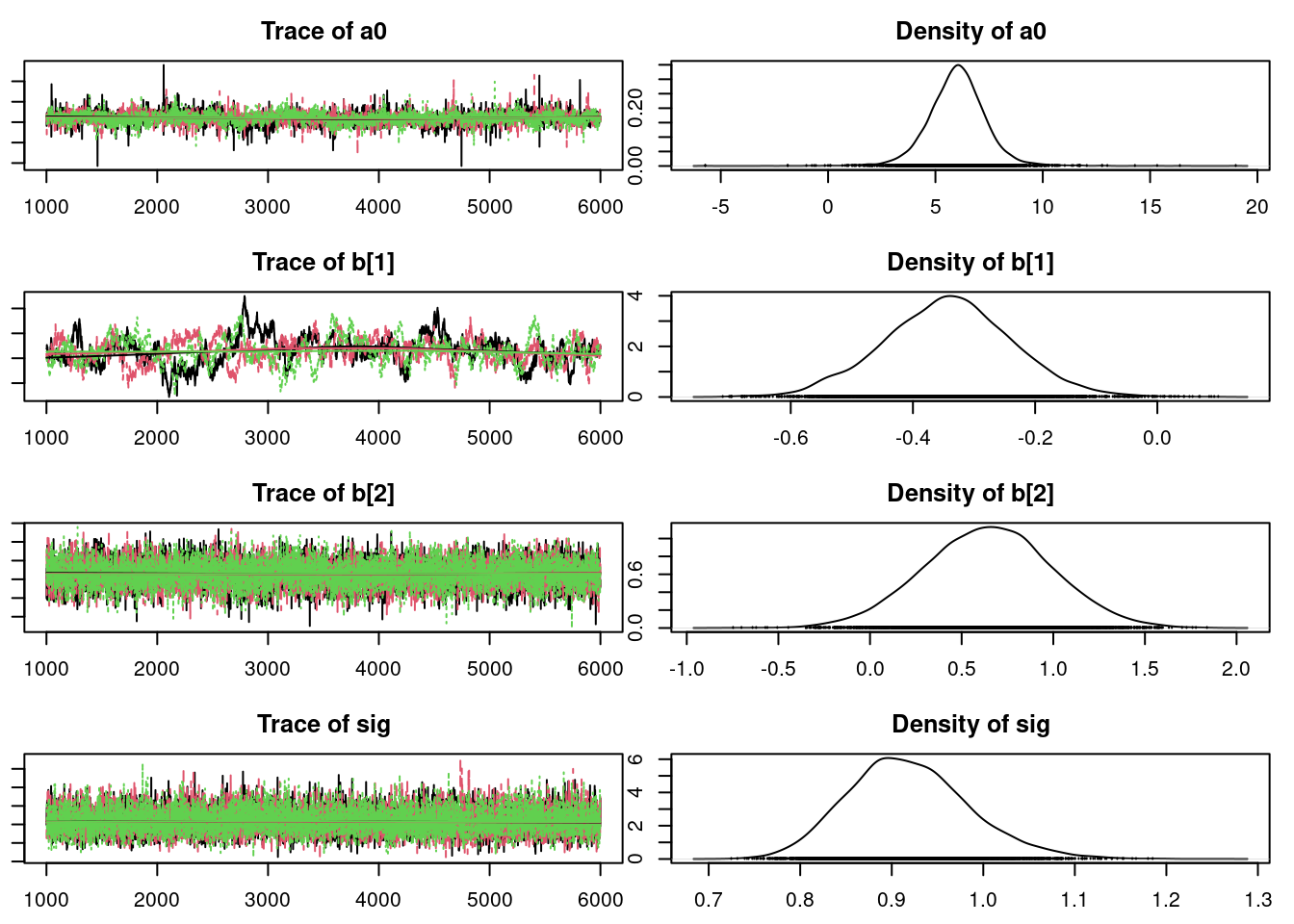

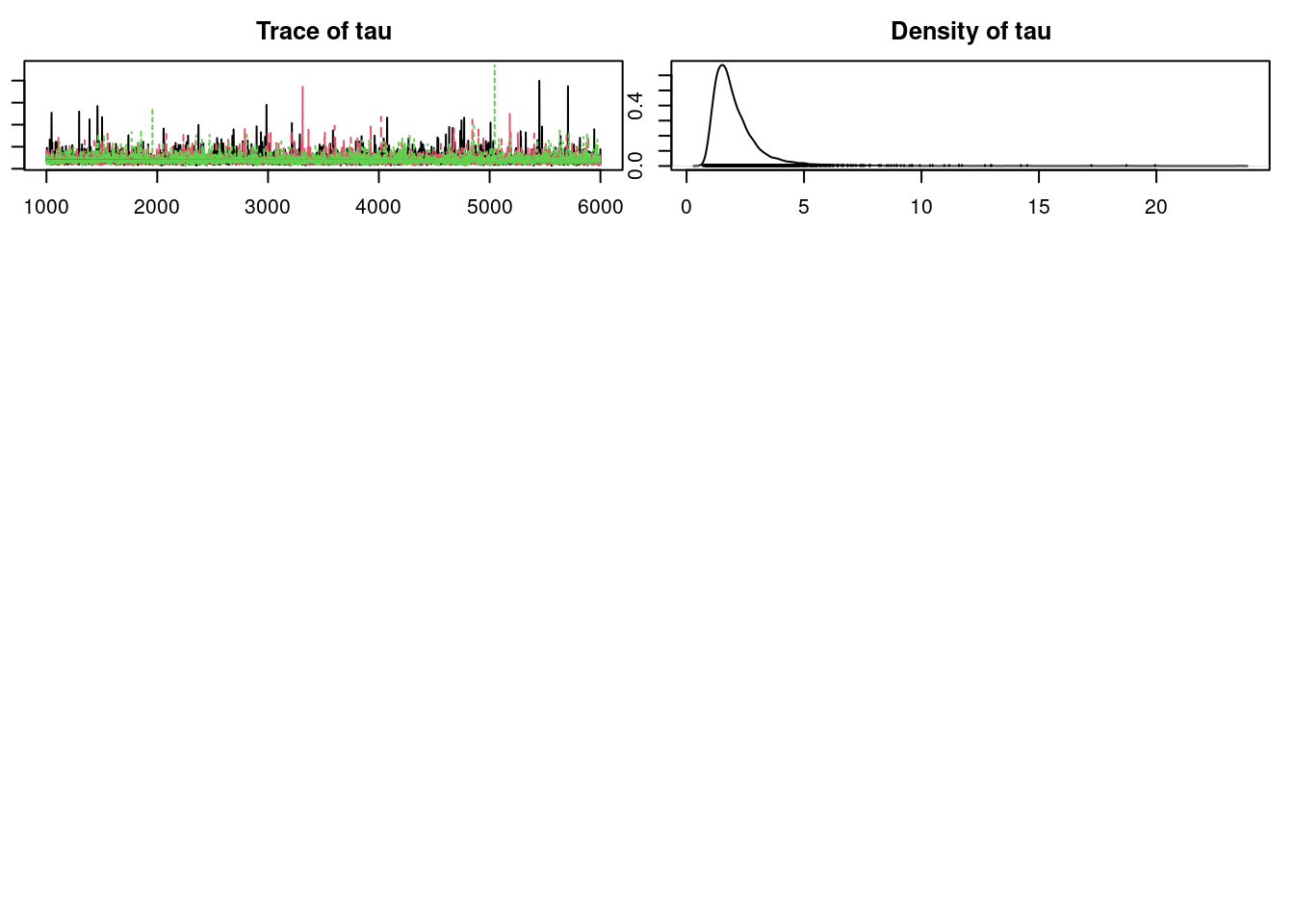

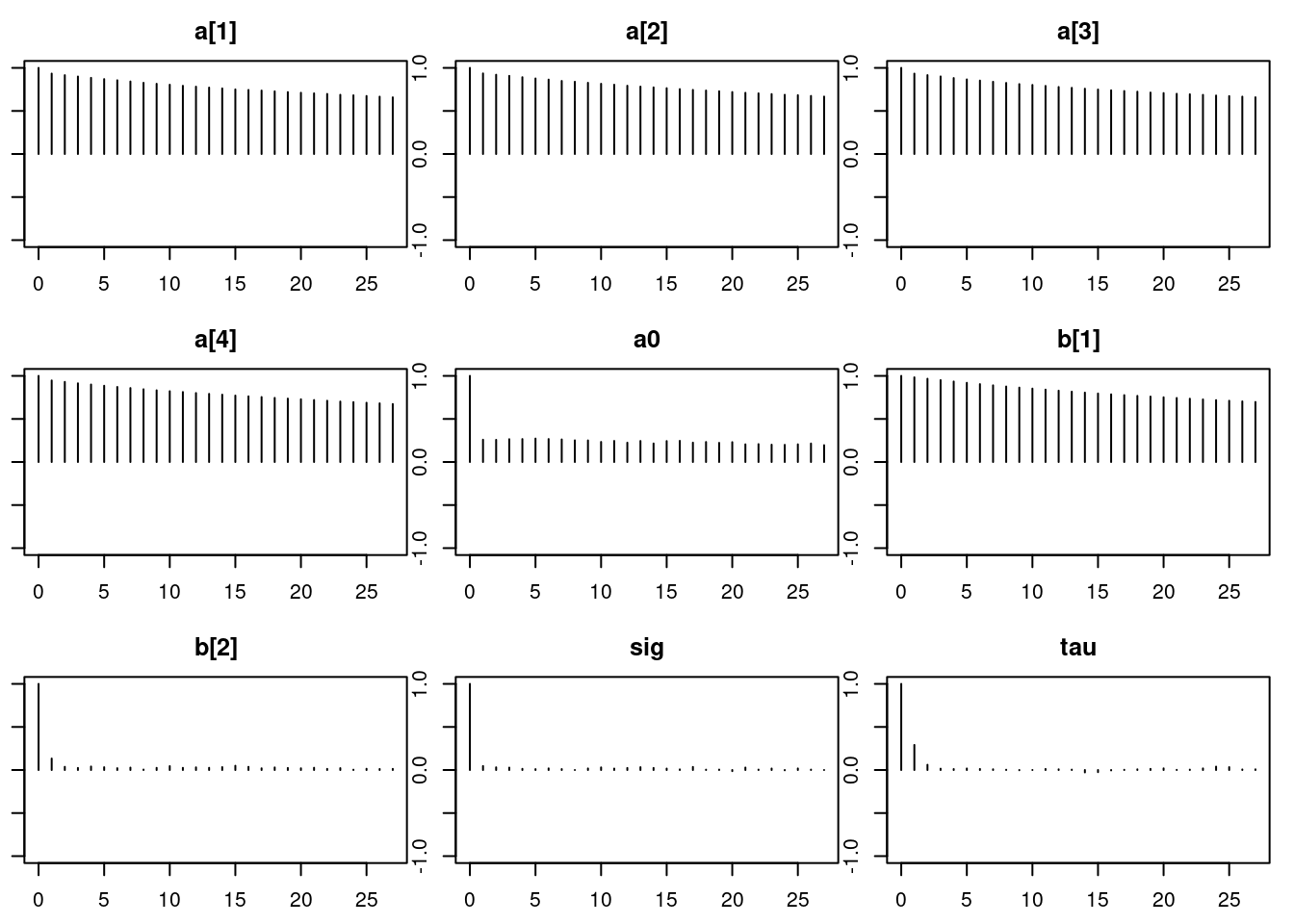

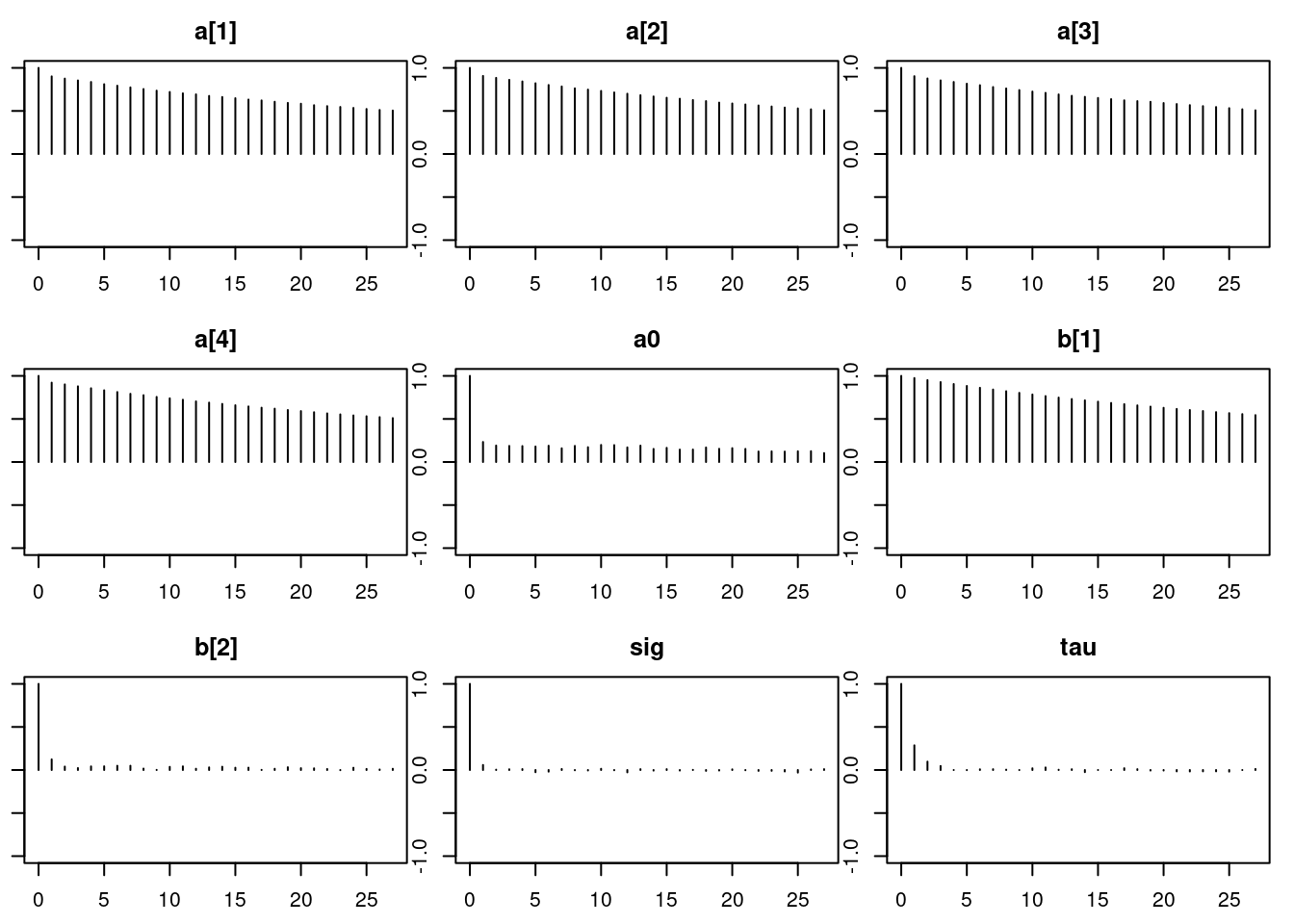

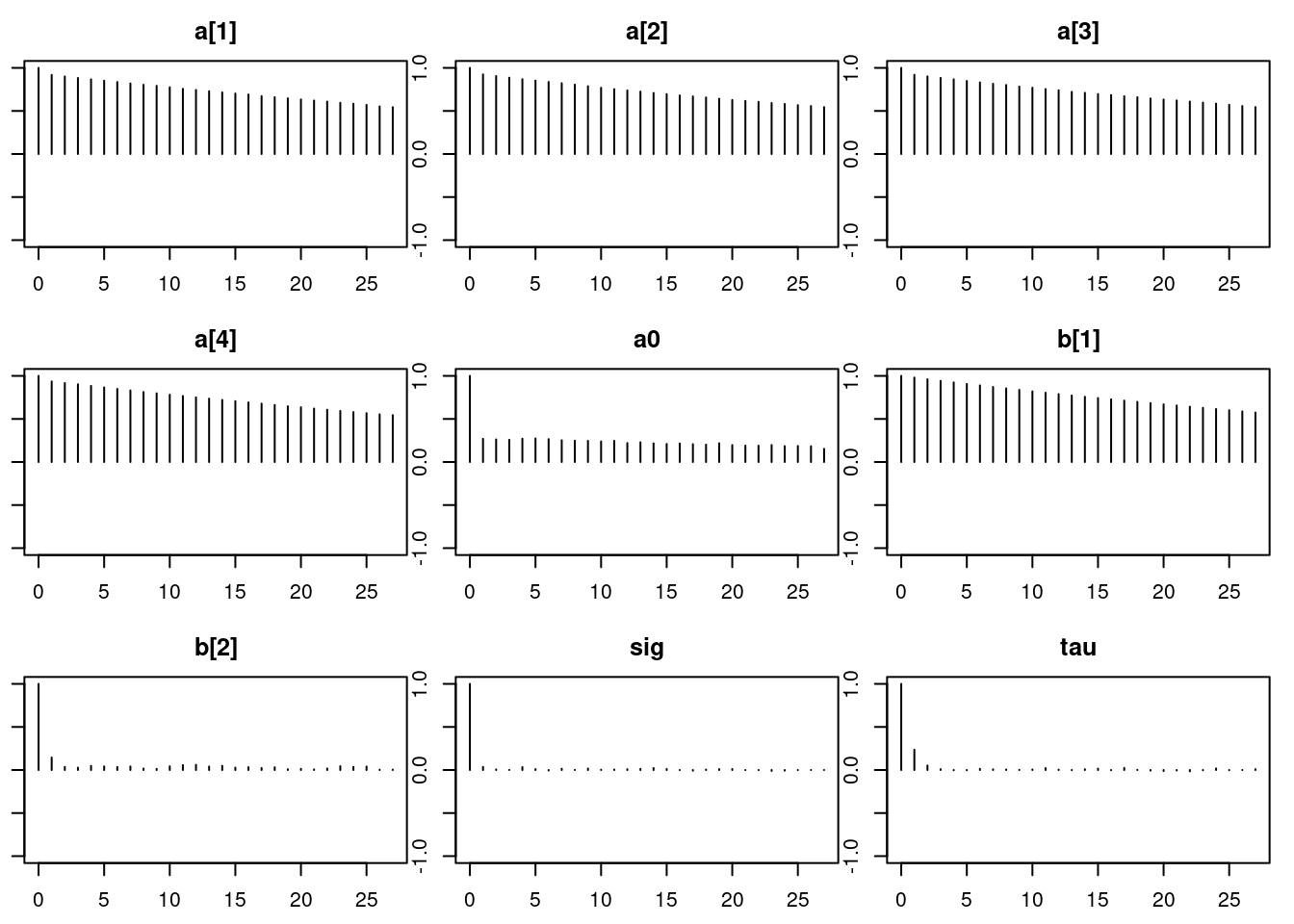

## convergence diagnostics

par(mar = c(2.5, 1, 2.5, 1))

plot(mod_sim)gelman.diag(mod_sim)Potential scale reduction factors:

Point est. Upper C.I.

a[1] 1.01 1.02

a[2] 1.01 1.02

a[3] 1.01 1.02

a[4] 1.01 1.02

a0 1.00 1.00

b[1] 1.01 1.02

b[2] 1.00 1.00

sig 1.00 1.00

tau 1.00 1.00

Multivariate psrf

1.01autocorr.diag(mod_sim) a[1] a[2] a[3] a[4] a0 b[1] b[2]

Lag 0 1.0000000 1.0000000 1.0000000 1.0000000 1.00000000 1.0000000 1.00000000

Lag 1 0.9145533 0.9190490 0.9153497 0.9336272 0.27273652 0.9788790 0.14201127

Lag 5 0.8402993 0.8430299 0.8392982 0.8587545 0.22236719 0.8998488 0.04110112

Lag 10 0.7633785 0.7642915 0.7641442 0.7802393 0.20989426 0.8164018 0.03481647

Lag 50 0.3558835 0.3559423 0.3514290 0.3612697 0.09970275 0.3765800 0.01153484

sig tau

Lag 0 1.0000000000 1.000000000

Lag 1 0.0482208397 0.275104913

Lag 5 -0.0020125296 0.001787598

Lag 10 0.0060611108 0.004824164

Lag 50 0.0006201846 -0.010445828autocorr.plot(mod_sim)effectiveSize(mod_sim) a[1] a[2] a[3] a[4] a0 b[1] b[2]

168.0772 165.9827 155.6973 159.2430 653.9082 160.0758 4728.8159

sig tau

13553.1569 8526.1276 50.7.1 Results

Convergence looks okay, so let’s compare this with the old model from Lesson 7 using DIC:

dic.samples(mod, n.iter=1e3)Mean deviance: 213.9

penalty 7.251

Penalized deviance: 221.2 ### nonhierarchical model: 230.1It appears that this model is an improvement over the non-hierarchical one we fit earlier. Notice that the penalty term, which can be interpreted as the “effective” number of parameters, is less than the actual number of parameters (nine). There are fewer “effective” parameters because they are “sharing” information or “borrowing strength” from each other in the hierarchical structure. If we had skipped the hierarchy and fit one intercept, there would have been four parameters. If we had fit separate, independent intercepts for each region, there would have been seven parameters (which is close to what we ended up with).

Finally, let’s look at the posterior summary.

summary(mod_sim)

Iterations = 1001:6000

Thinning interval = 1

Number of chains = 3

Sample size per chain = 5000

1. Empirical mean and standard deviation for each variable,

plus standard error of the mean:

Mean SD Naive SE Time-series SE

a[1] 6.5436 0.54331 0.0044361 0.0424261

a[2] 5.9951 0.68486 0.0055918 0.0534565

a[3] 5.8349 0.60731 0.0049586 0.0493121

a[4] 5.5193 0.83344 0.0068050 0.0666822

a0 5.9624 1.28070 0.0104569 0.0533723

b[1] -0.3392 0.10326 0.0008431 0.0082143

b[2] 0.6441 0.34958 0.0028543 0.0052422

sig 0.9187 0.06585 0.0005377 0.0005657

tau 2.0300 1.02483 0.0083677 0.0110998

2. Quantiles for each variable:

2.5% 25% 50% 75% 97.5%

a[1] 5.43513 6.1784 6.5707 6.9286 7.5524

a[2] 4.59871 5.5315 6.0264 6.4733 7.2679

a[3] 4.60705 5.4310 5.8557 6.2631 6.9634

a[4] 3.82091 4.9672 5.5514 6.1009 7.0533

a0 3.38995 5.2118 5.9736 6.7325 8.4175

b[1] -0.53016 -0.4111 -0.3438 -0.2702 -0.1273

b[2] -0.04104 0.4076 0.6427 0.8835 1.3183

sig 0.80173 0.8725 0.9147 0.9601 1.0595

tau 0.98202 1.4011 1.7781 2.3296 4.5838In this particular model, the intercepts do not have a real interpretation because they correspond to the mean response for a country that does not produce oil and has USD0 log-income per capita (which is USD1 income per capita). We can interpret a_0 as the overall mean intercept and \tau as the standard deviation of intercepts across regions.

50.7.2 Other models

We have not investigated adding interaction terms, which might be appropriate. We only considered adding hierarchy on the intercepts, but in reality nothing prevents us from doing the same for other terms in the model, such as the coefficients for income and oil. We could try any or all of these alternatives and see how the DIC changes for those models. This, together with other model checking techniques we have discussed could be used to identify your best model that you can use to make inferences and predictions.

50.8 Mixture models

Histograms of data often reveal that they do not follow any standard probability distribution. Sometimes we have explanatory variables (or covariates) to account for the different values, and normally distributed errors are adequate, as in normal regression. However, if we only have the data values themselves and no covariates, we might have to fit a non-standard distribution to the data. One way to do this is by mixing standard distributions.

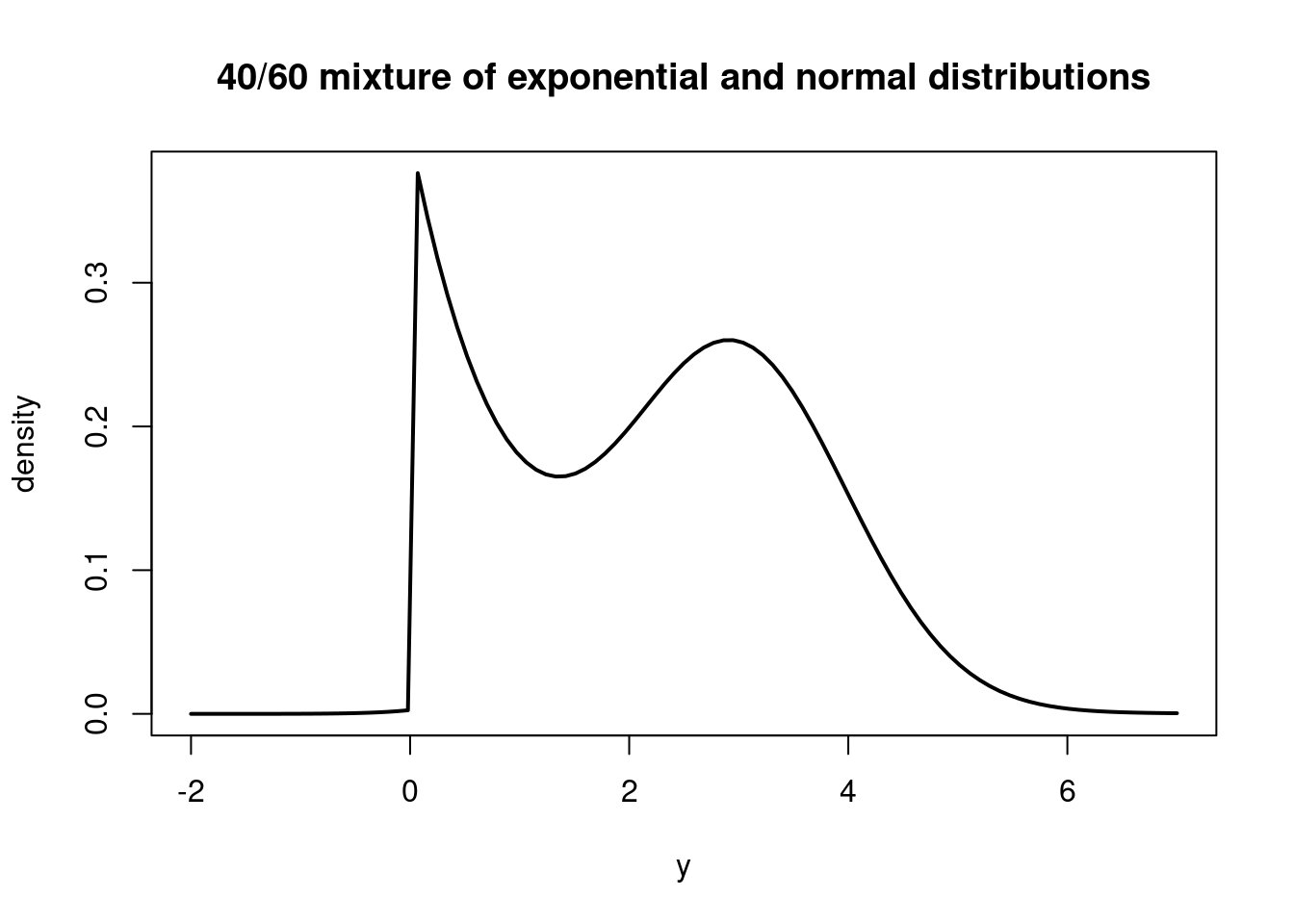

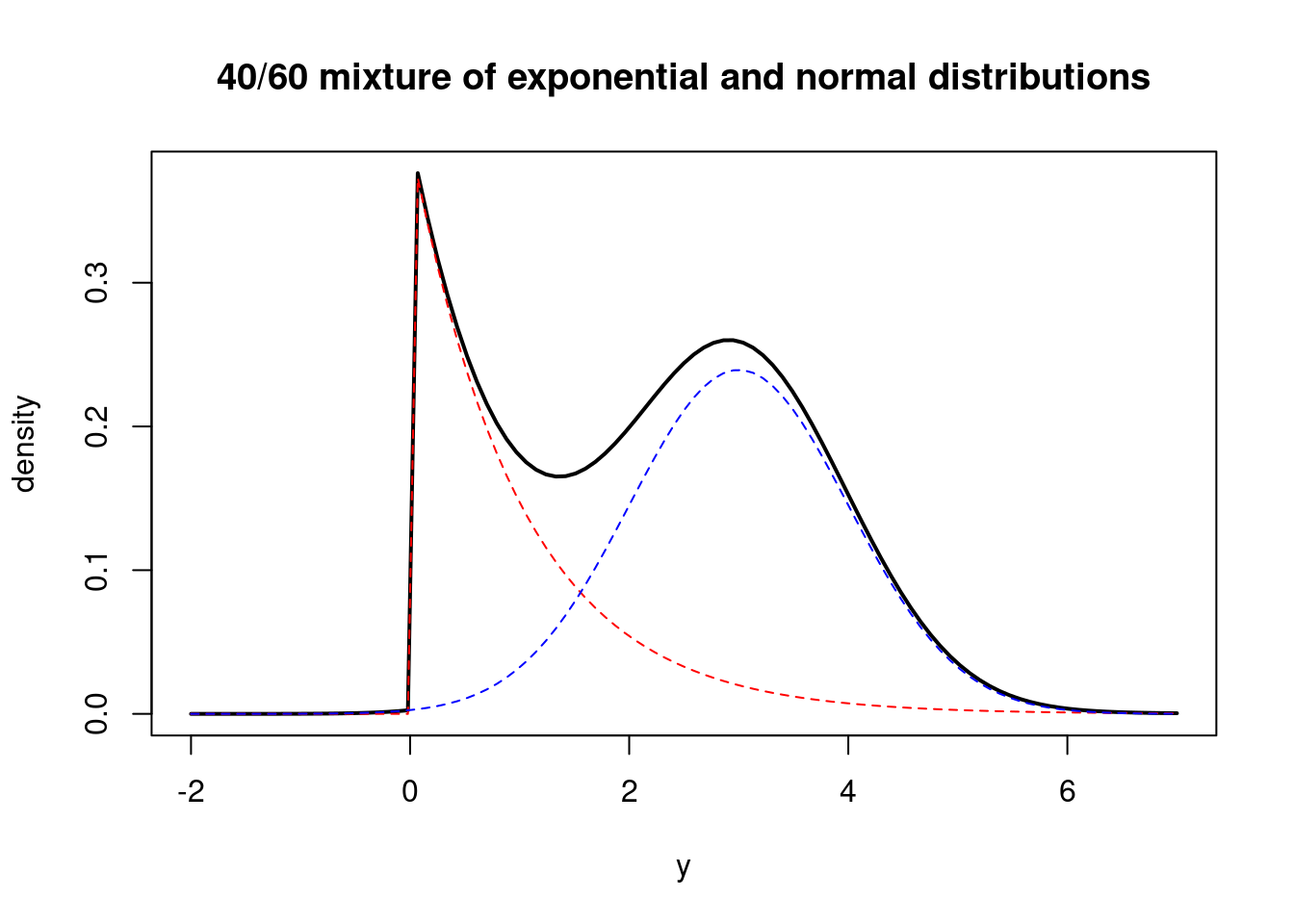

Mixture distributions are just a weighted combination of probability distributions. For example, we could take an exponential distribution with mean 1 and normal distribution with mean 3 and variance 1 (although typically the two mixture components would have the same support; here the exponential component has to be non-negative and the normal component can be positive or negative). Suppose we give them weights: 0.4 for the exponential distribution and 0.6 for the normal distribution. We could write the PDF for this distribution as

\mathbb{P}r(y) = 0.4 \cdot \exp(-y) \cdot \mathbb{I}_{(y \ge 0)} + 0.6 \cdot \frac{1}{\sqrt{2 \pi}} \exp\left(- \frac{1}{2} (y - 3)^2\right) \tag{50.3}

where \mathbb{I}_{(y \ge 0)} is the indicator function that is 1 if y \ge 0 and 0 otherwise. This PDF is a mixture of the exponential and normal distributions, with the weights indicating the relative contribution of each distribution to the overall PDF.

The PDF of this mixture distribution would look like this:

curve( 0.4*dexp(x, 1.0) + 0.6*dnorm(x, 3.0, 1.0), from=-2.0, to=7.0, ylab="density", xlab="y", main="40/60 mixture of exponential and normal distributions", lwd=2)We could think of these two distributions as governing two distinct populations, one following the exponential distribution and the other following the normal distribution.

Let’s draw the weighted PDFs for each population.

curve( 0.4*dexp(x, 1.0) + 0.6*dnorm(x, 3.0, 1.0), from=-2.0, to=7.0, ylab="density", xlab="y", main="40/60 mixture of exponential and normal distributions", lwd=2)

curve( 0.4*dexp(x, 1.0), from=-2.0, to=7.0, col="red", lty=2, add=TRUE)

curve( 0.6*dnorm(x, 3.0, 1.0), from=-2.0, to=7.0, col="blue", lty=2, add=TRUE)The general form for a discrete mixture of distributions is as follows:

\mathbb{P}r(y) = \sum_{j=1}^J \omega_j \cdot f_j (y) \tag{50.4}

where the \omega’s are positive weights that add up to 1 (they are probabilities) and each of the J f_j(y) functions is a PDF for some distribution. In the example above, the weights were 0.4 and 0.6, f_1 was an exponential PDF and f_2 was a normal PDF.

One way to simulate from a mixture distribution is with a hierarchical model. We first simulate an indicator for which “population” the next observation will come from using the weights \omega. Let’s call this z_i. In the example above, z_i would take the value 1 (indicating the exponential distribution) with probability 0.4 and 2 (indicating the normal distribution) with probability 0.6. Next, simulate the observation y_i from the distribution corresponding to z_i.

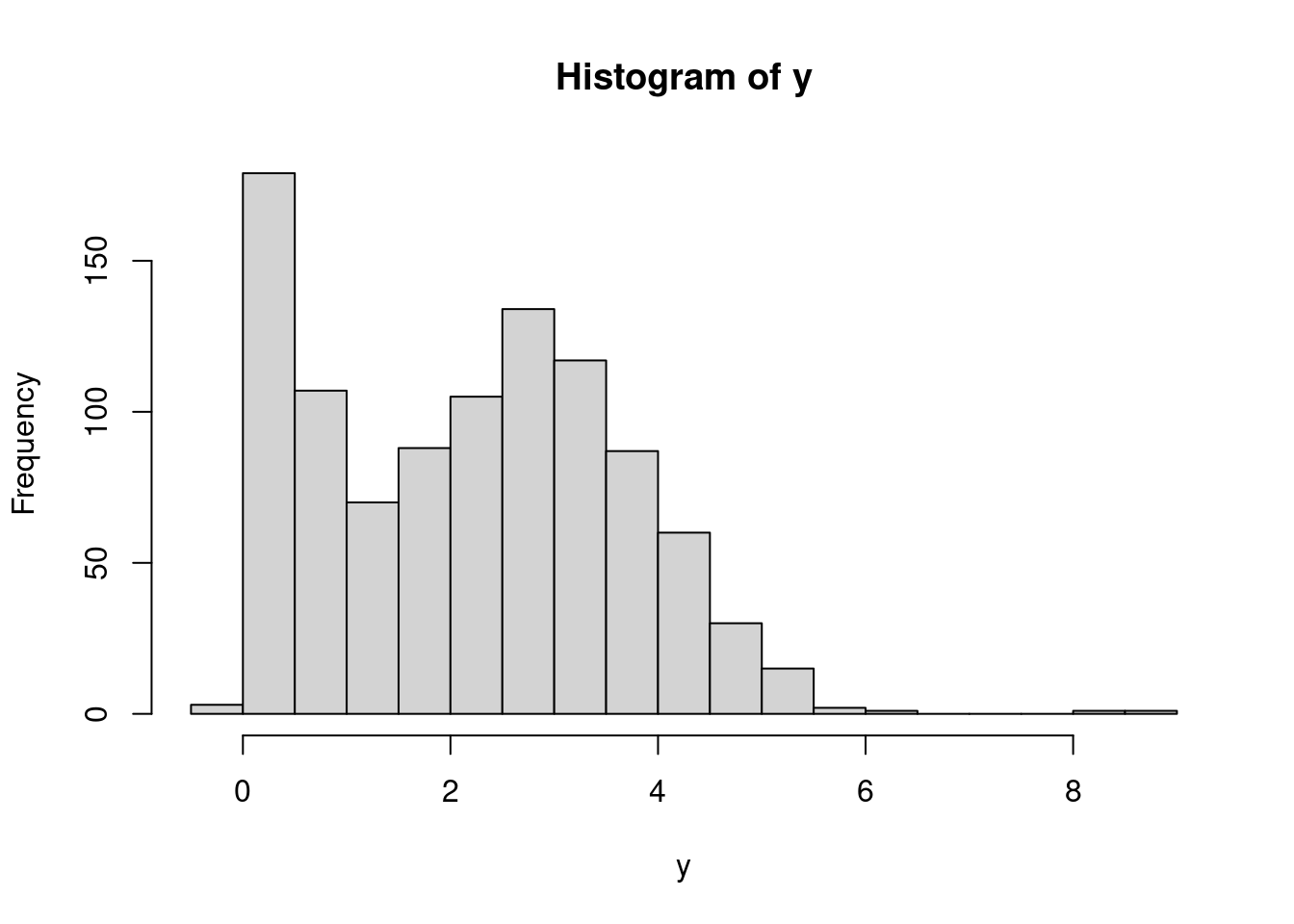

Let’s simulate from our example mixture distribution.

set.seed(117)

n = 1000

z = numeric(n)

y = numeric(n)

for (i in 1:n) {

z[i] = sample.int(2, 1, prob=c(0.4, 0.6)) # returns a 1 with probability 0.4, or a 2 with probability 0.6

if (z[i] == 1) {

y[i] = rexp(1, rate=1.0)

} else if (z[i] == 2) {

y[i] = rnorm(1, mean=3.0, sd=1.0)

}

}

hist(y, breaks=30)If we keep only the y values and throw away the z values, we have a sample from the mixture model above. To see that they are equivalent, we can marginalize the joint distribution of y and z:

\begin{aligned} \mathbb{P}r(y) &= \sum_{j=1}^2 \mathbb{P}r(y, z=j) \\ &= \sum_{j=1}^2 \mathbb{P}r(z=j) \cdot \mathbb{P}r(y \mid z=j) \\ &= \sum_{j=1}^2 \omega_j \cdot f_j(y) \end{aligned} \tag{50.5}

50.8.1 Bayesian inference for mixture models

When we fit a mixture model to data, we usually only have the y values and do not know which “population” they belong to. Because the z variables are unobserved, they are called latent variables. We can treat them as parameters in a hierarchical model and perform Bayesian inference for them. The hierarchial model might look like this:

\begin{aligned} y_i \mid z_i, \theta & \overset{\text{ind}}{\sim} f_{z_i}(y \mid \theta) \, , \quad i = 1, \ldots, n \\ \text{Pr}(z_i = j \mid \omega) &= \omega_j \, , \quad j=1, \ldots, J \\ \omega &\sim \mathbb{P}r(\omega) \\ \theta &\sim \mathbb{P}r(\theta) \end{aligned} \tag{50.6}

where we might use a Dirichlet prior (see the review of distributions in the supplementary material) for the weight vector \omega and conjugate priors for the population-specific parameters in \theta. With this model, we could obtain posterior distributions for z (population membership of the observations), \omega (population weights), and \theta (population-specific parameters in f_j). Next, we will look at how to fit a mixture of two normal distributions in JAGS.

50.9 Example with JAGS

50.9.1 Data

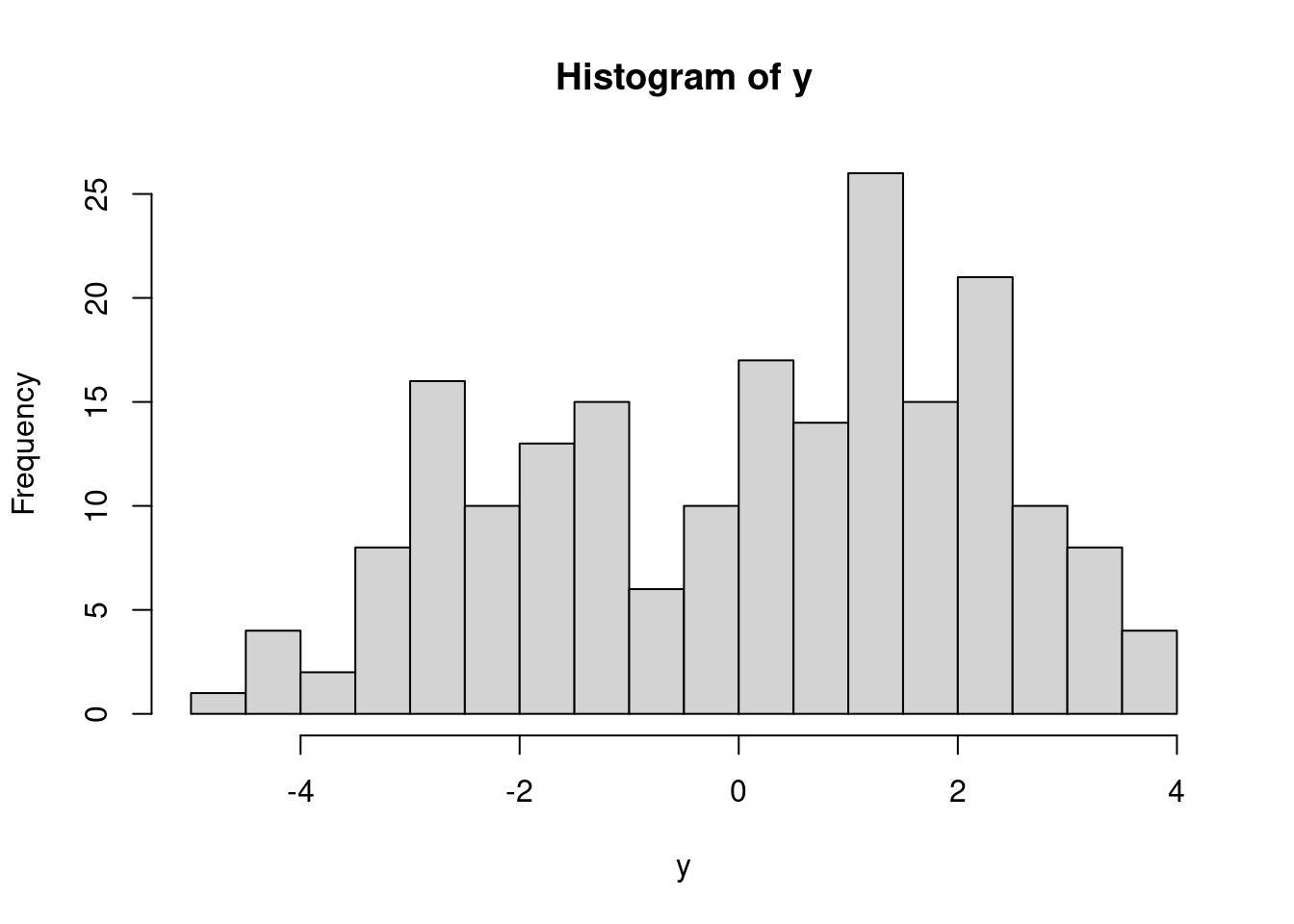

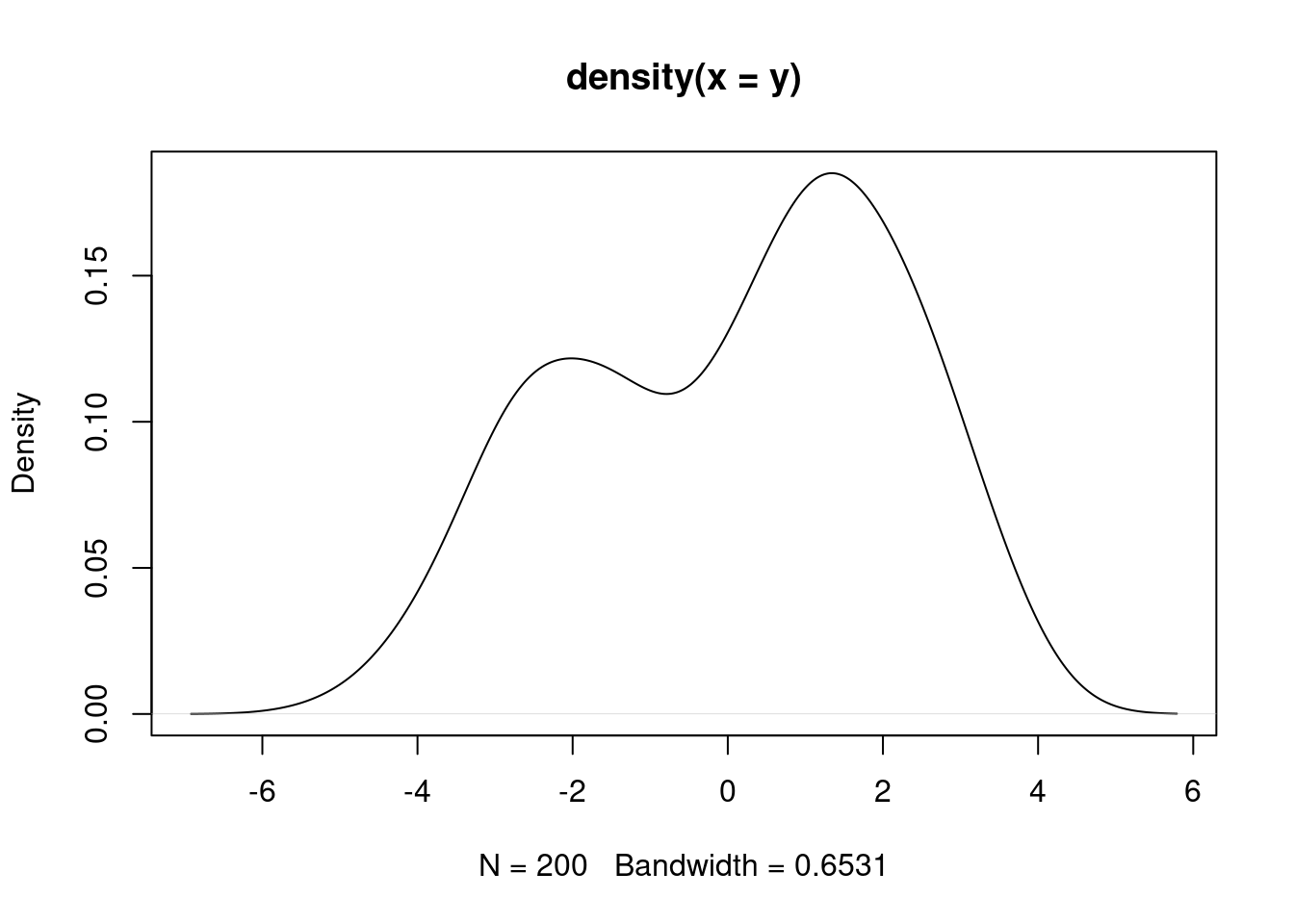

For this example, we will use the data in the attached file mixture.csv.

dat = read.csv("data/mixture.csv", header=FALSE)

y = dat$V1

(n = length(y))[1] 200Let’s visualize these data.

It appears that we have two populations, but we do not know which population each observation belongs to. We can learn this, along with the mixture weights and population-specific parameters with a Bayesian hierarchical model.

We will use a mixture of two normal distributions with variance 1 and different (and unknown) means.

50.9.2 Model

library("rjags")mod_string = " model {

for (i in 1:length(y)) {

y[i] ~ dnorm(mu[z[i]], prec)

z[i] ~ dcat(omega)

}

mu[1] ~ dnorm(-1.0, 1.0/100.0)

mu[2] ~ dnorm(1.0, 1.0/100.0) T(mu[1],) # ensures mu[1] < mu[2]

prec ~ dgamma(1.0/2.0, 1.0*1.0/2.0)

sig = sqrt(1.0/prec)

omega ~ ddirich(c(1.0, 1.0))

} "

set.seed(11)

data_jags = list(y=y)

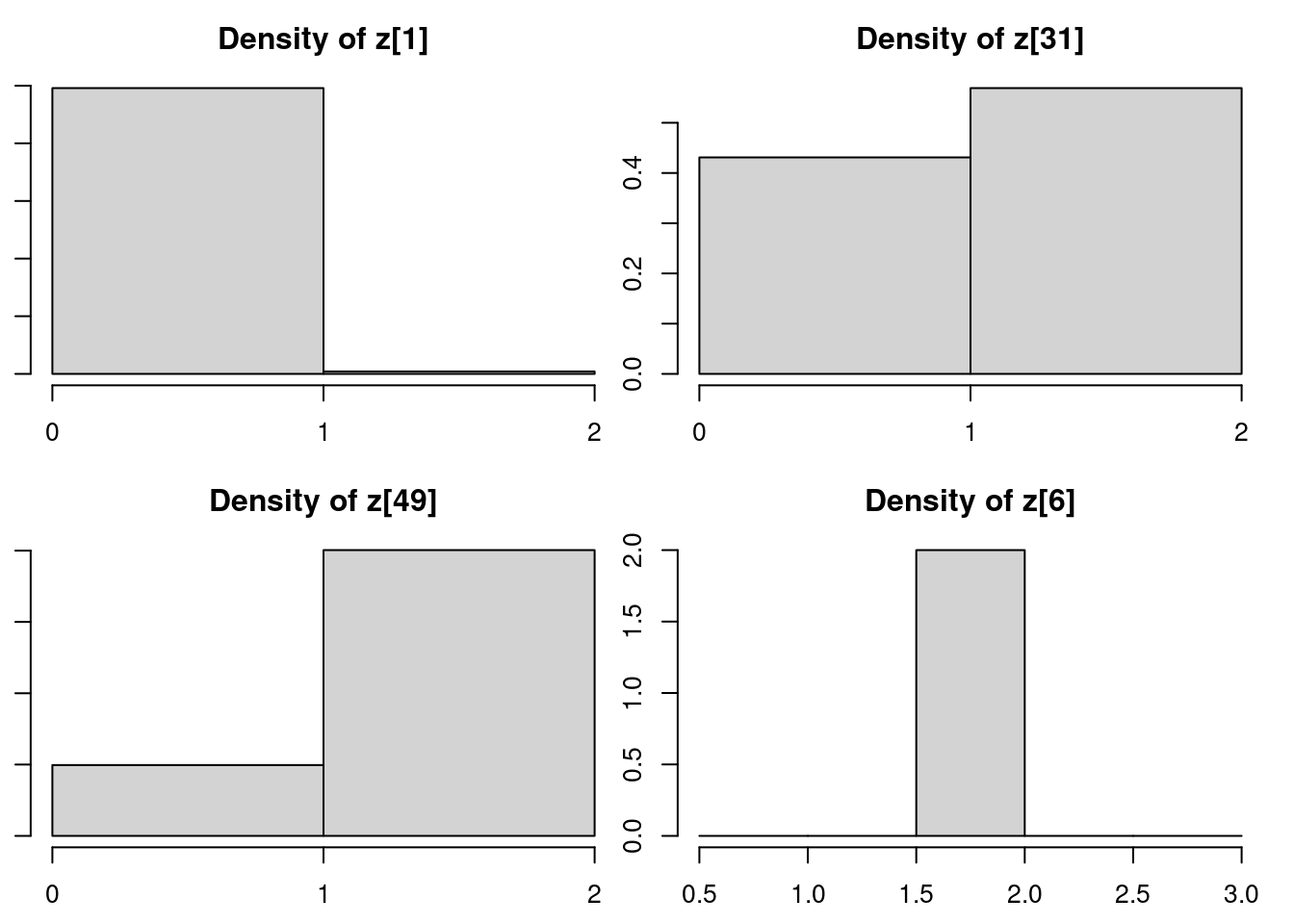

params = c("mu", "sig", "omega", "z[1]", "z[31]", "z[49]", "z[6]") # Select some z's to monitor

mod = jags.model(textConnection(mod_string), data=data_jags, n.chains=3)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 200

Unobserved stochastic nodes: 204

Total graph size: 614

Initializing modelupdate(mod, 1e3)

mod_sim = coda.samples(model=mod,

variable.names=params,

n.iter=5e3)

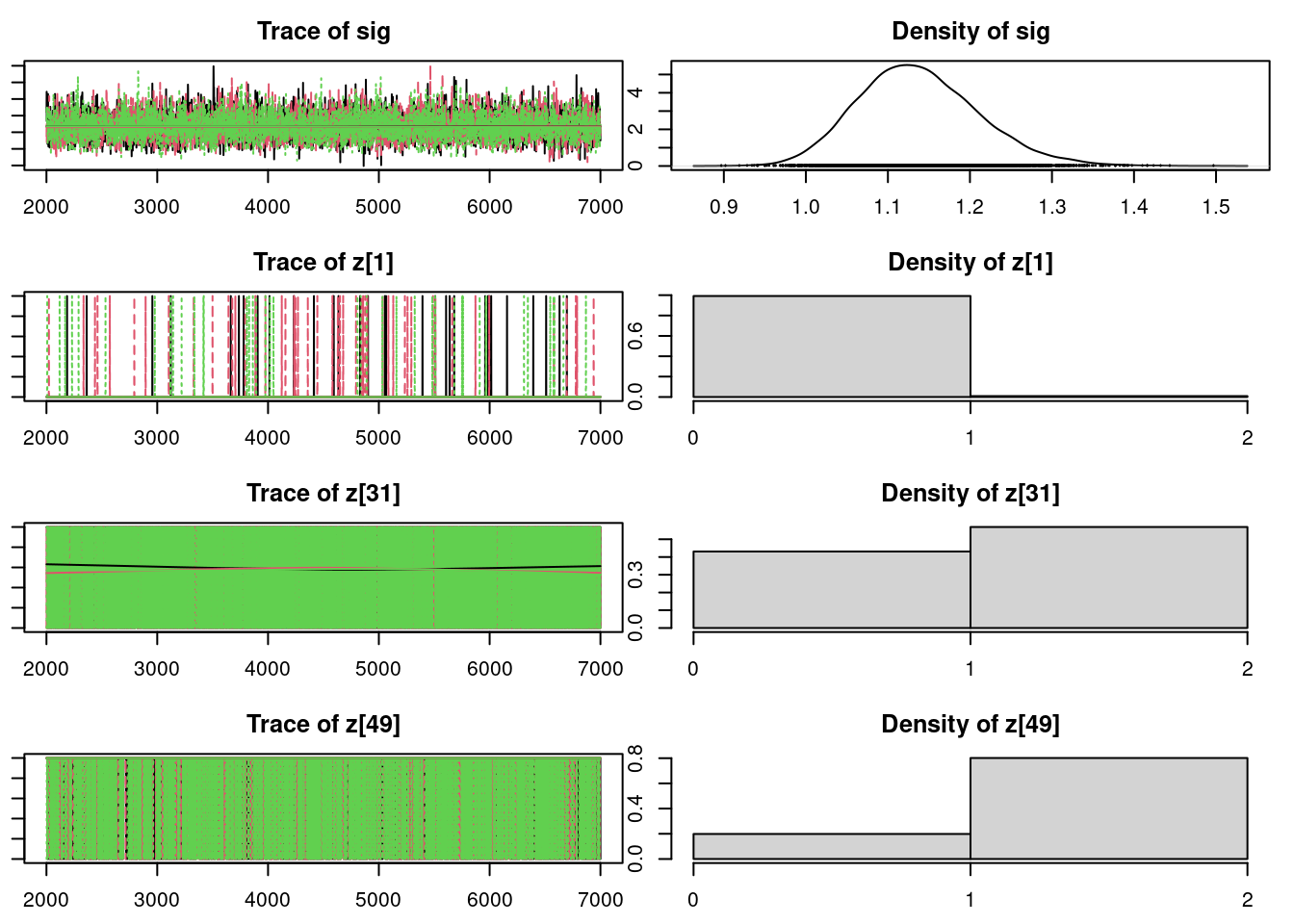

mod_csim = as.mcmc(do.call(rbind, mod_sim))## convergence diagnostics

par(mar = c(2.5, 1, 2.5, 1))

plot(mod_sim, ask=TRUE)autocorr.diag(mod_sim) mu[1] mu[2] omega[1] omega[2] sig

Lag 0 1.000000000 1.00000000 1.000000000 1.000000000 1.000000000

Lag 1 0.530438161 0.33702642 0.292896373 0.292896373 0.417383022

Lag 5 0.066760414 0.03223754 0.045842460 0.045842460 0.035678030

Lag 10 0.015465065 0.01977249 0.016815138 0.016815138 0.002118578

Lag 50 -0.004819543 -0.01272824 -0.002412294 -0.002412294 0.011330059

z[1] z[31] z[49] z[6]

Lag 0 1.0000000000 1.0000000000 1.000000000 NaN

Lag 1 0.0090766668 0.0264389839 0.027122731 NaN

Lag 5 -0.0035641483 0.0078403615 0.012977266 NaN

Lag 10 -0.0091052063 -0.0003363289 -0.003093949 NaN

Lag 50 0.0003506642 -0.0039933435 -0.003920248 NaNeffectiveSize(mod_sim) mu[1] mu[2] omega[1] omega[2] sig z[1] z[31] z[49]

4096.601 6069.676 6176.270 6176.270 5745.915 14032.269 12975.628 13404.704

z[6]

0.000 50.9.3 Results

summary(mod_sim)

Iterations = 2001:7000

Thinning interval = 1

Number of chains = 3

Sample size per chain = 5000

1. Empirical mean and standard deviation for each variable,

plus standard error of the mean:

Mean SD Naive SE Time-series SE

mu[1] -2.1188 0.16570 0.0013529 0.0025918

mu[2] 1.4904 0.12629 0.0010312 0.0016241

omega[1] 0.3877 0.04018 0.0003280 0.0005122

omega[2] 0.6123 0.04018 0.0003280 0.0005122

sig 1.1355 0.07438 0.0006073 0.0009856

z[1] 1.0090 0.09444 0.0007711 0.0007935

z[31] 1.5687 0.49528 0.0040439 0.0043692

z[49] 1.8010 0.39926 0.0032600 0.0034530

z[6] 2.0000 0.00000 0.0000000 0.0000000

2. Quantiles for each variable:

2.5% 25% 50% 75% 97.5%

mu[1] -2.4405 -2.2308 -2.1188 -2.0077 -1.7939

mu[2] 1.2334 1.4075 1.4908 1.5741 1.7375

omega[1] 0.3102 0.3603 0.3869 0.4148 0.4679

omega[2] 0.5321 0.5852 0.6131 0.6397 0.6898

sig 1.0076 1.0836 1.1296 1.1812 1.2964

z[1] 1.0000 1.0000 1.0000 1.0000 1.0000

z[31] 1.0000 1.0000 2.0000 2.0000 2.0000

z[49] 1.0000 2.0000 2.0000 2.0000 2.0000

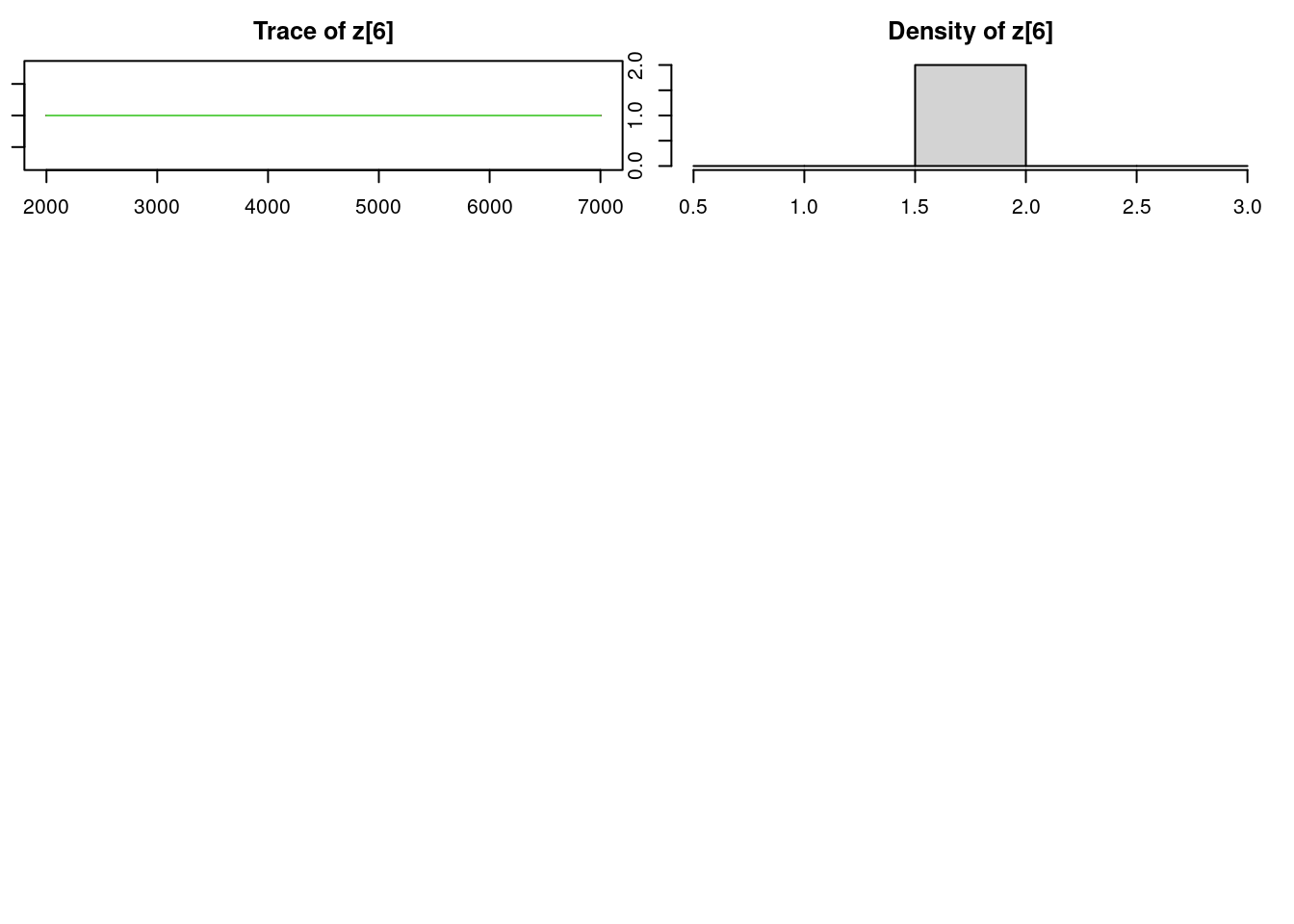

z[6] 2.0000 2.0000 2.0000 2.0000 2.0000## for the population parameters and the mixing weights

par(mar = c(2.5, 1, 2.5, 1))

par(mfrow=c(3,2))

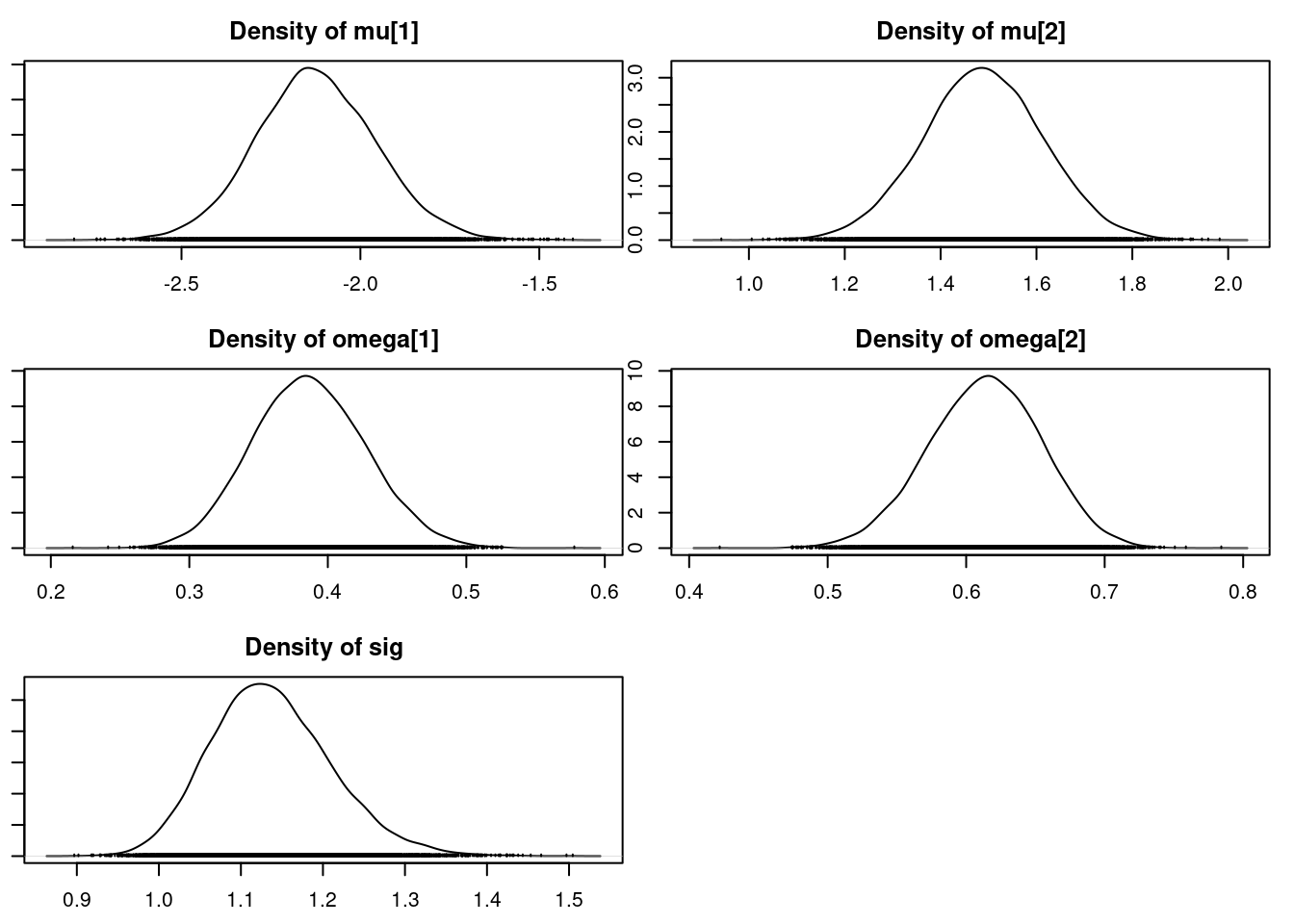

densplot(mod_csim[,c("mu[1]", "mu[2]", "omega[1]", "omega[2]", "sig")])## for the z's

par(mfrow=c(2,2))

par(mar = c(2.5, 1, 2.5, 1))

densplot(mod_csim[,c("z[1]", "z[31]", "z[49]", "z[6]")])table(mod_csim[,"z[1]"]) / nrow(mod_csim) ## posterior probabilities for z[1], the membership of y[1]

1 2

0.991 0.009 table(mod_csim[,"z[31]"]) / nrow(mod_csim) ## posterior probabilities for z[31], the membership of y[31]

1 2

0.4313333 0.5686667 table(mod_csim[,"z[49]"]) / nrow(mod_csim) ## posterior probabilities for z[49], the membership of y[49]

1 2

0.199 0.801 table(mod_csim[,"z[6]"]) / nrow(mod_csim) ## posterior probabilities for z[6], the membership of y[6]

2

1 y[c(1, 31, 49, 6)][1] -2.2661749 -0.3702666 0.0365564 3.7548080If we look back to the y values associated with these z variables we monitored, we see that y_1 is clearly in Population 1’s territory, y_{31} is ambiguous, y_{49} is ambiguous but is closer to Population 2’s territory, and y_6 is clearly in Population 2’s territory. The posterior distributions for the z variables closely reflect our assessment.