## simulate data

y=c(-1,0,1)

n.all =200

p=2 ## p is the order of AR process

for (i in 3:n.all) {

set.seed(i)

U=runif(1)

if(U<=0.5){

y.new=rnorm(1,0.1*y[i-1]+0.1*y[i-2],0.25)

}else if(U>0.8){

y.new=rnorm(1,0.3*y[i-1]+0.5*y[i-2],0.25)

}else{

y.new=rnorm(1,0.4*y[i-1]-0.5*y[i-2],0.25)

}

y=c(y,y.new)

}First I simulate the data using a mixture of AR processes.

The following code is used to determine the number of mixing components in the mixture model. It uses a Gibbs sampler to sample from the posterior distribution of the model parameters and calculates the Deviance Information Criterion (DIC) for models with different numbers of mixing components (2 to 5). The function model_comp_mix takes the total number of components as an argument and returns the DIC for that model.

Most of the code is identical to the code in the previous section. What we have added is the calculation of the DIC for the mixture model.

## Function to determine the number of mixing components

## It is a combination of posterior inference for mixture model and calculation of DIC

library(MCMCpack)Loading required package: codaLoading required package: MASS##

## Markov Chain Monte Carlo Package (MCMCpack)## Copyright (C) 2003-2025 Andrew D. Martin, Kevin M. Quinn, and Jong Hee Park##

## Support provided by the U.S. National Science Foundation## (Grants SES-0350646 and SES-0350613)

##library(mvtnorm)

model_comp_mix=function(tot_num_comp){

## hyperparameters

m0=matrix(rep(0,p),ncol=1) ## p is the order of AR process

C0=10*diag(p)

C0.inv=0.1*diag(p)

n0=0.02

d0=0.02

K=tot_num_comp ## let the number of mixing component to vary by input

a=rep(1,K)

Y=matrix(y[(p+1):n.all],ncol=1) ## y_{p+1:T} n.all is the value of T

Fmtx=matrix(c(y[p:(n.all-1)],y[1:(n.all-p)]),nrow=2,byrow=TRUE) ## design matrix F

n=length(Y)

## The code below is used to obtain posterior samples of model parameters

## Just copy from the last lecture

sample_omega=function(L.cur){

n.vec=sapply(1:K, function(k){sum(L.cur==k)})

rdirichlet(1,a+n.vec)

}

sample_L_one=function(beta.cur,omega.cur,nu.cur,y.cur,Fmtx.cur){

prob_k=function(k){

beta.use=beta.cur[((k-1)*p+1):(k*p)]

omega.cur[k]*dnorm(y.cur,mean=sum(beta.use*Fmtx.cur),sd=sqrt(nu.cur))

}

prob.vec=sapply(1:K, prob_k)

L.sample=sample(1:K,1,prob=prob.vec/sum(prob.vec))

return(L.sample)

}

sample_L=function(y,x,beta.cur,omega.cur,nu.cur){

L.new=sapply(1:n, function(j){sample_L_one(beta.cur,omega.cur,nu.cur,y.cur=y[j,],Fmtx.cur=x[,j])})

return(L.new)

}

sample_nu=function(L.cur,beta.cur){

n.star=n0+n+p*K

err.y=function(idx){

L.use=L.cur[idx]

beta.use=beta.cur[((L.use-1)*p+1):(L.use*p)]

err=Y[idx,]-sum(Fmtx[,idx]*beta.use)

return(err^2)

}

err.beta=function(k.cur){

beta.use=beta.cur[((k.cur-1)*p+1):(k.cur*p)]

beta.use.minus.m0=matrix(beta.use,ncol=1)-m0

t(beta.use.minus.m0)%*%C0.inv%*%beta.use.minus.m0

}

d.star=d0+sum(sapply(1:n,err.y))+sum(sapply(1:K,err.beta))

1/rgamma(1,shape=n.star/2,rate=d.star/2)

}

sample_beta=function(k,L.cur,nu.cur){

idx.select=(L.cur==k)

n.k=sum(idx.select)

if(n.k==0){

m.k=m0

C.k=C0

}else{

y.tilde.k=Y[idx.select,]

Fmtx.tilde.k=Fmtx[,idx.select]

e.k=y.tilde.k-t(Fmtx.tilde.k)%*%m0

Q.k=t(Fmtx.tilde.k)%*%C0%*%Fmtx.tilde.k+diag(n.k)

Q.k.inv=chol2inv(chol(Q.k))

A.k=C0%*%Fmtx.tilde.k%*%Q.k.inv

m.k=m0+A.k%*%e.k

C.k=C0-A.k%*%Q.k%*%t(A.k)

}

rmvnorm(1,m.k,nu.cur*C.k)

}

nsim=20000

## store parameters

beta.mtx=matrix(0,nrow=p*K,ncol=nsim)

L.mtx=matrix(0,nrow=n,ncol=nsim)

omega.mtx=matrix(0,nrow=K,ncol=nsim)

nu.vec=rep(0,nsim)

## initial value

beta.cur=rep(0,p*K)

L.cur=rep(1,n)

omega.cur=rep(1/K,K)

nu.cur=1

## Gibbs Sampler

for (i in 1:nsim) {

set.seed(i)

## sample omega

omega.cur=sample_omega(L.cur)

omega.mtx[,i]=omega.cur

## sample L

L.cur=sample_L(Y,Fmtx,beta.cur,omega.cur,nu.cur)

L.mtx[,i]=L.cur

## sample nu

nu.cur=sample_nu(L.cur,beta.cur)

nu.vec[i]=nu.cur

## sample beta

beta.cur=as.vector(sapply(1:K, function(k){sample_beta(k,L.cur,nu.cur)}))

beta.mtx[,i]=beta.cur

if(i%%1000==0){

print(i)

}

}

## Now compute DIC for mixture model

## Somilar as the calculation of DIC in Module 2

cal_log_likelihood_mix_one=function(idx,beta,nu,omega){

norm.lik=rep(0,K)

for (k.cur in 1:K) {

mean.norm=sum(Fmtx[,idx]*beta[((k.cur-1)*p+1):(k.cur*p)])

norm.lik[k.cur]=dnorm(Y[idx,1],mean.norm,sqrt(nu),log=FALSE)

}

log.lik=log(sum(norm.lik*omega))

return(log.lik)

}

cal_log_likelihood_mix=function(beta,nu,omega){

sum(sapply(1:n, function(idx){cal_log_likelihood_mix_one(idx=idx,beta=beta,nu=nu,omega=omega)}))

}

sample.select.idx=seq(10001,20000,by=1)

beta.mix=rowMeans(beta.mtx[,sample.select.idx])

nu.mix=mean(nu.vec[sample.select.idx])

omega.mix=rowMeans(omega.mtx[,sample.select.idx])

log.lik.bayes.mix=cal_log_likelihood_mix(beta.mix,nu.mix,omega.mix)

post.log.lik.mix=sapply(sample.select.idx, function(k){cal_log_likelihood_mix(beta.mtx[,k],nu.vec[k],omega.mtx[,k])})

E.post.log.lik.mix=mean(post.log.lik.mix)

p_DIC.mix=2*(log.lik.bayes.mix-E.post.log.lik.mix)

DIC.mix=-2*log.lik.bayes.mix+2*p_DIC.mix

return(DIC.mix)

}

## Run this code will give you DIC corresponding to mixture model with 2:5 mixing components

mix.model.all=sapply(2:5,model_comp_mix)[1] 1000

[1] 2000

[1] 3000

[1] 4000

[1] 5000

[1] 6000

[1] 7000

[1] 8000

[1] 9000

[1] 10000

[1] 11000

[1] 12000

[1] 13000

[1] 14000

[1] 15000

[1] 16000

[1] 17000

[1] 18000

[1] 19000

[1] 20000

[1] 1000

[1] 2000

[1] 3000

[1] 4000

[1] 5000

[1] 6000

[1] 7000

[1] 8000

[1] 9000

[1] 10000

[1] 11000

[1] 12000

[1] 13000

[1] 14000

[1] 15000

[1] 16000

[1] 17000

[1] 18000

[1] 19000

[1] 20000

[1] 1000

[1] 2000

[1] 3000

[1] 4000

[1] 5000

[1] 6000

[1] 7000

[1] 8000

[1] 9000

[1] 10000

[1] 11000

[1] 12000

[1] 13000

[1] 14000

[1] 15000

[1] 16000

[1] 17000

[1] 18000

[1] 19000

[1] 20000

[1] 1000

[1] 2000

[1] 3000

[1] 4000

[1] 5000

[1] 6000

[1] 7000

[1] 8000

[1] 9000

[1] 10000

[1] 11000

[1] 12000

[1] 13000

[1] 14000

[1] 15000

[1] 16000

[1] 17000

[1] 18000

[1] 19000

[1] 20000This code takes a rather long time to run. It would be a good idea to save the output as a csv with k, DIC Second it requires access to the variables

y,n.all, andpI defined these variables in the previous section, so you can run this code after running the previous section.Second we get to use this code in the ?sec-capstone-lm-infer-mix-comp It works fine for the earthquake data which is an AR(p) with p>1. But for the google tends data for covid which is an ar(1) process it has multiple issues. The first group were related to algebra - certain vectors and matrices are incompatible… Next I found that gibs sampler crashed due to numerical instability when the variance is too small.

I mention all this as the feedback asked to refer back to this section and added that the choice of prior is important. We actually saw earlier in Section 104.5 how to check the robustness of our model via prior sensitivity analysis! However the exercise has an explicit prior in it code so it seems strange that it is leads to bad DIC values.

In fact, if we think about it, if DIC should be able to inform us regarding the best model parameters. However we may need to use it in a bayesian optimization framework to find the best set of parameters and hyperparameters.

I hope I can figure out this matter soon however I am now moving on to the final project and will have to come back to this later, time permitting.

However one useful point is that I will prefer to tackle the earthquake data first as the code works fine for it and for the google trends data, it seems a bit of a trivial task to fit an AR(1) for the final project.

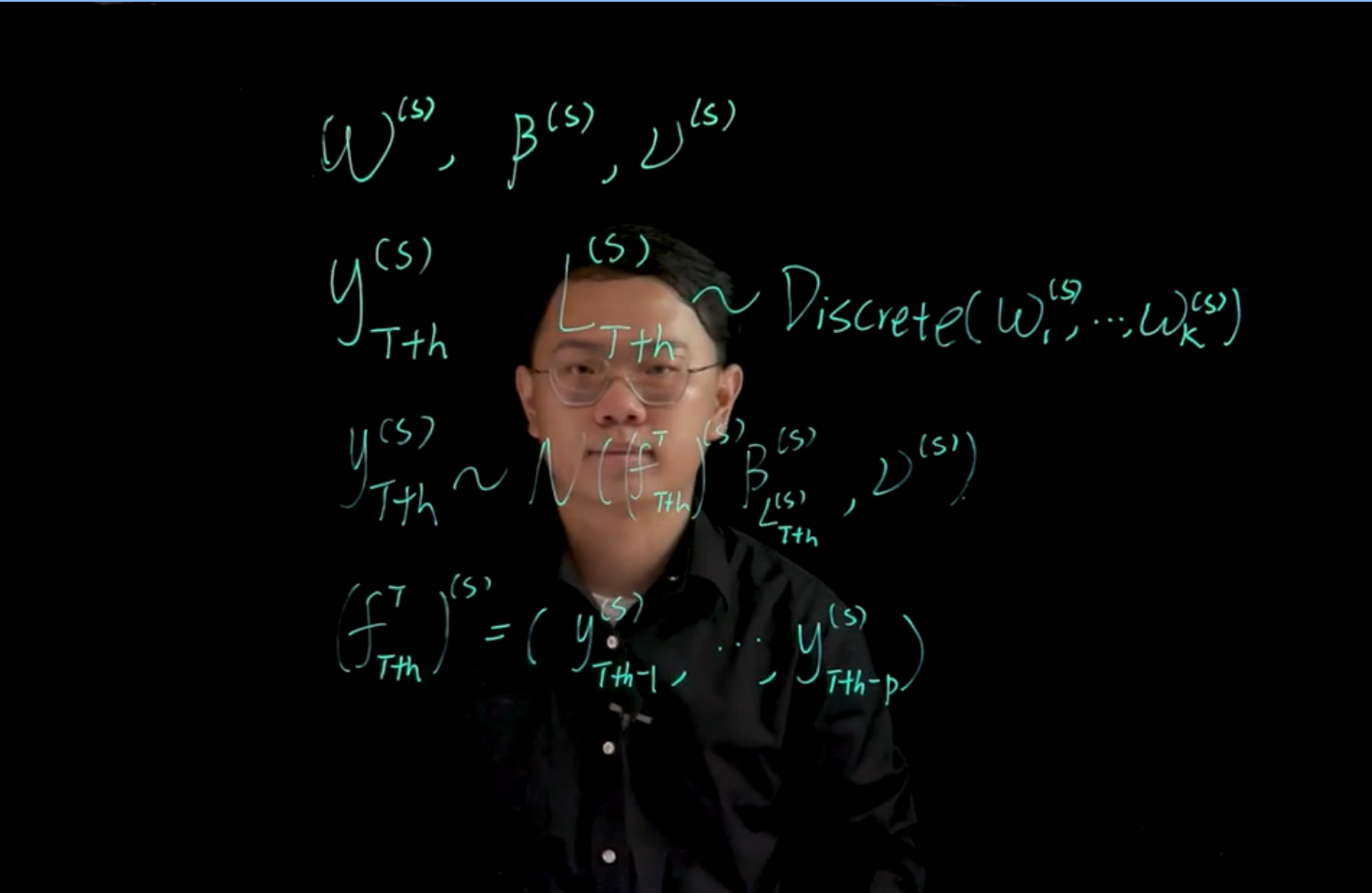

111.1 Prediction for location mixture of AR model

The following code can be used to obtain the h-step ahead prediction of the time series y using the posterior samples from the mixture model. The function y_pred_h_step takes two arguments: h.step, which is the number of steps ahead for prediction, and s, which is the index of the posterior sample to use for prediction.

## the prediction function for mixture model

## Obtain the sth posterior sample for the h-step ahead prediction of y

y_pred_h_step <- function(h.step,s){

omega.vec.use = omega.mtx[,s]

beta.use = beta.mtx[,s]

nu.use = nu.vec[s]

for (i in 1:h.step) {

k.use = sample(1:K,1,prob=omega.vec.use)

beta.cat = beta.use[((k.use-1)*p+1):(k.use*p)]

mu.y = sum(y.cur*phi.cat)

y.new = rnorm(1,mu.y,sqrt(nu.use))

y.pred[i] = y.new

y.cur = c(y.new,y.cur)

y.cur = y.cur[-length(y.cur)]

}

return(y.pred)

}