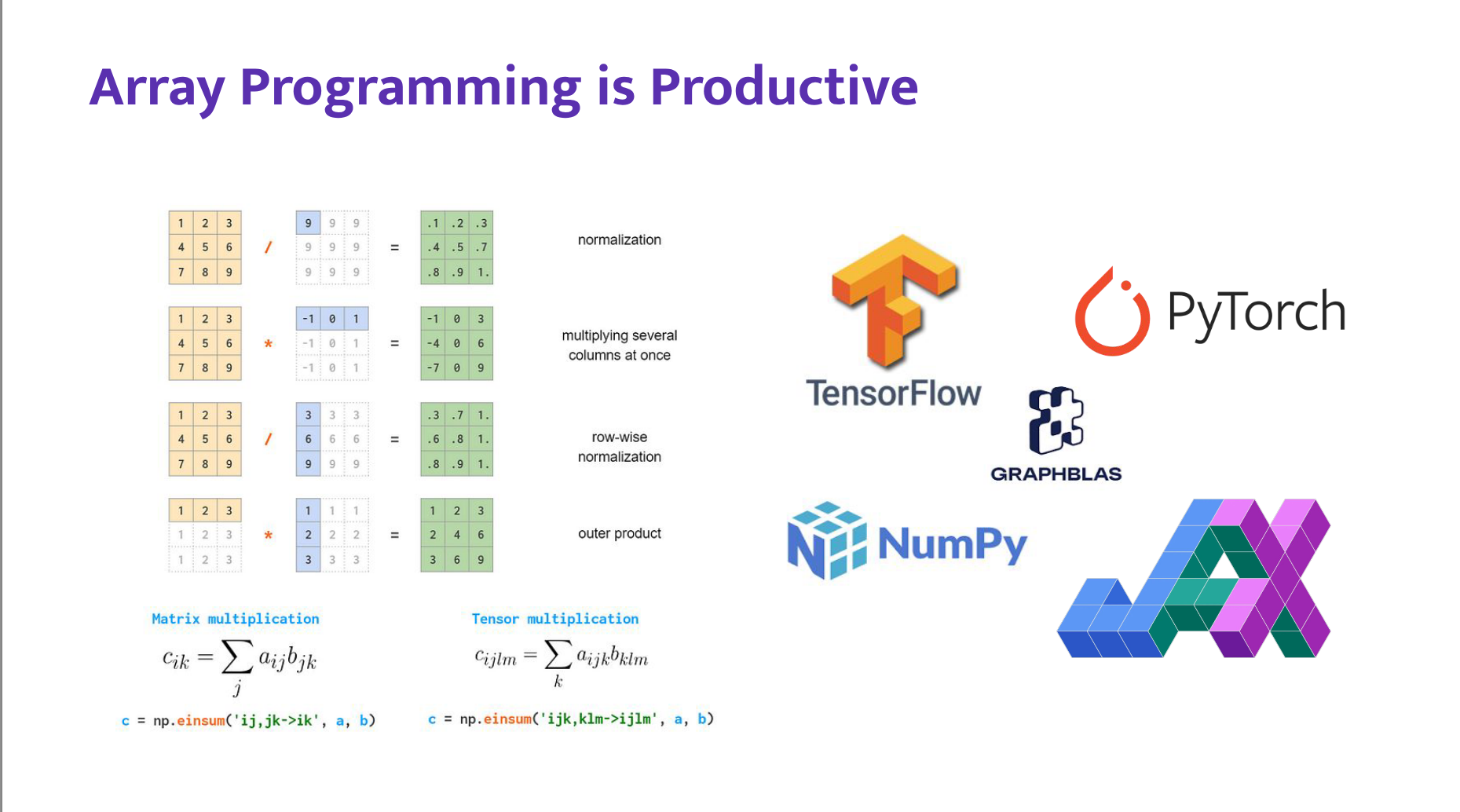

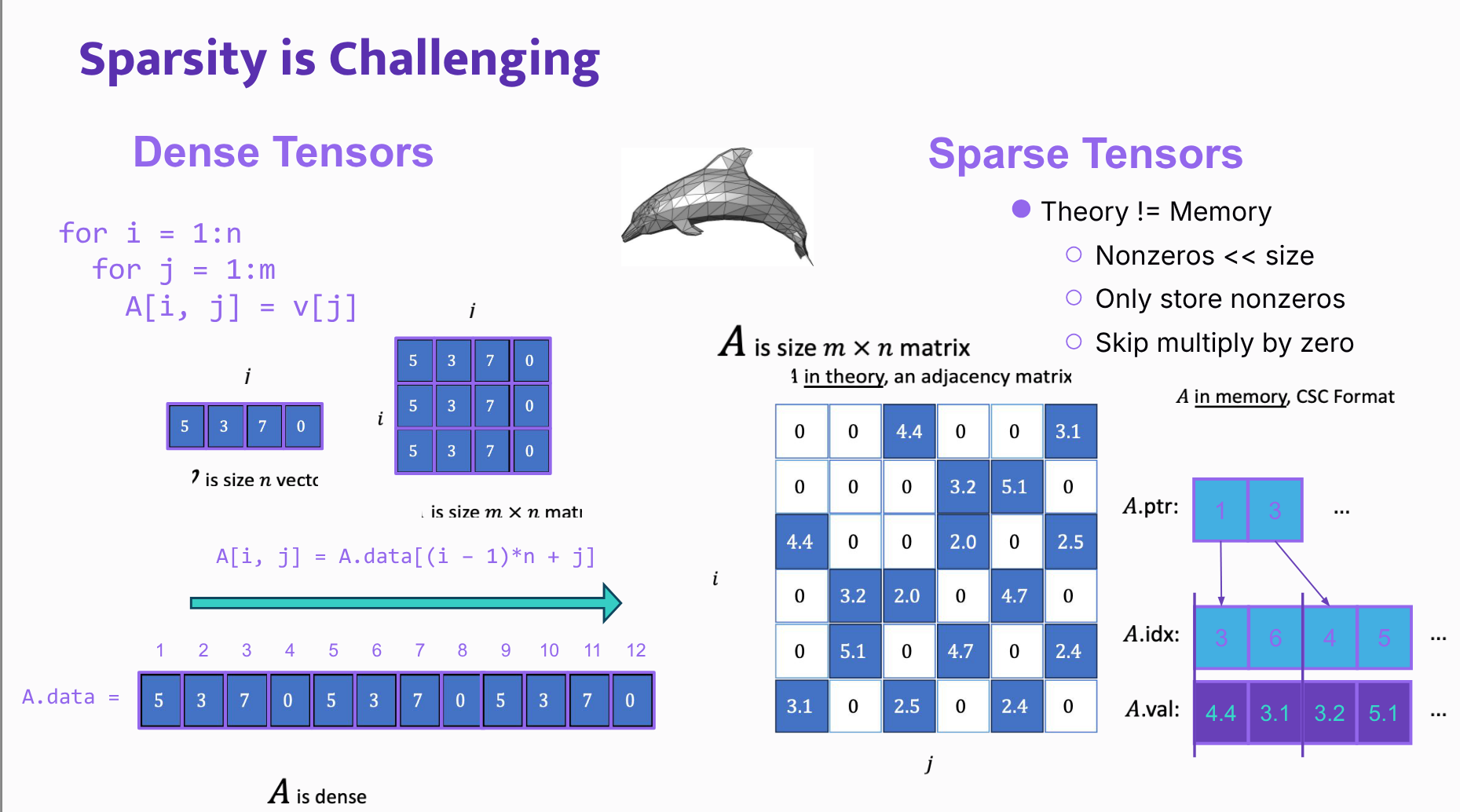

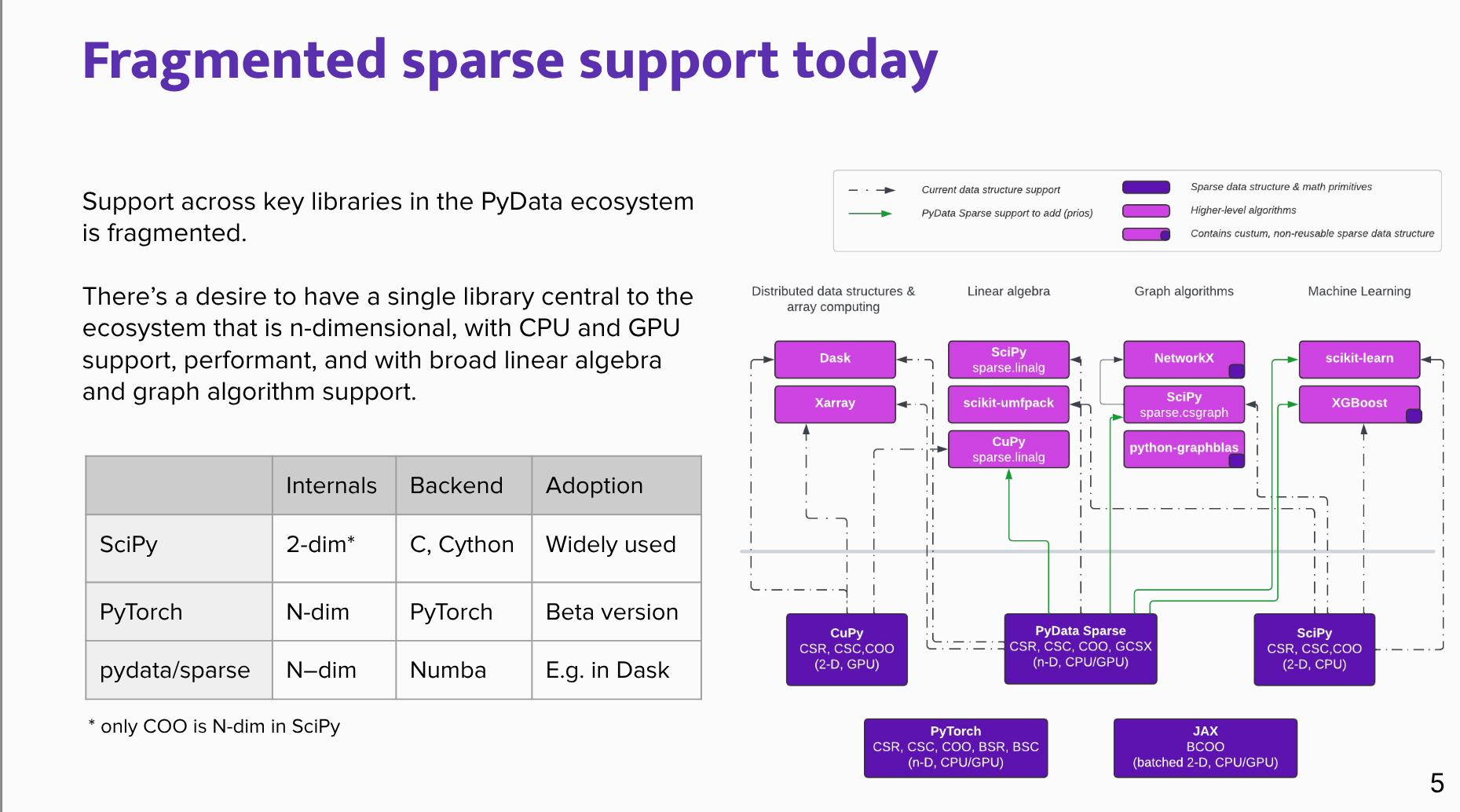

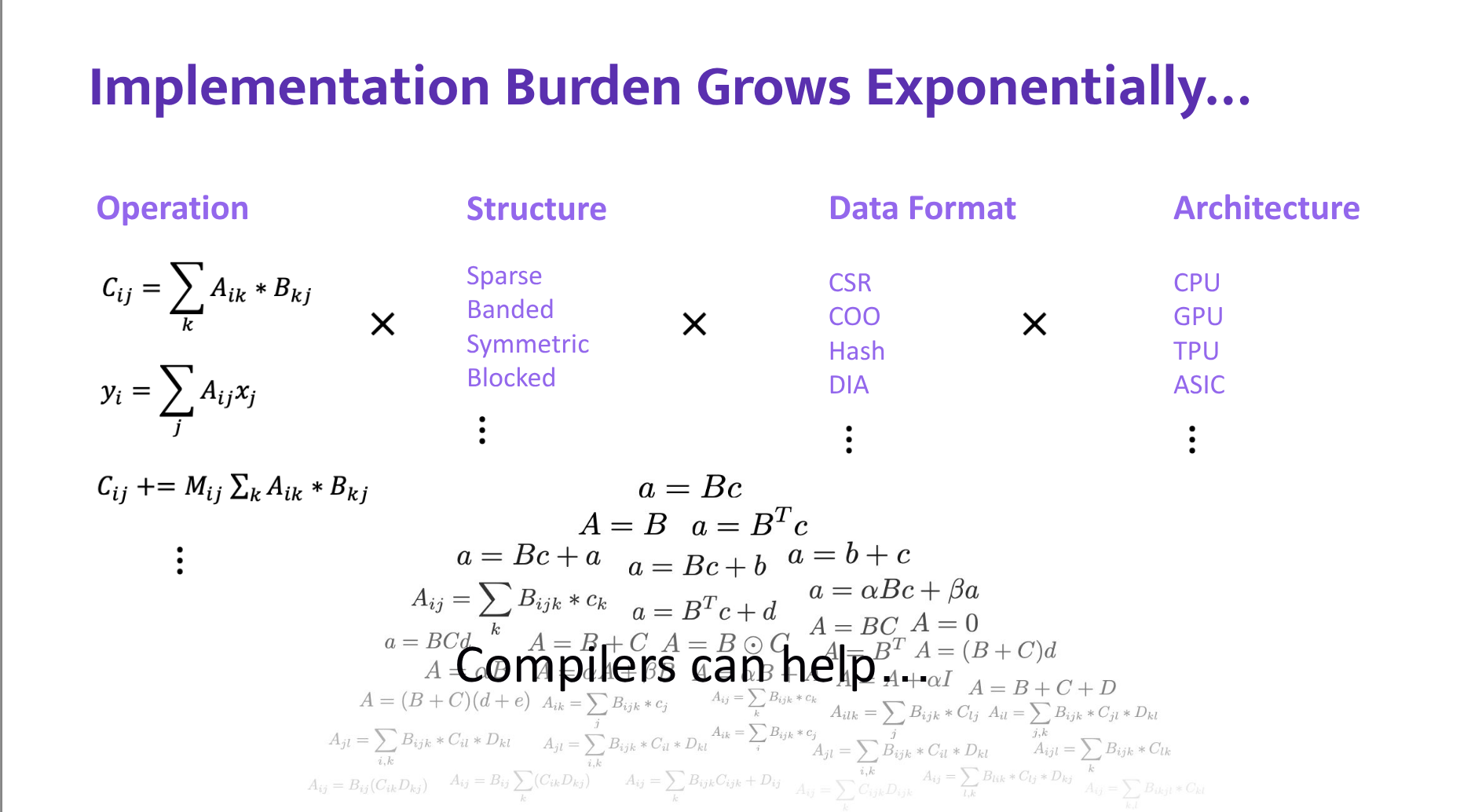

The Scientific Python Ecosystem offers a wide variety of numerical packages, such as NumPy, CuPy, and JAX. Sparse computing is another domain that attracts a lot of attention in the community.

In this talk, we will present the current landscape of sparse computing in the Python ecosystem and our efforts to revive and expand it.

Our main contributions to the Python ecosystem cover:

- making a novel

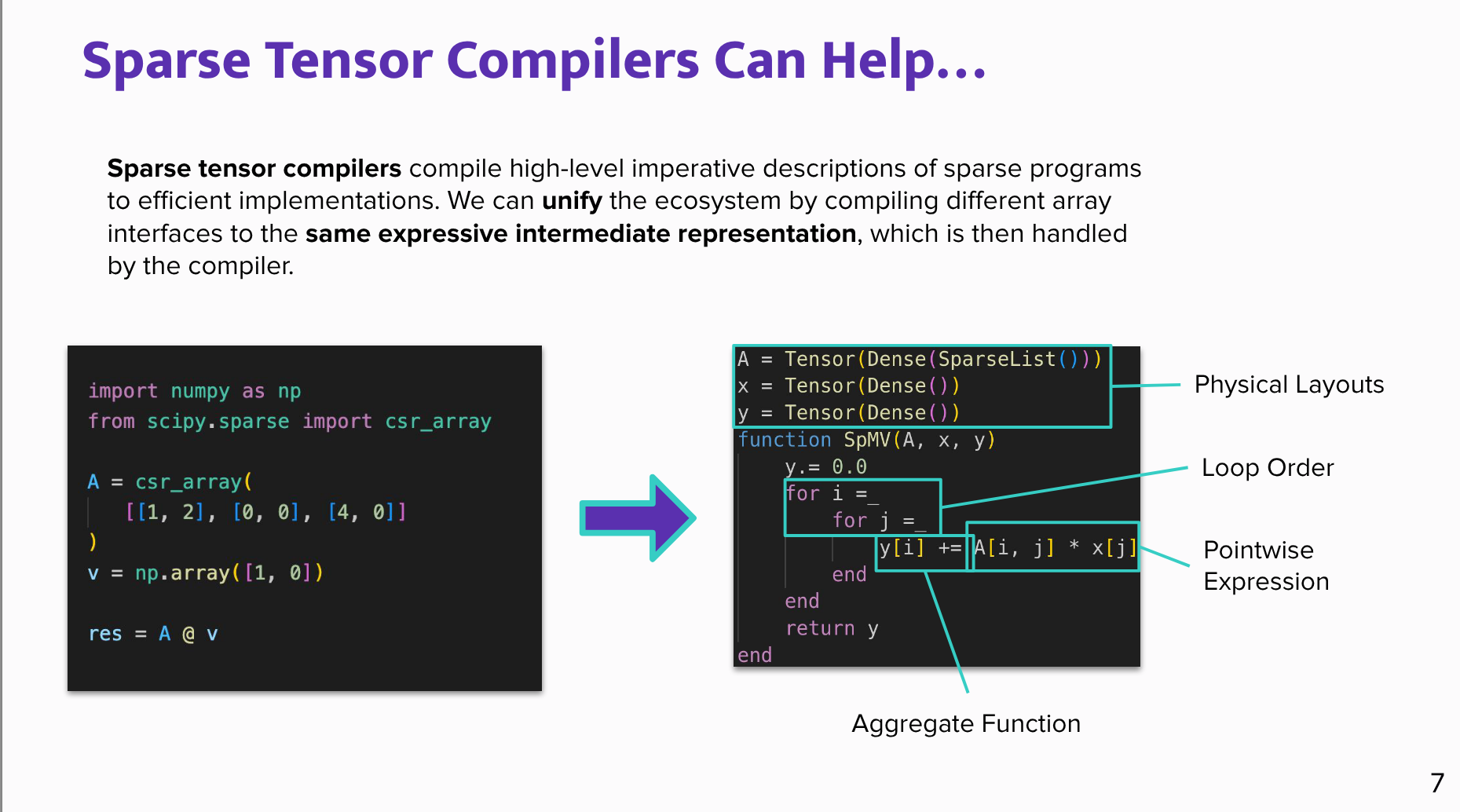

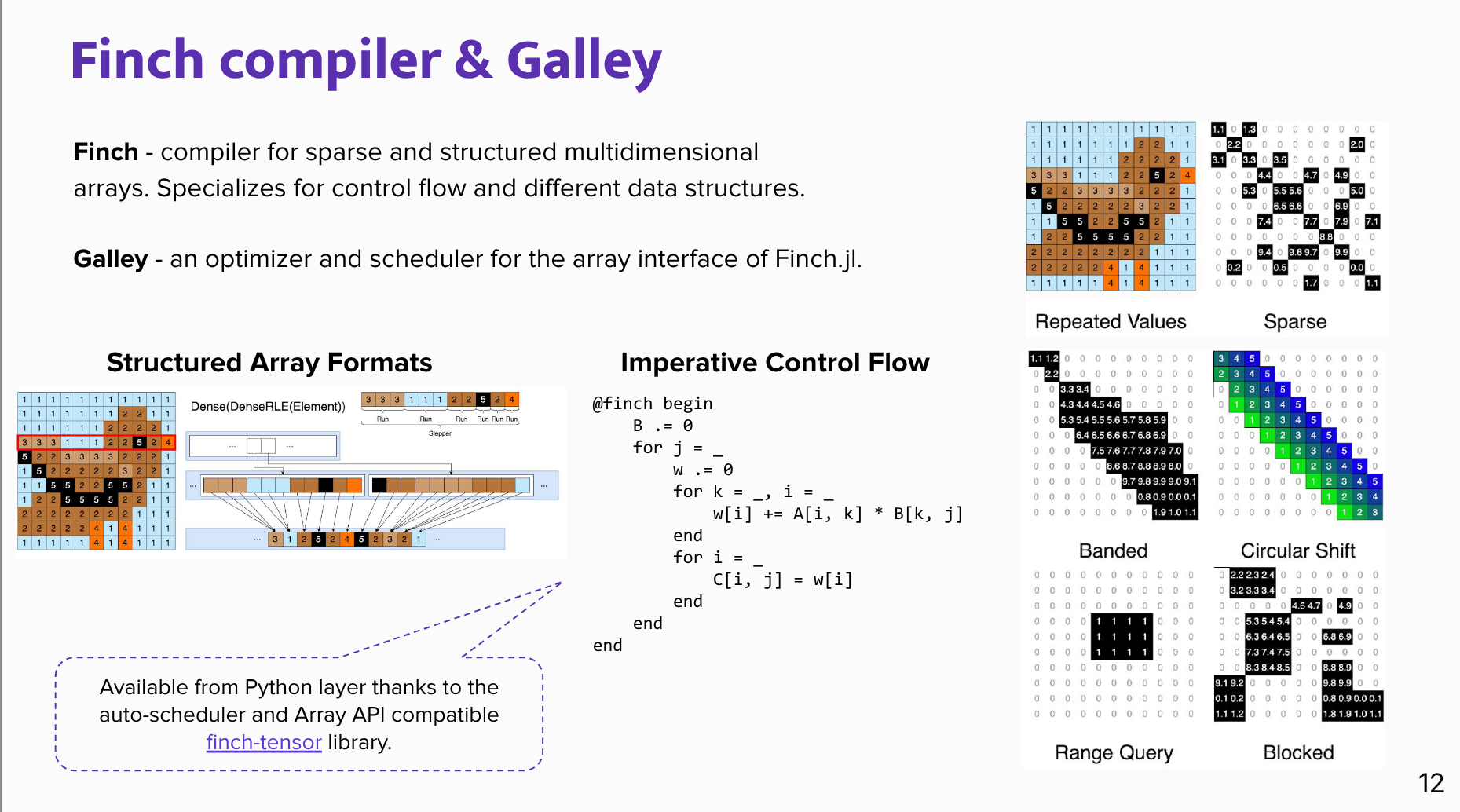

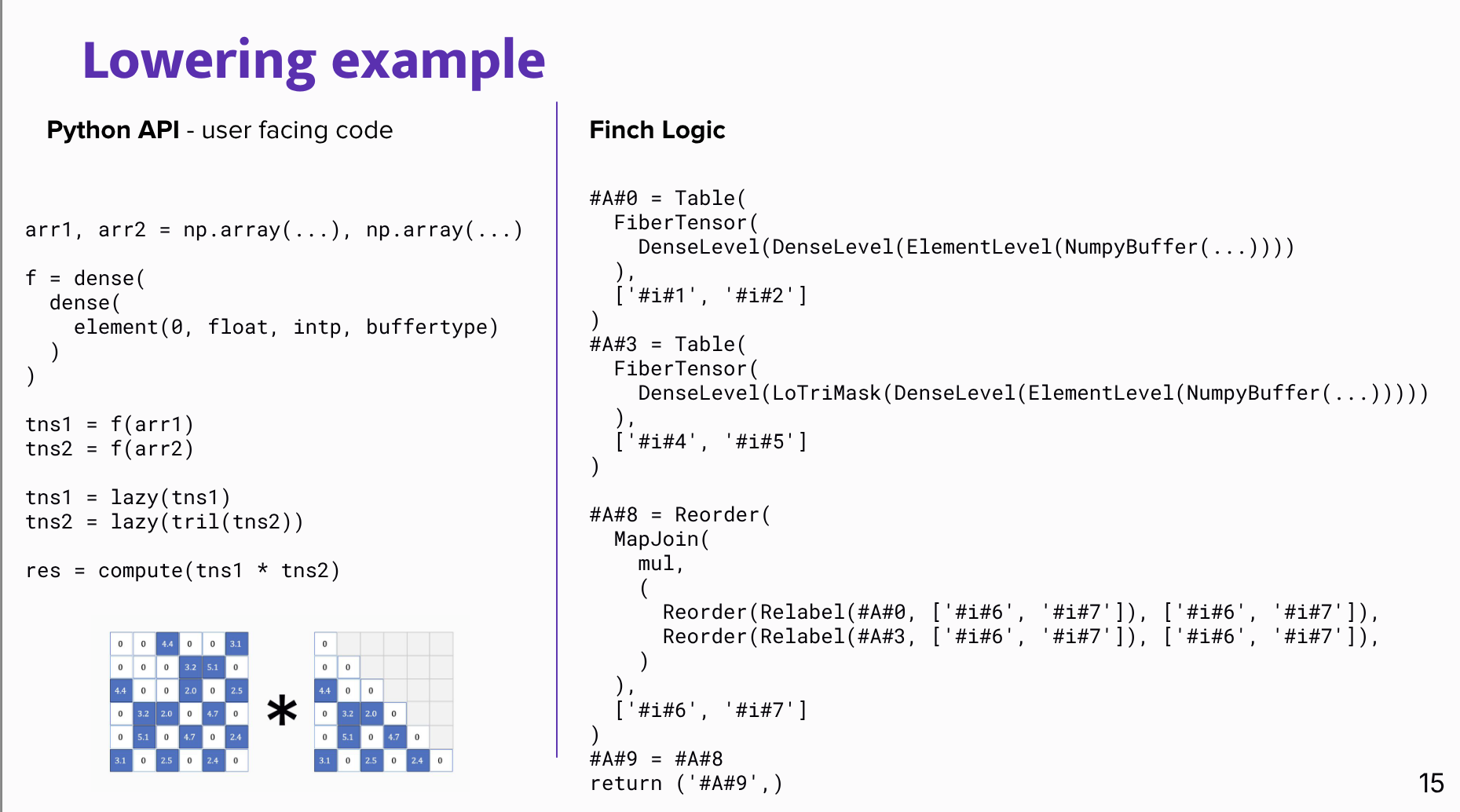

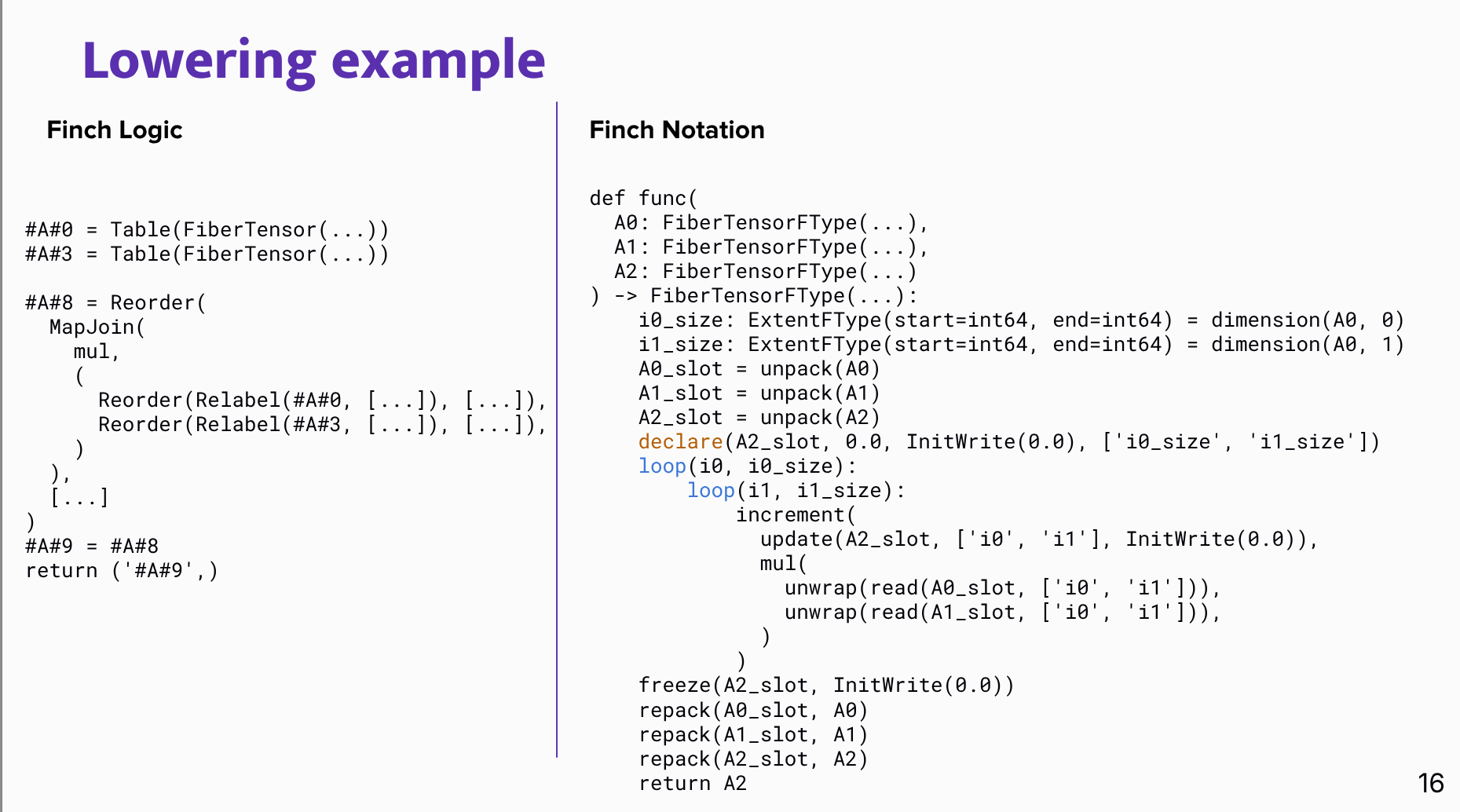

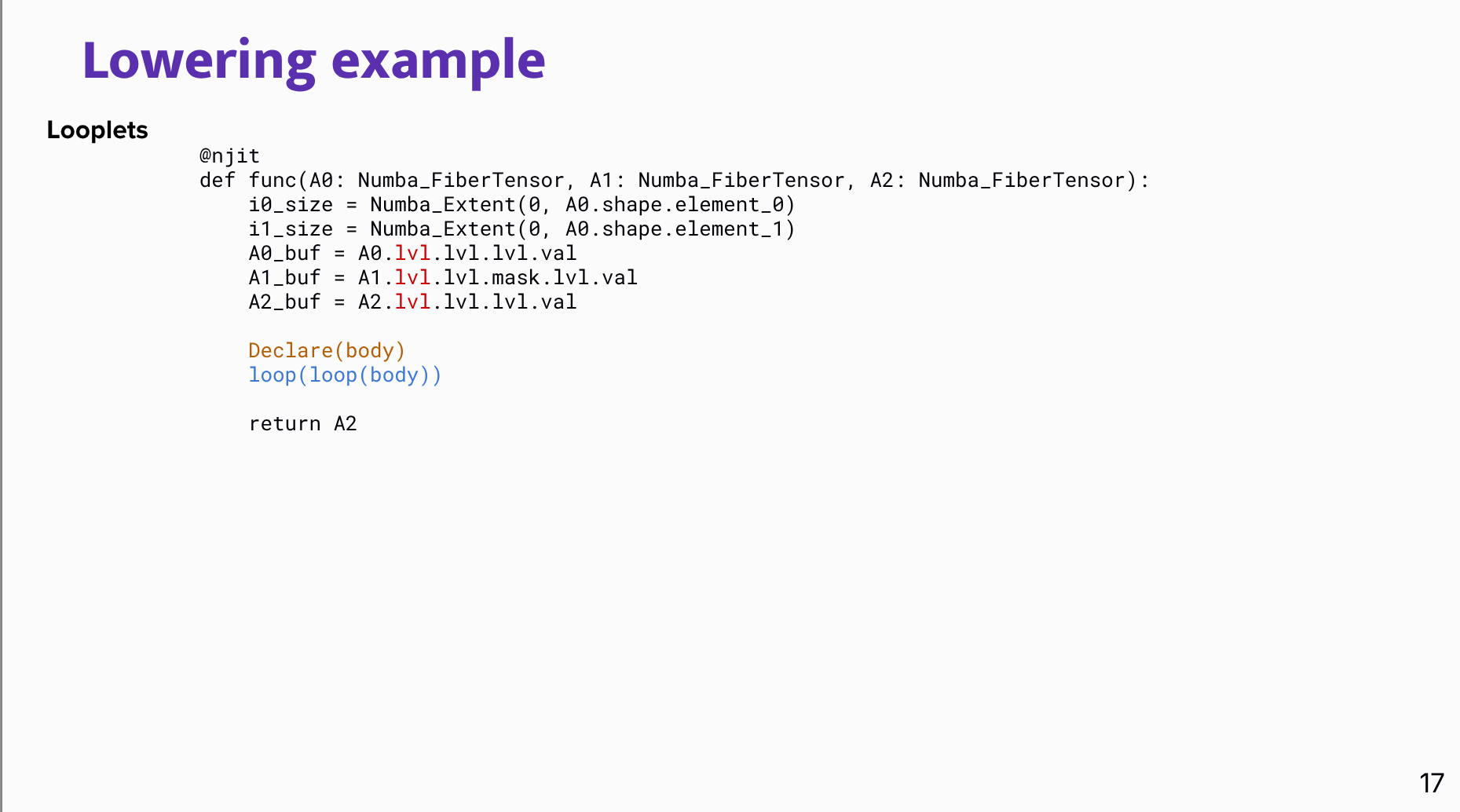

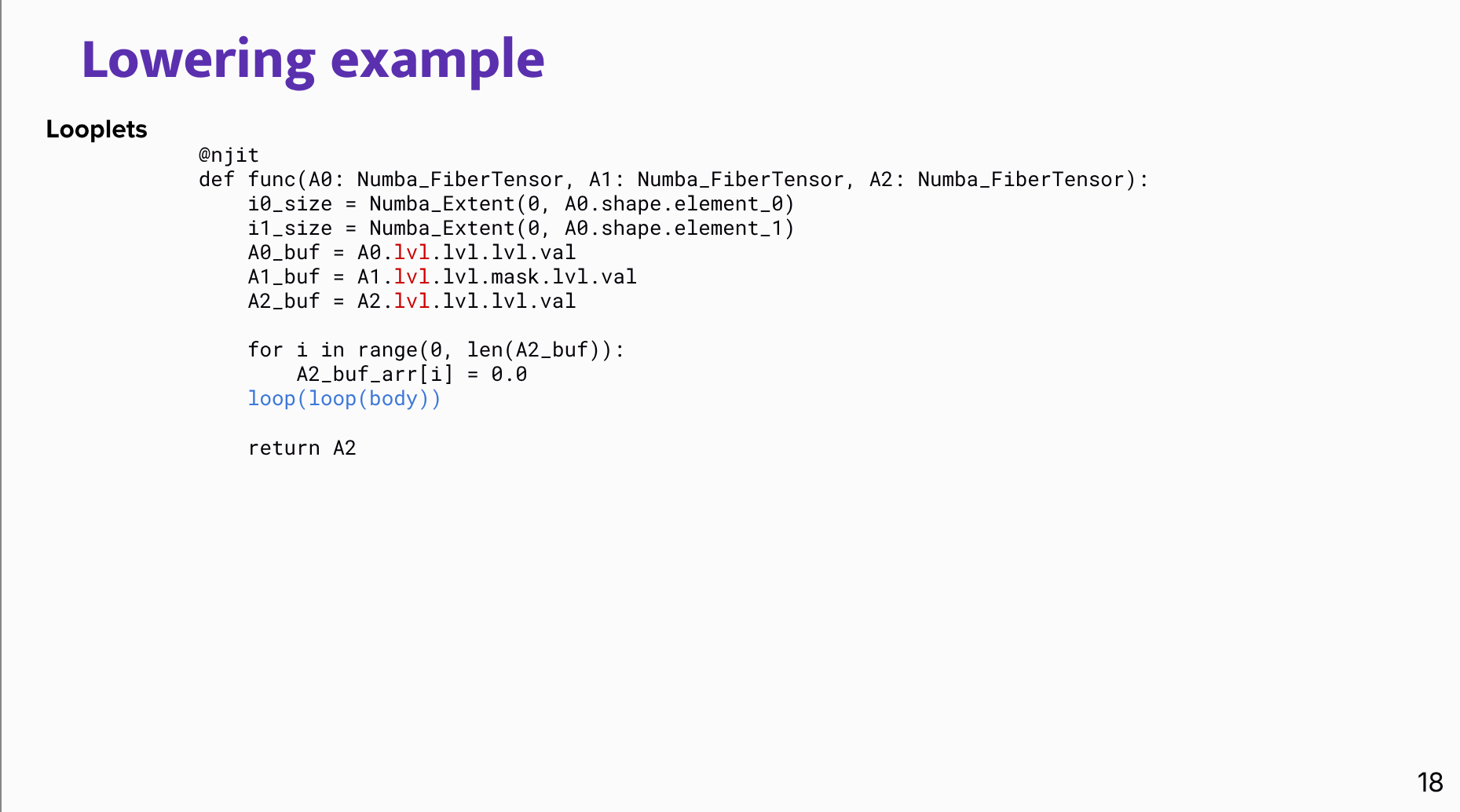

Finchsparse tensor compiler and Galley scheduler available for the community, - standardizing various aspects of sparse computing.

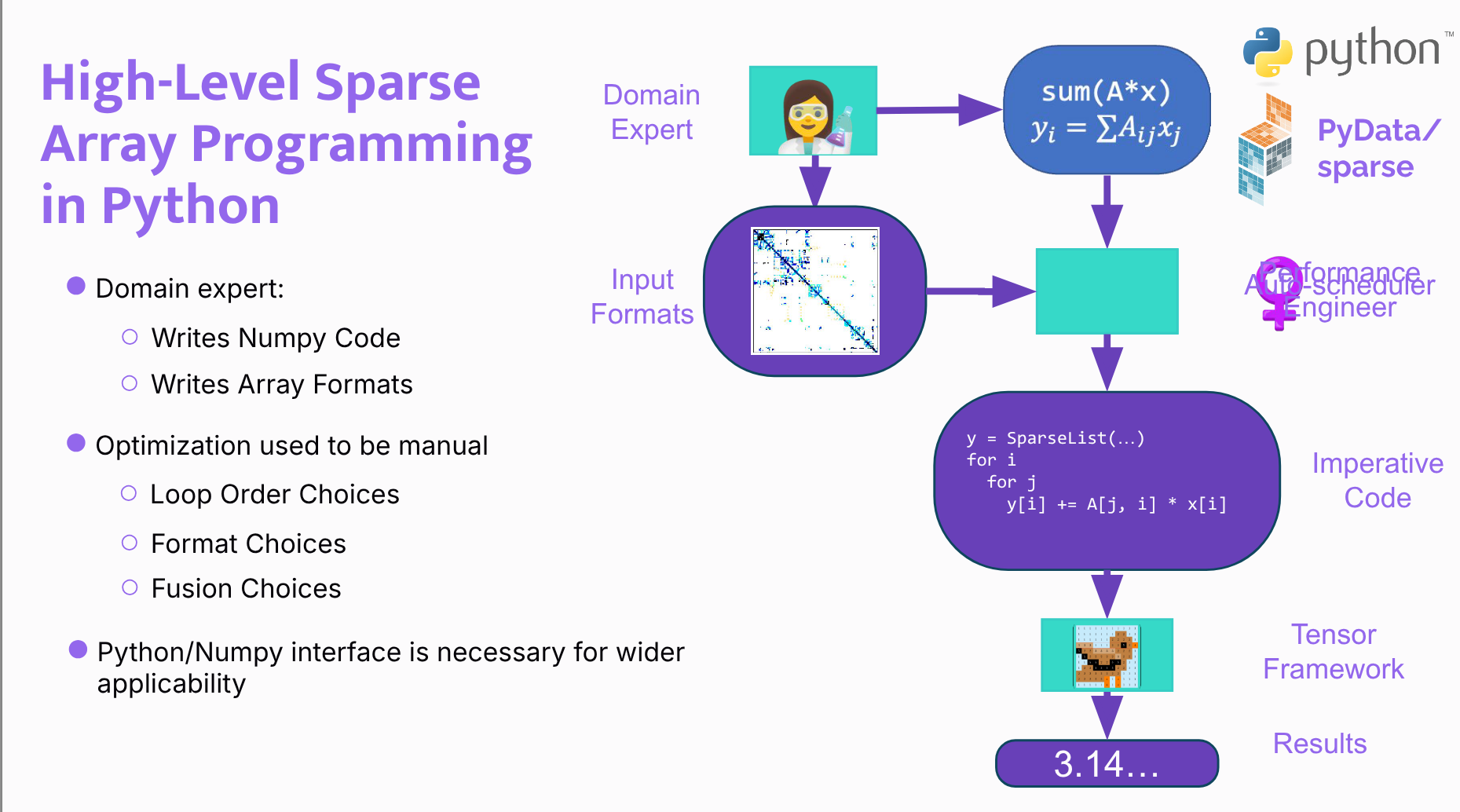

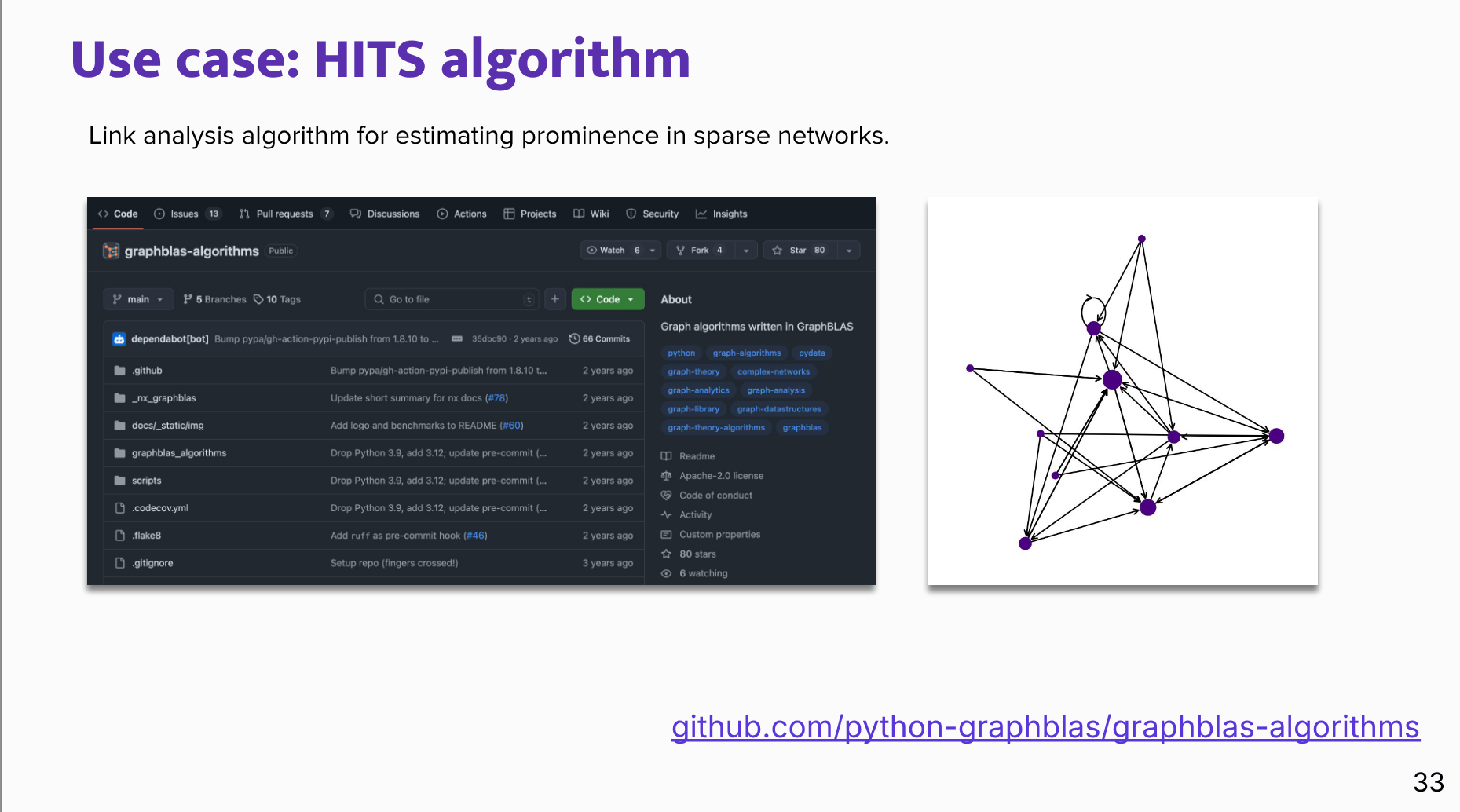

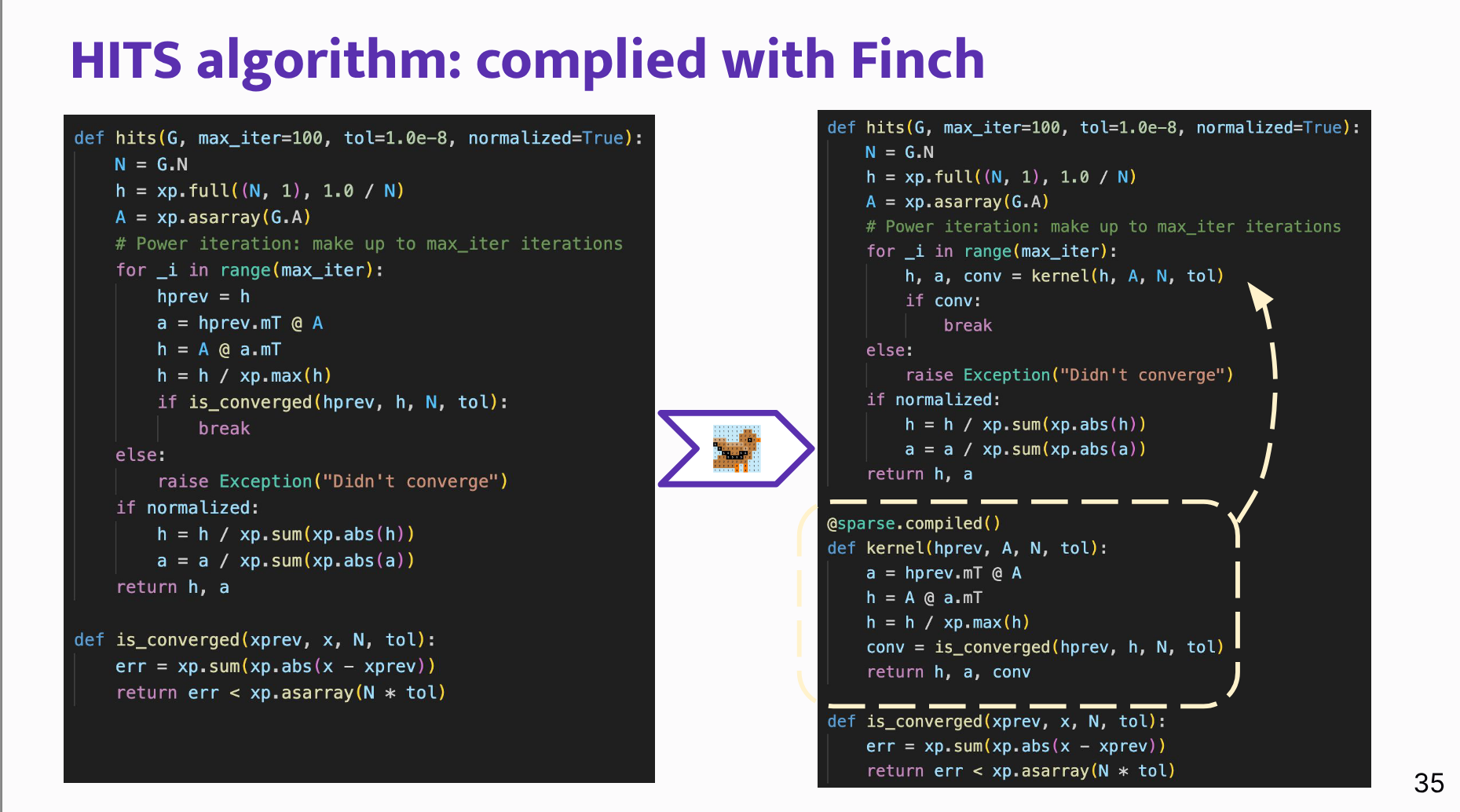

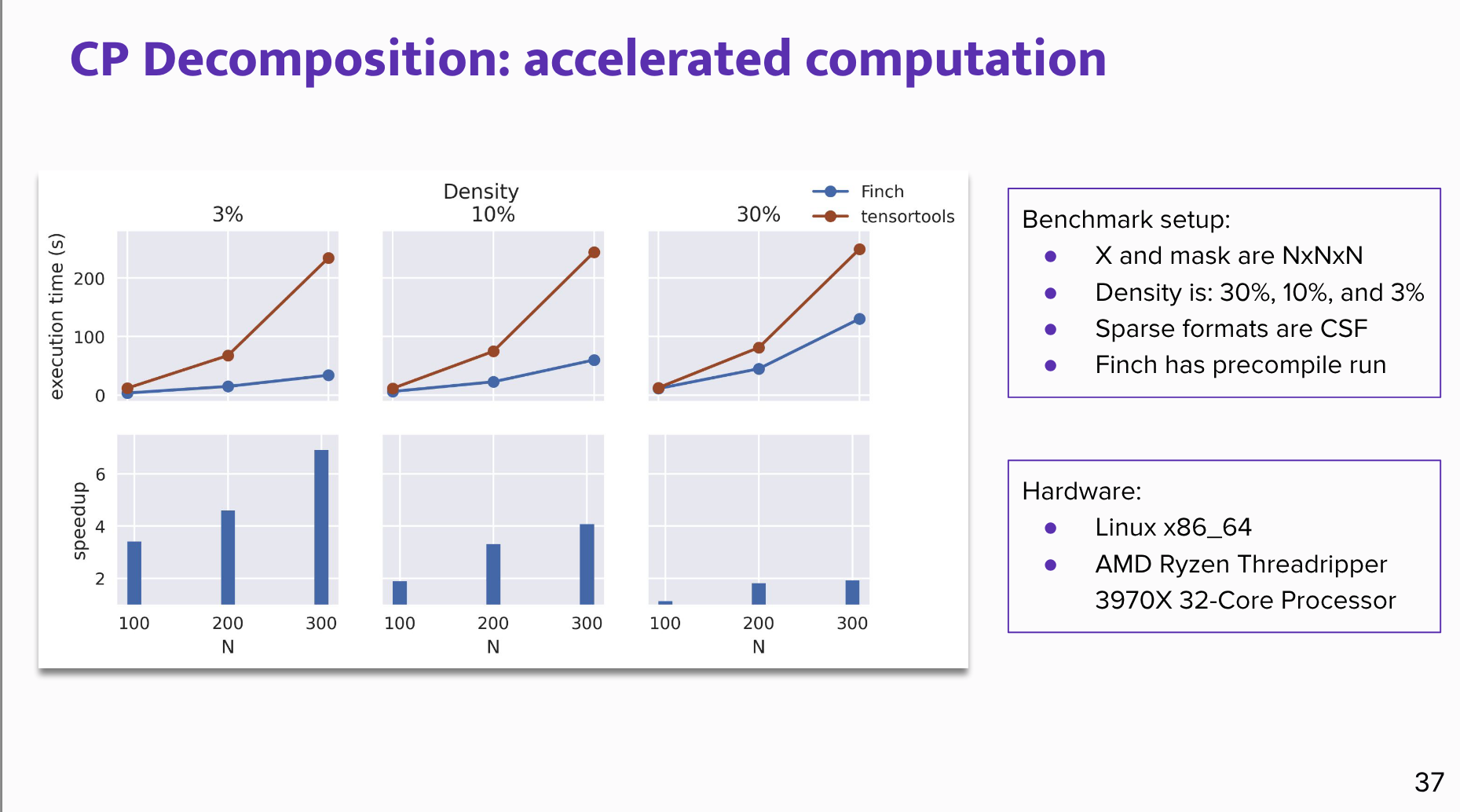

We will show how to use the Finch compiler with the PyData/Sparse package and how it outperforms well-established alternatives for multiple kernels, such as [MTTKRP](http://tensor-compiler.org/docs/data_analytics.html) or [SDDMM](http://tensor-compiler.org/docs/machine_learning.html).
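For readers new to the two kernels, here is a minimal pure Python sketch of what they compute, following the index notation on the linked TACO pages. It uses dict-of-keys sparse storage, a representation chosen purely for illustration (not PyData/Sparse's or Finch's internals), and the names `sddmm`, `mttkrp` and `n_rows` are hypothetical. The point it shows is why fused sparse kernels matter: SDDMM evaluates dot products only at the stored positions of `S`, costing roughly nnz(S)·K work instead of the m·n·K of "dense matmul, then mask".

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]                         # dense matrix as a list of rows
SparseMatrix = Dict[Tuple[int, int], float]        # (i, j) -> stored value
SparseTensor3 = Dict[Tuple[int, int, int], float]  # (i, k, l) -> stored value


def sddmm(S: SparseMatrix, A: Matrix, B: Matrix) -> SparseMatrix:
    """C[i, j] = S[i, j] * sum_k A[i][k] * B[k][j], only where S is stored.

    The dense product A @ B is never materialized: each dot product is
    computed only for the (i, j) positions present in S.
    """
    inner = len(B)  # shared dimension K
    out: SparseMatrix = {}
    for (i, j), s in S.items():
        dot = sum(A[i][k] * B[k][j] for k in range(inner))
        out[(i, j)] = s * dot
    return out


def mttkrp(X: SparseTensor3, C: Matrix, D: Matrix, n_rows: int) -> Matrix:
    """M[i][j] = sum_{k, l} X[i, k, l] * C[k][j] * D[l][j].

    Only the stored nonzeros of X are visited; the rank is the number of
    columns shared by C and D.
    """
    rank = len(C[0])
    M = [[0.0] * rank for _ in range(n_rows)]
    for (i, k, l), x in X.items():
        for j in range(rank):
            M[i][j] += x * C[k][j] * D[l][j]
    return M


if __name__ == "__main__":
    S = {(0, 1): 2.0, (1, 0): 3.0}
    A = [[1.0, 2.0], [3.0, 4.0]]
    B = [[5.0, 6.0], [7.0, 8.0]]
    print(sddmm(S, A, B))  # {(0, 1): 44.0, (1, 0): 129.0}
```

A compiler such as Finch generates this kind of nonzero-driven loop nest automatically from a high-level expression. These hand-written loops only pin down the semantics being benchmarked.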

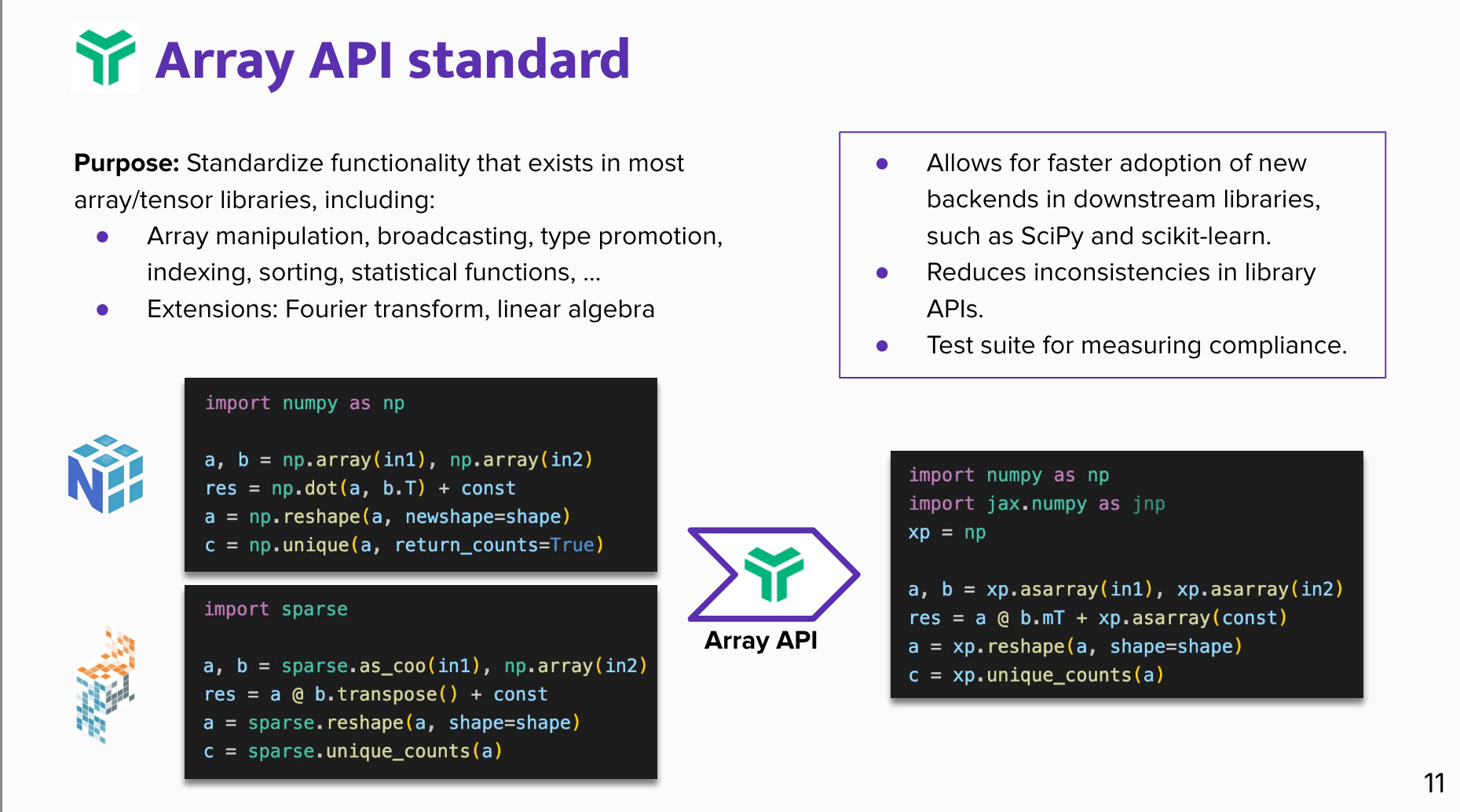

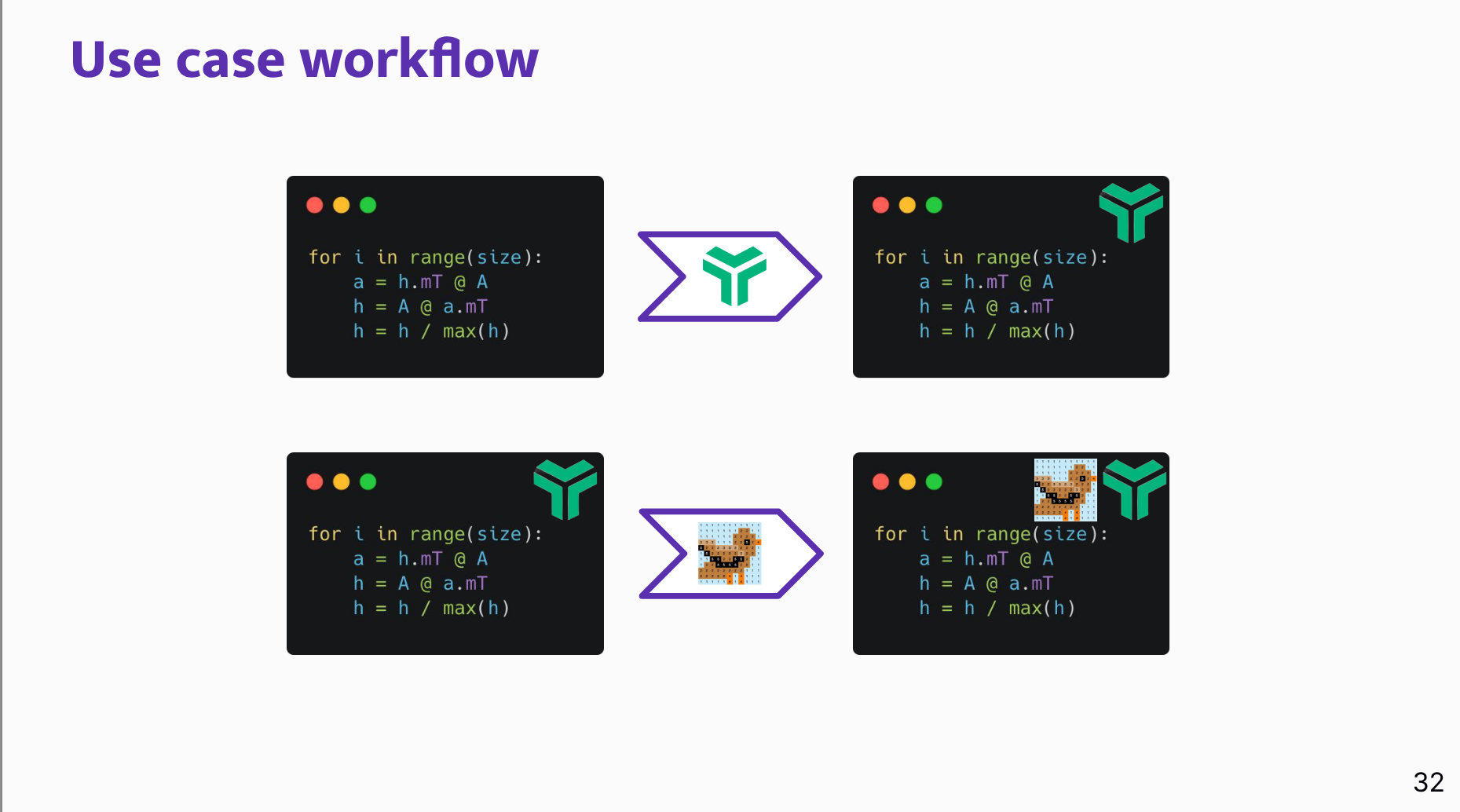

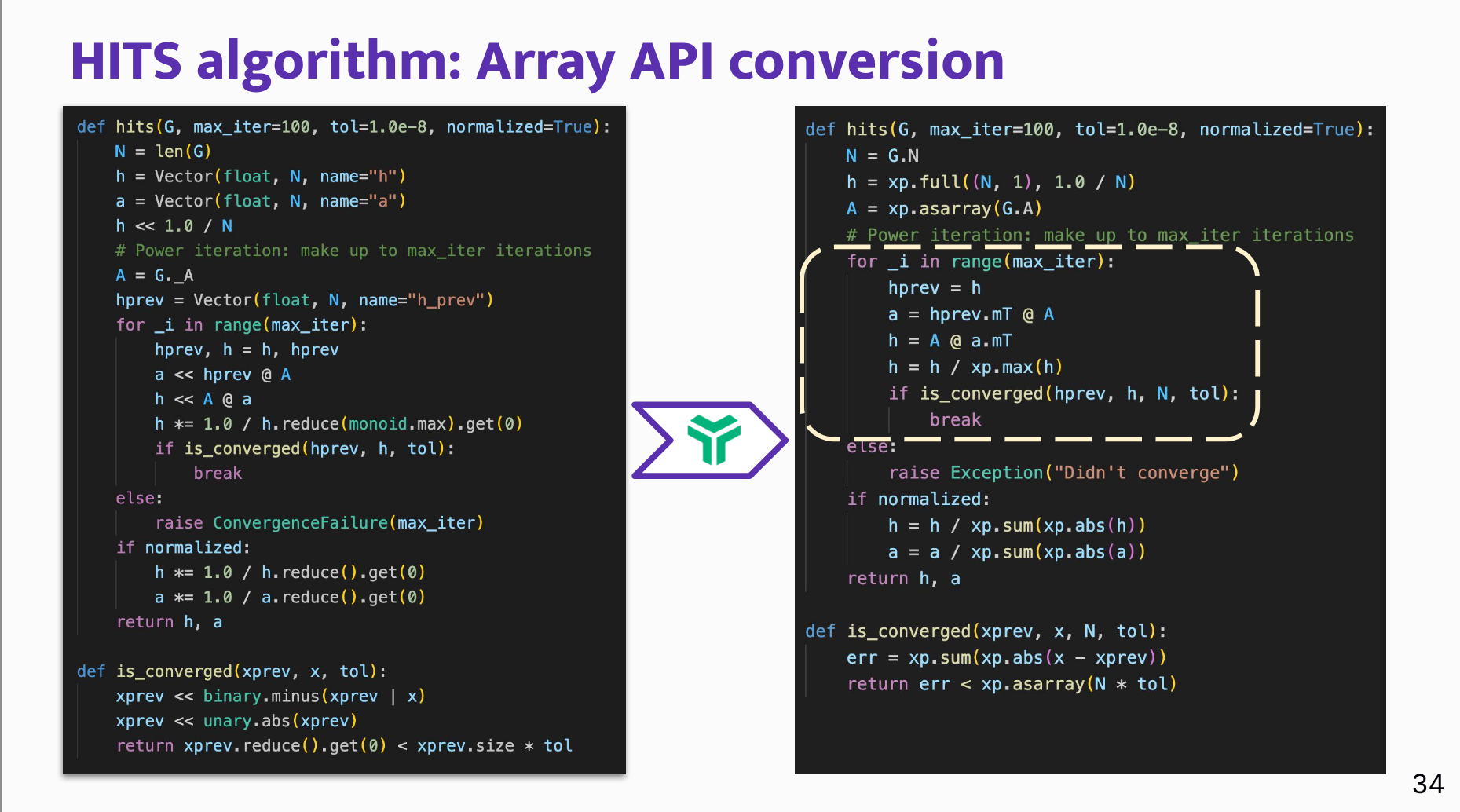

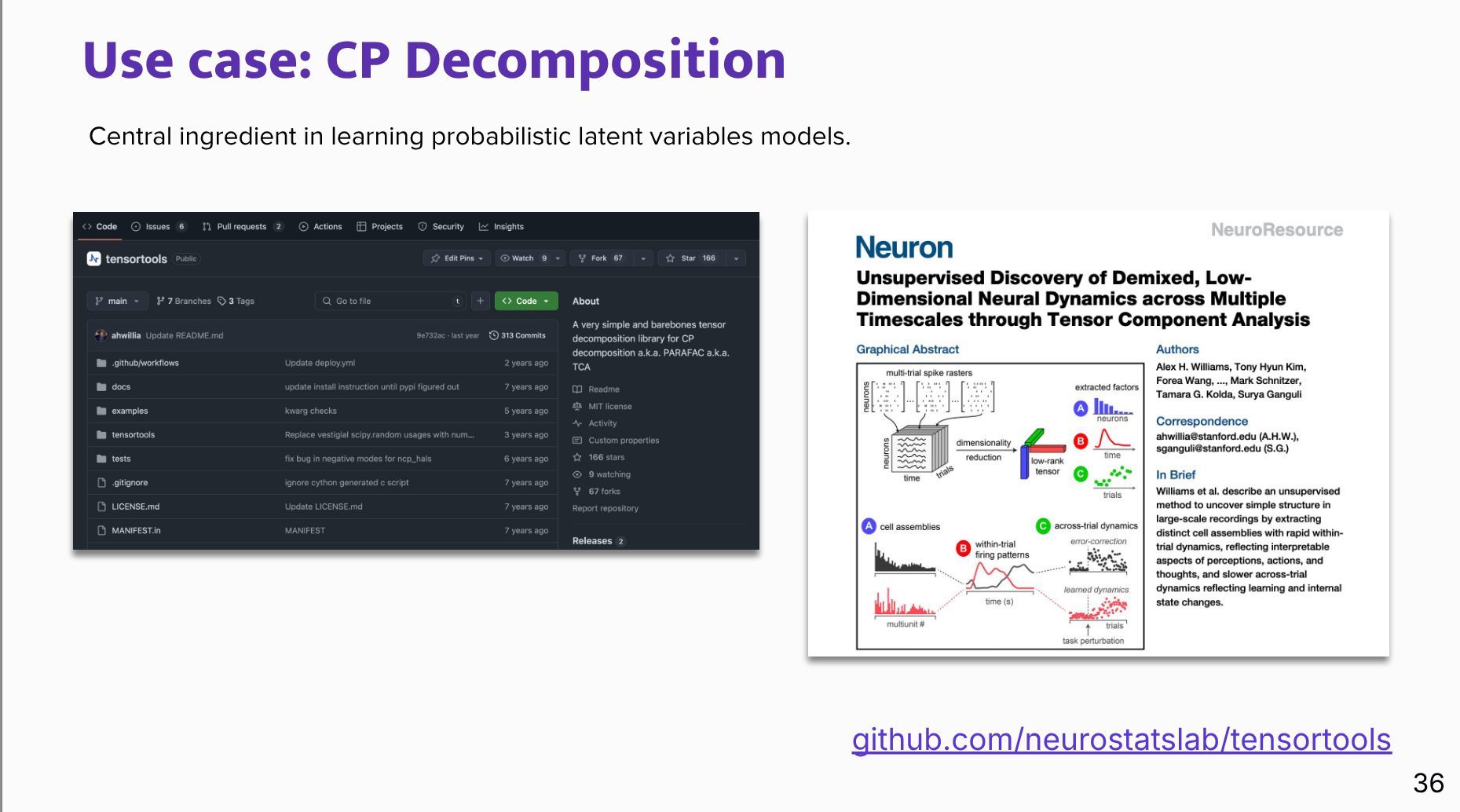

Real-world use cases will show, step by step, how Python practitioners can migrate their code to an Array API-compatible version and benefit from the tensor operator fusion and autoscheduling capabilities offered by the Finch compiler.
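The heart of an Array API migration is replacing hard-coded `np.` calls with functions looked up on the input array's own namespace through the standard's `__array_namespace__()` method. The same function can then run on any library that implements the standard. To keep the sketch runnable with only the standard library, it uses a toy `ListArray` class and a `toy_xp` namespace, both hypothetical and invented here. With real libraries you would pass their arrays directly, or use the `array_namespace` helper from the `array-api-compat` package.

```python
import math
from types import SimpleNamespace


class ListArray:
    """Toy 1-D array (hypothetical, for illustration only)."""

    def __init__(self, values):
        self.values = [float(v) for v in values]

    def __array_namespace__(self, api_version=None):
        # Array API hook: return the namespace that operates on this array type.
        return toy_xp


def _sum(x):
    return math.fsum(x.values)


def _divide(x, y):
    # In this toy, y is a Python float; real libraries broadcast 0-D arrays.
    return ListArray([v / y for v in x.values])


# Hypothetical minimal namespace exposing two Array API function names.
toy_xp = SimpleNamespace(sum=_sum, divide=_divide)


def normalize(x):
    # Before migration: `return x / np.sum(x)` hard-codes NumPy.
    # After: ask the input array for its own namespace and call only
    # functions whose names are defined by the Array API standard.
    xp = x.__array_namespace__()
    return xp.divide(x, xp.sum(x))


print(normalize(ListArray([1, 1, 2])).values)  # [0.25, 0.25, 0.5]
```

Because `normalize` never names a concrete library, swapping NumPy arrays for sparse arrays changes which backend executes the work, not the user's code. That is the property that lets a compiler-backed library plug in fusion and scheduling behind the same calls.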

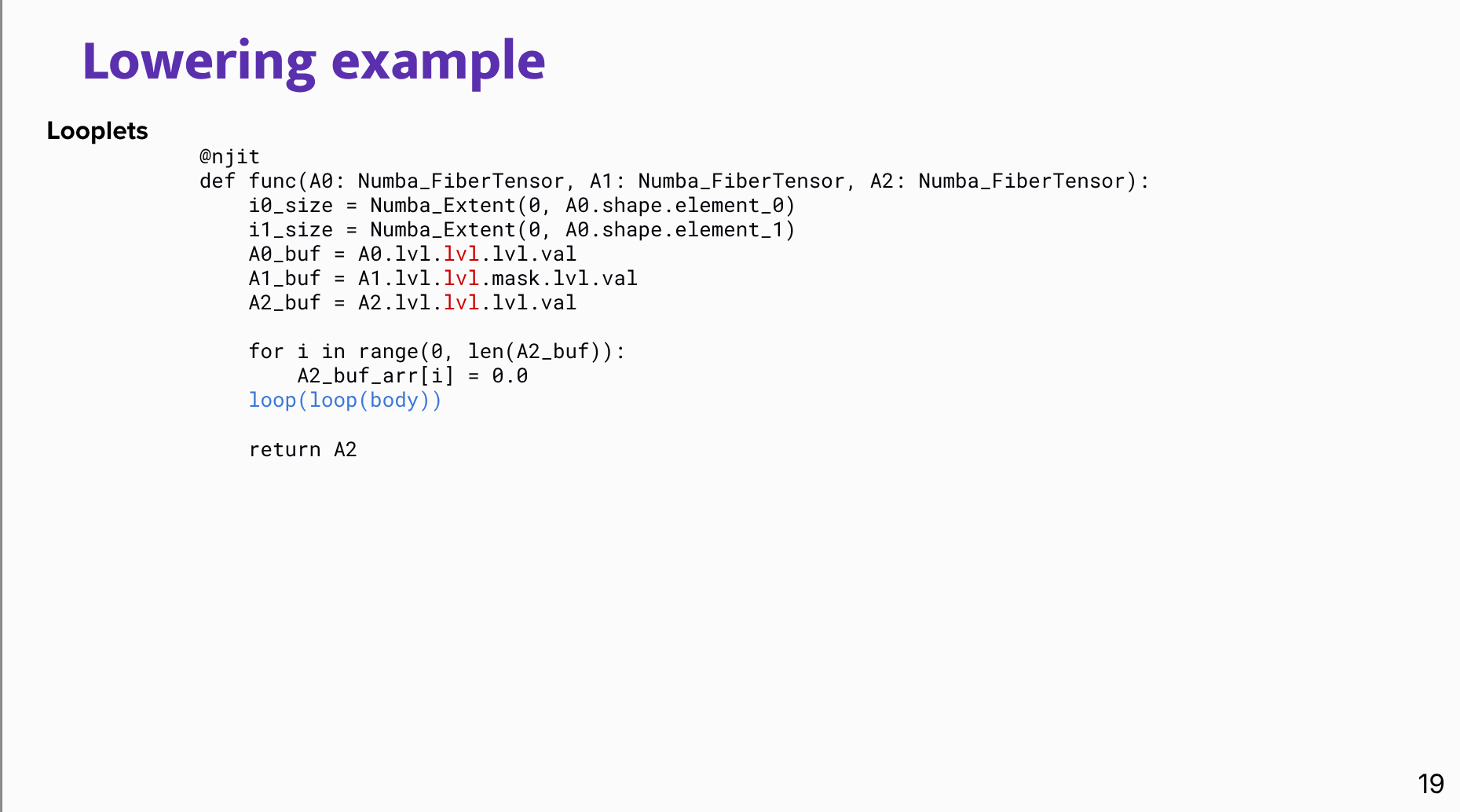

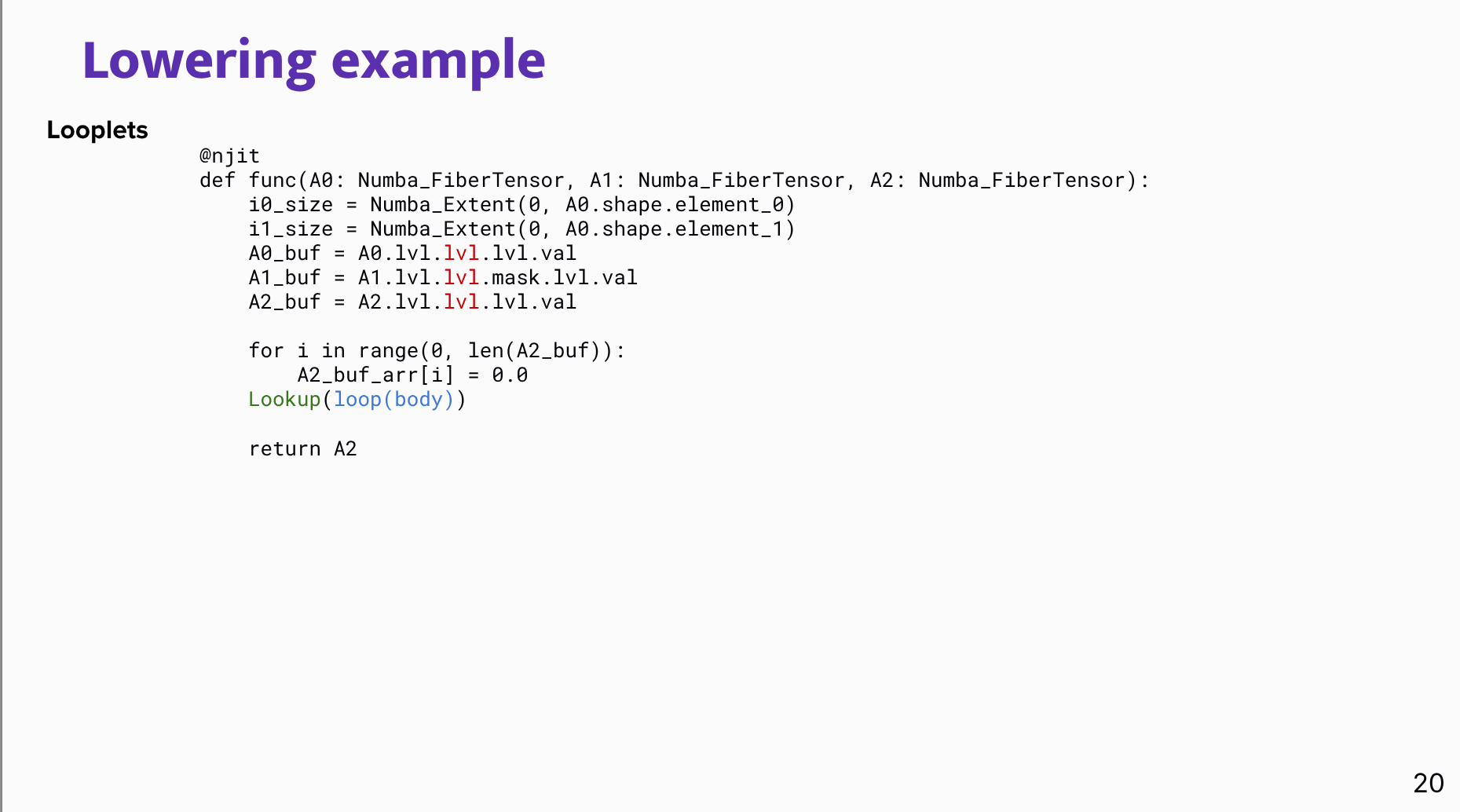

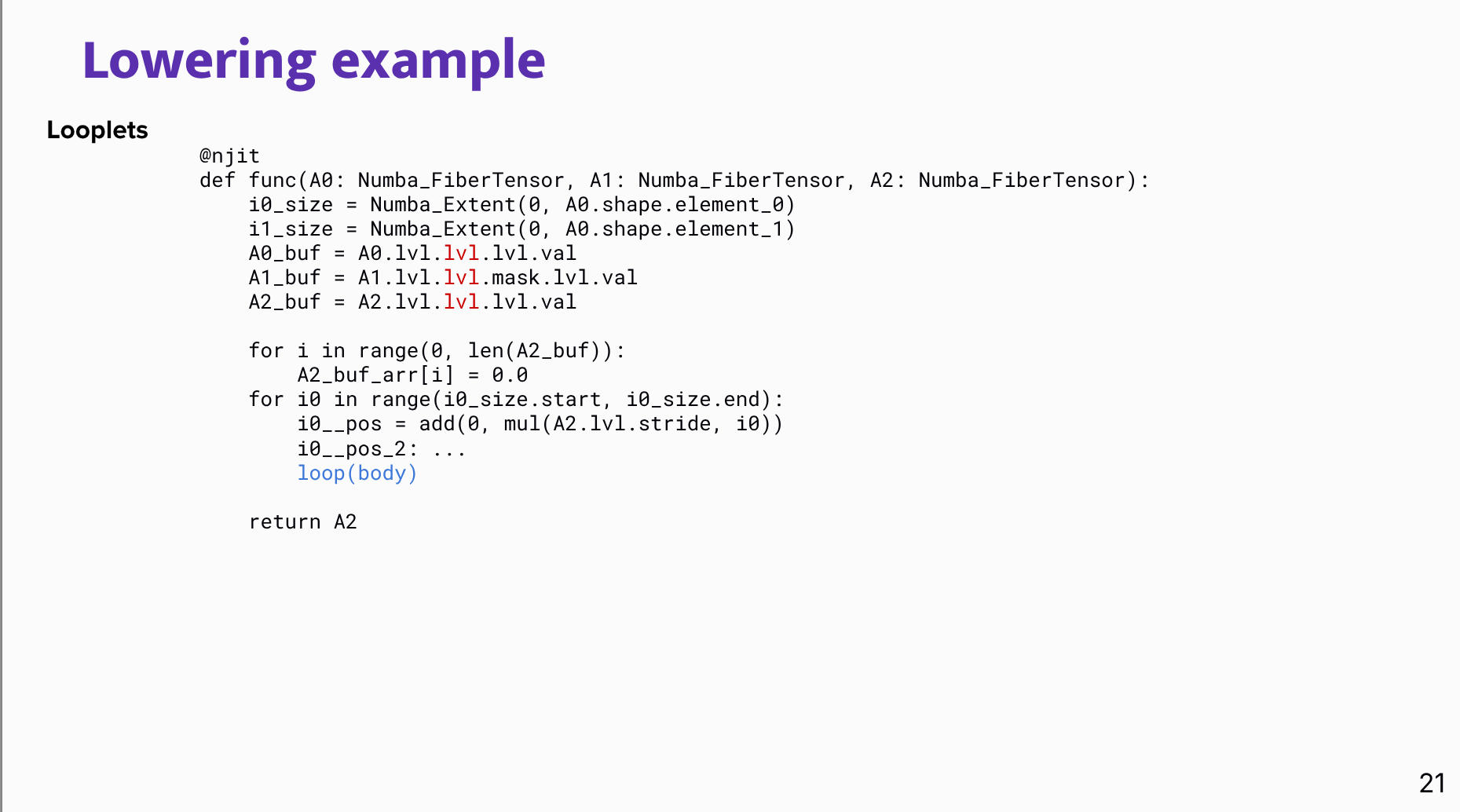

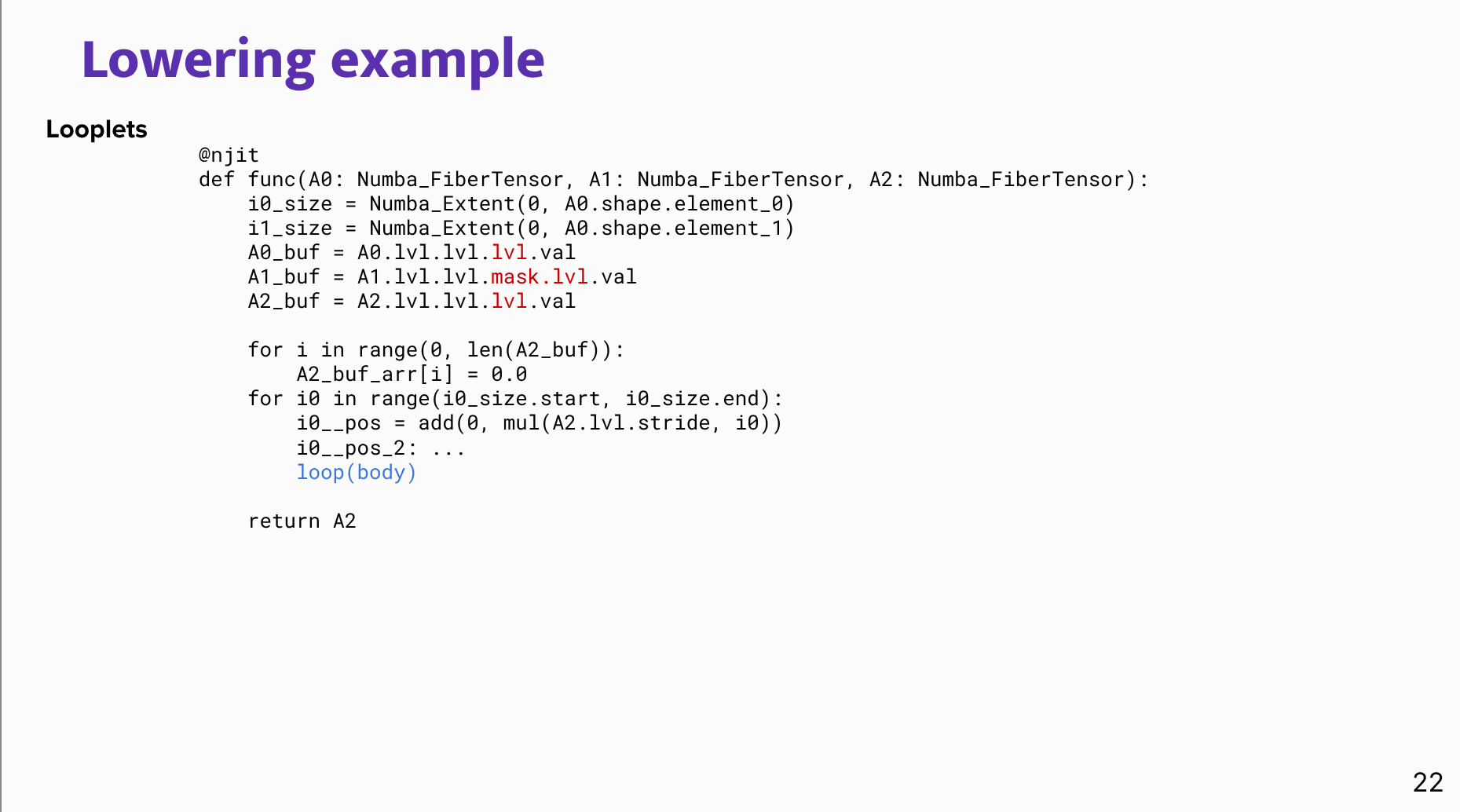

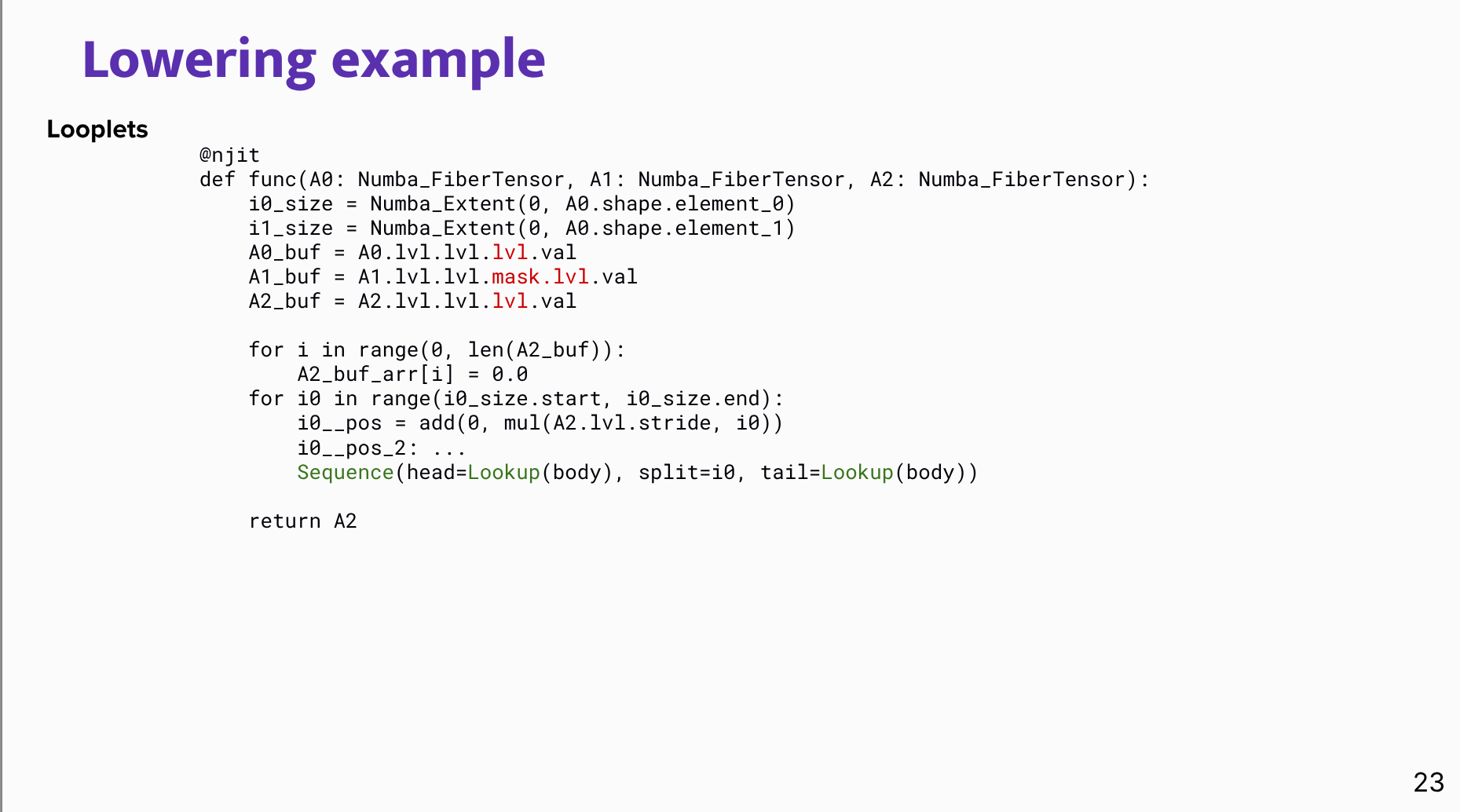

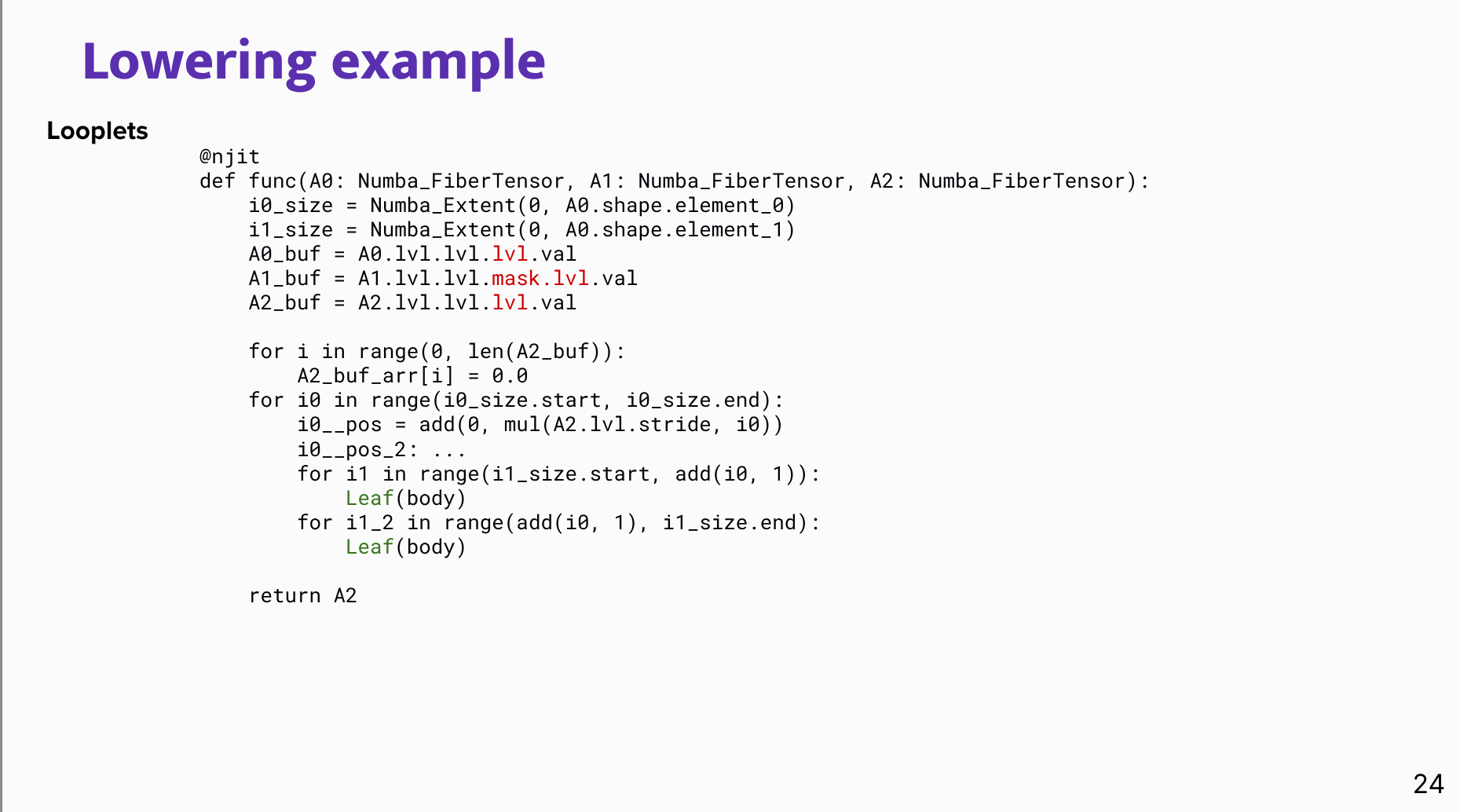

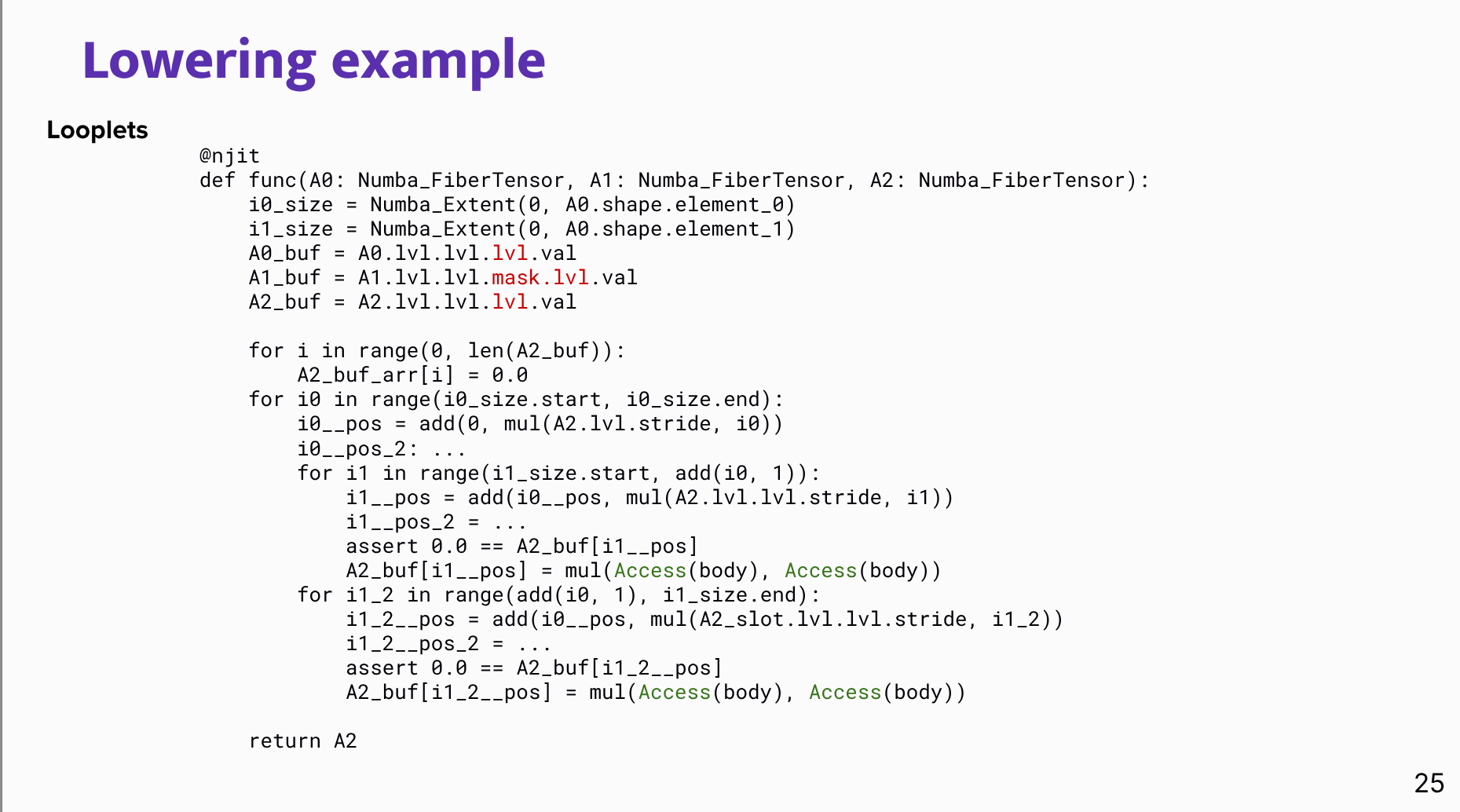

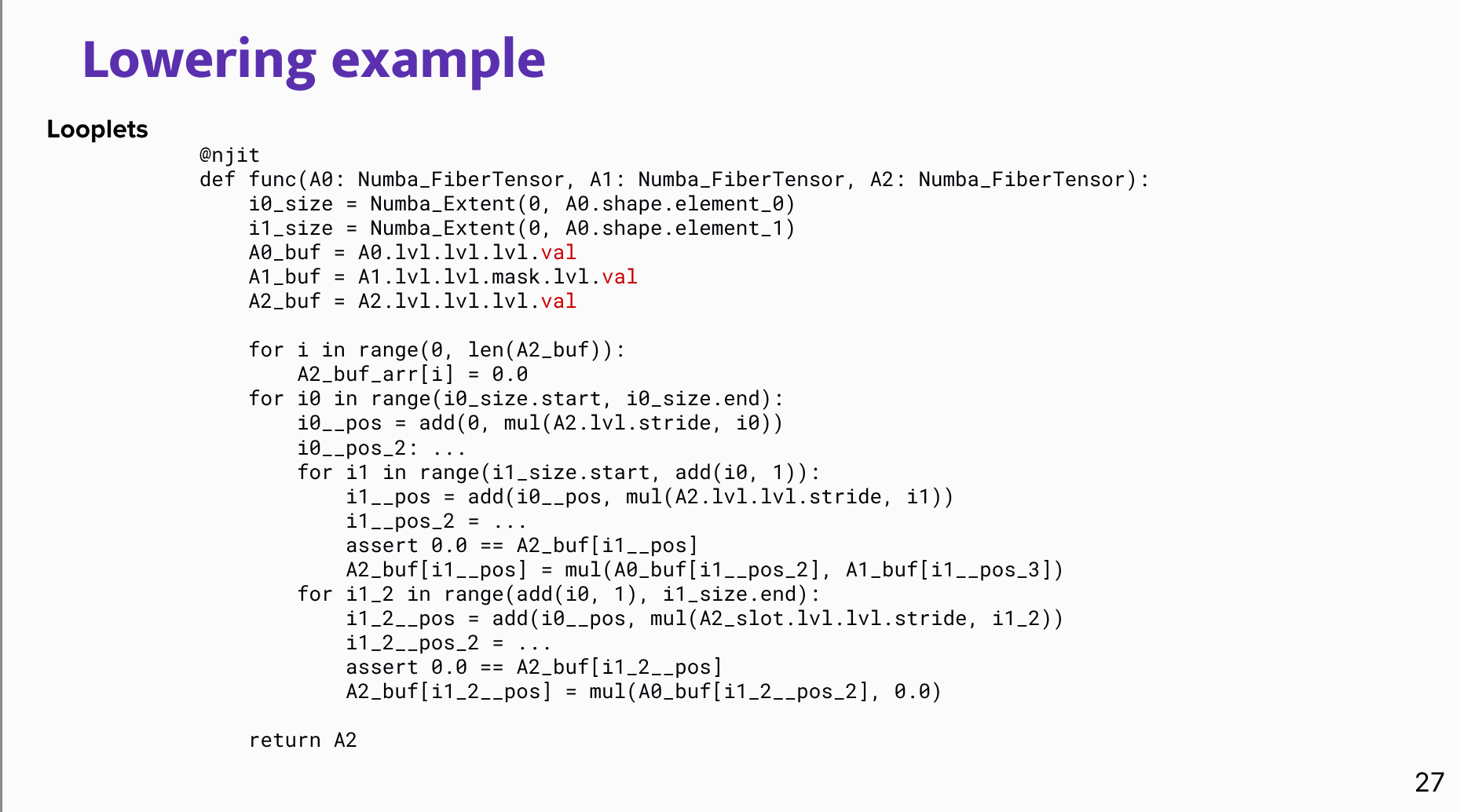

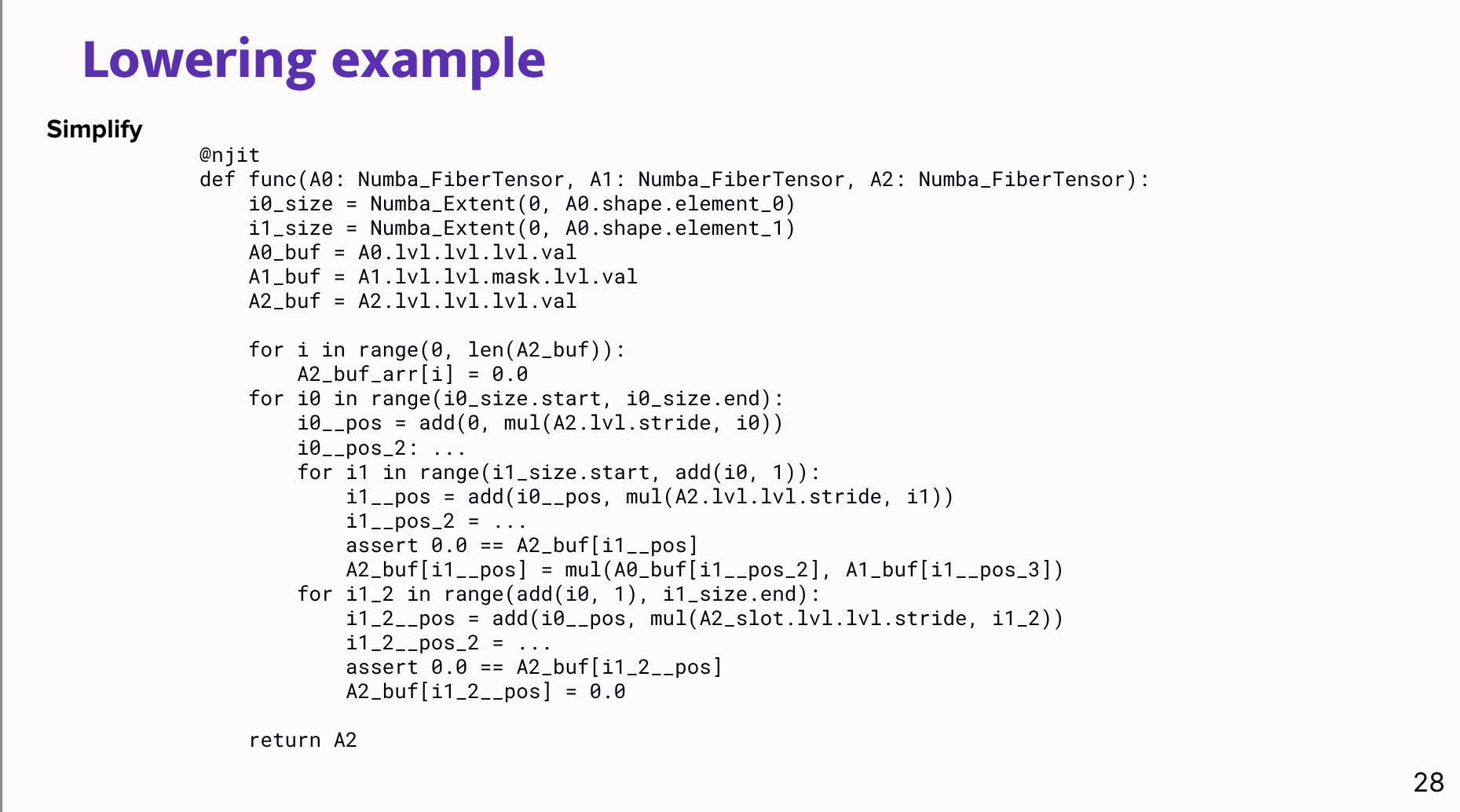

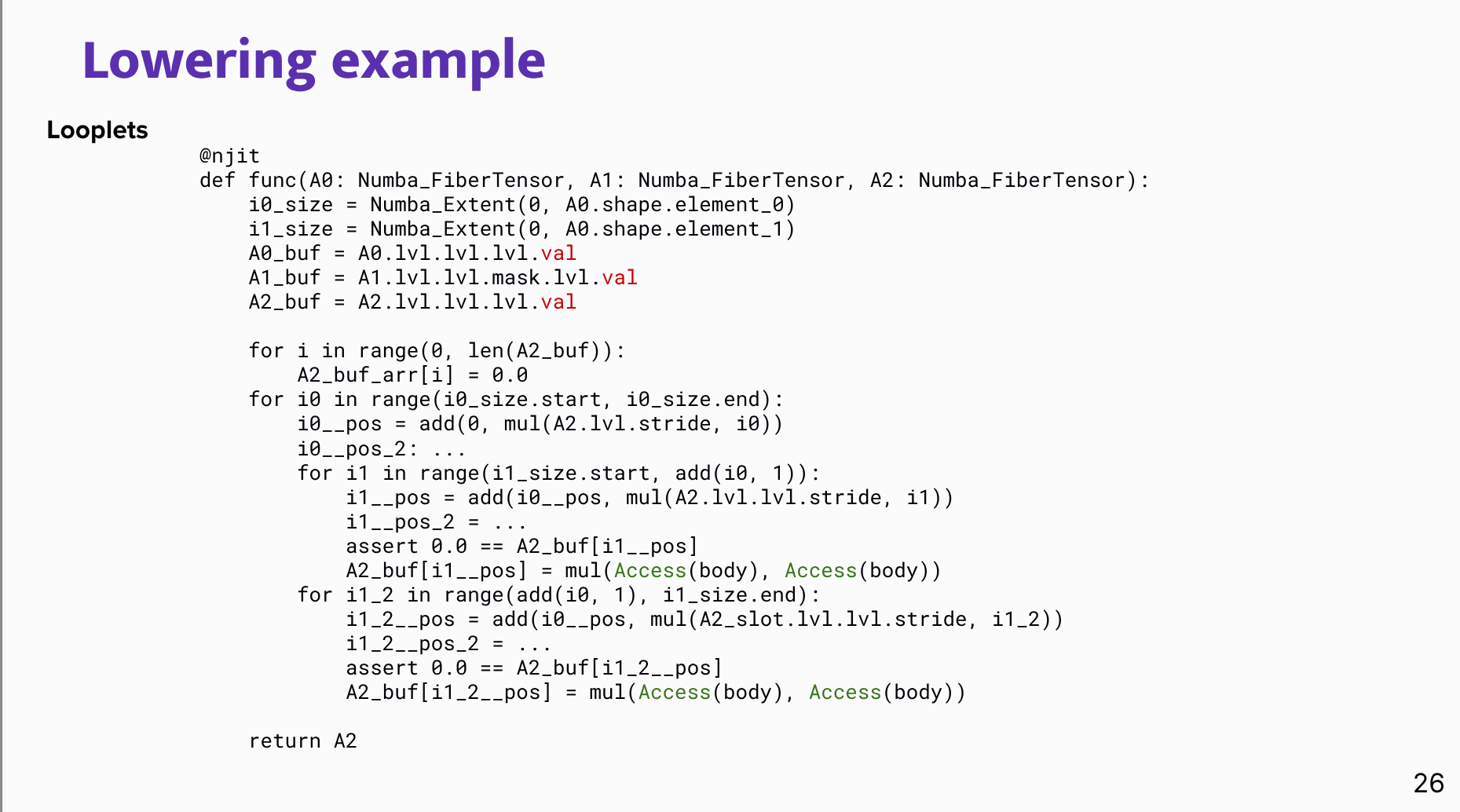

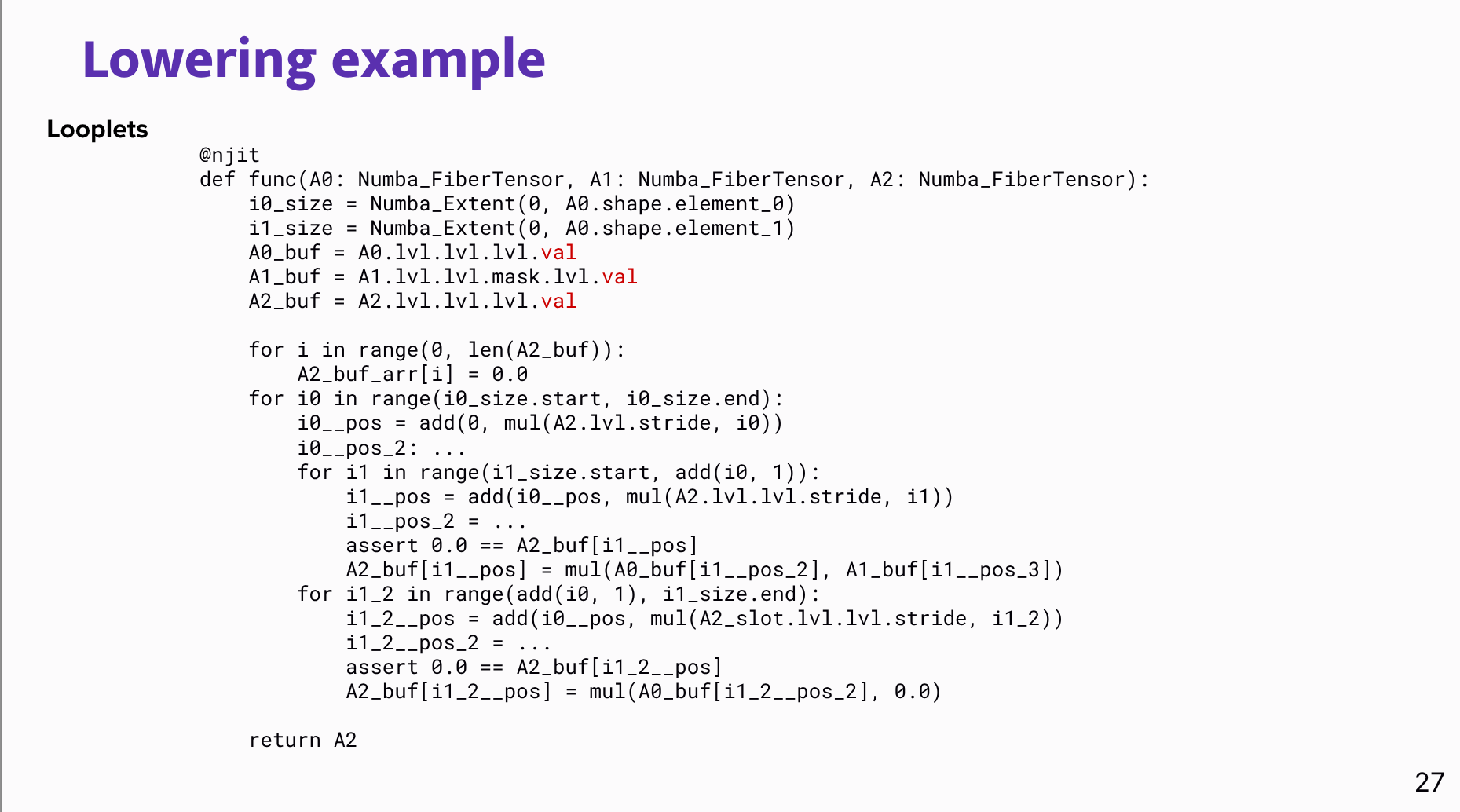

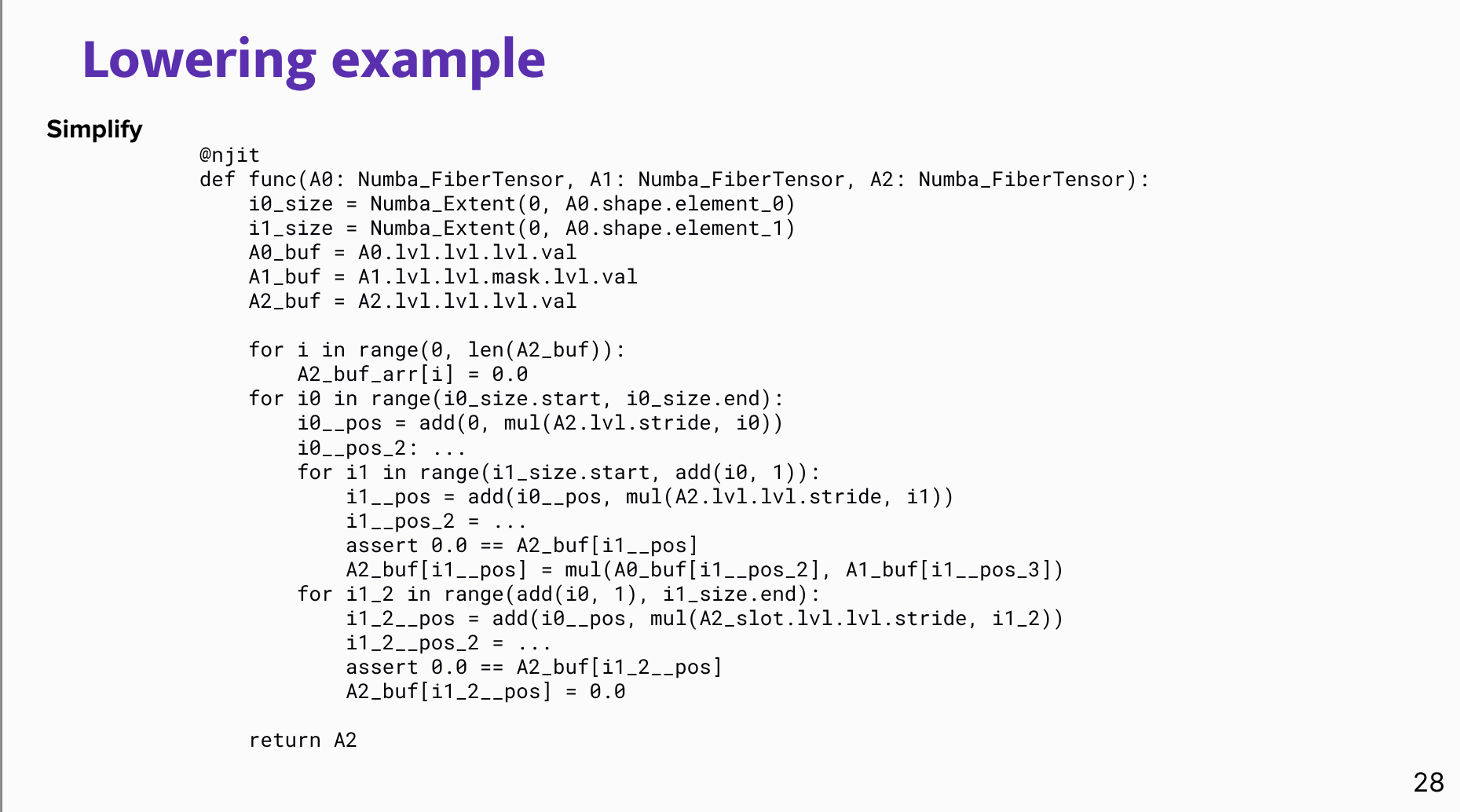

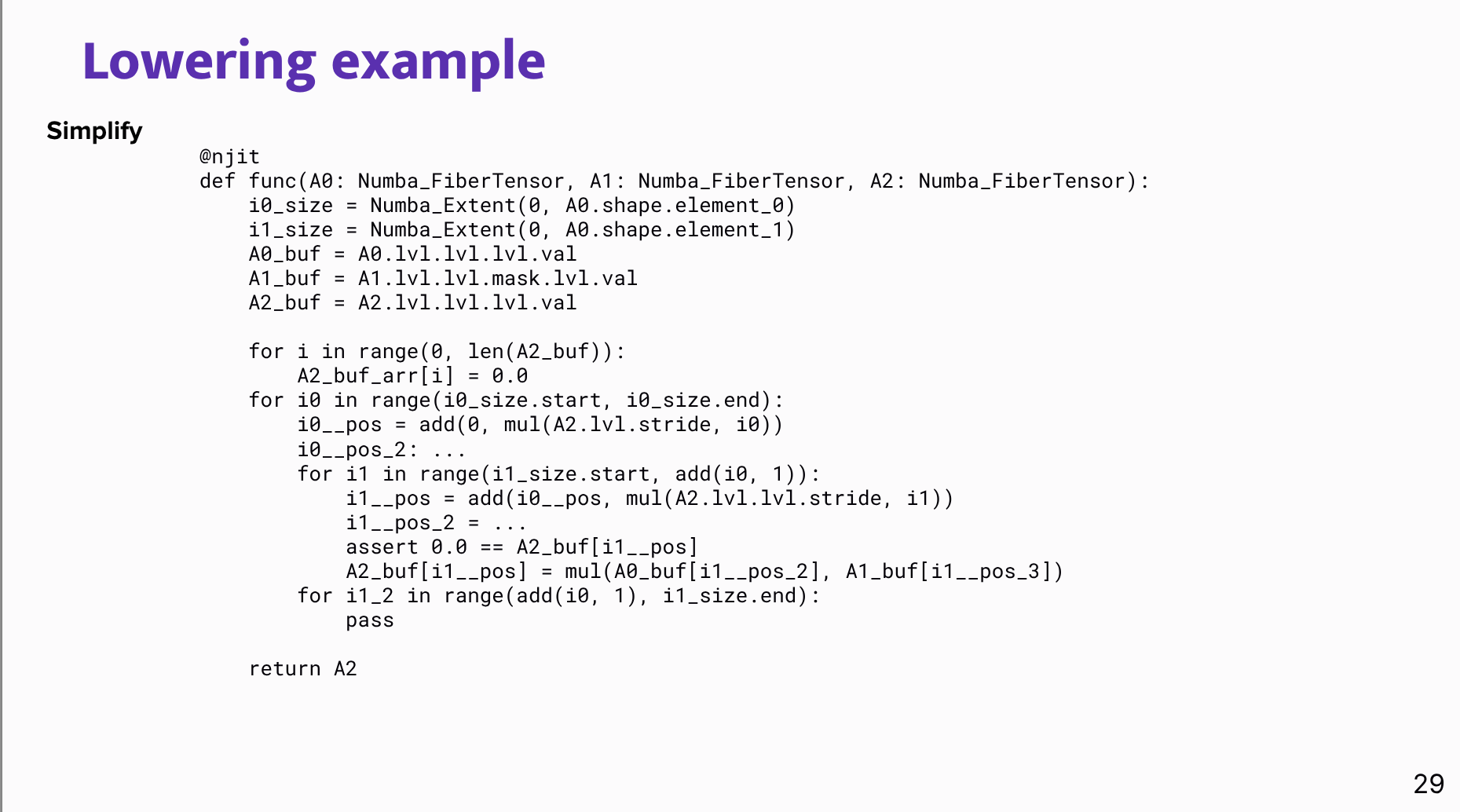

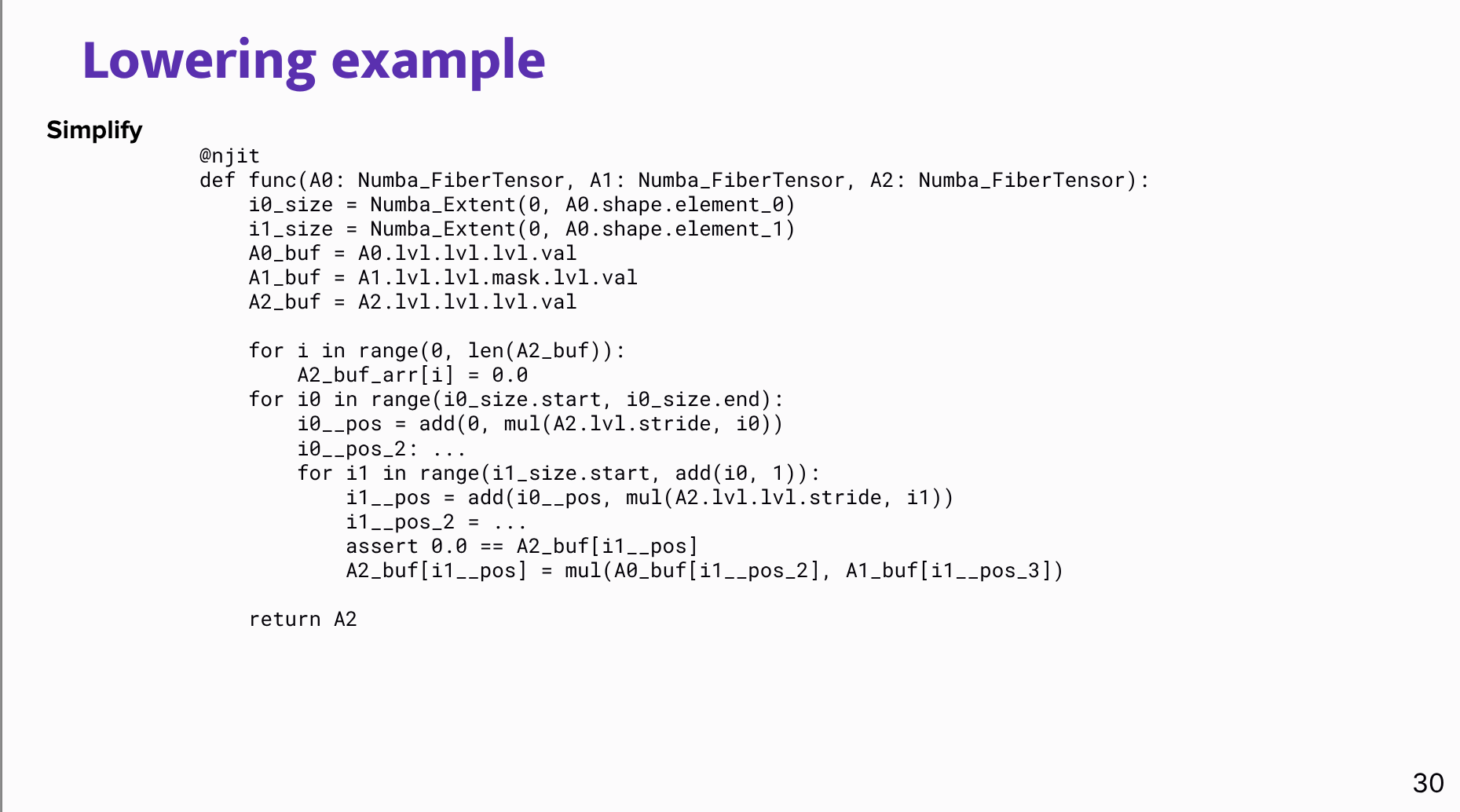

Apart from the existing Julia-based Finch backend, PyData/Sparse will offer more sparse backends in the future, providing Python-native alternatives to scipy.sparse and Numba-based solutions. One of them, currently under development, is finch-tensor-lite: a pure Python rewrite of the Finch.jl compiler, meant to make the solution lightweight by dropping the Julia runtime dependency while providing the majority of its features.

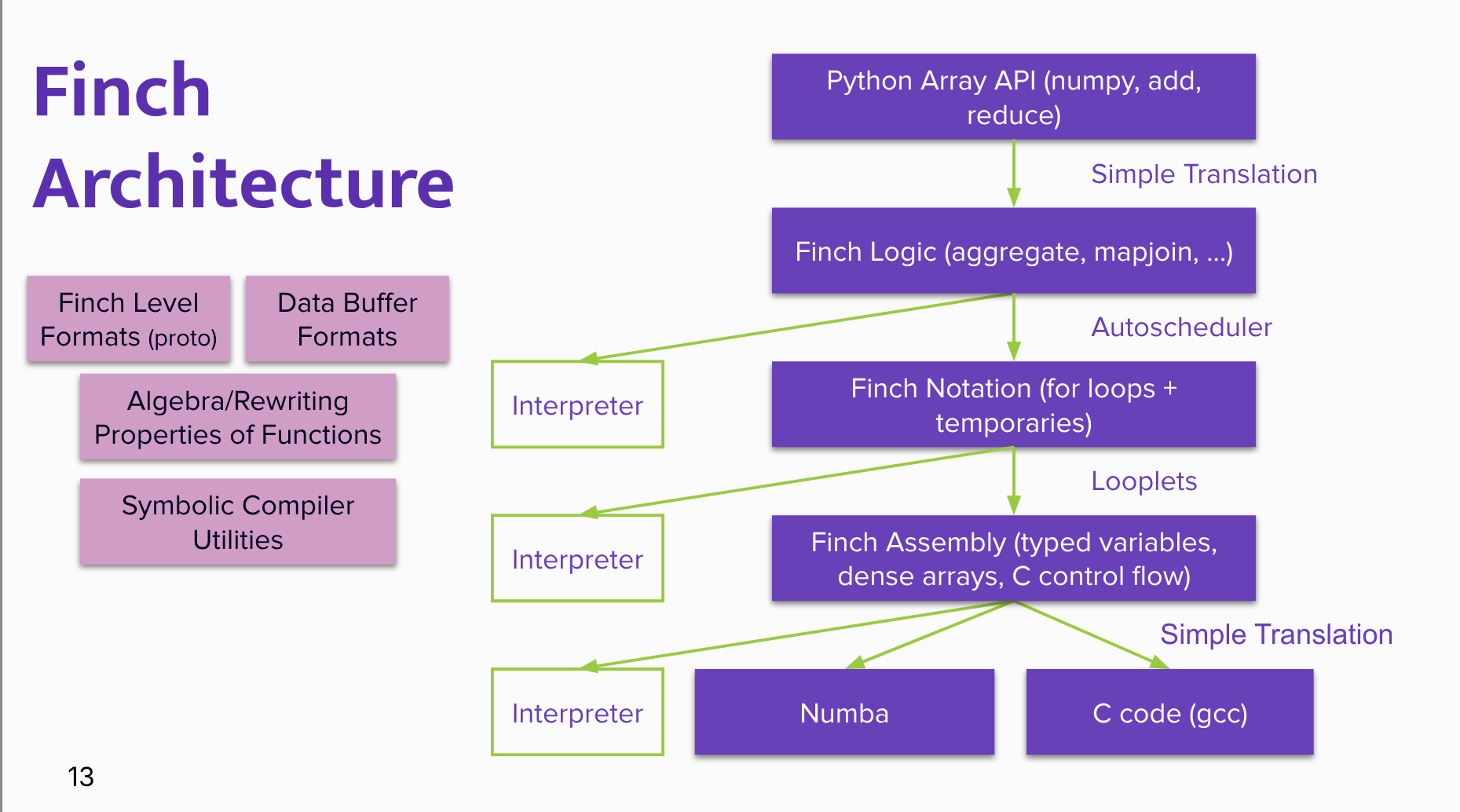

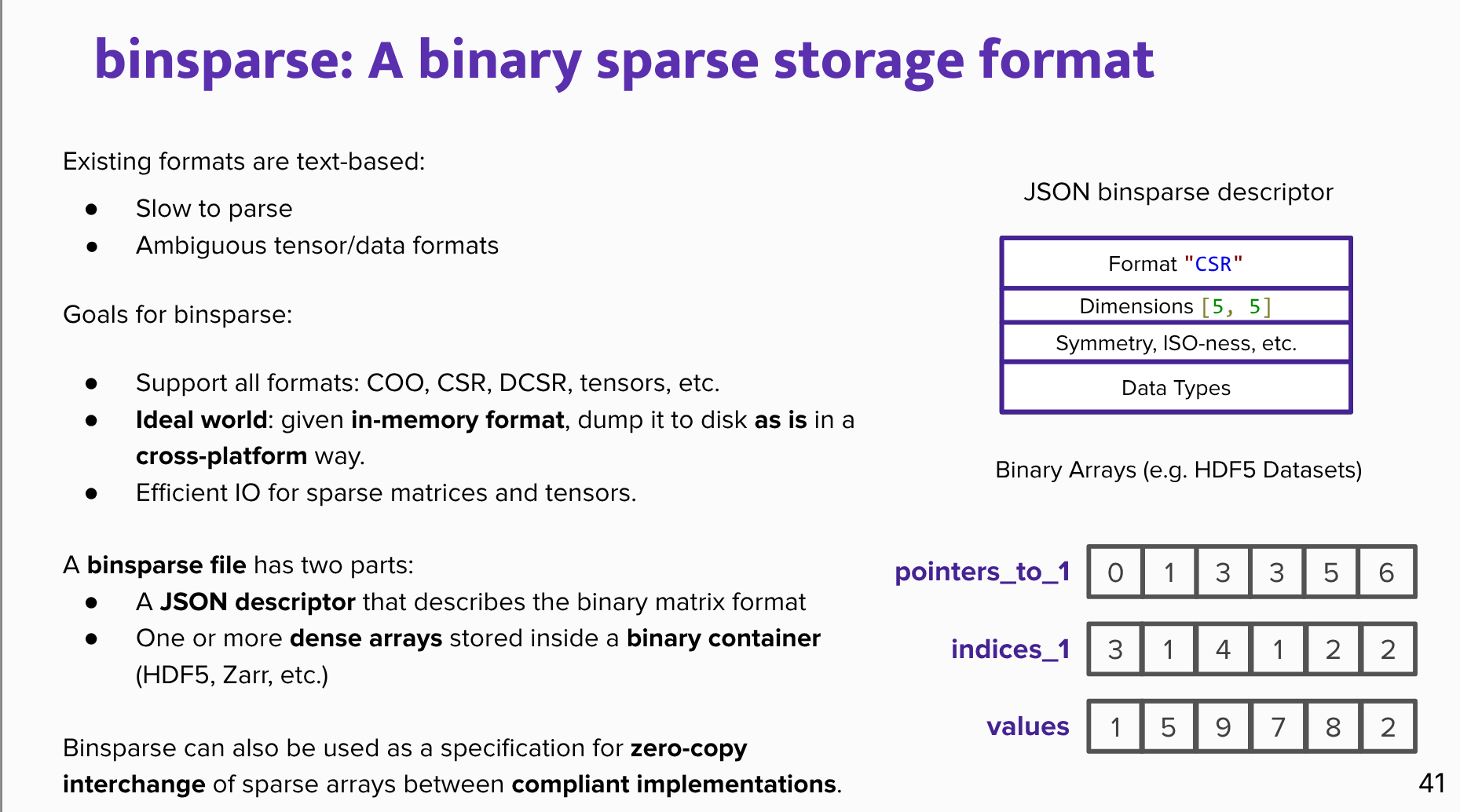

In this talk, we’re first going to survey the current landscape of sparse computing in the Python ecosystem. Then we’ll present a high-level overview of the Finch technology and the compiler’s architecture, together with other solutions vital to the project: the Array API Standard and the binsparse format.

Next, we’re going to present a selected set of benchmarks, with a focus on real-world use cases: how Finch impacts users’ experience when writing sparse programs in Python. Last but not least, we’ll showcase current development work: the pure Python rewrite of the Finch compiler.
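The binsparse format mentioned in the outline stores a sparse matrix as a few plain arrays plus a small JSON descriptor. The sketch below converts COO triplets into CSR arrays and builds such a descriptor. The array names (`pointers_to_1`, `indices_1`, `values`) and descriptor keys follow our reading of the v0.1 specification and should be checked against it. The helper names `coo_to_csr` and `binsparse_descriptor` are hypothetical, and writing the arrays to an HDF5 or Zarr container is left out.

```python
import json


def coo_to_csr(shape, rows, cols, vals):
    """Convert COO triplets (assumed free of duplicates) to CSR arrays."""
    n_rows, _ = shape
    # Sort entries by (row, col) so each row's entries are contiguous.
    order = sorted(range(len(vals)), key=lambda t: (rows[t], cols[t]))
    pointers = [0] * (n_rows + 1)
    for r in rows:
        pointers[r + 1] += 1              # count entries per row
    for r in range(n_rows):
        pointers[r + 1] += pointers[r]    # prefix sum -> row start offsets
    indices = [cols[t] for t in order]    # column index of each stored value
    values = [vals[t] for t in order]
    return pointers, indices, values


def binsparse_descriptor(shape, nnz):
    # Key names follow our reading of the binsparse v0.1 spec; verify before use.
    return {
        "binsparse": {
            "version": "0.1",
            "format": "CSR",
            "shape": list(shape),
            "number_of_stored_values": nnz,
            "data_types": {
                "pointers_to_1": "uint64",
                "indices_1": "uint64",
                "values": "float64",
            },
        }
    }


if __name__ == "__main__":
    p, i, v = coo_to_csr((3, 4), [2, 0, 0, 1], [3, 2, 0, 1], [4.0, 2.0, 1.0, 3.0])
    print(p, i, v)  # [0, 2, 3, 4] [0, 2, 1, 3] [1.0, 2.0, 3.0, 4.0]
    print(json.dumps(binsparse_descriptor((3, 4), len(v)), indent=2))
```

Keeping the payload as plain typed arrays with a self-describing header is what makes such a format portable: any language that can read the container and the JSON can reconstruct the matrix without library-specific pickles.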

Mateusz Sokół

Mateusz Sokół is a Software Engineer at Quansight, working on a multitude of open-source projects in the Scientific Python Ecosystem.

- GitHub profile: https://github.com/mtsokol

Willow Marie Ahrens

Willow Marie Ahrens is an assistant professor in the School of Computer Science at Georgia Tech. She is inspired to make programming high-performance computers more productive, efficient, and accessible. Her research focuses on using compilers to accelerate productive programming languages with state-of-the-art data structures, algorithms, and architectures, bridging the gap between program flexibility and performance. She’s the author of the Finch sparse tensor programming language. Finch supports general programs on general tensor formats, such as sparse, run-length-encoded, banded, or otherwise structured tensors. Please reach out if you are interested in doing research at Georgia Tech!

Outline

Reflections

WoW

Resources

Repos and Docs:

binsparse specification: a cross-platform binary storage format for sparse data, particularly sparse matrices.

Papers:

Citation

@online{bochman2025,
  author = {Bochman, Oren},
  title = {PyData/Sparse \& {Finch} - {Extending} Sparse Computing in the {Python} Ecosystem},
  date = {2025-12-12},
  url = {https://orenbochman.github.io/posts/2025/2025-12-11-pydata-sparse-and-finch/},
  langid = {en}
}