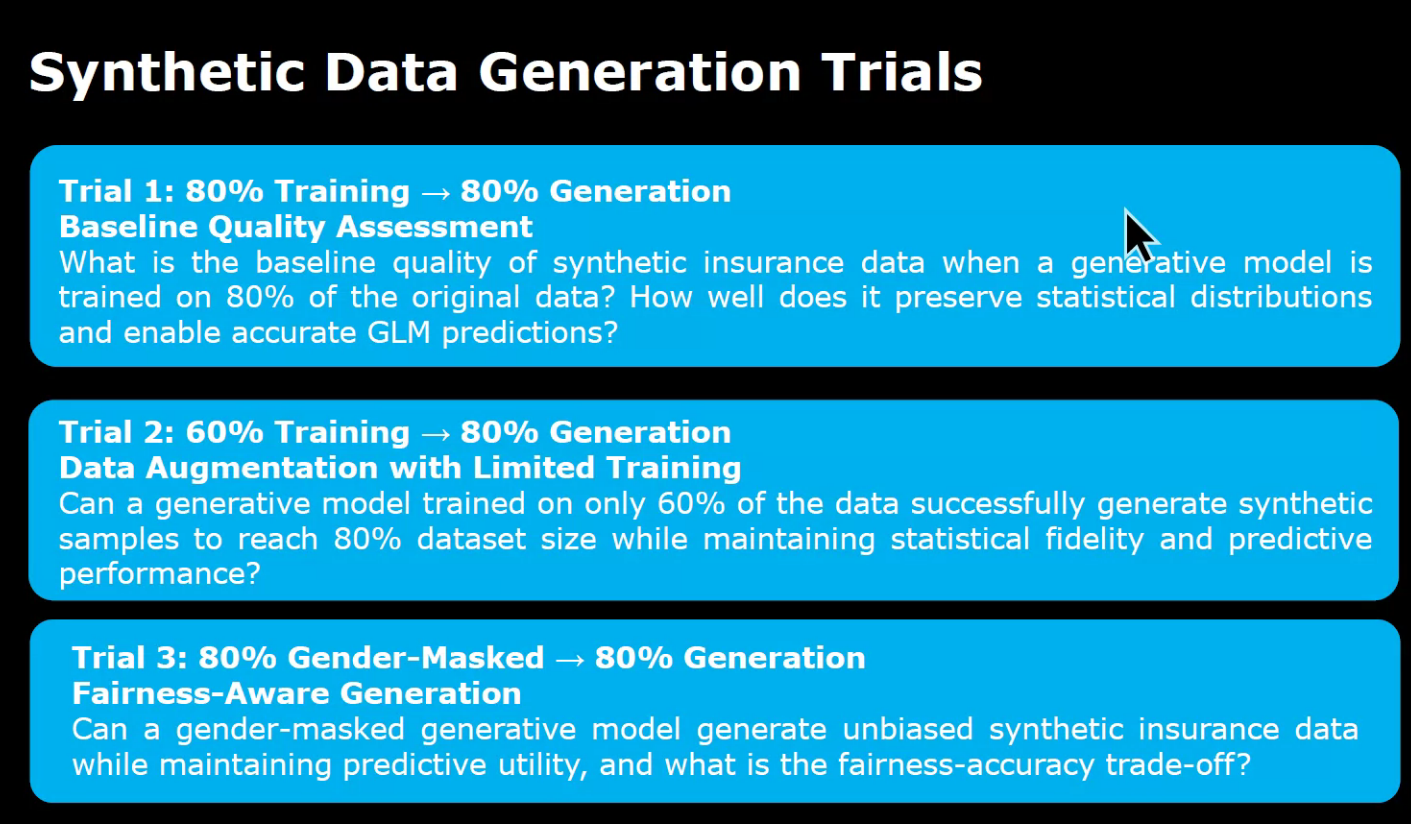

This study focuses on a synthetic non-life insurance premium dataset generated with several generative models.

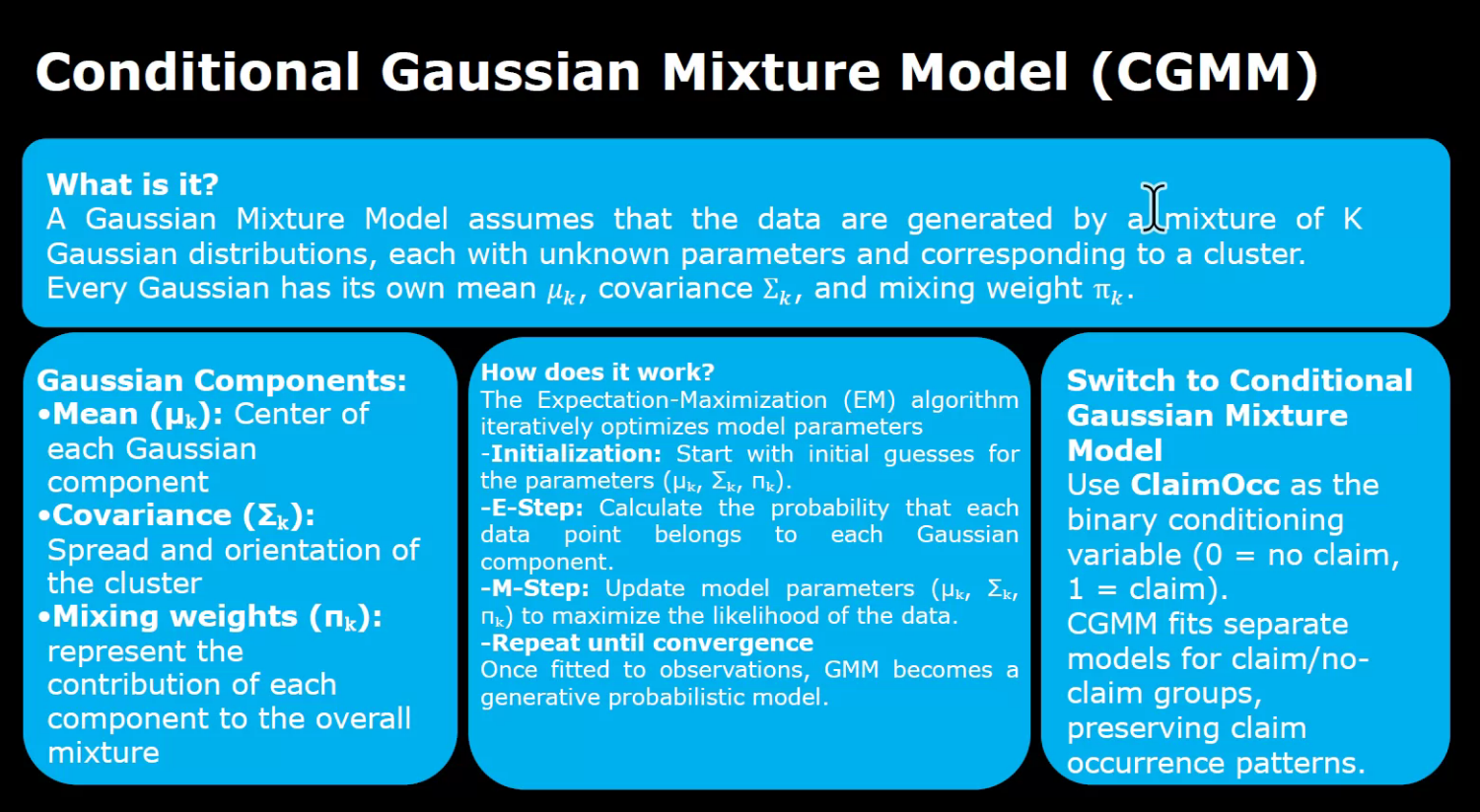

As a benchmark, a Conditional Gaussian Mixture Model has been employed.
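The talk's own benchmark implementation is not shown in these notes, so the following is only a minimal sketch of one common way to sample from a conditional Gaussian mixture: start from a joint mixture over a feature `x` and a conditioning variable `c`, then apply Gaussian conditioning inside each component. The function name `sample_conditional_gmm` and all parameter values are hypothetical. In practice the weights, means, and covariances would come from fitting the joint mixture, for example with EM.

```python
import numpy as np

def sample_conditional_gmm(weights, means, covs, c, n, rng):
    """Draw n samples of x given c from a 2-D joint GMM over (x, c)."""
    weights = np.asarray(weights, dtype=float)
    means = np.asarray(means, dtype=float)   # shape (K, 2): columns are (x, c)
    covs = np.asarray(covs, dtype=float)     # shape (K, 2, 2)

    mu_x, mu_c = means[:, 0], means[:, 1]
    s_xx, s_xc, s_cc = covs[:, 0, 0], covs[:, 0, 1], covs[:, 1, 1]

    # Responsibility of each component for the observed condition c.
    dens_c = np.exp(-0.5 * (c - mu_c) ** 2 / s_cc) / np.sqrt(2 * np.pi * s_cc)
    resp = weights * dens_c
    resp /= resp.sum()

    # Gaussian conditioning within each component.
    cond_mean = mu_x + s_xc / s_cc * (c - mu_c)
    cond_var = s_xx - s_xc ** 2 / s_cc

    # Pick a component per sample, then draw from its conditional Gaussian.
    k = rng.choice(len(weights), size=n, p=resp)
    return rng.normal(cond_mean[k], np.sqrt(cond_var[k]))

# Hypothetical two-component mixture; values are illustrative only.
rng = np.random.default_rng(0)
samples = sample_conditional_gmm(
    weights=[0.5, 0.5],
    means=[[0.0, 0.0], [10.0, 5.0]],
    covs=[[[1.0, 0.5], [0.5, 1.0]]] * 2,
    c=5.0, n=2000, rng=rng,
)
```

With `c` close to the second component's mean, almost all samples are drawn from that component's conditional distribution.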

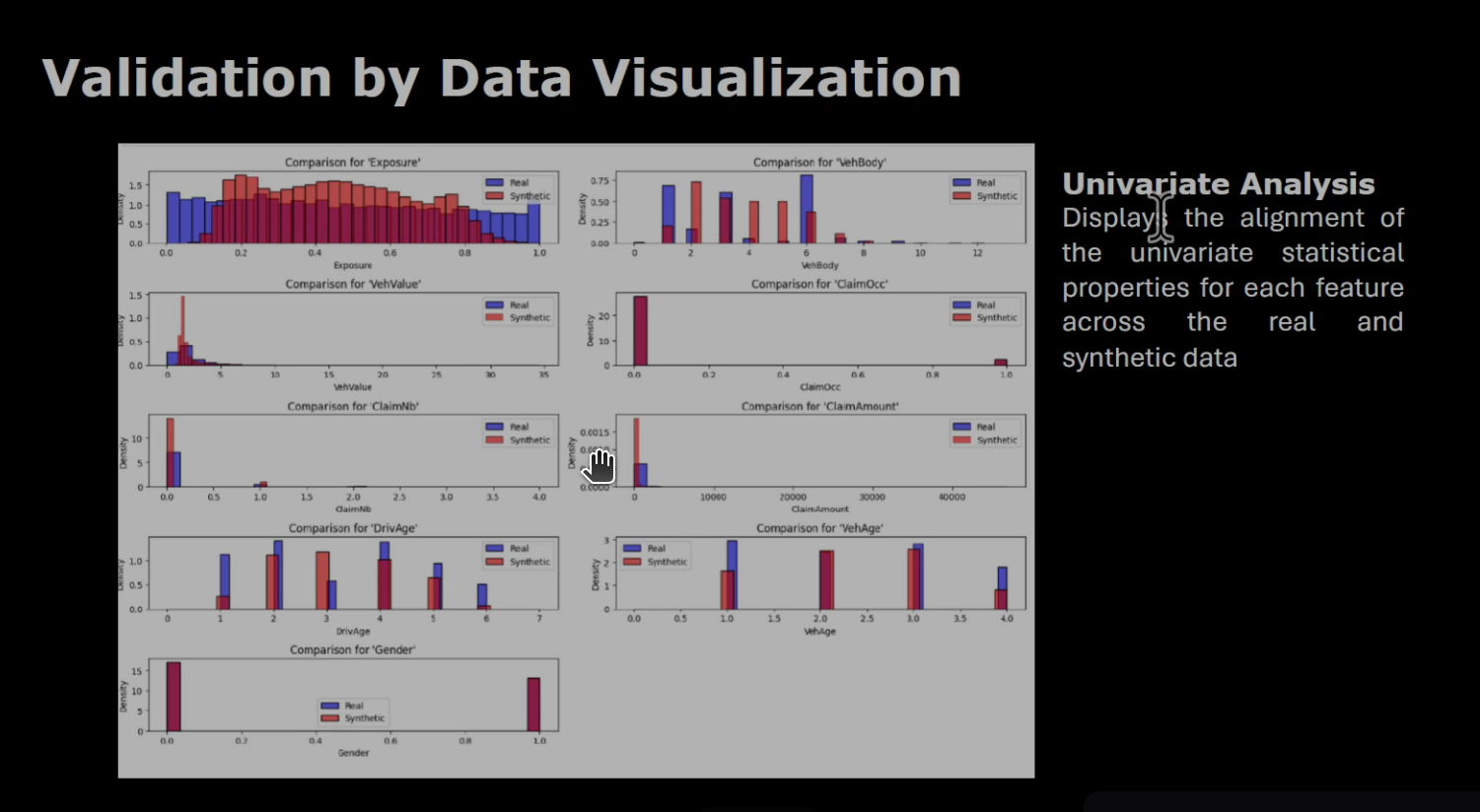

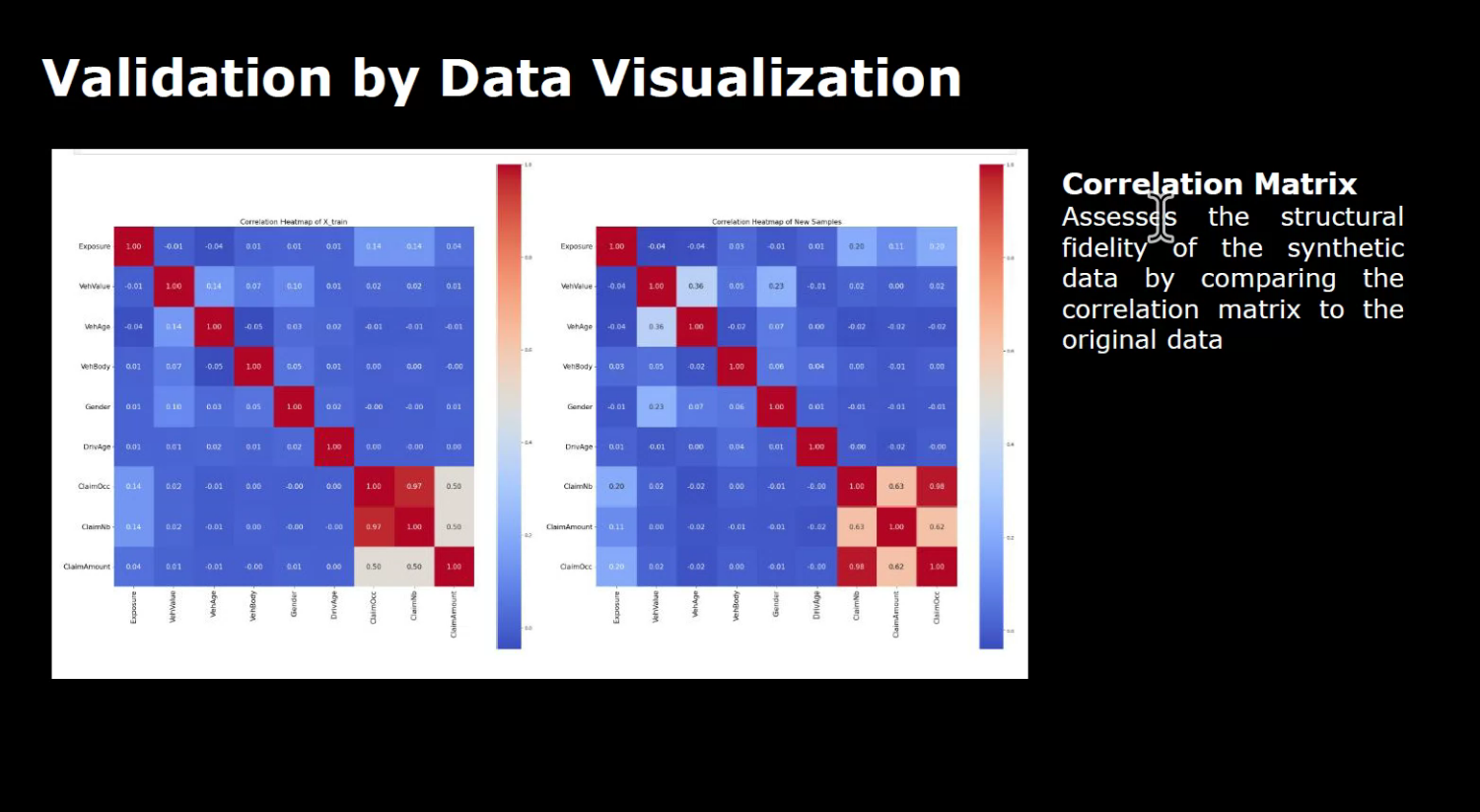

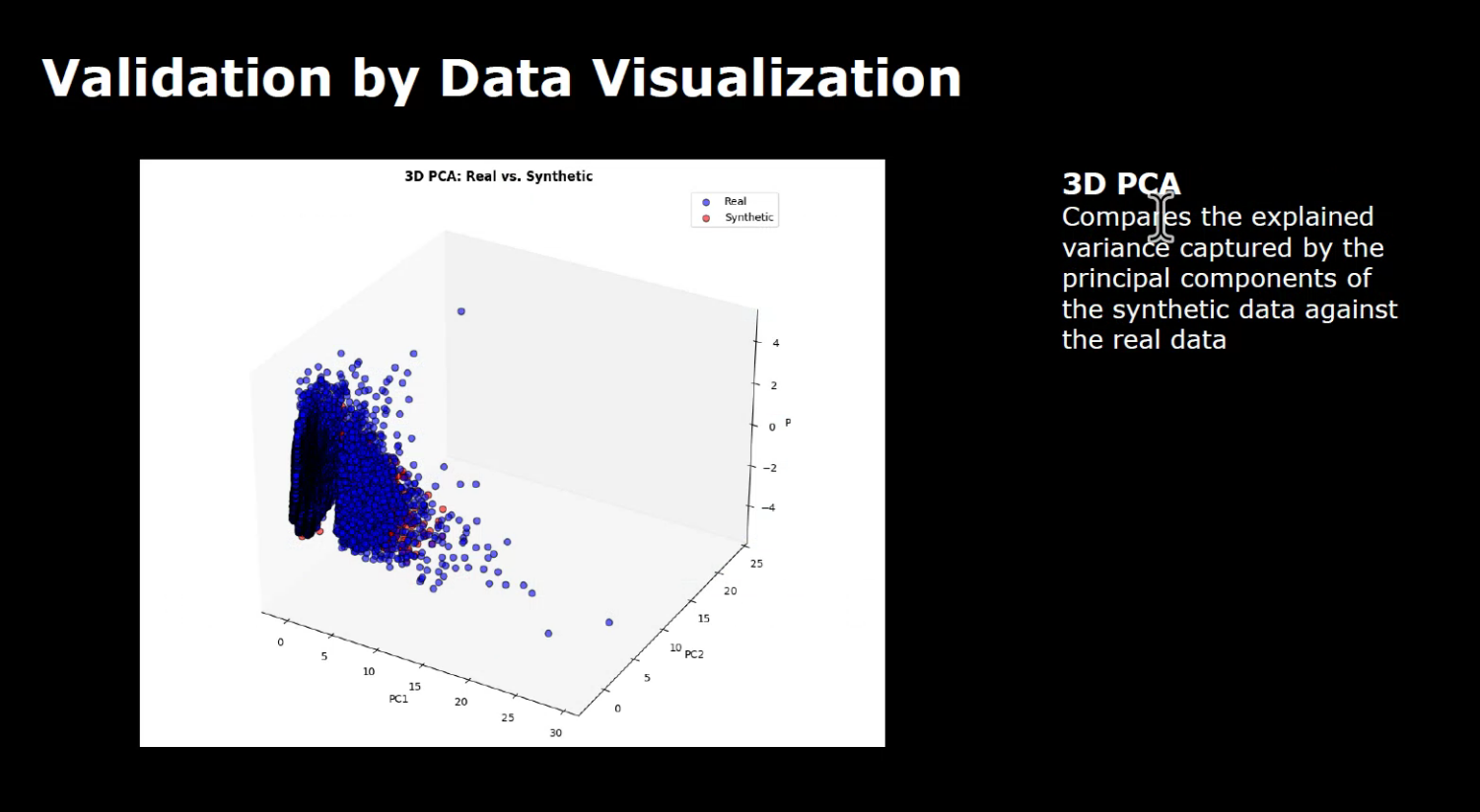

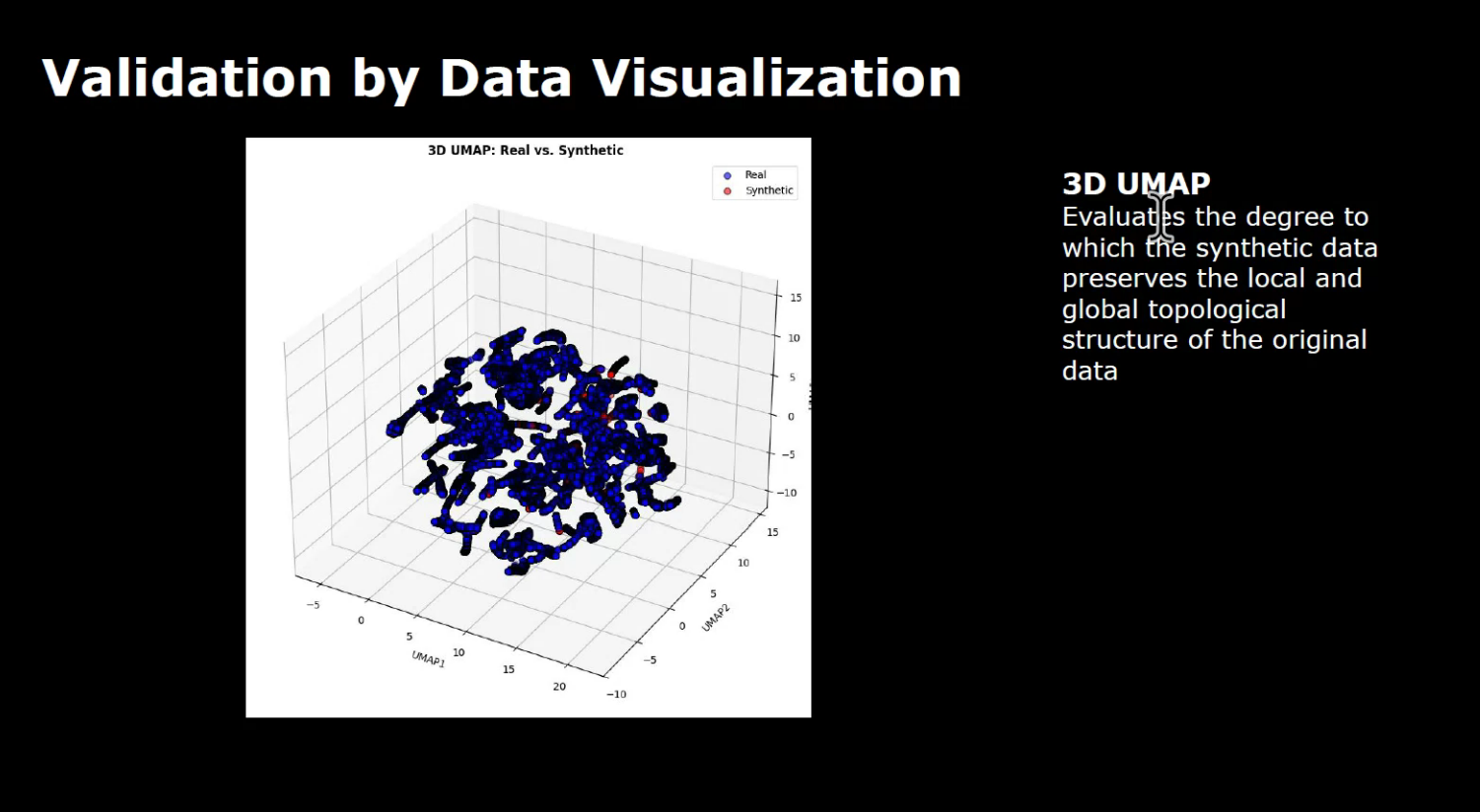

The validation of the generated data involved several steps: data visualization, univariate comparisons, and PCA and UMAP projections of the training data against the generated samples.
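As a rough illustration of the PCA comparison, the sketch below fits the principal axes on the real data only and projects both real and synthetic rows onto them, so that the two point clouds can be overlaid in one scatter plot. The helper `pca_project` and the random stand-in data are assumptions, not the speaker's code.

```python
import numpy as np

def pca_project(real, synthetic, n_components=2):
    """Project real and synthetic rows onto the PCA axes of the real data."""
    mean = real.mean(axis=0)
    std = real.std(axis=0)
    std[std == 0] = 1.0                      # guard against constant columns
    real_z = (real - mean) / std
    synth_z = (synthetic - mean) / std       # same scaling as the real data

    # Rows of vt are the principal axes, sorted by explained variance.
    _, _, vt = np.linalg.svd(real_z, full_matrices=False)
    components = vt[:n_components]
    return real_z @ components.T, synth_z @ components.T

# Random stand-in data; a real check would use the actual feature matrices.
rng = np.random.default_rng(0)
real = rng.normal(size=(500, 5))
synthetic = rng.normal(size=(500, 5))
real_pc, synth_pc = pca_project(real, synthetic)
```

Fitting the axes on the real data alone keeps the reference frame fixed, so any drift of the synthetic cloud shows up as a visible offset rather than being absorbed into the projection.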

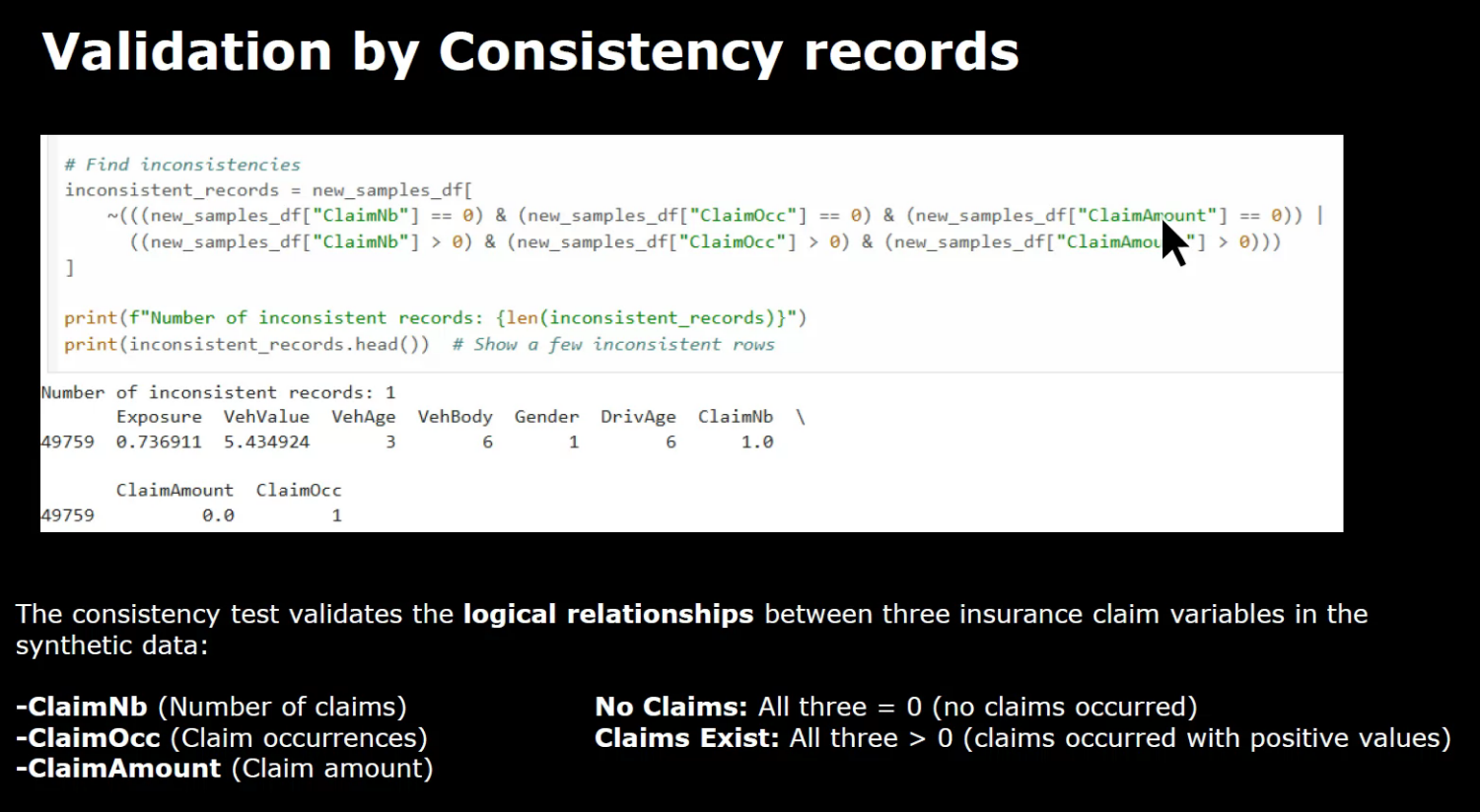

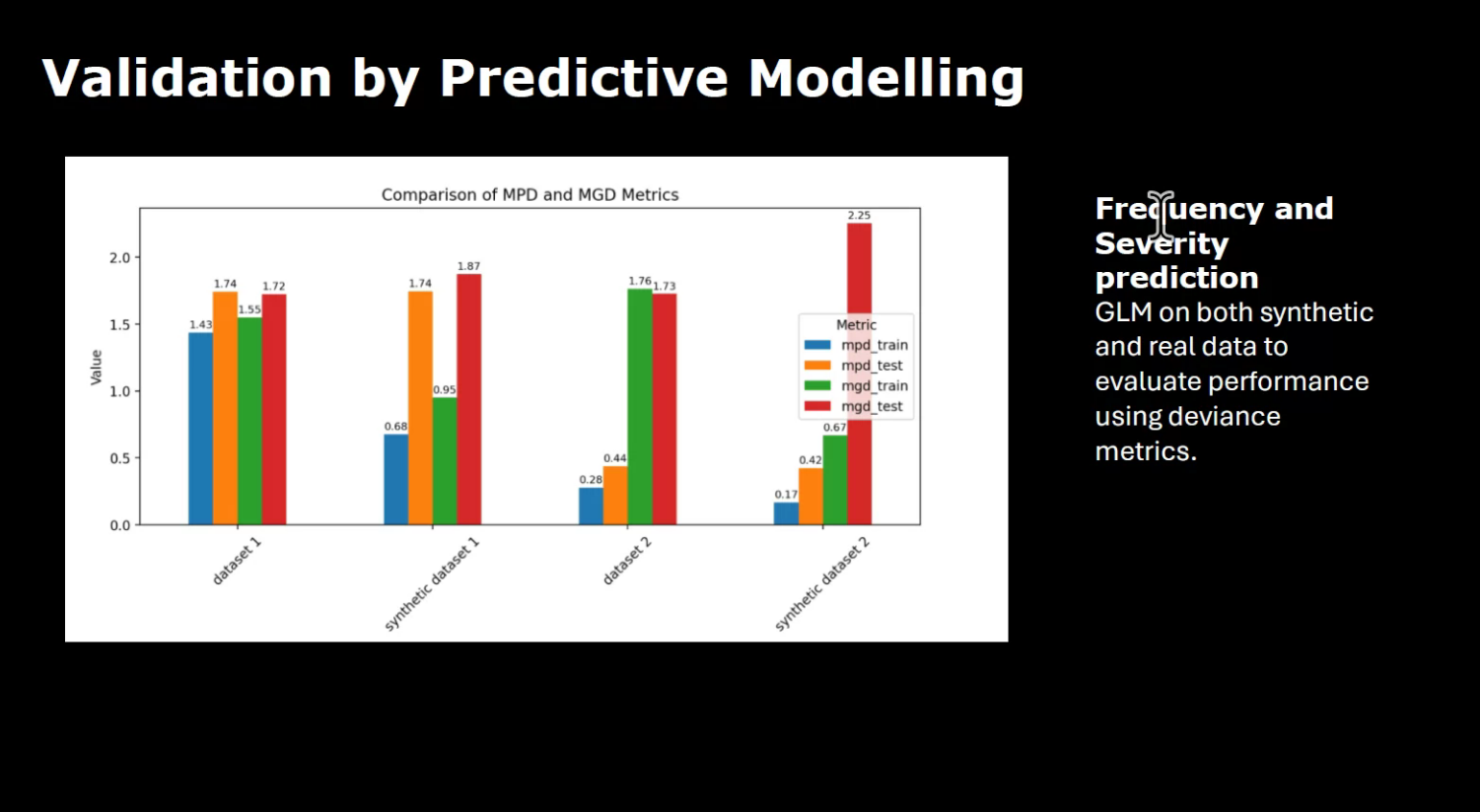

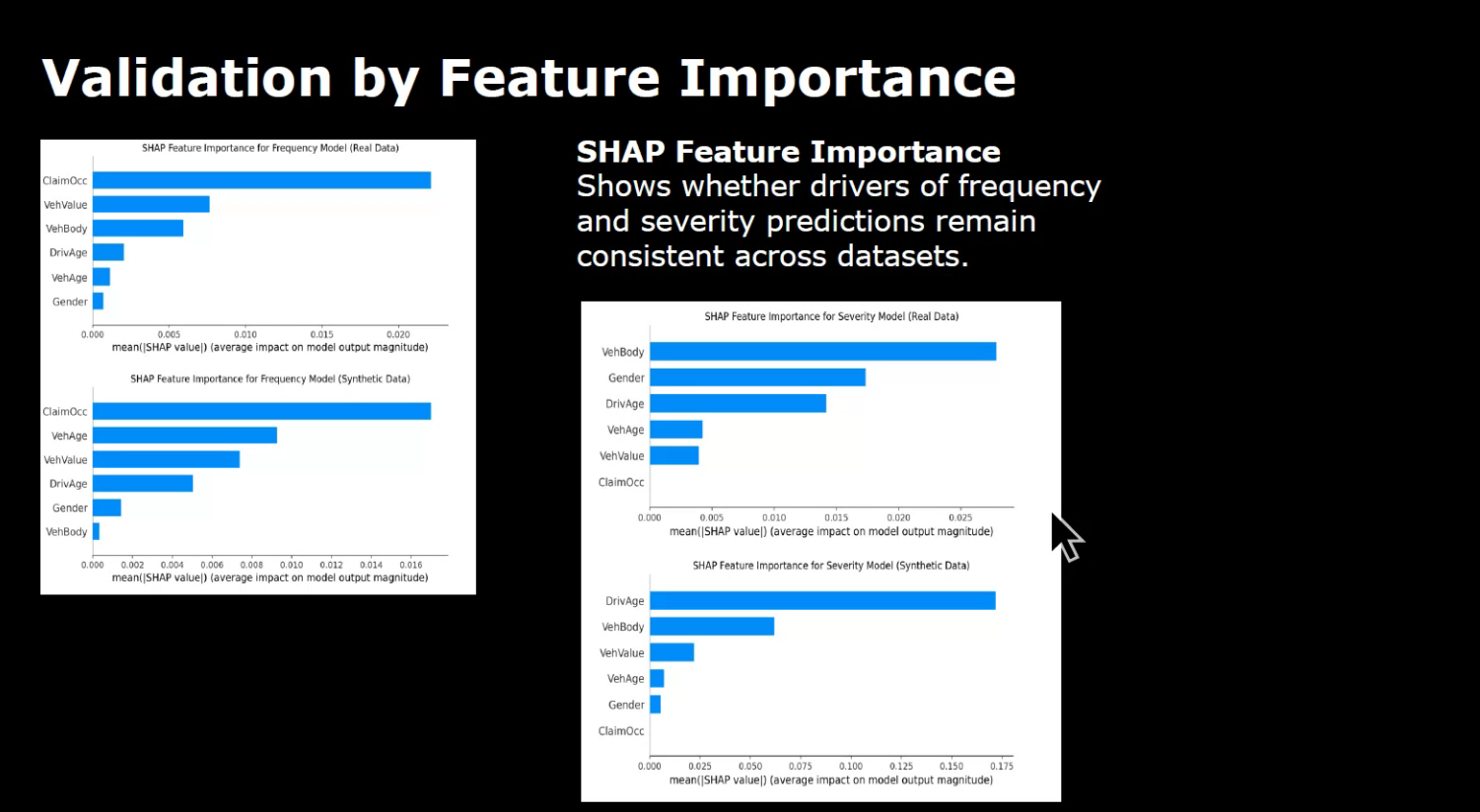

In addition, the validation includes a consistency check of the generated data, the Kolmogorov–Smirnov test, and predictive modeling of frequency and severity with Generalised Linear Models (GLMs) using a Tweedie distribution as a measure of the generated data’s quality, followed by an analysis of feature importance.
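For the Kolmogorov–Smirnov step, a minimal sketch of the two-sample statistic is shown below: it is the largest gap between the empirical CDFs of a real column and its synthetic counterpart. The function name `ks_statistic` and the gamma-distributed stand-in data are assumptions. A library routine such as SciPy's `ks_2samp` would also return a p-value.

```python
import numpy as np

def ks_statistic(a, b):
    """Two-sample KS statistic: max gap between the two empirical CDFs."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    grid = np.concatenate([a, b])            # the gap can only change at data points
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))

# Stand-in for a severity-like column: real vs. synthetic draws.
rng = np.random.default_rng(0)
real_col = rng.gamma(shape=2.0, scale=500.0, size=2000)
synth_col = rng.gamma(shape=2.0, scale=500.0, size=2000)
stat = ks_statistic(real_col, synth_col)
```

A statistic near 0 means the two marginal distributions are hard to tell apart, while a value near 1 means they barely overlap.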

For further comparison, advanced Deep Learning architectures have been employed:

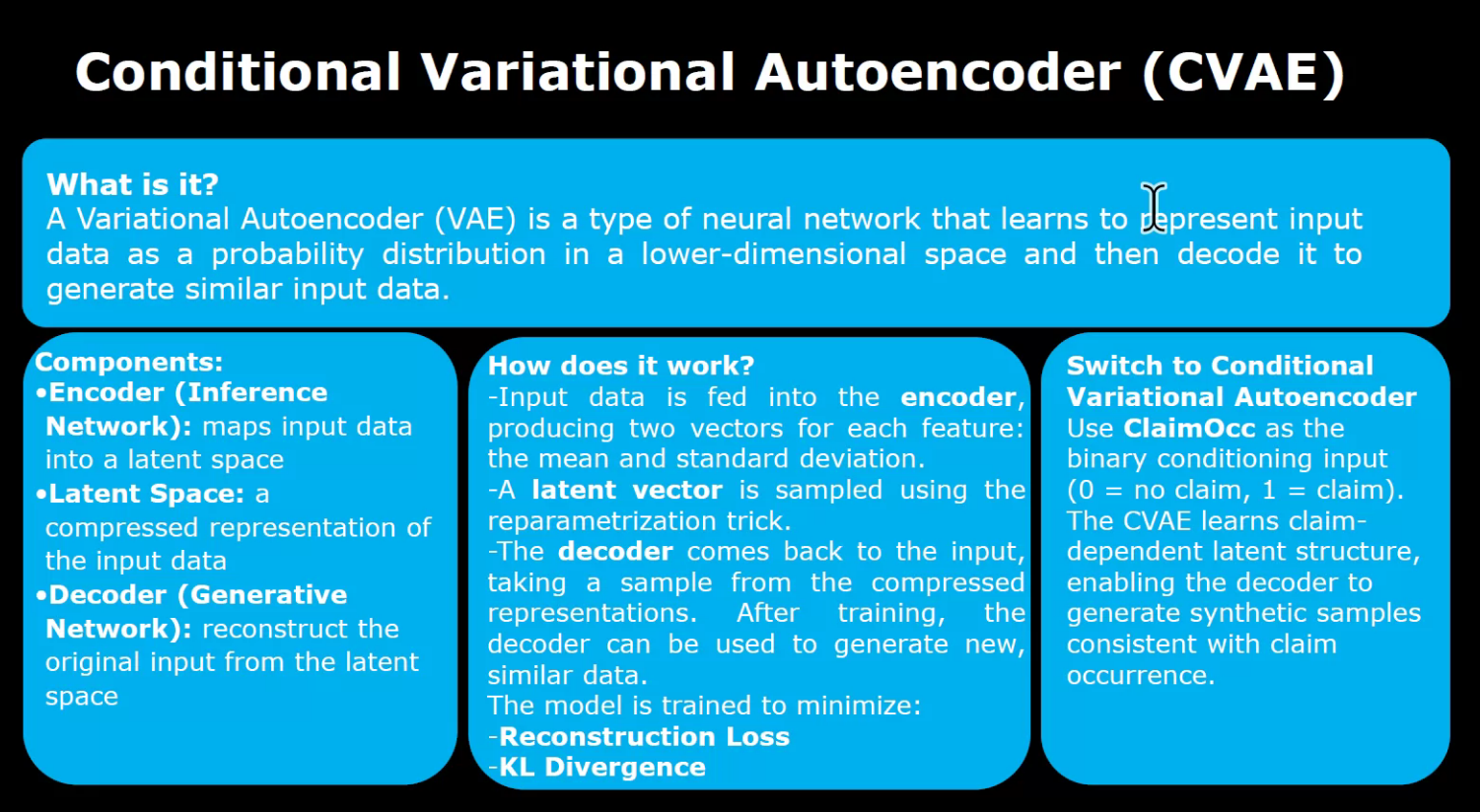

- Conditional Variational Autoencoders (CVAEs),

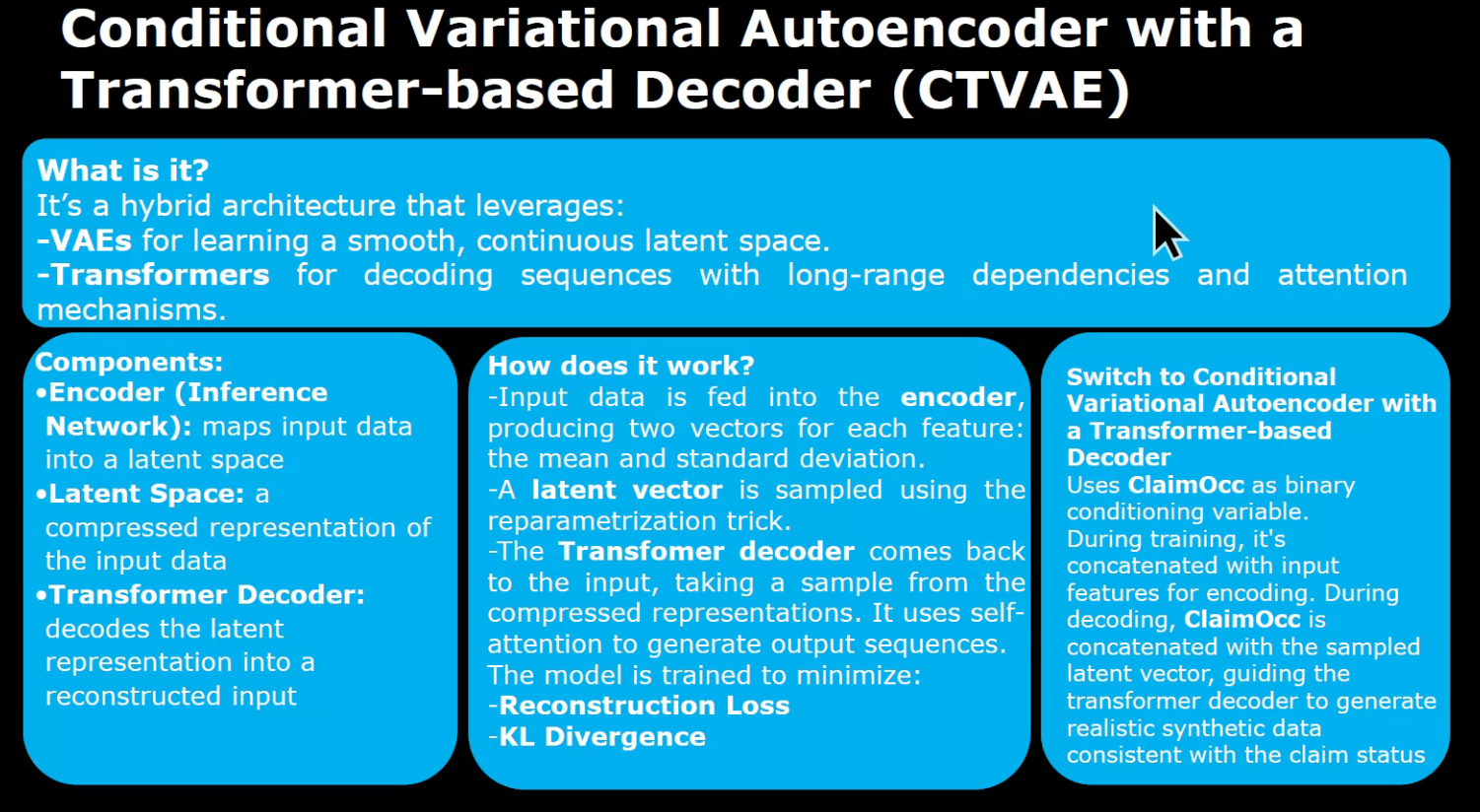

- CVAEs enhanced with a Transformer Decoder,

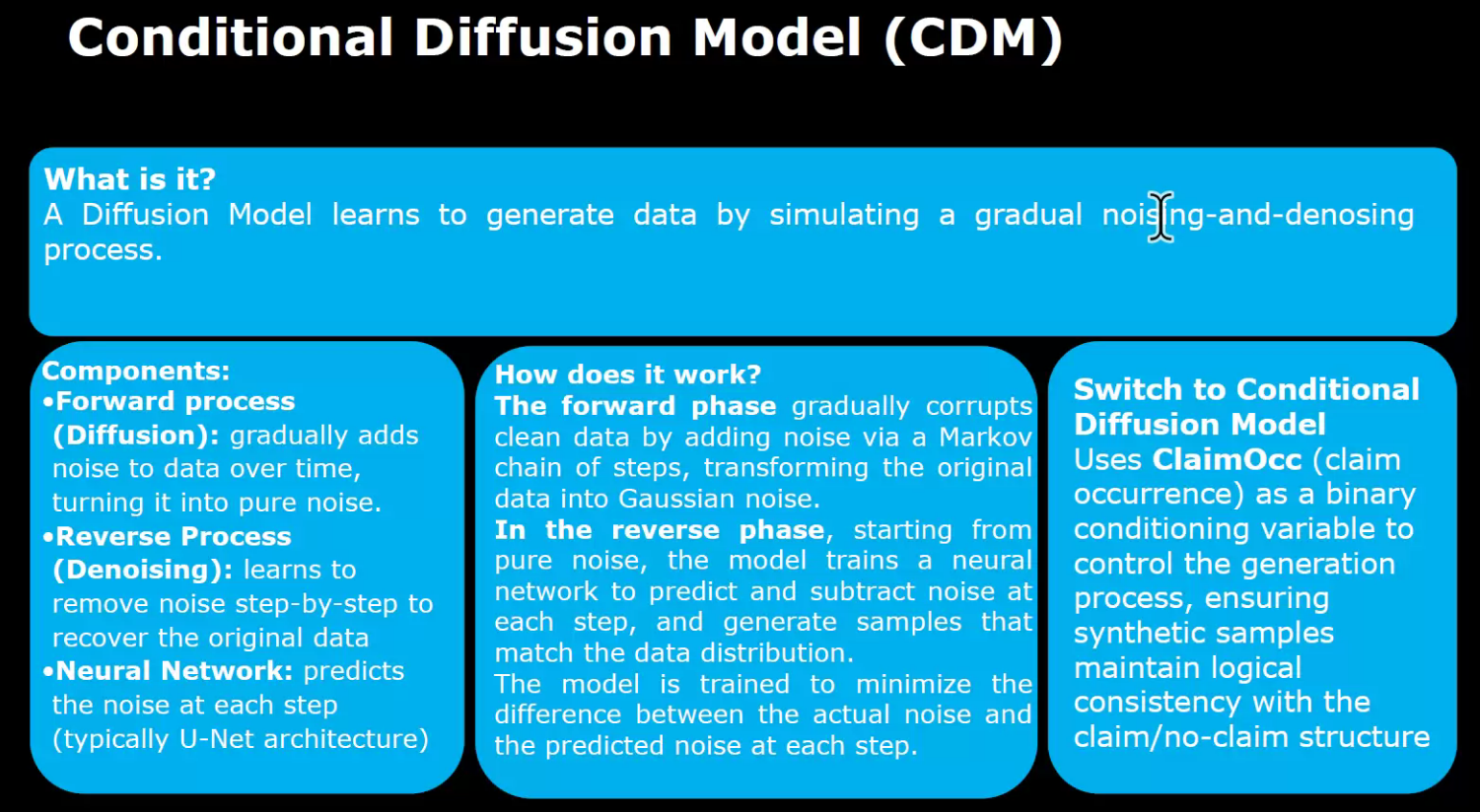

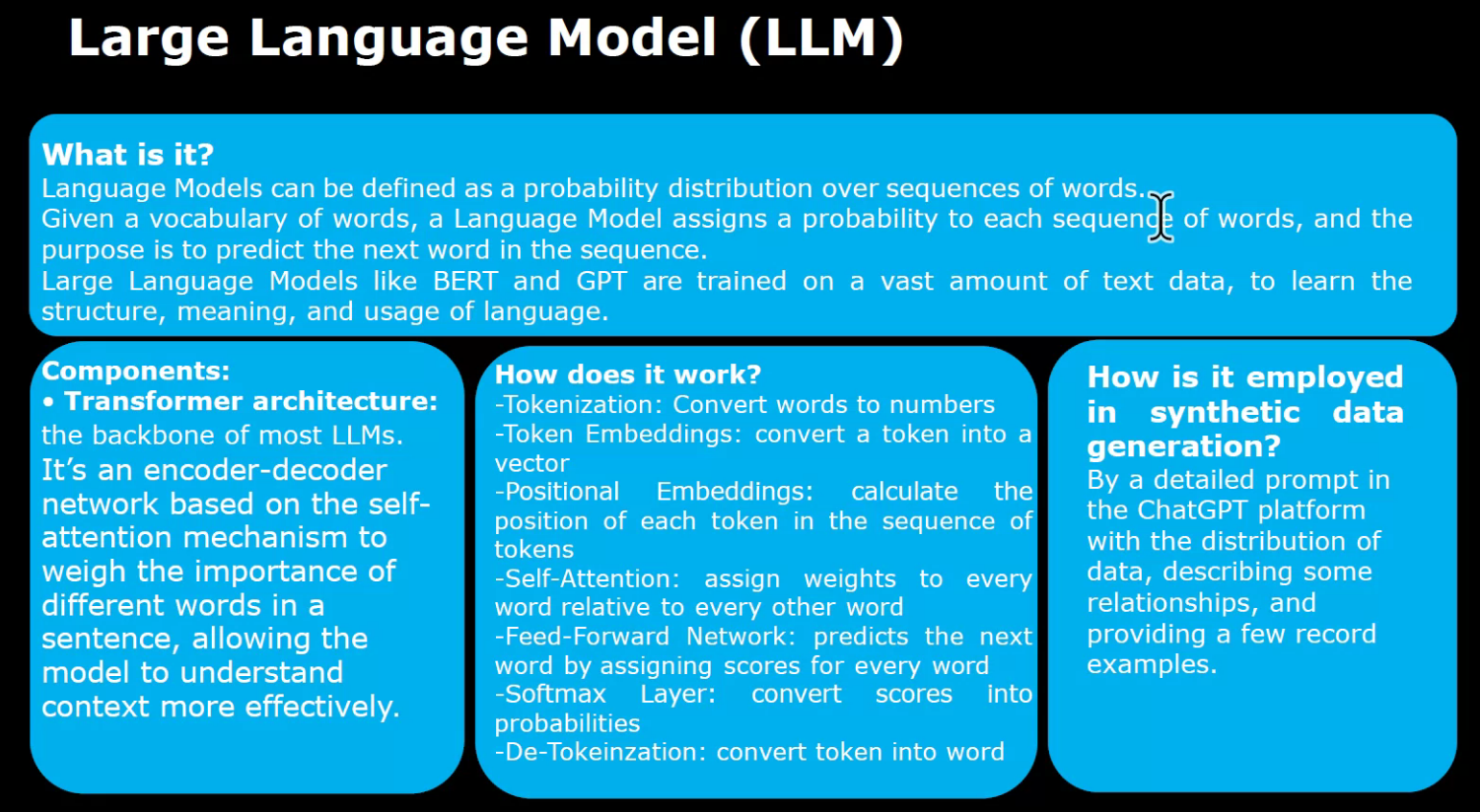

- a Conditional Diffusion Model,

- Large Language Models.
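For the CVAE variants in the list above, the standard training objective is the conditional evidence lower bound. The notation is an assumption rather than the speaker's: $x$ is a data row, $c$ the conditioning variable, $z$ the latent code, $q_\phi$ the encoder, and $p_\theta$ the decoder and conditional prior.

$$
\mathcal{L}(\theta, \phi; x, c) =
\mathbb{E}_{q_\phi(z \mid x, c)}\big[\log p_\theta(x \mid z, c)\big]
- D_{\mathrm{KL}}\big(q_\phi(z \mid x, c) \,\|\, p_\theta(z \mid c)\big)
$$

The first term rewards accurate reconstruction of $x$; the second keeps the encoder's posterior close to the prior, so that sampling $z$ from the prior and decoding it yields plausible synthetic rows.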

The analysis assesses each model’s ability to capture the underlying distributions, preserve complex dependencies, and maintain relationships intrinsic to the premium data.

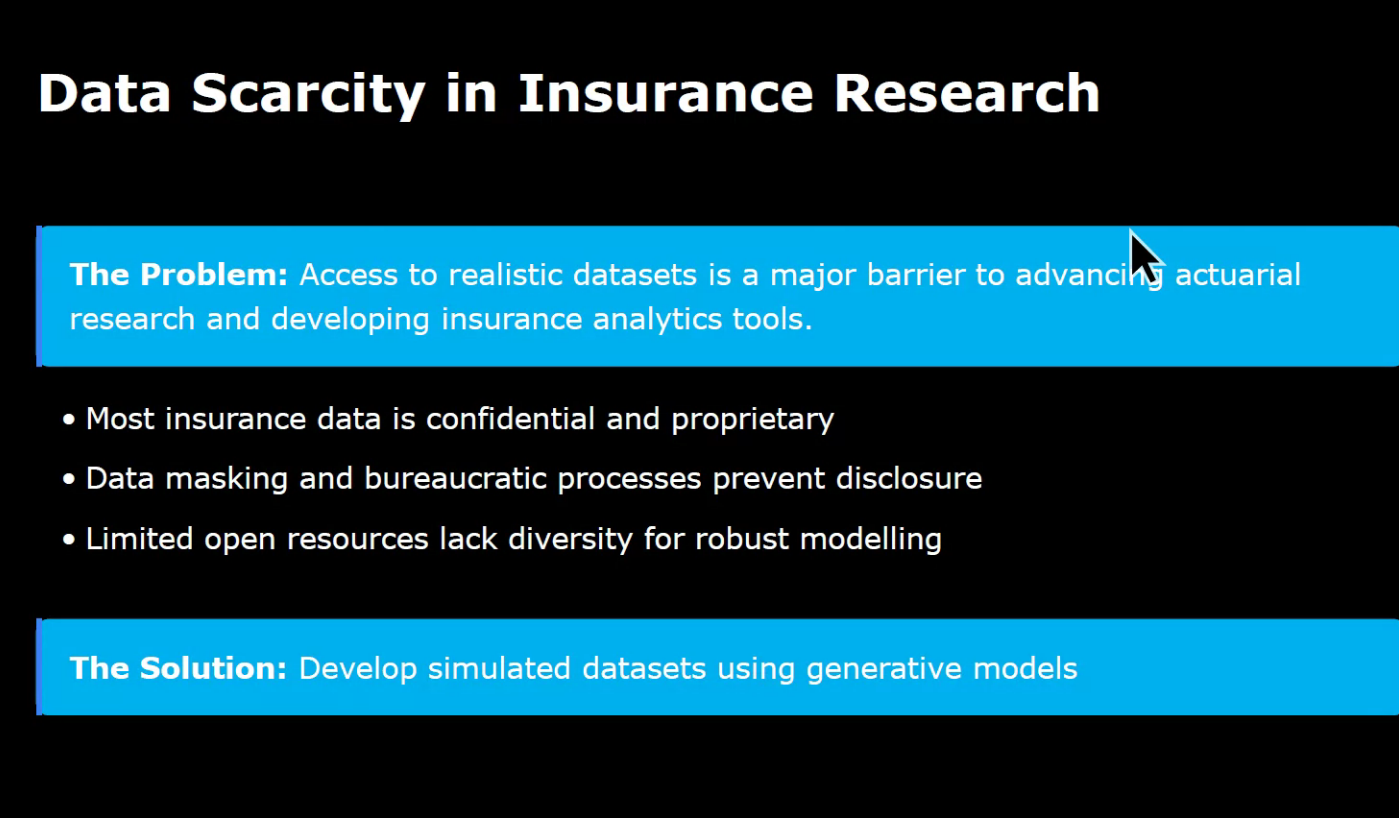

These findings provide insightful directions for enhancing synthetic data generation in insurance, with potential applications in risk modeling, pricing strategies with data scarcity, and regulatory compliance.

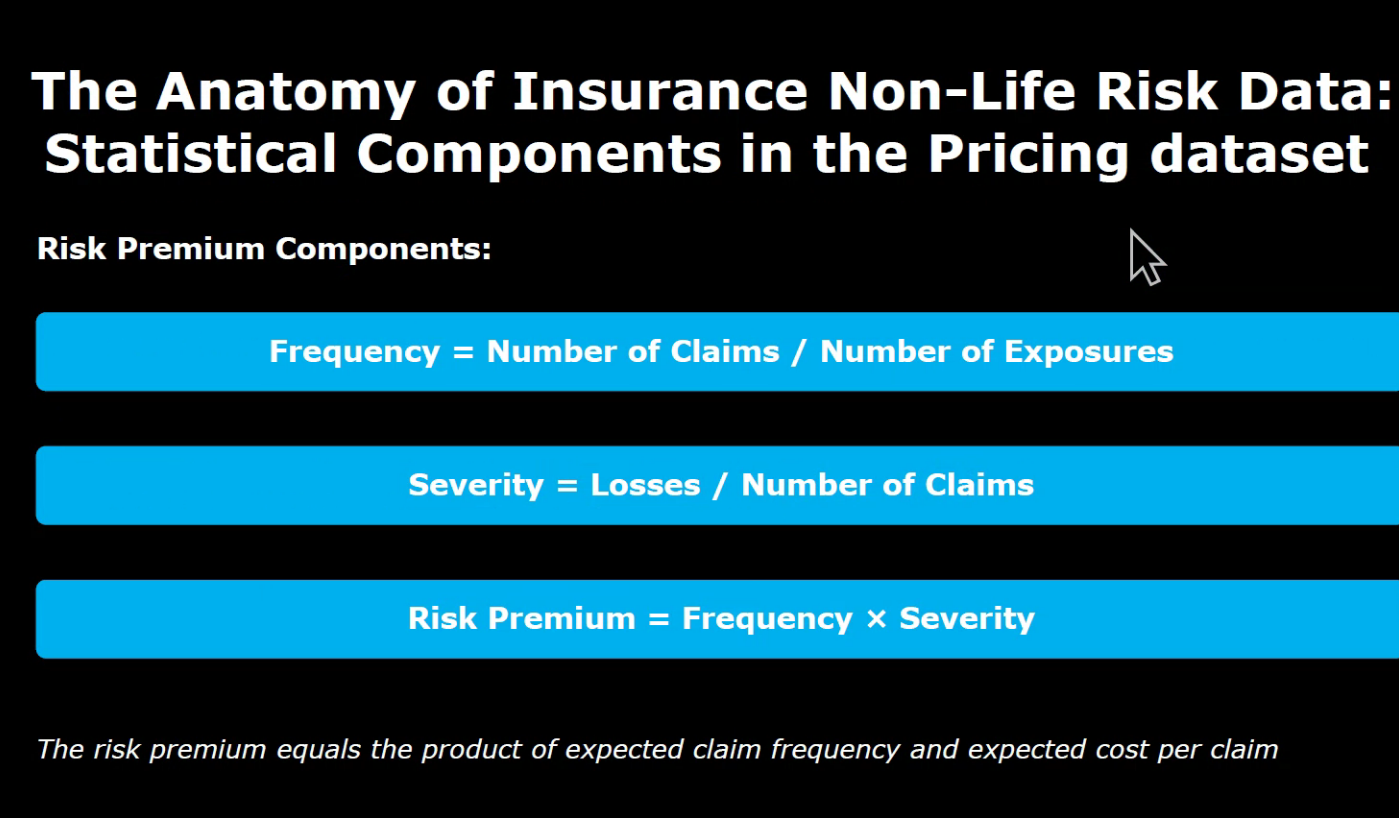

In classification and regression tasks, generative models aim to learn the joint probability distribution of data.

These models focus on generating data points similar to the training data.

Open insurance datasets are rare because they encode the proprietary risk structures of the insurance company, which limits researchers’ access to comprehensive data for analysis and for assessing new approaches.

Generative models offer a way around this scarcity, enabling reproducible experimentation and innovation.

In the talk I explore several generative models used to produce synthetic data.

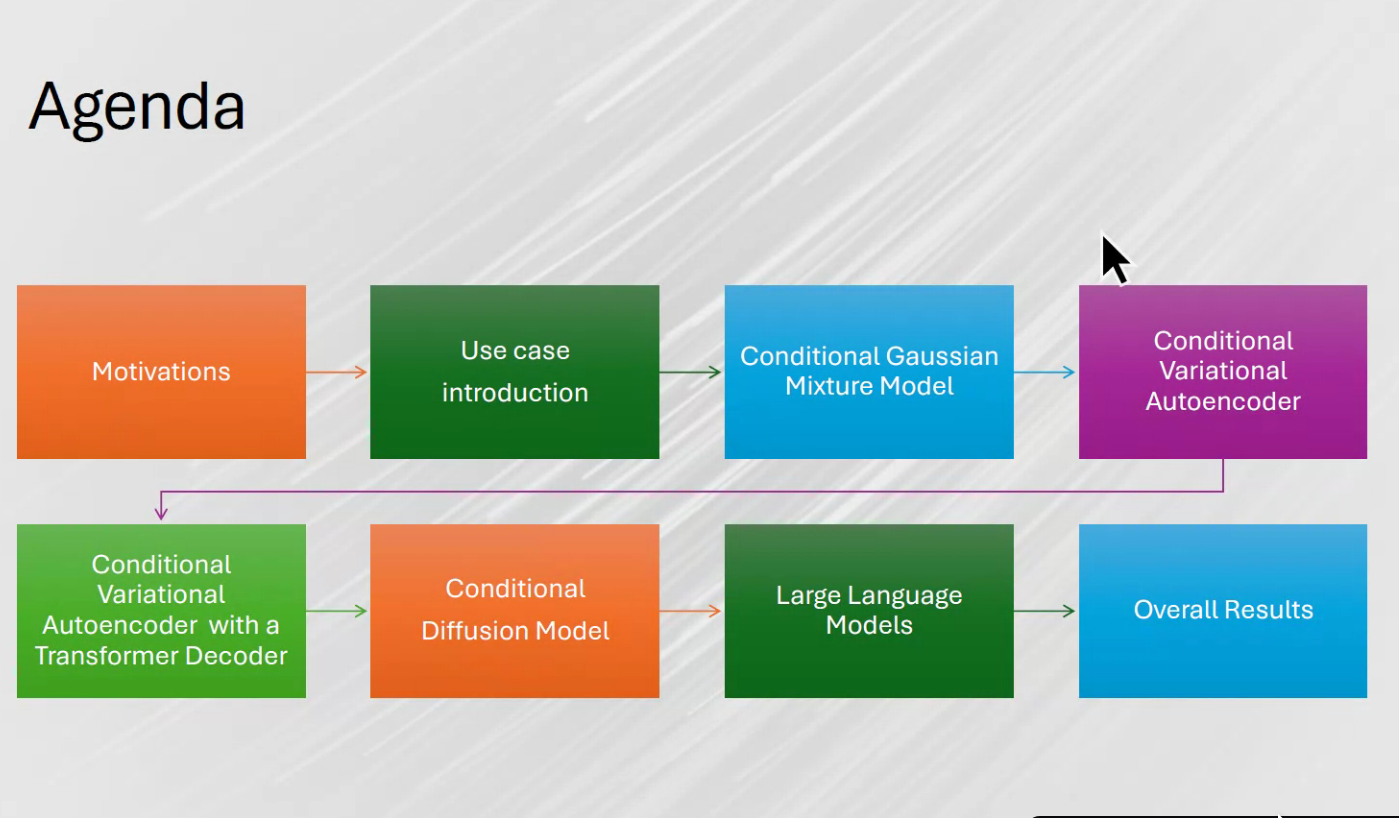

- Conditional Gaussian Mixture Models used as a benchmark;

- Conditional Variational Autoencoders;

- Conditional Variational Autoencoders with a Transformer Decoder;

- Conditional Diffusion Model;

- Large Language Models.

Finally, I present the overall results, followed by alternative approaches.

- Basic Python and PyTorch

- Some familiarity with neural networks (e.g., feed-forward, softmax)

- No need for prior experience in building models from scratch

Tools and Frameworks:

We will introduce you to certain modern frameworks in the workshop, but the emphasis will be on first principles and on using vanilla Python and LLM calls to build AI-powered systems.

Claudio Giorgio Giancaterino

- Statistics & Actuarial background

- Actuary during the day

- Data Scientist in the free time

- cf. links

Outline

The Data

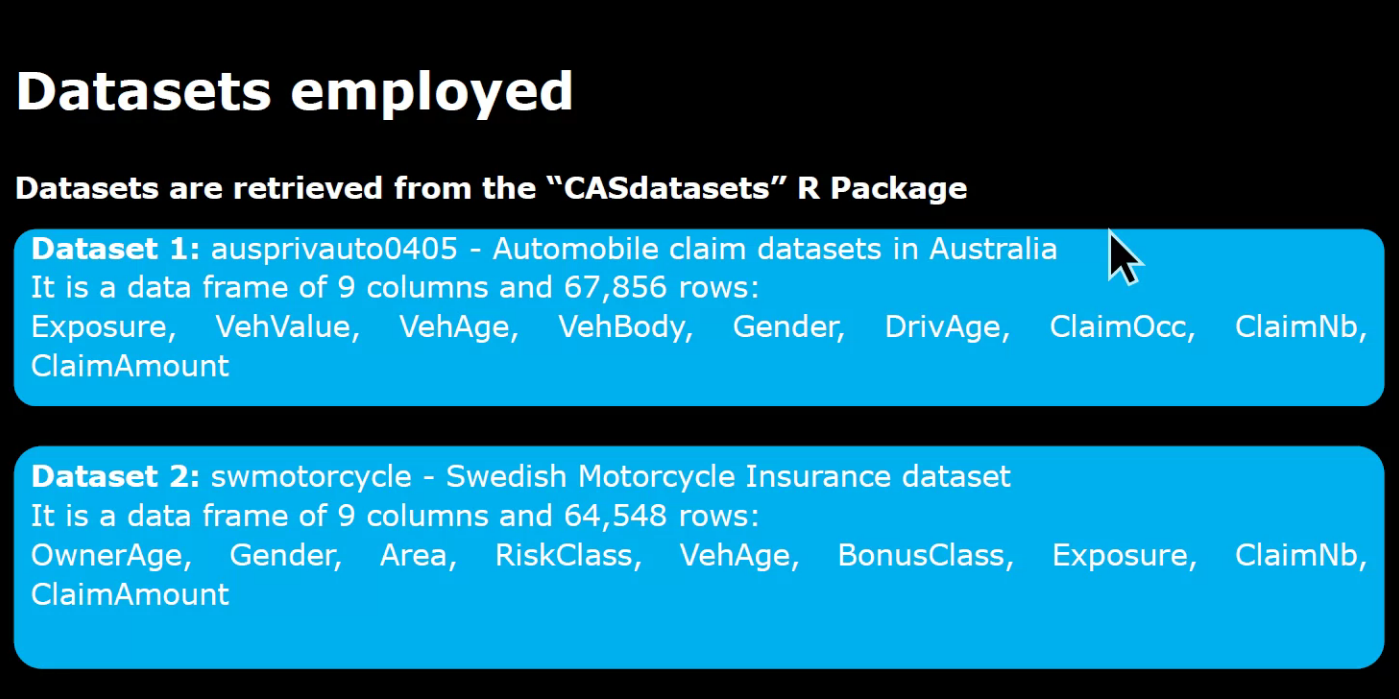

These are kind of similar.

I thought that non-life insurance was very broad.

- Should look into other datasets

The Models

This is like a party?

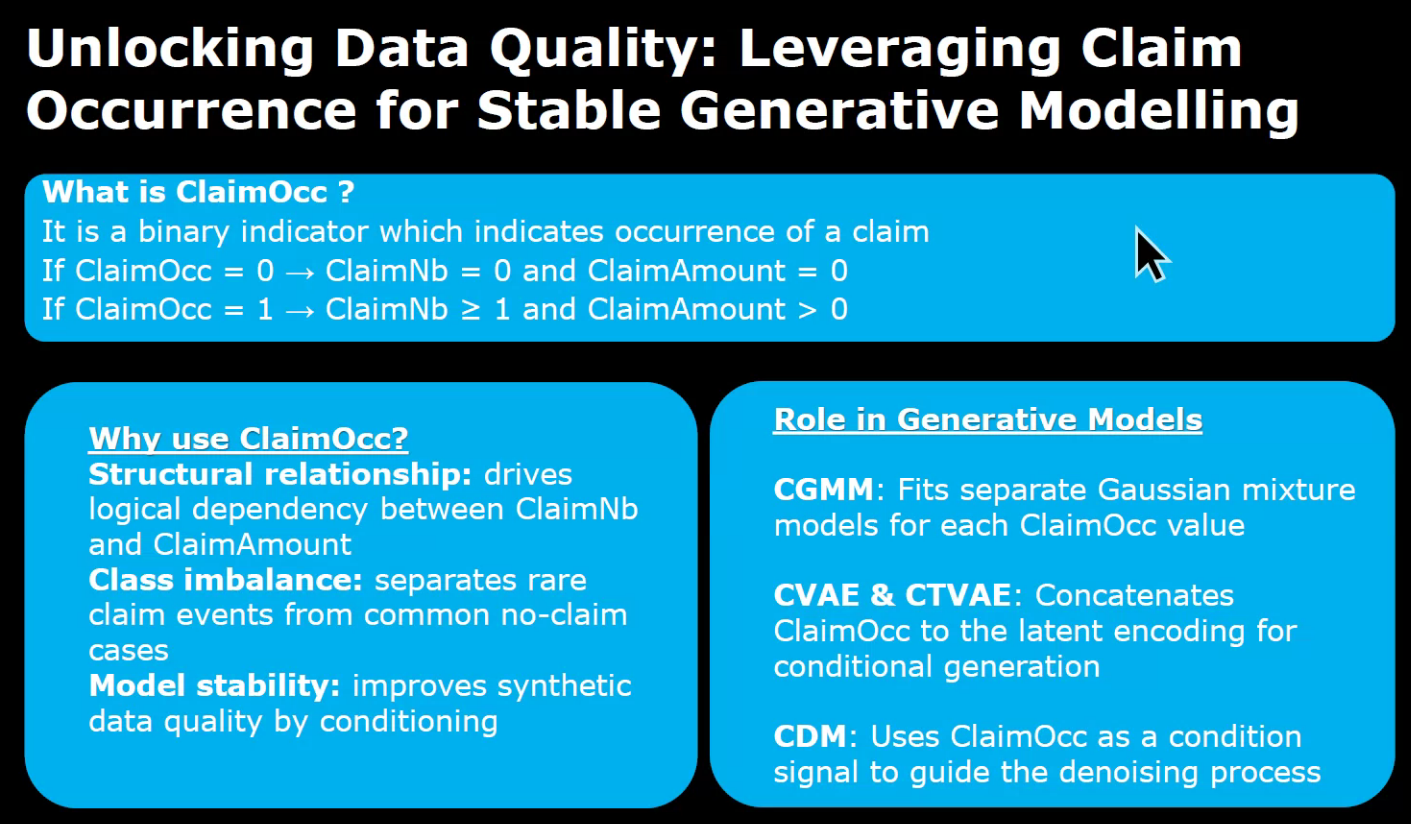

- I covered Gaussian mixtures in my notes on Bayesian Mixture Models

- EM is fine for point estimates, but it seems that with a hierarchical MCMC model ClaimOcc would be handled just as well without conditioning.

This is analogous to a forger

Create a story but start with an outline - i.e. to make it a richer story.

Add noise to an image and then learn to denoise it.
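The same idea carries over from images to tabular rows. As a minimal sketch of the noising half, assuming the standard DDPM closed form $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ with a linear noise schedule (the schedule values and the helper name `forward_noise` are assumptions, not taken from the talk):

```python
import numpy as np

def forward_noise(x0, t, betas, rng):
    """Jump straight to step t of the forward (noising) process."""
    alpha_bar = np.cumprod(1.0 - betas)      # cumulative signal retention
    eps = rng.standard_normal(x0.shape)      # the noise the model learns to predict
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

betas = np.linspace(1e-4, 0.02, 1000)        # assumed linear schedule
rng = np.random.default_rng(0)
x0 = np.ones((4, 3))                         # stand-in for standardized feature rows

x_early, _ = forward_noise(x0, 0, betas, rng)       # almost unchanged
x_late, eps_late = forward_noise(x0, 999, betas, rng)  # almost pure noise
```

The denoising network is then trained to recover `eps` from `x_t` and `t` (plus the conditioning variable in a conditional model), and generation runs the process in reverse starting from pure noise.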

- Unclear how using an LLM would be applicable to the tabular datasets discussed above.

- What is the context?

- What are the sequences?

Perhaps this is just a feed forward Neural network doing regression or a transformer doing regression.
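These notes do not record how the speaker fed tabular data to an LLM. One approach described in the literature, shown here purely as an assumption, is to serialize each row into a short sentence, fine-tune or prompt a language model on those sentences, and parse the generated text back into rows. In that setup the sequence is the serialized row and the context is the text generated so far. The helper names and the `VehAge` column are hypothetical.

```python
def row_to_text(row):
    """Serialize one table row as 'column is value' clauses."""
    return ", ".join(f"{key} is {value}" for key, value in row.items())

def text_to_row(text):
    """Parse a serialized row back into a dict of strings."""
    row = {}
    for part in text.split(", "):
        key, _, value = part.partition(" is ")
        row[key] = value
    return row

# Hypothetical row; only ClaimOcc is a column name mentioned in these notes.
example = {"ClaimOcc": 0, "VehAge": 5}
text = row_to_text(example)
parsed = text_to_row(text)
```

The parsed values come back as strings, so a real pipeline would still need type casting and validation of the generated rows.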

Validation

Results and Conclusions

My Reflections:

- The speaker is a very smart/accomplished person.

- The validation part is very interesting; it would be interesting to see what he can say about model validation in general.

- The model intuitions are neat. Worth reviewing and noting down!

- How was the data used with the LLM?

- How is the LLM trained?

Citation

@online{bochman2025,

author = {Bochman, Oren},

title = {Harnessing {Generative} {Models} for {Synthetic} {Non-Life} {Insurance} {Data}},

date = {2025-12-09},

url = {https://orenbochman.github.io/posts/2025/2025-12-09-pydata-synthetic-insurance-data/},

langid = {en}

}