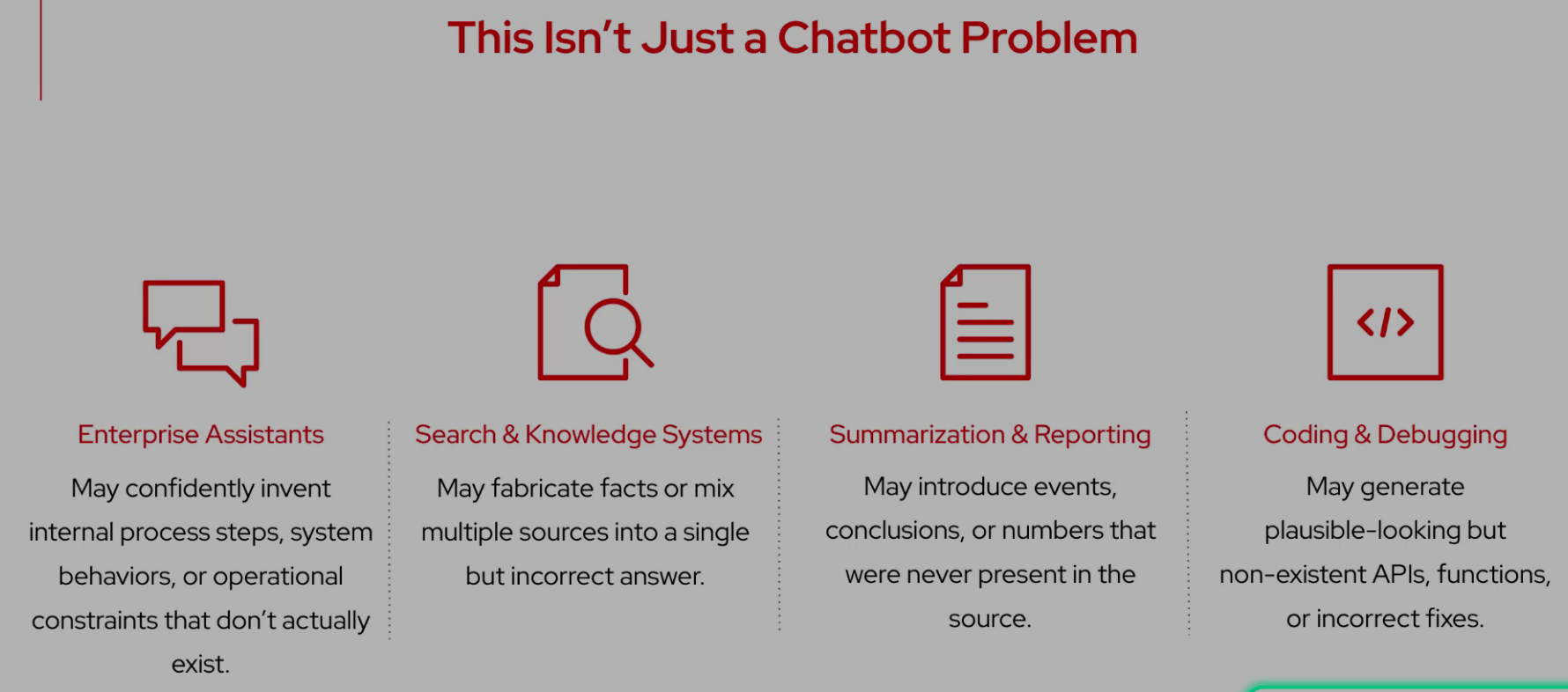

AI systems are increasingly being integrated into real-world products, from chatbots and search engines to summarisation tools and coding assistants. Yet despite their fluency, these models can produce confident but false or misleading information, a phenomenon known as hallucination. In production settings, such errors can erode user trust, misinform decisions, and introduce serious risks. This talk unpacks the root causes of hallucinations, explores their impact on various applications, and highlights emerging techniques to detect and mitigate them. With a focus on practical strategies, the session offers guidance for building more trustworthy AI systems fit for deployment.

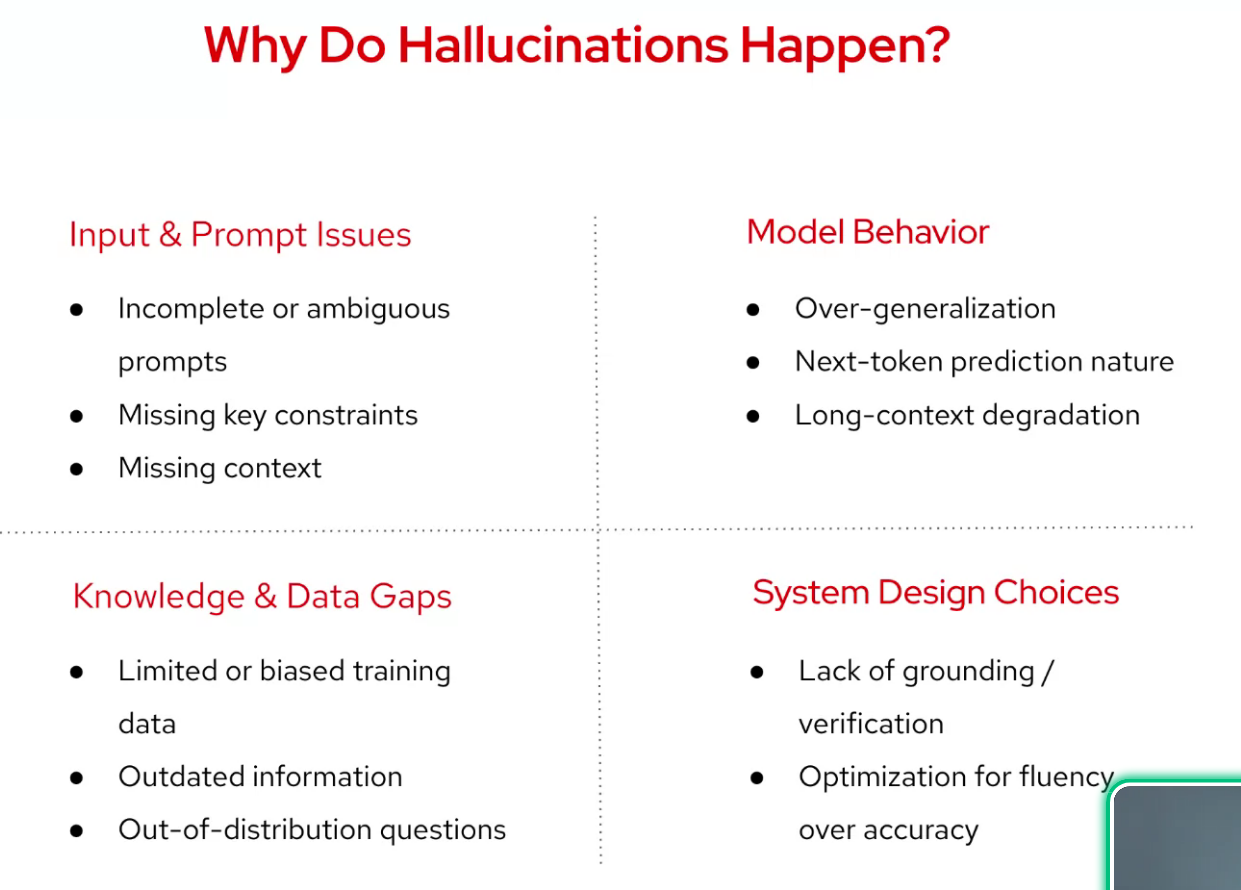

This session will unpack the problem of AI hallucination: not just what it is, but how it surfaces in everyday use. We’ll look at the common causes, ranging from incomplete context to over-generalisation, and walk through detection and prevention techniques such as grounding, prompt design and retrieval-augmented generation (RAG). Whether you’re building AI products or evaluating their outputs, this talk will give you the tools to recognise hallucinations and reduce their risk.
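Grounding and RAG come down to the same move: put trusted source text into the prompt and tell the model to stay inside it, with an explicit way out when the answer is not there. The following is a minimal sketch of that idea, not the speaker's implementation. The keyword-overlap `retrieve` function is a toy stand-in for a real retriever (typically embedding-based search), and the function names, stopword list, prompt wording and the fictional "X100" documents are all hypothetical.

```python
import re

# Hypothetical stopword list: keeps function words from counting as matches.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "for", "to", "in", "with", "what"}


def tokenize(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, minus stopwords."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank documents by shared query tokens, drop zero-overlap ones."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [doc for score, doc in scored[:k] if score > 0]


def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Restrict the model to numbered passages, ask for citations, allow abstention."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using ONLY the numbered passages below. "
        "Cite the passages you use, e.g. [1]. "
        "If the passages do not contain the answer, reply \"I don't know.\"\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up documents about a fictional product.
    docs = [
        "The X100 router ships with a two-year warranty period.",
        "The X200 switch supports 48 ports.",
        "Firmware updates are released quarterly.",
    ]
    question = "What is the warranty period for the X100 router?"
    print(build_grounded_prompt(question, retrieve(question, docs)))
```

The abstention instruction does as much work as the retrieval: giving the model an explicit "I don't know" path is a common prompt-design lever against confident fabrication when the retrieved passages come up empty.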

Aarti Jha

Aarti Jha is a Principal Data Scientist at Red Hat, where she develops AI-driven solutions to streamline internal processes and reduce operational costs. She brings over 6.5 years of experience in building and deploying data science and machine learning solutions across industry domains. She is an active public speaker and frequently presents at developer and data-science conferences, focusing on practical approaches to applied AI and LLMs.

Outline:

- Introduction to hallucinations in LLMs

- Common causes behind hallucinated outputs

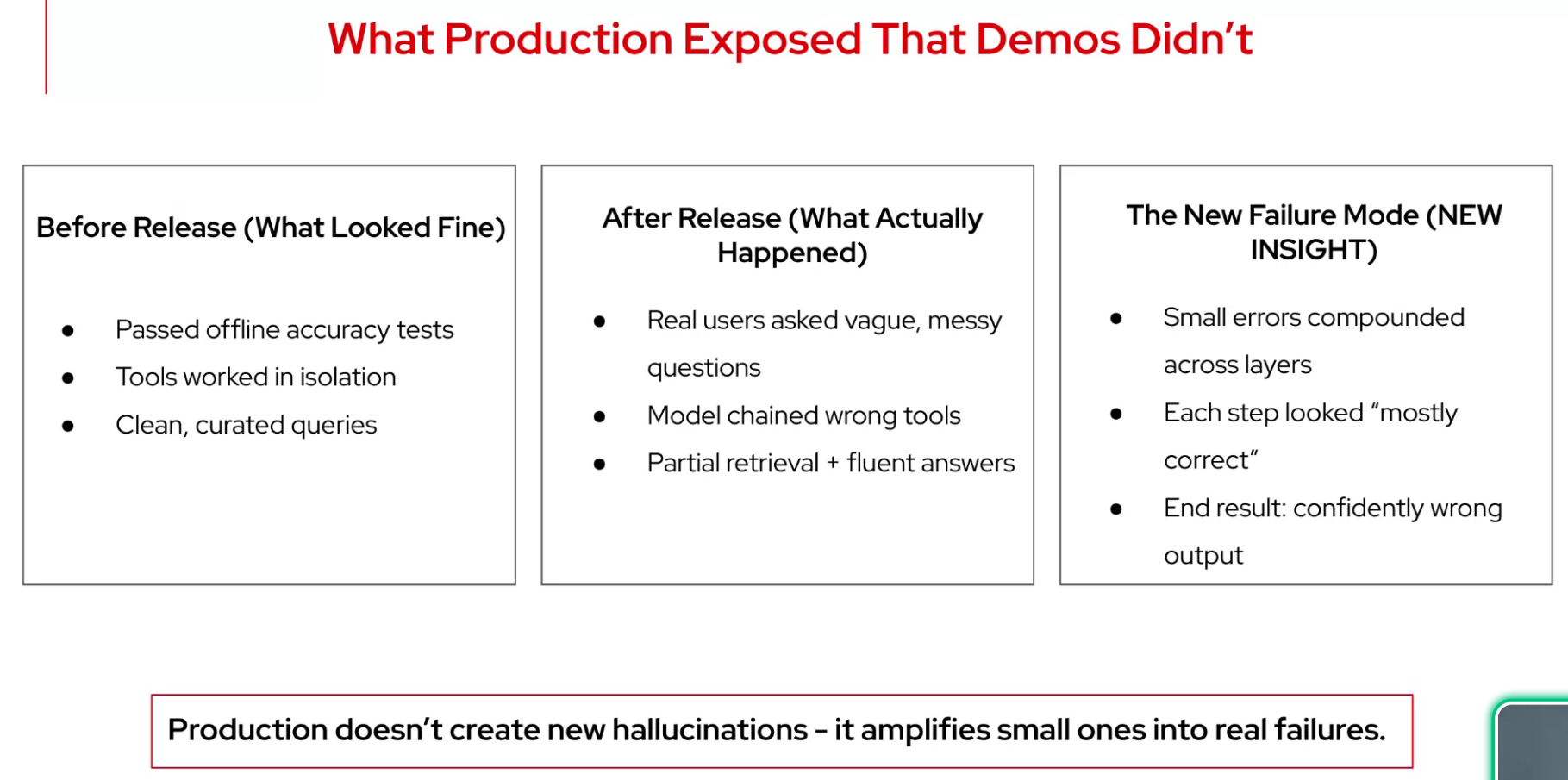

- Impact on production applications

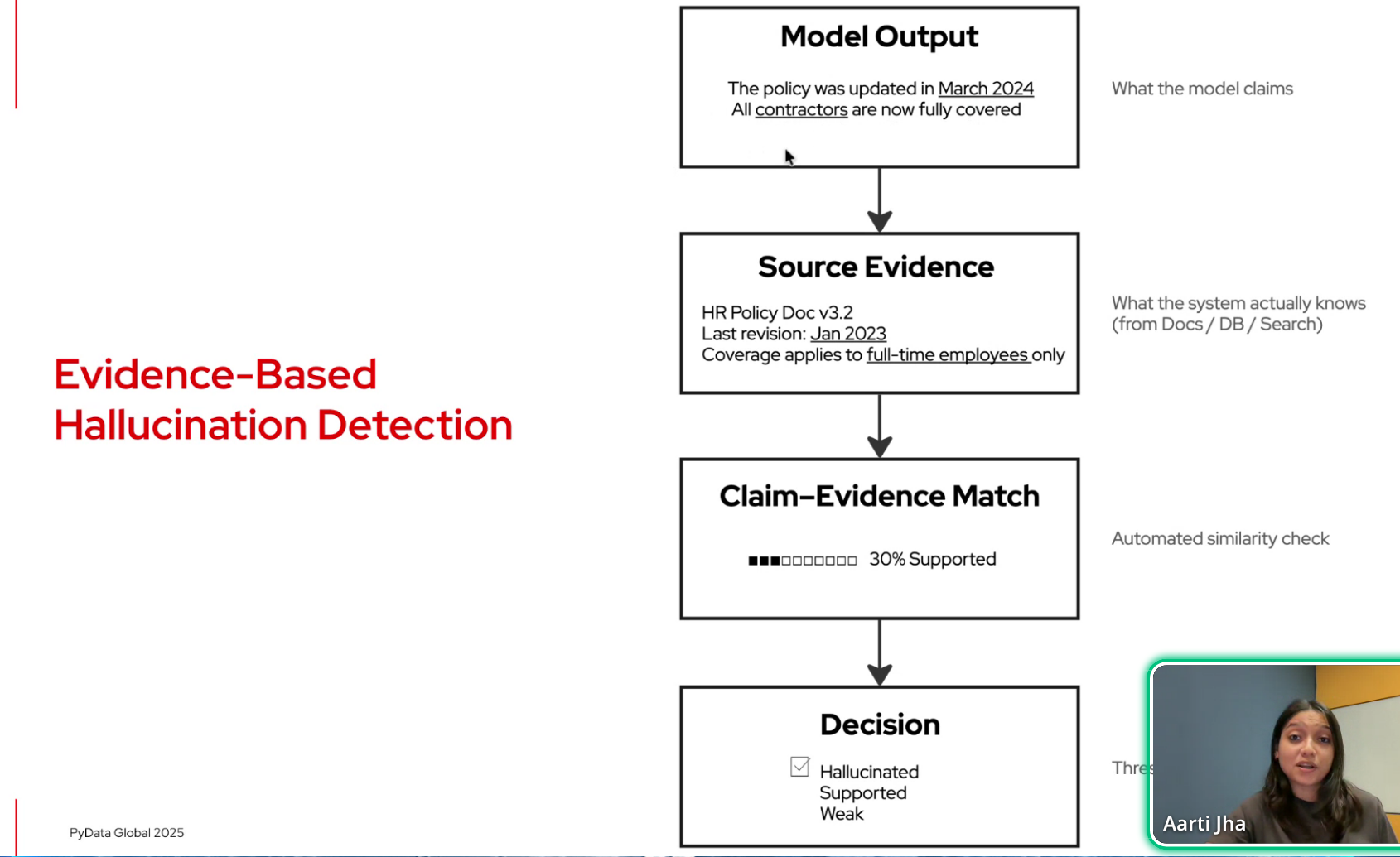

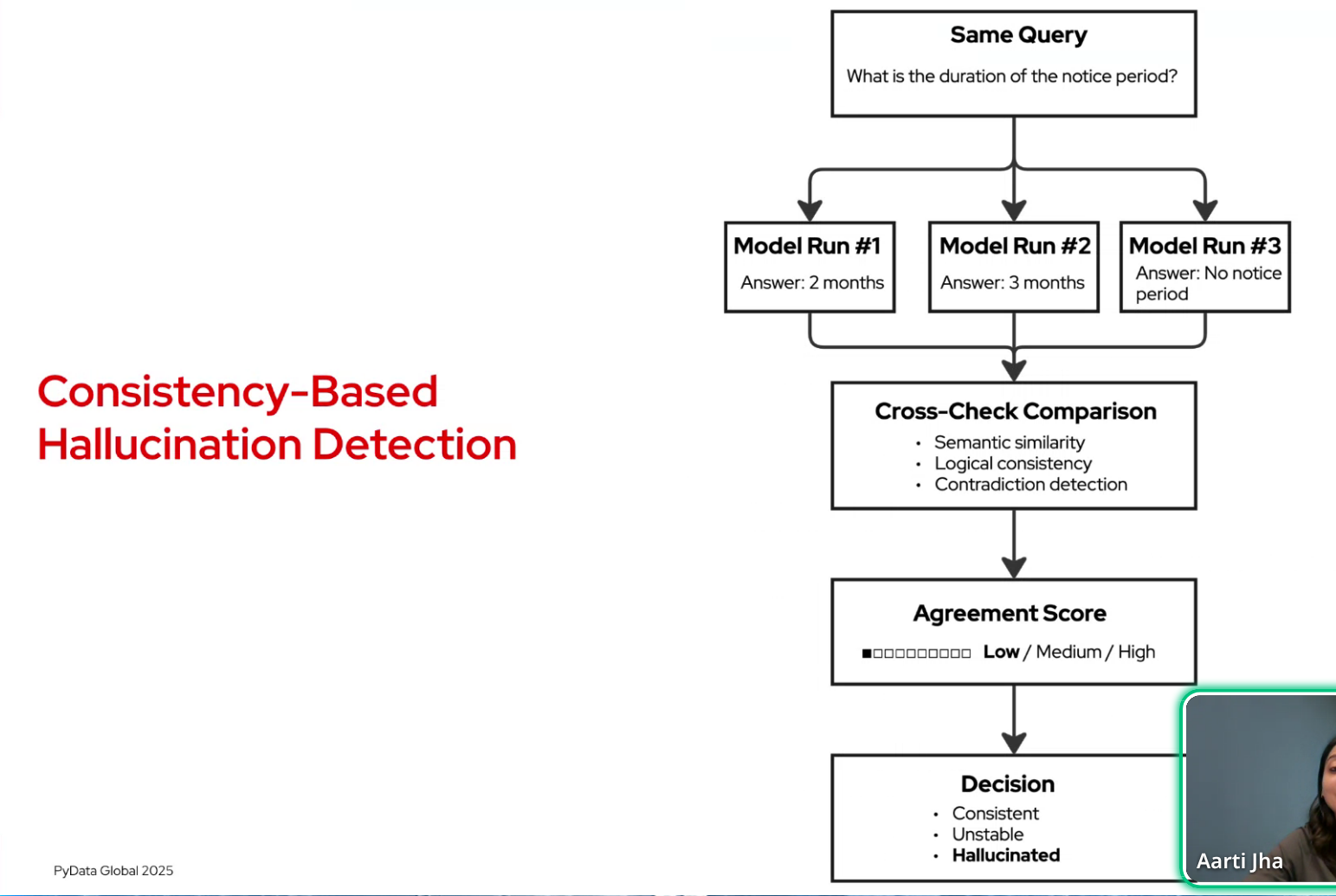

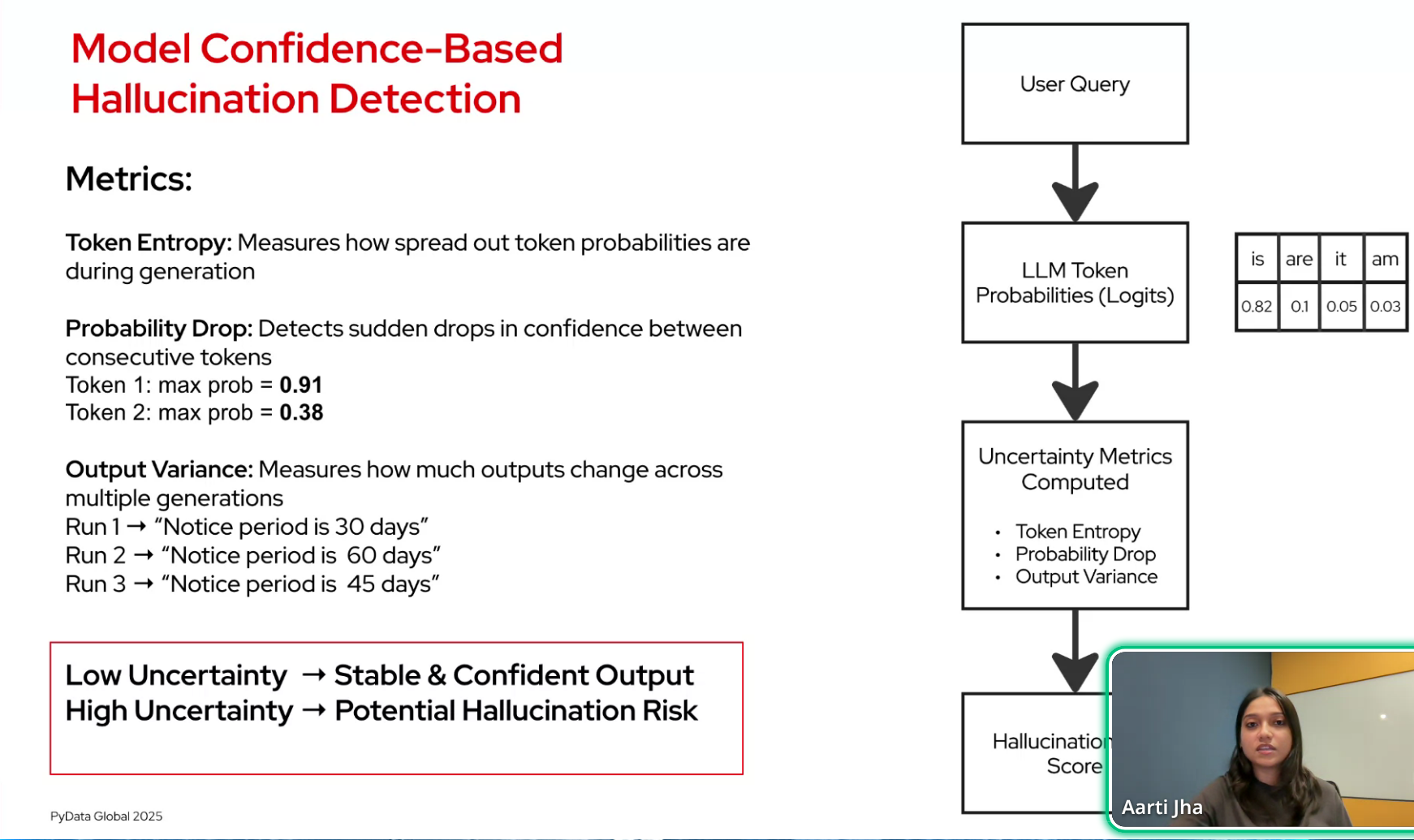

- Techniques for detecting and evaluating hallucinations

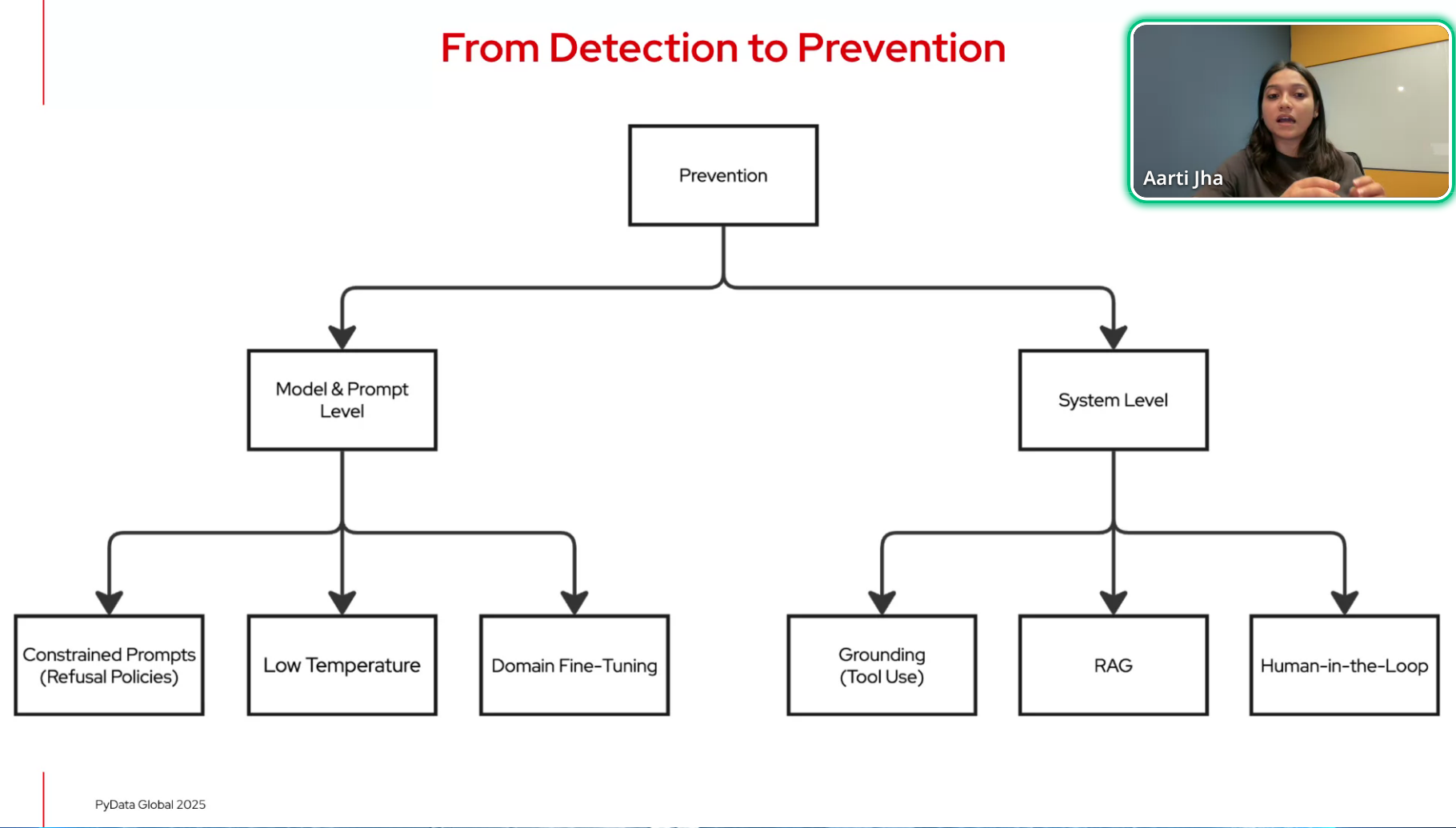

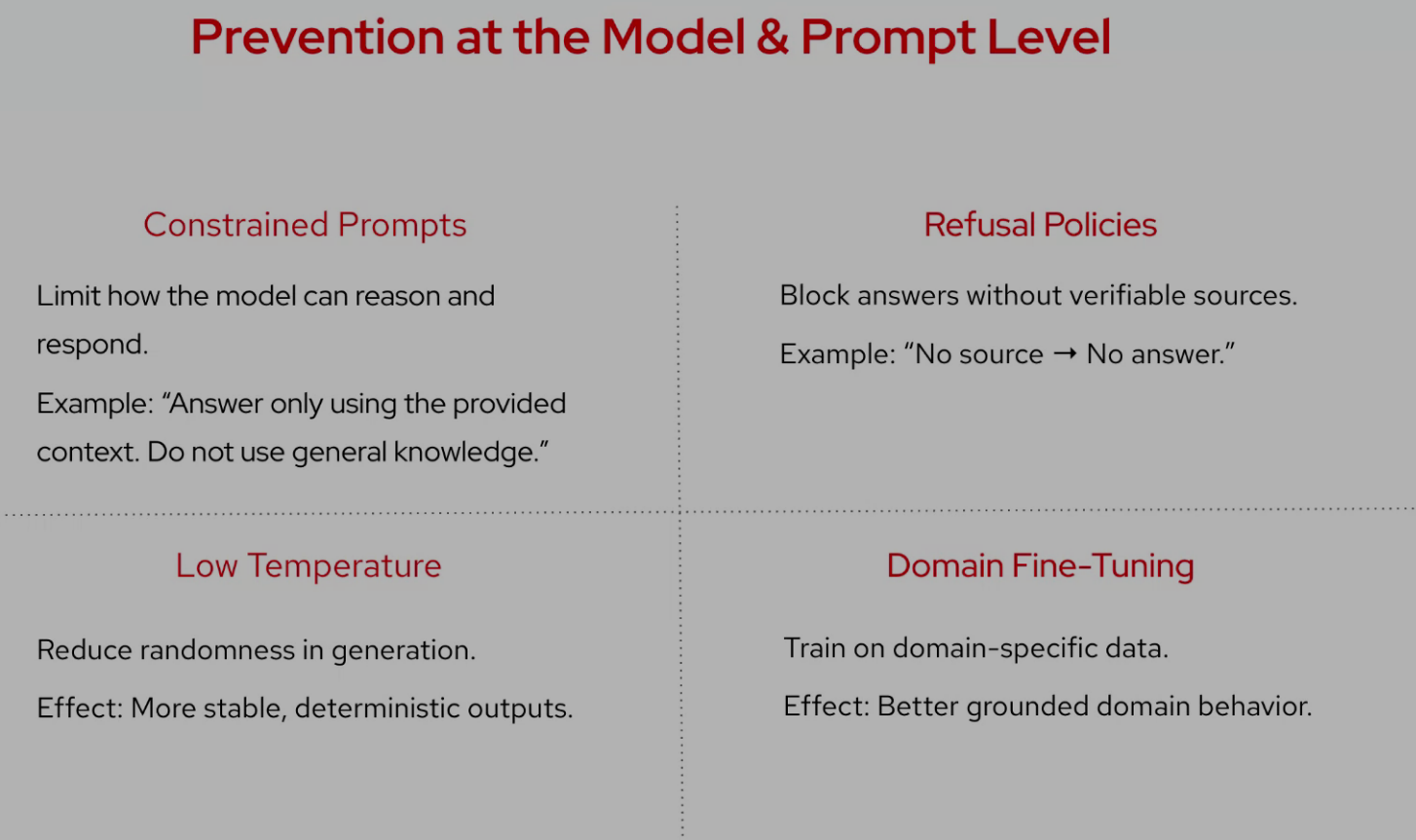

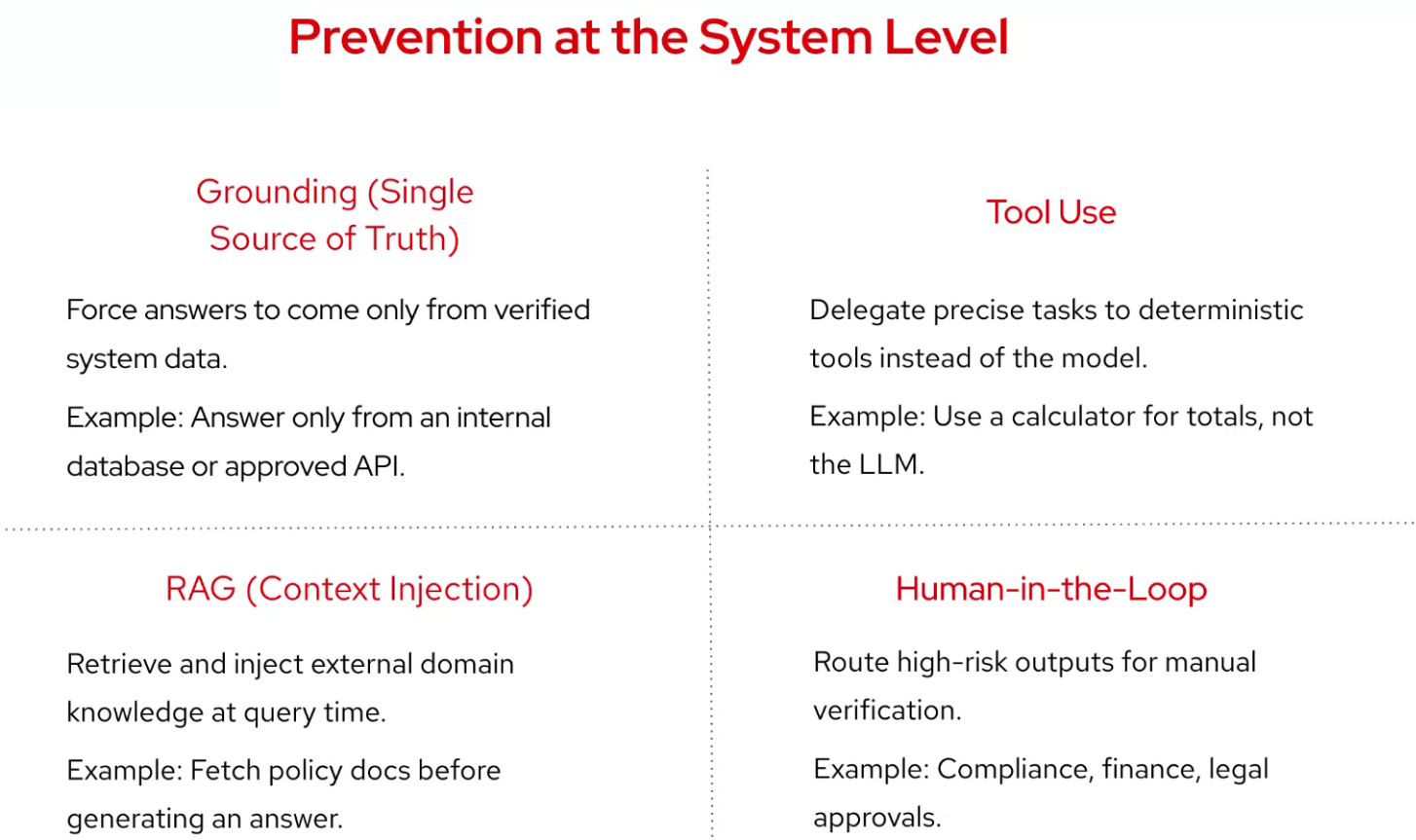

- Strategies to reduce hallucinations

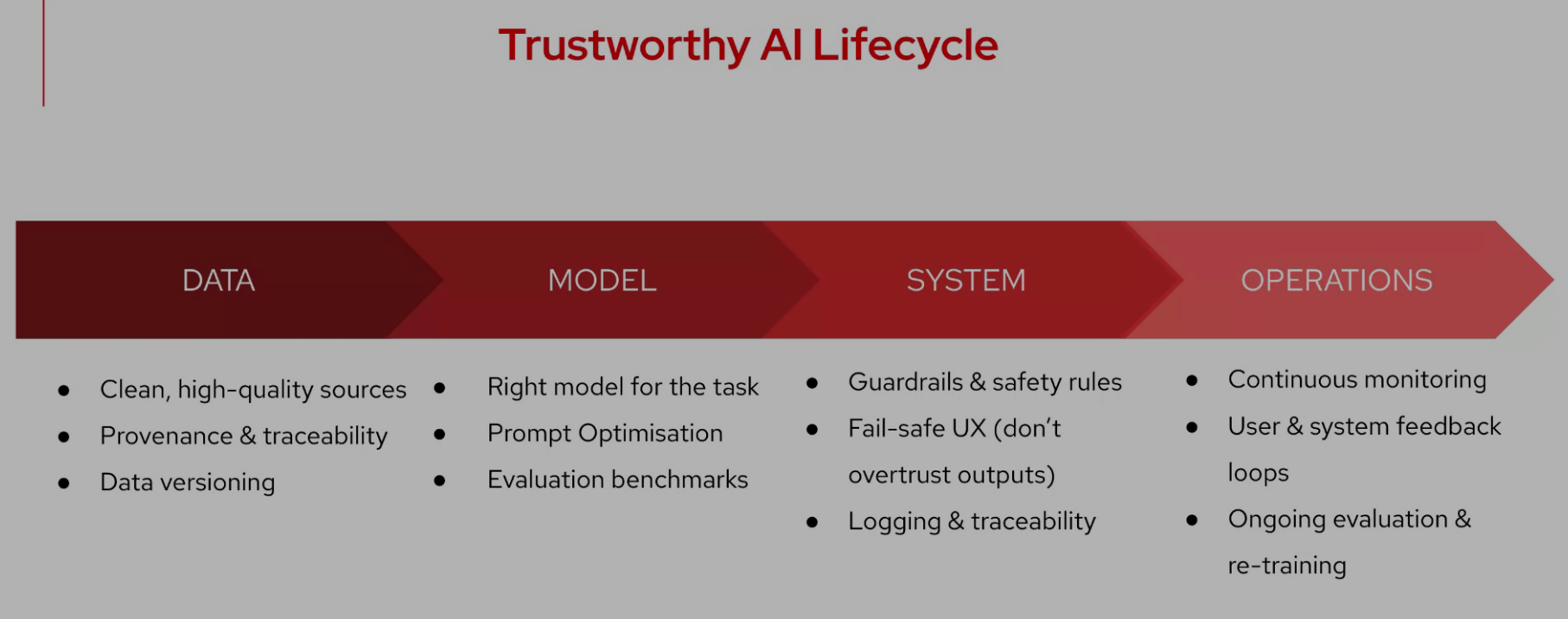

- Best practices for building trustworthy AI products

- Key takeaways
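One simple way to make the detection step concrete is to check each sentence of an answer against the source text it was supposed to be grounded in, and flag sentences the source does not support. The sketch below uses a crude lexical-overlap score; that heuristic is an assumption made here for illustration, not a technique attributed to the talk, and more robust checks typically use a natural language inference (NLI) model or an LLM as a judge. The function names, stopword list and the 0.6 threshold are all hypothetical.

```python
import re

# A tiny, hypothetical stopword list so function words do not inflate overlap scores.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "for",
             "to", "in", "on", "and", "with", "it", "its"}


def content_tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, minus stopwords."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def support_score(sentence: str, context: str) -> float:
    """Fraction of the sentence's content tokens that also appear in the context."""
    tokens = content_tokens(sentence)
    if not tokens:
        return 1.0  # nothing checkable, so do not flag it
    return len(tokens & content_tokens(context)) / len(tokens)


def flag_unsupported(answer: str, context: str,
                     threshold: float = 0.6) -> list[tuple[str, float]]:
    """Return (sentence, score) pairs whose support falls below the threshold."""
    flagged = []
    for sentence in split_sentences(answer):
        score = support_score(sentence, context)
        if score < threshold:
            flagged.append((sentence, round(score, 2)))
    return flagged


if __name__ == "__main__":
    context = "The X100 router ships with a two-year warranty period."
    answer = ("The X100 router has a two-year warranty. "
              "It also includes free lifetime technical support.")
    print(flag_unsupported(answer, context))
    # -> [('It also includes free lifetime technical support.', 0.0)]
```

Lexical overlap misses paraphrases and can be fooled by a sentence that reuses the source's words while negating them, so it is best treated as a cheap first-pass filter in front of a stronger checker.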

https://github.com/aartij22

Reflections

- The talk covers some interesting ground.

- It does not cover the recent hallucination papers that trended on Twitter and elsewhere.

Citation

@online{bochman2025,
  author = {Bochman, Oren},
  title = {When {AI} {Makes} {Things} {Up:} {Understanding} and
    {Tackling} {Hallucinations}},
  date = {2025-12-09},
  url = {https://orenbochman.github.io/posts/2025/2025-12-09-pydata-when-ai-makes-things-up/},
  langid = {en}
}