```python
from itertools import product


def generate_sequences_generator(alphabet, n):
    """
    A generator to yield all possible sequences of length n from the given alphabet.

    Args:
        alphabet (list): List of symbols representing the alphabet.
        n (int): Length of the sequences to generate.

    Yields:
        str: A sequence of length n from the alphabet.
    """
    # product(..., repeat=n) is the n-fold Cartesian product: len(alphabet) ** n tuples
    for seq in product(alphabet, repeat=n):
        yield ''.join(seq)


# Example usage snippet
if __name__ == "__main__":
    alphabet = ['A', 'B', 'C']  # Example alphabet
    n = 3  # Sequence length
    print("Generated sequences:")
    for sequence in generate_sequences_generator(alphabet, n):
        print(sequence)
```

Okay, after taking a break, today I’ve been rethinking complex Lewis signaling systems and their equilibria. I make slow progress in this area by considering a number of research questions that seem trivial for a simple two-state, two-signal Lewis signaling game but become important as we delve deeper into extensions in which complex systems emerge.

I’ve made a bit of a study of the original Lewis signaling game and built some intuition by reproducing some key results. I’ve developed urn-based RL algorithms that are fast and Bayesian RL algorithms that are versatile for these tasks. I’ve spent a long time trying to figure out the complex games from a mathematical perspective.

There is a big hurdle between the original Lewis signaling game and one that can generate a complex signaling system that is a first approximation of a natural language, or even a good representation of a formal language like first-order logic. It is certainly possible to design some variants that lead to specific complex signaling systems, but a more general solution seems elusive. In this article I’ve made another step towards finding this more general solution.

Recently I also reviewed a number of talks on emergent languages. I considered the challenges they put forth, reviewed the main papers they were based on, and read many others.

This article is my first take on figuring out which modifications to the Lewis signaling game, as used in a number of research papers, resulted in emergent languages. By emergent languages we mean equilibria of the modified Lewis signaling game that have been learned by the agents through reinforcement learning.

Why are these equilibria called emergent languages?

We can start with language. Unmodified Lewis games result in learning a shared lexicon that allows the agents to successfully communicate every time. An emergent language can be imperfect, with an expected communicative success of less than 1. Emergent languages may also develop more structure than just a lexicon, and this is the main theme of this and upcoming articles. Next is the emergence part. In (De Boer 2011) the authors discuss emergence in languages in terms of self-organizing systems that operate through selection pressure, exploration, feedback, preferential attachment, etc. Though, as I pointed out, with the Lewis signaling game and decent RL algorithms a perfect signaling system is inevitable. So calling such a system emergent needs to be justified, and one should perhaps talk about emergent properties like compositionality.

This article takes a step back and reconsiders what one should expect from a game modified to create a complex signaling system. With enough insight the process may look less like emergence and more like design or engineering.

At this point I am trying to find better research questions, which have slowly been collecting in the next section.

I then go over the main terminology and main ideas that are being discussed in the context of emergent languages. This helps clear up the intellectual landscape and will help us touch base for the rest of this article.

Next I try to puzzle out how emergent languages have been generated through trial and error, and what their secret sauce is. This is primarily an exercise in hypothesis generation.

It isn’t easy to figure out complex systems like the complex Lewis signaling game in general. I’ve made some progress that way and noticed that some challenges from the simple game become advantages in the complex game. So at this point I will consider some examples and try to take them apart to see if I can get at some general principles.

Some of these are rather long and will be broken up into smaller articles that cover experiments to check the hypotheses.

Finally, I think I can make a couple of hypotheses that go beyond understanding the mechanisms needed to make emergent languages. These are more in line with the design and engineering approach.

Within the complex games we are dealing with sequences of signals based on some alphabet. Here are a few of the research questions that raise their heads as we consider the complexity of the signaling systems:

Research questions

Semantics

In the simple game the semantics (meaning) of signals is not easy to define. But the gist of it is that each symbol encodes an action that corresponds uniquely to some state. In nature the actions are real actions, and in the game they correspond to simply recognizing the original states. So the semantics of the simple games are captured by the lexicon. But how do they arise? There can be many ways (which I am exploring in a working paper). But let’s consider the answer presented by David Lewis: semantics arbitrarily emerges through spontaneous symmetry breaking.

Let’s explain a bit. In a mathematical sense, a signaling system with N states and N symbols is any one of the permutations from states to symbols together with its inverse. Taken as a set, these permutations form the symmetric group S_N, so there are N! signaling systems. Picking one is a form of symmetry breaking. But in the game we do this in steps. This also fits with the fact that one can decompose a permutation into a product of transpositions. Each time we fix a transposition we are breaking a smaller symmetry, eventually ending up with a fixed system.
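A minimal Python sketch of the step-by-step picture, assuming a signaling system for N states and N signals is stored as a permutation `perm[state] = signal`. It counts the N! candidate systems and decomposes one of them into transpositions applied to the identity assignment. The function names are hypothetical, and reading each swap as one symmetry-breaking step is an illustration of the idea above, not a model of how agents actually learn.

```python
from math import factorial
from typing import List, Tuple


def count_signaling_systems(n: int) -> int:
    """With n states and n signals, each bijection state -> signal is a signaling system."""
    return factorial(n)


def to_transpositions(perm: List[int]) -> List[Tuple[int, int]]:
    """Decompose perm (perm[state] = signal) into swaps applied to the identity assignment.

    Each swap puts at least one more state on its final signal, so at most n - 1 swaps are needed.
    """
    current = list(range(len(perm)))  # start from the identity: state i -> signal i
    swaps = []
    for i, target in enumerate(perm):
        if current[i] != target:
            j = current.index(target)  # position currently holding the wanted signal
            current[i], current[j] = current[j], current[i]
            swaps.append((i, j))
    return swaps


def apply_swaps(n: int, swaps: List[Tuple[int, int]]) -> List[int]:
    """Replay the swaps on the identity assignment to check the decomposition."""
    current = list(range(n))
    for i, j in swaps:
        current[i], current[j] = current[j], current[i]
    return current


perm = [2, 0, 1]  # state 0 -> signal 2, state 1 -> signal 0, state 2 -> signal 1
swaps = to_transpositions(perm)
print(swaps)                          # [(0, 2), (1, 2)]
print(apply_swaps(3, swaps) == perm)  # True
print(count_signaling_systems(3))     # 6
```

A 3-cycle needs two transpositions, so even the smallest non-trivial system is fixed in more than one step.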

In one sense we do the same for complex systems. But if we are interested in a self-organizing signaling system emerging, trial and error followed by arbitrary symmetry breaking only goes so far. Why is this tricky? Whatever system emerges, even if it is highly organized and structured, may have numerous degenerate systems that are isomorphic to it. In this sense nothing is different: we still go through a series of symmetry-breaking steps whose order is dictated by nature. But it seems there is a greater role for a planning step, in which the sender selects a set of symbols using a mapping that preserves the structure of the states. This type of signaling system is a group homomorphism.

So we have three paradigms in mind for the creation of semantics in the complex signaling system.

- Spontaneous symmetry breaking - that learns a system that is just a lexicon.

- Self-organizing - a blind watchmaker that can pick a structure that is a group homomorphism.

- Planning - a sender that can plan a signaling system that is a normality-preserving homomorphism.

That is, if the semantics arise via self-organization using RL, we may end up with systems that do not correspond to the different stages in the sequence of normal subgroups. And with a good algorithm we likely get the smallest symmetries most frequently and fastest, and the largest symmetries least frequently and slowest. With planning, the sender should be able to plan a signaling system that is a normality-preserving homomorphism, i.e. one that captures the structure of the states.

One point about self organization.

I envision this in a few ways.

The first is that the sender may decide to swap signals between states and jump closer to a homomorphic equilibrium. This can happen through epsilon-greedy exploration and may be reinforced over time if the outcome is better. A second is some sort of preferential attachment, or rich-get-richer, situation where the states are unevenly distributed and we use the initial bias to learn a system that has not just symmetry but a semantics for the symbols. Another way for a system to self-organize is to replicate structures through templates. These might arise from using priors in a hierarchical Bayesian learning system.

Another way the system may self-organize is if there is a bias in the states that is reflected in the rewards. This bias may be reflected in the distribution of the states or in the rewards. If the states are distributed unevenly, the system may self-organize if rewards encourage shorter messages or messages built from shorter symbols (think Morse code). One way I envision this is that the signaling system is at first a 2x2 system, and one system might arise spontaneously. Next, each of these states becomes two states with a new symmetry. If learning is hierarchical and Bayesian, the bias learned initially can be used to predispose learning towards a system with a symmetry (perhaps even if there isn’t one). When the sender sees this, he might use the old system as a template for the additional states, via a template or some prior. This could be planning, but it could also happen through some constraint. A prior may be just a bias for listing the more common sub-states first, reusing the existing symbols in a sequence. This may lead to a system that lends itself

- Using templates - reusing existing structures and teaching/learning through analogy or using an established bias.
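The Morse-code intuition can be made concrete with Huffman coding. This is a swapped-in technique, not an RL dynamic: it only shows the end point that a pressure toward short messages could push towards when states are unevenly distributed. The state names and probabilities are hypothetical, and the alphabet is assumed to be binary.

```python
import heapq
from itertools import count
from typing import Dict


def huffman_code(freqs: Dict[str, float]) -> Dict[str, str]:
    """Binary Huffman code: more frequent states get shorter messages."""
    if len(freqs) == 1:
        return {next(iter(freqs)): "0"}
    tiebreak = count()  # keeps heap entries comparable when probabilities tie
    heap = [(p, next(tiebreak), {state: ""}) for state, p in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p0, _, code0 = heapq.heappop(heap)  # the two least likely subtrees
        p1, _, code1 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in code0.items()}
        merged.update({s: "1" + c for s, c in code1.items()})
        heapq.heappush(heap, (p0 + p1, next(tiebreak), merged))
    return heap[0][2]


def expected_length(freqs: Dict[str, float], code: Dict[str, str]) -> float:
    """Average message length under the state distribution."""
    return sum(p * len(code[s]) for s, p in freqs.items())


# Hypothetical, unevenly distributed states
freqs = {"predator": 0.5, "food": 0.25, "mate": 0.125, "shelter": 0.125}
code = huffman_code(freqs)
print(code)                          # {'predator': '0', 'food': '10', 'mate': '110', 'shelter': '111'}
print(expected_length(freqs, code))  # 1.75, versus 2 for a fixed-length binary code
```

The code is prefix-free, so a receiver can tell where one message ends without a separator, which is part of what makes the short codes usable.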

All these approaches can be incorporated into planning. It’s just that a planning agent may be able to simulate many states drawn from the distribution of states and seek out a signaling system that is optimal for some criteria: faster learning through homomorphisms, better generalization, safer communication, error correction, etc.

Given all that, there could be further structures in the states that are not even captured by normal subgroups (i.e. not symmetries, but perhaps order and hierarchy). To capture these we may need better planning, or to design reward structures that allow them to emerge naturally through self-organization.

Here are some more questions on semantics:

How do semantics arise for sequences?1

- Does a simple lexicon2 suffice?

- Is an aggregation rule sufficient to create semantics? Does a simple lexicon help?

- What aggregation types lead to compositionality, entanglement and disentanglement of meaning?

- Is having a grammar3 sufficient to get a useful syntax?

- Can we rely on syntax for semantics?

- If not, how do we get a general framework for semantics?

- To what extent does pre-linguistic structure determine the ability of agents

- Will agents become great if they have greatness thrust upon them? I.e., if they get a nice signaling system early on, will they be able to extend it, or will it wither away4? 5

Are there subsets of sequences that are:

- More easily acquired by one or multiple generations of learners?

- Better suited for communication between agents? Perhaps exhibiting greater resilience to errors, reducing risk for certain signals, handling saliency, or able to compress sequences to make the best use of the communication channel?

- More able to generalize to new or unseen states (a Moran process for the alphabet)?

- A better match to represent the states?6

- Easier to interpret, translate or transfer?

Emergent languages may be subject to selection pressure when the Lewis game is composed with some external framing game. What choices are more conducive for agents to learn quickly and communicate effectively using robust learning, i.e. algorithms that lead to more stable languages whose lexicon, grammar and semantics persist over time?

- Do we need to tinker with the reward structure to realign the incentives of the agents?7

- Can these be grounded within the external framing game? 8

- resilience to distributional shifts in the states distribution. (e.g. changes in the framing game)

- resilience to co-adaptation between agents. (persistence of the lexicon, grammar, and semantics).

More crucially, what interventions can we make as designers to encourage quick emergence of a signaling system that is conducive to perfect communication, fast learning and generalization?

Can we impose additional structure on the sequences (e.g. a formal grammar that decides whether a sequence is well formed)? Does this imbue the signaling system with additional desiderata?

Natural languages develop between many agents and evolve over long time frames. (Hebrew students in primary school read the Bible, written in Hebrew thousands of years ago, using just their knowledge of modern Hebrew.) How does having many senders and receivers speed things up? I.e., what choices can we make as designers to leverage this?

Can the sender plan all this machinery in advance and hide it in the sequences, allowing the receiver to learn in the same way as in the simple game? Can the receiver infer the machinery from the data? Are there other paths to learning? What if they need to make revisions: can they handle those too?

Can we locate sets of states with symmetries for which we have more equilibria that preserve the structure of the states?

- Are subgroups enough, or are there benefits to having normal subgroups?

- How can we quantify the amount of learning needed to learn a signaling system for a given set of states?

- How can we quantify the likelihood of reinforcement, given a set of states, for signal/action and action/signal pairs?

- Can we use s-vector semantics to aid in quantifying this?

What can we say about basins of attraction and stability for equilibria in a lattice from maximally symmetric to minimally symmetric equilibria?

Are there some collections of states for which we can expect radically different equilibria to exist in the complex signaling system?

1 this is a natural question for anyone who has started learning a new language from a different language family, and in this case we may be better equipped to answer it. I’ve outlined three mechanisms; there may be more, and there may be additional subtleties.

2 semantics for atomic symbols; it kind of does in propositional calculus

3 rules imposing structural correctness on a sequence

4 cf. the erosion of the verb in Romance languages

5 this seems more of an algorithmic question about how we reinforce sub-state coding in algorithms that can generalize. cf. the section on planning

6 can we leverage representation theory to handle symmetries within the states?

7 can agents that are at odds evolve a signaling system or will deception lead to the collapse of the signaling system to perfectly pooling equilibria?

8 will we get the full benefits of signaling systems in our framing game to re-shape the over-all equilibria

So I ended up with more questions than I bargained for: questions within questions. These questions suggest new and intriguing desiderata for (complex) signaling systems, as well as some novel paths for signaling and possibly new settings for learning them.

Are Complex signaling games hard to visualize?

In reality, as I progress in writing this article and adding more examples, I keep finding more questions, and I have little in the way of answers. This is somewhat similar to how I felt at the end of my BA in mathematics. Still, I’ve made lots of progress with the Lewis game, so I do believe I can make more progress. The reality is that to make progress you need to ask the right questions. I have been lucky to be able to look at the work of many others, criticize it, and later ask different questions than they did, and so I was able to crack the issues related to the simple game. I also developed good intuitions about the simple Lewis game, and over and over I realize that the complex game is, in most respects, just the simple game.

I was looking over books this week and came across two or three by math teacher, author and blogger Ben Orlin. I felt they were too basic for me to buy, but I did get the idea that with the kind of sketches he uses I may be able to make more progress with these research questions. I confess I am not very good at visualizing things in my mind, so unless I can put them down on paper it can be a bit of a struggle to get the ideas out of my head.

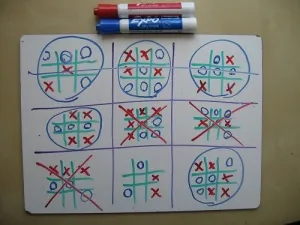

This ultimate tic-tac-toe game seems to be similar to the coordination task in the Lewis game with three states and three signals. Tic-tac-toe is zero-sum, whereas in the Lewis game the agents are trying to cooperate. But nature (chance) can have the effect that they don’t end up with a good solution, or at least take a long time to learn a good system.

One way to visualize this is as a pattern like the three-rook problem in chess: the rooks in red, with the blue corresponding to all the misses the agents made along the way. Of course, Lewis games can start on a two-by-two grid but tend to be much bigger.

A second aspect of visualizing is the learning in the game. I use urns, or matrices with heatmaps, to visualize the learning in the simple game.

Now this ultimate tic-tac-toe suggests something extra. In complex Lewis games we are working with sequences. The simplest way to think about sequences is to put each sequence along the top of the matrix and all the states along the side. Agents then look for an n-rook solution in the matrix, and that is a signaling system. However, there may be other ways to view sequences, perhaps using nesting or recursion, which is a way to establish hierarchy and organize a language.
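A minimal Python sketch of the n-rook view, assuming states label the rows and signals or sequences label the columns (the function names are hypothetical). With more sequences than states the board is rectangular, so a signaling system is a placement of non-attacking rooks: exactly one per row and at most one per column. For n states and m columns there are m!/(m-n)! such placements.

```python
from itertools import permutations
from math import perm
from typing import Iterator, List

Matrix = List[List[int]]


def is_signaling_system(matrix: Matrix) -> bool:
    """Rows are states, columns are signals (or sequences).

    True when the 0/1 matrix is an n-rook placement: one rook in every row and
    at most one in every column, so no two states share a signal.
    """
    if any(x not in (0, 1) for row in matrix for x in row):
        return False
    if any(sum(row) != 1 for row in matrix):
        return False
    n_cols = len(matrix[0])
    return all(sum(row[j] for row in matrix) <= 1 for j in range(n_cols))


def rook_placements(n_states: int, n_signals: int) -> Iterator[Matrix]:
    """Enumerate every injective state -> signal assignment as a 0/1 matrix."""
    for cols in permutations(range(n_signals), n_states):
        yield [[1 if cols[i] == j else 0 for j in range(n_signals)] for i in range(n_states)]


# 3 states against the 4 sequences of length 2 over {A, B}: AA, AB, BA, BB
placements = list(rook_placements(3, 4))
print(len(placements), perm(4, 3))                      # 24 24
print(all(is_signaling_system(m) for m in placements))  # True
print(is_signaling_system([[1, 0, 0, 0], [1, 0, 0, 0], [0, 0, 1, 0]]))  # False: two states pool on AA
```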

Say we had a sequence of three symbols we

I have been doing some similar work myself, trying to explain basic statistics visually, and I used my own urn models to work out the different Lewis algorithms. So it’s not a big surprise that, looking at his books, I got the idea that I need to make this more visual and concrete.

What I hope is that by posing these many questions I might be able to find some organizing idea to answer them. It seems that one way to move forward is to extend the PettingZoo environment and build agents that can participate in experiments that will help me answer these questions. A second challenge is to add metrics that allow easy evaluation of communication and of the robustness of the equilibria in the game. A third challenge is to test different algorithms for learning the signaling system and see how they perform in different framing scenarios. Another challenge seems to be even harder: that of interpreting the many emerging languages.

Looking for the Organizing Ideas

Earlier this week I tried to explain the main issues around compositionality to a friend. This helped to cement my understanding of the problems that researchers in this field are facing. I noticed that in many talks RL researchers like to present their work from first principles. This means most RL talks spend a big chunk of their time on introductory material. But it does allow them to talk about advanced concepts with more confidence that their audience understands what they mean.

Let’s start with these concepts that come up quite a bit in the literature and might be less intuitive to the uninitiated. I realize that big words can be intimidating so I will make an effort to explain the main concepts in a simple way and if I do use more big words in these definitions, I’ll mark them in italics to indicate that they are not essential to understanding the concept. Some readers might know the terms in italics and this might be helpful to them.

- Signaling systems - these are steady states corresponding to separating equilibria in the Lewis signaling game that allow agents to communicate about the world with each other using symbols and without making mistakes. Human languages are rife with ambiguity and do not fit into this category.

- Besides signaling systems, the Lewis signaling game has many equilibria that are less than conducive to perfect communication. These correspond to partial pooling equilibria, and we can rank them by the likelihood that the agents correctly interpret each other’s messages. We can generally pinpoint the issues as homonyms in the lexicon.

- If the agents ignore the states or the messages, the game collapses to a perfect pooling equilibrium. This is a state where agents can’t do better than random guessing.

- Even when agents are deceptive and messages are cheap talk, it is possible for agents to coordinate by looking at their actions and inferring some kind of signal from them. So even in a zero-sum game they might end up with a system of communication that is better than random guessing, cf. (Jaques et al. 2019). However, this is an aside on deception and will not be pursued further.

- Simple signaling systems - these are signaling systems that consist of just a lexicon of symbols and their corresponding states.

- Complex signaling systems - these are signaling systems that consist of a lexicon of sequences of symbols drawn from an alphabet, where each sequence represents some corresponding state.
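Ranking equilibria by how often the receiver gets it right can be sketched with a short Python function. It assumes states are equally likely unless priors are given and that an act is correct exactly when it matches the state; the three strategy profiles are toy examples for three states, not results from any experiment.

```python
from fractions import Fraction


def expected_success(sender, receiver, priors=None):
    """Probability that the receiver's act matches the state.

    sender[s][m]   = P(message m | state s)
    receiver[m][a] = P(act a | message m); act a is correct exactly when a == s.
    """
    n = len(sender)
    priors = priors or [Fraction(1, n)] * n
    return sum(
        priors[s] * sender[s][m] * receiver[m][s]
        for s in range(n)
        for m in range(len(receiver))
    )


half, third = Fraction(1, 2), Fraction(1, 3)
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Separating equilibrium (a signaling system)
print(expected_success(identity, identity))  # 1

# Partial pooling: states 0 and 1 share message 0 (a homonym), the receiver guesses between them
partial_sender = [[1, 0, 0], [1, 0, 0], [0, 0, 1]]
partial_receiver = [[half, half, 0], [0, 1, 0], [0, 0, 1]]
print(expected_success(partial_sender, partial_receiver))  # 2/3

# Perfect pooling: one message for everything, the receiver guesses at random
pooling_sender = [[1, 0, 0]] * 3
pooling_receiver = [[third, third, third]] * 3
print(expected_success(pooling_sender, pooling_receiver))  # 1/3
```

Exact fractions make the ranking easy to read: the homonym costs one third of the states half their success, and perfect pooling falls to random guessing.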

It is not simple to explain how these differ from simple signaling systems, except to say that while simple signaling systems are just a lexicon, complex ones may be able to capture some or possibly all of the nuances of a natural language.

One way is that perhaps some of the symbols comprising the alphabet might be included in the lexicon as length-one sequences, and when they are used in a sequence their semantics may be assumed to be the same as when they are used alone, unless there is an explicit entry in the lexicon that says otherwise. This would mean that the lexicon now has the potential to express the meaning of sequences that have no entry of their own. What is different from simple signaling systems is that the states may have sub-states with some structure. In such a case, if agents are able to coordinate on a signaling system that respects this structure, they may be able to learn to communicate much faster. Also, if the system evolves and new states with this structure are introduced, the agents may be able to learn to communicate about these states much faster than if the structure is ignored.

It is worthwhile to recall that these three definitions

However, in this case the lexicon might have additional structure that matches the structure of the states. Ideally this structure is codified using rules, so that using these rules the semantics of the atoms can be combined to give the semantics of the whole.
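The default rule described above (atoms keep their meaning inside a sequence unless the lexicon lists the whole sequence) can be sketched in a few lines of Python. The combination rule here is the simplest possible one, an ordered tuple of atom meanings, and the lexicon reuses the “kick the bucket” idiom from the entanglement discussion; both are illustrative assumptions, and the names are hypothetical.

```python
from typing import Dict, Tuple, Union

Message = Tuple[str, ...]
Meaning = Union[str, Tuple[str, ...]]


def interpret(message: Message, atoms: Dict[str, str], entries: Dict[Message, str]) -> Meaning:
    """Default compositional reading, unless the lexicon lists the whole sequence.

    atoms:   semantics of length-one sequences (the alphabet's own lexicon)
    entries: explicit lexicon entries for longer sequences (idioms, entangled units)
    """
    if message in entries:  # an explicit entry overrides composition
        return entries[message]
    return tuple(atoms[symbol] for symbol in message)  # combine atom meanings in order


atoms = {"kick": "STRIKE-WITH-FOOT", "the": "DEFINITE", "bucket": "PAIL"}
entries = {("kick", "the", "bucket"): "DIE"}

print(interpret(("the", "bucket"), atoms, entries))          # ('DEFINITE', 'PAIL')
print(interpret(("kick", "the", "bucket"), atoms, entries))  # DIE
```

Every explicit entry is one more thing the agents must learn jointly, so the ratio of entries to atoms is a crude indicator of how entangled a lexicon is.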

Sub-states - Pre-linguistic objects are sometimes called states, and we would like to look into these and discern whether they have structure like key-value pairs, some kind of hierarchy, some symmetries, a temporal structure, etc. If such a structure exists and follows some steady distribution, then the Lewis signaling game may have signaling systems that are conducive to perfect communication, faster learning and generalization. This is why we are primarily interested in complex signaling systems.

Framing Game - In movies we often have a framing device: some kind of story that is used to introduce the main story as a flashback. In MARL the Lewis signaling game may be a means to achieve coordination between agents in a more complex game. This bigger game is called the framing game. Though in the lifelong learning setting there may be many games taking place simultaneously.

Entanglement - when a sequence of symbols is combined in a way that its meaning is different from the sum of the meanings of its parts, we say that the symbols are entangled. For example, idioms like “kick the bucket” or “keep the wolf from the door” are non-compositional and highly entangled. We cannot assign a specific word (atomic symbol) that captures part of their meaning. This is a non-compositional way of encoding information. Although entanglement is explained here using idioms, it can happen at different levels and may be an artefact of some selection pressure in the environment to compress information about certain states. If some bound collocation is used very frequently, i.e. the two words are used together exclusively, then the speakers may gain a benefit in fitness or communication by encoding them as a single symbol. Another form of weak entanglement might be exemplified by the phrasal verbs in English. In phrases like “look out for” and “look up”, the meaning of the verb look changes in a way that is not a simple sum of its usual meaning and the meaning of the preposition that follows it. This is a cliché. But the bottom line is that entangled representations require the agents to learn the meaning of the entangled symbols together.

Disentanglement - when the meaning of symbols can be interpreted regardless of their neighbors. There are two problems with disentanglement. The first is that it is a rather vague definition, and the second is that it is not a property of natural languages. Sure, many words in a language can be assigned a definition that is independent of the other words in the language. But the lexicon is full of collocations, idioms, phrasal verbs, etc. Verbs and nouns have a stem and affixes that encode multiple units of meaning. Describing relations and using adjectives and adverbs is done using phrases, i.e. they are spread across multiple words. Another problem with this term is that the semantics of one unit isn’t a single (i.e. discrete) bit of information. Some symbols can contain more information than others, while other symbols, such as grammatical ones, carry perhaps less information but seem to operate on the others.

Compositional- when the meaning of a symbol can be decomposed into the meaning of its parts. This is a compositional way of encoding information. One system that is compositional is first order logic.

Generality - We take here the meaning from machine learning, where a model is said to generalize if it performs well on unseen data. What researchers tend to look at is this: if agents have coordinated on a signaling system that is a good representation of some subset of pre-linguistic objects, which we call the training set, how well would that system generalize to unseen objects?

Categories - In a simple Lewis game we might have multiple states assigned to a single symbol. We call this a homonym and consider it a defect. If, though, the framing game has a survival benefit to acting early, and such a homonym encodes a number of states that require the same action, then we might benefit from splitting the state into two parts: the category and the subcategory. We might gain additional benefits by using a shorter signal to encode the category and a longer signal to encode the subcategory. Categories, or in more general form hierarchies, are a ubiquitous feature of natural languages. Another facet of this idea is the use of prefixes and suffixes in natural languages. In both cases we benefit from using a shorter signal to encode some category and a longer signal to encode the subcategory. But with a prefix we perhaps prefer to know the category first, and with a suffix we might prefer to know the subcategory first.
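A category-first (prefix-style) encoding, sketched in Python under hypothetical categories, subcategories and actions: the first symbol names the category and is enough for the receiver to pick the category-level action, while the second symbol only distinguishes subcategories. The subcategory symbols are reused across categories, which is one way a short alphabet can serve a hierarchy.

```python
from typing import Dict, List, Tuple

# Hypothetical categories, subcategories and category-level actions
SUBCATEGORIES: Dict[str, List[str]] = {"predator": ["eagle", "snake"], "food": ["fruit", "nut"]}
CATEGORY_SYMBOL = {"predator": "P", "food": "F"}
CATEGORY_ACTION = {"predator": "hide", "food": "approach"}
SYMBOL_CATEGORY = {sym: cat for cat, sym in CATEGORY_SYMBOL.items()}


def encode(category: str, sub: str) -> str:
    """Category symbol first, then the subcategory's index (digits are reused across categories)."""
    return CATEGORY_SYMBOL[category] + str(SUBCATEGORIES[category].index(sub))


def early_action(message: str) -> str:
    """The receiver can act on the first symbol alone, before the subcategory arrives."""
    return CATEGORY_ACTION[SYMBOL_CATEGORY[message[0]]]


def decode(message: str) -> Tuple[str, str]:
    """Full reading: the category from the first symbol, then the subcategory within it."""
    category = SYMBOL_CATEGORY[message[0]]
    return category, SUBCATEGORIES[category][int(message[1])]


msg = encode("predator", "snake")
print(msg, early_action(msg), decode(msg))  # P1 hide ('predator', 'snake')
```

A suffix-style variant would simply reverse the order, which delays the category action until the whole message has arrived.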

Aggregation rule - an aggregation rule is a rule that takes an input with a number of symbols and reconstructs a state from them. In one sense this is what we think of as a grammar, but I’d like to keep them separate and think about the aggregation rule as something more like a serialization protocol. It takes a number of inputs, possibly from different senders and likely each with some meaning, or perhaps just a cue: a partial meaning that can’t be interpreted without the other cues. Examples of aggregation rules are

- the disjunction, leading to the bag-of-symbols

- serialization of incoming audio signals by the receiver, appending them in the order in which they are received

- serialization converting OOP objects into a sequence, perhaps for images. We can think about a recursive aggregation rule, but I’d like to call these a grammar and keep them separate. Perhaps later I’ll be able to explain why I think this is a good idea. Note that complex signaling systems do not require an aggregation rule or a grammar, but they may benefit from them. Without an aggregation rule we are dealing with a signaling system that is fully entangled, and that is little different from a simple signaling system.

tip: currently my mental models for Aggregation rules are {disjunction, serialization, prefix coding}.
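The three mental models in the tip can be compared with a small Python sketch. Reading disjunction as an unordered bag of symbols is an assumption, as are the toy alphabet and codebook; the point is only that a bag collapses messages that differ in order, serialization keeps them apart, and a prefix-free code lets a receiver split a stream without separators.

```python
from collections import Counter
from itertools import product
from math import comb
from typing import Dict, Hashable, List, Sequence


def bag_of_symbols(parts: Sequence[str]) -> Hashable:
    """Disjunction-style aggregation: order is discarded, only symbol counts remain."""
    return frozenset(Counter(parts).items())


def serialize(parts: Sequence[str]) -> Hashable:
    """Serialization: keep the parts in the order in which they were received."""
    return tuple(parts)


def distinct_messages(alphabet: str, n: int, rule) -> int:
    """How many different messages survive aggregation of all length-n inputs."""
    return len({rule(seq) for seq in product(alphabet, repeat=n)})


def parse_prefix_code(stream: str, codebook: Dict[str, str]) -> List[str]:
    """Split a concatenated stream using a prefix-free codebook (codeword -> meaning)."""
    meanings, buffer = [], ""
    for symbol in stream:
        buffer += symbol
        if buffer in codebook:  # prefix-freeness makes the first match the only match
            meanings.append(codebook[buffer])
            buffer = ""
    if buffer:
        raise ValueError(f"dangling symbols: {buffer!r}")
    return meanings


print(distinct_messages("ABC", 3, serialize))                      # 27
print(distinct_messages("ABC", 3, bag_of_symbols), comb(5, 3))     # 10 10
codebook = {"0": "predator", "10": "food", "110": "mate", "111": "shelter"}
print(parse_prefix_code("010110", codebook))  # ['predator', 'food', 'mate']
```

With three symbols and length three, serialization keeps all 27 messages apart while the bag leaves only the 10 multisets, so a bag-of-symbols rule has far less room to encode states.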

- Formal Grammar - describes which strings drawn from an alphabet are valid according to the language’s syntax. Note that the formal grammar is in charge of the syntax and not the semantics. Thus a grammar can be considered a language generator. If the speaker uses such a language generator, then the resulting language will have a syntax. Furthermore, if the grammar is unambiguous, then the language will be a signaling system. As an aside, for propositional logic a formal grammar is enough to define the semantics, as they arise directly from the syntax. For FOL we need a model theory to define the semantics. However, if we construct a lexicon with semantics from the model of the FOL, we end up with a grammar whose semantics are defined from the syntax. My mental model for a formal grammar in the Lewis game is propositional logic on its sub-states.
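To make “semantics arise directly from the syntax” concrete for propositional logic, here is a minimal Python sketch of a grammar over sub-state propositions. It assumes Polish (prefix) notation, which is unambiguous without brackets, so each well-formed message has exactly one parse tree, and the meaning of a message is computed by recursion on that tree. The atom names and connective symbols are hypothetical.

```python
from typing import Dict, Tuple, Union

# Hypothetical alphabet: atoms are propositions about sub-states, the rest are connectives.
# Grammar (Polish notation):  F -> p | q | r | N F | A F F | O F F
ATOMS = {"p", "q", "r"}
UNARY = {"N"}        # negation
BINARY = {"A", "O"}  # conjunction, disjunction

Tree = Union[str, tuple]


def parse(tokens: str, i: int = 0) -> Tuple[Tree, int]:
    """Parse one formula starting at position i; return (tree, next position)."""
    if i >= len(tokens):
        raise ValueError("unexpected end of message")
    t = tokens[i]
    if t in ATOMS:
        return t, i + 1
    if t in UNARY:
        sub, j = parse(tokens, i + 1)
        return (t, sub), j
    if t in BINARY:
        left, j = parse(tokens, i + 1)
        right, k = parse(tokens, j)
        return (t, left, right), k
    raise ValueError(f"symbol {t!r} is not in the alphabet")


def well_formed(tokens: str) -> bool:
    """Syntax check: exactly one formula, with no leftover symbols."""
    try:
        _, end = parse(tokens)
    except ValueError:
        return False
    return end == len(tokens)


def evaluate(tree: Tree, valuation: Dict[str, bool]) -> bool:
    """Semantics by recursion on the parse tree (compositional by construction)."""
    if isinstance(tree, str):
        return valuation[tree]
    if tree[0] == "N":
        return not evaluate(tree[1], valuation)
    left, right = evaluate(tree[1], valuation), evaluate(tree[2], valuation)
    return (left and right) if tree[0] == "A" else (left or right)


tree, _ = parse("ApNq")
print(tree)                                     # ('A', 'p', ('N', 'q'))
print(evaluate(tree, {"p": True, "q": False}))  # True
print(well_formed("ApNq"), well_formed("pq"))   # True False
```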

The main takeaway is that if we generate the lexicon without using an unambiguous formal grammar, we are putting the syntax of the language at risk. And if we don’t have a lexicon for the alphabet, we may be putting the semantics of the language at risk.

An interesting issue is that we can have different likelihoods of different constructs depending on what we include in our Lewis game extension…

We might want to find a metric that measures the use of categories in a signaling system.

Another couple of ideas.

We can have examples of complex signaling systems that are on a spectrum from being fully compositional to being fully entangled.

Another thing we can use is a complex compositional language for pre-linguistic objects that don’t have a simple disentangled structure and don’t fit the positivist view of objective, orthogonal, disentangled dimensions that can be measured with full certainty. I.e., we can use an easy-to-learn compositional complex signaling system to encode pre-linguistic objects that are not easy to interpret, like arrays of raw pixels. But we might also be less likely to learn such a language if this is our only input. We would have to come up with it by chance.

One way this might happen is that we could learn a grammar (classifier) that

Transcripts:

Baroni Talk

I’ve had this idea about complex signaling systems. Unfortunately, I wasted a lot of time installing a transcription app and lost focus. Let’s see.

I’m trying to refocus and get back into it…. Yes, I think I got it.

So yesterday I talked with my friend Eyal, which got me rethinking complex signaling systems and trying to understand how, despite many perhaps misguided attempts, a growing number of researchers have been able to come up with agents that use complex and even compositional signaling systems, and how these agentic systems seem to be a solution to a problem that is hard to frame.

So I walked over my thinking about the baroni paper. I got to realize that these results are problematic. That it is not so easy to explain why. But to get started some of thier ideas and metrics are borrowed from another domain – representation learning. The ideas they were thinking seem as best as I can intuit as this was not adequately motivated of explained in the papers mentioned in the talk – has to do with embeddings. Embeddings map the space of words sequences and thier context in a language think Gazillion dimensions to a space of 300 dimensions. The way to intuit this is that we do a non linear PCA on the high dimensional space and then tak the leading 300 dimensions. This way we get to keep the most important information and throw away the rest. The main problem here is that each dimension in the embedding space is a some summary of a gazillion dimensions in the original space. We can’t say what any one dimension signifies. That is similar to an entangled state in quantum physics. What embeddings tell us in deep learning is that these distributed represeantation are very powerful. They are able to capture the meaning of words and phrases and even sentences. But they are not interpretable. A lot of people, me included wanted to enjoy the power of embeddings but to have them interpretable. It turns out that not only that this is hard but that it is a trade off. The more interpretable the less powerful. The more powerful the less interpretable. What a linguist might want is to break the embeddings into dedicated regions dealing with phonology, morphology, syntax, semantics, pragmatics etc and perhaps also some of the regions overlapping to represent the interfaces between these regions. It would be even better If specific dimensions represented specific features. Some ideas were to use algorithms that would learn to extract such representation from the embeddings or learn them direcrly from the high dimensional space. 
There are also ideas about learning semantic atoms using non-negative matrix factorization, and others about distilling word-sense-level embeddings from word-level embeddings. But the main idea is that we want a representation that is interpretable, so that we can do more with it. Given all this, learning disentangled representations seems an interesting goal.

What's the problem, then? Emergent languages that are not interpretable, the main pain point of Baroni's group, are nothing like embeddings. The Lewis signaling game at its core is just a way for agents to coordinate on a shared convention. The default setup leads the sender to map each pre-linguistic object to a symbol and the receiver to learn the inverse mapping. If they coordinate on a signaling system they get an expected payoff of 1; if the receiver can do no better than guess, the expected payoff drops to 1/|\mathcal{S}|. The game defaults to learning a tabular mapping without any ability to generalize: what is learned for N states says nothing about the next one. To get generalization, the game needs to be modified.
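To make the payoff claim concrete, here is a minimal sketch, assuming pure strategies stored as dicts, equiprobable states, and a payoff of 1 for a correct act and 0 otherwise. The names `expected_payoff`, `signaling_sender` and so on are illustrative only.

```python
from fractions import Fraction

def expected_payoff(sender, receiver, states):
    """Expected payoff of a pure sender/receiver pair in a Lewis signaling game.

    sender:   dict mapping each state to a signal
    receiver: dict mapping each signal to an act (a guessed state)
    States are assumed equiprobable; a correct act pays 1, otherwise 0.
    """
    hits = sum(1 for s in states if receiver[sender[s]] == s)
    return Fraction(hits, len(states))

states = [0, 1, 2]

# A signaling system: a bijection on the sender side and its inverse on the receiver side.
signaling_sender = {0: 'a', 1: 'b', 2: 'c'}
signaling_receiver = {'a': 0, 'b': 1, 'c': 2}

# A pooling strategy: one signal for every state, so the receiver can only guess.
pooling_sender = {0: 'a', 1: 'a', 2: 'a'}
pooling_receiver = {'a': 0, 'b': 0, 'c': 0}

print(expected_payoff(signaling_sender, signaling_receiver, states))  # 1
print(expected_payoff(pooling_sender, pooling_receiver, states))      # 1/3
```

Because both strategies are plain lookup tables, nothing learned about states 0 to 2 says anything about a new state 3, which is the lack of generalization described above.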

One simple way to do this is to use a bottleneck in the form of a small alphabet of signals. The message is then split up and each part is encoded separately (either by different agents, or by one agent sending the state as a sequence over a restricted alphabet). Chapter 12 of (Skyrms 2010) covers this. The problem is that this research does not clearly explain what is going on: how can agents learn to break down some arbitrary state, how do they serialize this state into a sequence, and how do they decode it?

Some basic checks about signaling systems indicate that there can be massive speed-ups in learning, and an ability to generalize, if agents can learn nicely composable signaling systems. This needs spelling out, though: it can only happen if they can break down the state into sub-states with some structure, and if the sequences used faithfully represent this structure.
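A minimal sketch of the speed-up and generalization argument, assuming a state made of independent attributes (here three hypothetical attributes with ten values each) and a sender that uses one dedicated slot per attribute. A holistic code needs one learned entry per whole state, while a compositional code needs one entry per attribute value and still covers every combination.

```python
from itertools import product

# Hypothetical structured state space: 3 attributes with 10 values each.
n_attributes, n_values = 3, 10
alphabet = [chr(ord('a') + v) for v in range(n_values)]  # symbols 'a'..'j'

holistic_entries = n_values ** n_attributes      # one entry per whole state
compositional_entries = n_attributes * n_values  # one entry per (slot, value)
print(holistic_entries, compositional_entries)   # 1000 30

# Per-slot lexicons; here they are given, in a game they would have to be learned.
lexicons = [{v: alphabet[v] for v in range(n_values)} for _ in range(n_attributes)]
inverse = [{sym: v for v, sym in lex.items()} for lex in lexicons]

def encode(state):
    """Serialize a state tuple as a sequence, one symbol per attribute slot."""
    return ''.join(lex[v] for lex, v in zip(lexicons, state))

def decode(message):
    """Invert encode by reading each slot with its own lexicon."""
    return tuple(inv[sym] for inv, sym in zip(inverse, message))

# 30 lexicon entries are enough to round-trip all 1000 states.
assert all(decode(encode(s)) == s for s in product(range(n_values), repeat=n_attributes))
print(encode((3, 0, 9)))  # daj
```

The slot structure is what makes the code a homomorphism of the state structure; a holistic code would have to coordinate on each of the 1000 states separately.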

Think homomorphism. (Skyrms 2010) also discusses agents learning ‘logical’ representations in variants of the game, or at least representations grounded in a simple logical scheme.

9. Competing signaling systems via inversion: for propositional logic (PL), a system with alphabet {NOR,A,B,C,T} or {NAND,A,B,C,T} should emerge before {NOT,AND,A,B,C,T} or {NOT,OR,A,B,C,T}, which in turn should arise faster than {NOT,OR,AND,IFF,IMP,A,B,C,T,F,(,)}, even though all are equivalent. The first ones need to learn only one binary function and will start to reinforce much faster than the ones with two, or than the full system with (422)+1 options, whose formulas take the form NOT?(NOT?A {OR|AND|IFF|IMP} NOT?B). That last system should emerge much later: coordination is slower because there are many options to try, and reinforcement is slower because repeated successes are far less likely to arise by chance.
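The claim that these systems are equivalent rests on functional completeness: NAND alone, and likewise NOR alone, can express NOT, AND and OR. A quick truth-table check, as a sketch:

```python
from itertools import product

def nand(a, b):
    return not (a and b)

def nor(a, b):
    return not (a or b)

# NOT, AND, OR built from NAND alone
def not_nand(a):
    return nand(a, a)

def and_nand(a, b):
    return nand(nand(a, b), nand(a, b))

def or_nand(a, b):
    return nand(nand(a, a), nand(b, b))

# NOT, AND, OR built from NOR alone
def not_nor(a):
    return nor(a, a)

def or_nor(a, b):
    return nor(nor(a, b), nor(a, b))

def and_nor(a, b):
    return nor(nor(a, a), nor(b, b))

# Check every row of the truth tables
for a, b in product([False, True], repeat=2):
    assert not_nand(a) == (not a) and not_nor(a) == (not a)
    assert and_nand(a, b) == (a and b) and and_nor(a, b) == (a and b)
    assert or_nand(a, b) == (a or b) and or_nor(a, b) == (a or b)
print("NAND alone and NOR alone each express NOT, AND and OR")
```

The price of the smaller alphabet is longer formulas: AND costs three NANDs, so the one-connective systems trade a simpler coordination problem for longer messages.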

Again, we don't know enough to say whether, for some structured state space, there are easy-to-learn equilibria. What seems self-evident is that as state spaces grow, any signaling system becomes very rare among the possible equilibria. That complex signaling systems do emerge, and that they are composable, suggests that there may be a mechanism that can invert the population of equilibria (see note 9), at least for signaling systems, so that smaller alphabets may reinforce better than ones with many symbols. Another aspect is that, since only a few sequences in PL are wffs, most sequences should be rejected. If we use reward shaping to learn from negative outcomes, we might come up with algorithms that can reinforce at almost every turn.
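To see how sparse well-formed formulas are, here is a sketch that enumerates every string of length 5 over a small hypothetical alphabet {A, B, ¬, ∧, (, )} and keeps those accepted by a recursive-descent parser for the grammar F := A | B | ¬F | (F∧F). The grammar and alphabet are assumptions chosen for illustration; this is not the checker defined later in the post.

```python
from itertools import product

ATOMS = 'AB'
ALPHABET = 'AB¬∧()'

def parse(s, i=0):
    """Parse one formula starting at index i; return the index after it, or -1."""
    if i >= len(s):
        return -1
    c = s[i]
    if c in ATOMS:
        return i + 1
    if c == '¬':
        return parse(s, i + 1)
    if c == '(':
        j = parse(s, i + 1)
        if j == -1 or j >= len(s) or s[j] != '∧':
            return -1
        k = parse(s, j + 1)
        if k == -1 or k >= len(s) or s[k] != ')':
            return -1
        return k + 1
    return -1

def is_wff(s):
    """A string is a wff if one formula consumes all of it."""
    return parse(s) == len(s)

strings = [''.join(p) for p in product(ALPHABET, repeat=5)]
wffs = [s for s in strings if is_wff(s)]
print(len(wffs), 'of', len(strings))  # 6 of 7776
print(wffs)
```

Under this toy grammar, a receiver that rejects everything else would get an informative negative outcome on the other 7770 strings, which is where the reward-shaping idea above could pay off.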

Despite lots of talk, this does not seem relevant to the problems they were facing. Most of what happens in the Lewis signaling game is not really about entanglement and disentanglement. Explaining compositional versus non-compositional signaling, and generality, in a nice concise way deserves a good write-up, inside the Baroni post and maybe inside the guide to the perplexed about compositionality. In fact, I am not sure Baroni deserves this, since his work seems to make things more confusing. Then there is the work of another researcher (her name was lost in my notes), and thinking about her work got me to make a bit of progress, I think. Here is what we could say.

The Question of Grammar

This bit of thinking is about the DeepMind emergent languages talk and paper (Lazaridou et al. 2018) by Angeliki Lazaridou, Karl Moritz Hermann, Karl Tuyls and Stephen Clark, titled “Emergence of Linguistic Communication from Referential Games with Symbolic and Pixel Input”. It seems to have been rather influential in the field of emergent languages, and while this paper seems fairly sound, some of the follow-up work seems less so.

What I noticed there is that they talk about emergent languages, while I am talking about re-engineering the Lewis game: changing its equilibria so as to support complex signaling systems. This is quite tricky, particularly when we have both simple and complex signals, plus a third kind of thing, the so-called aggregation. And there is yet another thing, which is grammar. Are grammar and aggregation the same thing? I don't think so. I think they are related, but only coincidentally. Aggregation, as Skyrms describes it, can be conjunctive, which leads to a weaker representation, or sequential, which leads to a richer representation. That said, it doesn't necessarily lead to a grammar, although it is definitely sufficient to act as a kind of grammar.

What is an example of a concatenative grammar? Hungarian is an agglutinative language: you simply add morphological units to form a very powerful representation of a word, one which is able to resist changes in word order. That doesn't mean order carries no extra meaning (for example, the word in the focus position), but once the words carry all the affixes that Hungarian uses, it is quite possible to shuffle them without disrupting the meaning encoded in the morphology. So that is the power of the sequence, I suppose, though the sequence also contains these special morphological markers. We could, however, consider these markers as just certain words which we put in certain positions, so we are still talking about the idea of a concatenative grammar. Another aspect of a concatenative grammar is that it can be ordered or unordered. If things are marked, it can be resistant to reordering, so the meaning is preserved. If they aren't, as in the examples from Old Turkish or Babylonian in The Unfolding of Language, you might have a very long sequence of slots, and we should be able to compose into these slots a whole wealth of words, a whole wealth of meaning. I think we have something similar in German, where we basically assemble a whole sentence by gluing bits and pieces together into one long word. This actually makes sense if you think about societies in which nobody wrote down the language: if you don't write it down, it is all oral, and we could think of a word as something that is just a sequence. So all of this tries to highlight that we can have grammars just by concatenating morphemes or lexemes, and that is what we really call compositionality, or at least a very basic form of it.

So what is grammar, if we have just compositional rules? I would say grammar is the different ways we use to create and isolate bits of meaning. It seems to be a hard thing to define properly, but the kind of idea I'm thinking about is a recursive set of rules. In formal languages, which is the direction I am bent on, a grammar usually defines, using recursion, a set of sequences. You could call them sets, but they are usually ordered, so we can call them sequences. These sequences allow us to take a finite alphabet and create an infinite number of messages. How do we do that? Quite simply, we have an operation on a simple set where we take the power of the set, the power set, which is all the pairs, I think, and all the triplets, and so on.
Basically, all the subsets, rather: the power set is the set of all subsets. If we look at all the subsets, we can create bigger, more complex constructs. (Strictly, the set of all finite sequences over an alphabet is its Kleene closure, not its power set: the power set of a finite alphabet is finite, while the set of sequences is infinite.) If we look at the sequences we can form, we can also have a grammar for that.

One thing human grammars have is the notion of agreement. I see agreement as serving two purposes. One is to maintain a correlation between lexical units that have a relation, showing us that two lexical units, or even two phrases, are related through a correlation of gender, number and so on, whatever features the agreement keeps track of. That way we can poke additional structures into the slots between them, and the agreement allows us to maintain the relationship. This of course breaks down if we poke in an arbitrarily large number of phrases: if we put in a very big tree, agreement isn't effective. Another way I look at it is in terms of pragmatics. On the pragmatic side of communication, agreement is just redundancy, which allows us to do error correction, I suppose. In the big picture, though, these two phenomena, agreement and error correction, are dual aspects of the same thing. The markers we put in also make the language more resistant to errors, and they help us disambiguate certain messages through these types of agreement. We usually don't need them if there is less chance of an error, or less chance of confusing a pronoun that could refer to multiple bits and pieces of the sentence. We might not need to mark such a thing with agreement to understand what's going on, and we might end up with a determiner which is unmarked. So what's the difference between 'a' and 'the', or 'that'? Definite and indefinite.
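The gap between the power set of an alphabet and the set of sequences over it is easy to check numerically. A sketch, assuming a three-symbol alphabet: the power set has 2^|A| members no matter what, while the number of sequences keeps growing with the maximum length allowed.

```python
from itertools import chain, combinations, product

alphabet = ['A', 'B', 'C']

def power_set(symbols):
    """All subsets of `symbols` (unordered, without repetition)."""
    return list(chain.from_iterable(combinations(symbols, r) for r in range(len(symbols) + 1)))

def sequences_up_to(symbols, max_len):
    """All non-empty sequences (ordered, with repetition) of length <= max_len."""
    return [''.join(p) for n in range(1, max_len + 1) for p in product(symbols, repeat=n)]

print(len(power_set(alphabet)))  # 8
for max_len in (1, 2, 3, 4):
    # prints: 1 3, then 2 12, then 3 39, then 4 120
    print(max_len, len(sequences_up_to(alphabet, max_len)))
```

Only the second construction gives a finite alphabet an unbounded supply of messages, which is what a recursive grammar then carves up.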
All right, 'this' or 'that'. These unmarked determiners don't reflect number, they don't reflect gender, and so on. On the other hand, we do have marked forms, such as 'mine'. These are markers, pronouns or particles, that mark possession; they are marked for number and for person, and in Hebrew they are also marked for gender. So they get marked with a lot of things, and that makes it much easier to discuss something as ambiguous as possession. In Hungarian we can mark both the possession and the possessor using additional information stored in the suffix, along with singular and plural. They mark that, but they don't mark gender. Enough said about that.

So now we've discussed grammar and tried to define it and its roles. The formal role is that it allows us to turn a finite set of symbols into an infinite set of messages, without necessarily saying anything about their semantics. Although if we look at, say, the grammar of first-order logic, it does allow us to define by induction the logical meanings of arbitrarily long phrases. So it not only allows us to generate sequences, but also to propagate the atomic meanings into more complicated, aggregate meanings. This is more complicated than simple aggregation. In simple aggregation we're saying something after something after something; we just have a list. In logic we have AND and NOT, with brackets, and with these we can build very specific functions: truth functions. And truth functions correspond to a big chunk of semantics, no doubt about that.
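The inductive definition of meaning described above can be sketched as a recursive evaluator: the truth value of a compound formula is computed only from the truth values of its parts. Formulas are represented as nested tuples, an assumption made for brevity, with NOT and AND as primitives and OR derived from them via a hypothetical helper `make_or`.

```python
def evaluate(formula, valuation):
    """Compute the truth value of a formula from the values of its atoms.

    formula:   an atom name such as 'A', or ('not', f), or ('and', f, g)
    valuation: dict mapping atom names to True/False
    """
    if isinstance(formula, str):
        return valuation[formula]
    op, *args = formula
    if op == 'not':
        return not evaluate(args[0], valuation)
    if op == 'and':
        return evaluate(args[0], valuation) and evaluate(args[1], valuation)
    raise ValueError(f"unknown connective: {op}")

def make_or(f, g):
    """f OR g defined as NOT(NOT f AND NOT g)."""
    return ('not', ('and', ('not', f), ('not', g)))

# ((A AND B) OR C)
phi = make_or(('and', 'A', 'B'), 'C')
print(evaluate(phi, {'A': True, 'B': False, 'C': True}))   # True
print(evaluate(phi, {'A': True, 'B': False, 'C': False}))  # False
```

The recursion is the compositional part: the meaning of phi never looks at anything except the meanings of its immediate sub-formulas.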
So, if you want to look at grammar, starting with a formal language is not the worst thing. But if we're thinking about emergent languages, we may be interested in having some additional useful properties, and this is what I collect in the desiderata. In this space we should definitely consider the issue of learnability. Learnability becomes paramount when we have generational collectives of agents, basically when we want to transfer the ability to communicate between agents. Even if we don't have generations, even if we have continuing tasks (which is similar to what happens in the real world), we would still like to be able to handle distributional shifts within the language. If you and I talk for a long time, our language will adapt: we'll have an accent, then a dialect, and pretty soon, if we ask someone about this and that, other people won't know what we are talking about. We'll have our own jokes, our own idioms and made-up words, as well as words that other people know but that now have completely new meanings. That is the distributional shift I'm referencing, and we'd like to be able to keep communicating with other agents despite it. Distributional shift is also annoying for the researcher. Say you understood what the language means now; what happens after the agents have had another half a million turns playing some kind of game? The language might now mean other things: the words and phrases might be the same but the semantics have changed, or the grammar might have changed. That's a big headache.

One of the algorithms I came up with for languages was to try swapping out pairs of signals and their meanings based on their distribution. This gets us to a point where we can have shared bits and pieces. These shared bits and pieces would then be able to spread, and then we would have compositionality. Basically, shared bits and pieces become prefixes, and prefixes become categories, or vice versa: first semantic categories, which maybe later become grammatical categories, parts of speech and so on. That way we might be able to evolve rules that simplify learning and let the language become fixed. So the issue is not that the language needs to evolve, but co-adaptation: we'd like certain bits and pieces of the language not to change so much. We'd like to find an optimal grammar and then stick with it. We'd like to have closed categories that don't change. We'd like to use affixes, prefixes and so on efficiently, and stick with those. Otherwise our verbs might be more prone to withering. To make the language learnable, it is much simpler if the verb and noun morphology is regular and more or less constant. Then we can plug in a stem or a root and get a new verb or a new noun, or maybe even both. All we have to do is learn the meaning of the stem, more or less, and we understand all the rest. That's the power of compositionality.

One old idea I had about trying to induce morphologies in an unsupervised or semi-supervised way was to find a good fit between morphological tables that follow the same structure. This is something I don't want to explore here, but this kind of loss might be useful to encourage the agents to coordinate on a language with a given structure. A further refinement of this idea, which I've had more recently, is to consider that this morphology, to a high degree, mirrors a group action. So we can define this loss in terms of a homomorphic loss, a loss that preserves structure. But that is pretty much just handling structure. We could probably do even better if we also preserve distances between semantic units. I suppose such a structure would also be a homeomorphism, a topology-preserving map. Something to think about.

And yet these are approximate. Why is that? Because of irregularities, which are usually deviations from this group structure, from the topology, from the simple distributional forms. For compositionality we generally assume independence. But natural languages are not uniformly distributed, and their structures often show conditional probabilities, so they don't follow an independent structure. These are aspects of the language we usually want to preserve. In other words, it is often to our benefit to have these oddities in the language. Why? Because they often, though not always, encode the most frequent elements: irregular verbs such as 'to be' and 'to do'. In French these are irregular, and I think in English too. That makes learning harder, but it is only a small chunk, and it probably makes error correction better, although I'm sure we could do better than that. So there are all sorts of trade-offs in play here. One thing you can be sure about is that coming up with a good grammar isn't necessarily very difficult, but it appears to be a product of planning. Once you have the language laid out, in other words once you expand the rules of the grammar, you have vast constructs, and we see that changes in these constructs are often localized.
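A minimal sketch of the homomorphic-loss idea, under stated assumptions: meanings are (stem, plural) pairs, the group acting on them is Z_2 toggling singular and plural, and the matching action on forms adds or strips a hypothetical plural suffix 's'. The loss is the fraction of meanings where "act, then encode" disagrees with "encode, then act", so an irregular form shows up directly as a violation.

```python
def act_on_meaning(meaning):
    """Group action on meanings: toggle number (Z_2)."""
    stem, plural = meaning
    return (stem, not plural)

def act_on_form(form, suffix='s'):
    """Matching action on forms: add or strip the plural suffix.

    Assumes no singular form ends in the suffix (true for the stems below).
    """
    return form[:-len(suffix)] if form.endswith(suffix) else form + suffix

def homomorphism_loss(encode, meanings):
    """Fraction of meanings where encode(g . m) != g . encode(m)."""
    violations = sum(encode(act_on_meaning(m)) != act_on_form(encode(m)) for m in meanings)
    return violations / len(meanings)

stems = ['cat', 'dog', 'child']
meanings = [(stem, plural) for stem in stems for plural in (False, True)]

def regular(meaning):
    stem, plural = meaning
    return stem + 's' if plural else stem

IRREGULAR = {('child', True): 'children'}

def with_irregular(meaning):
    return IRREGULAR.get(meaning, regular(meaning))

print(homomorphism_loss(regular, meanings))         # 0.0
print(homomorphism_loss(with_irregular, meanings))  # 0.3333333333333333
```

One irregular plural breaks the equivariance for both members of its pair, which matches the point above that irregularities are deviations from the group structure.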
This means it is very hard to change the grammar: to change the grammar, you would have to change all the applications of all these constructs for that particular aspect of the rule. There might be subtle changes that don't make big changes. Usually, natural language grammars are nothing like the well-behaved formal grammars we see, and I think this is primarily because of what I just described: it takes a big effort to make these changes, because you have to get them to happen in the heads of most speakers, or at least in some localized area where people are talking. But they do happen. They happen because evolutionary forces act over certain time frames, because the original system might be an inefficient equilibrium in terms of signaling systems, and because each interaction entails a tiny amount of friction. If so, the changes are likely to happen in a way that follows some kind of path of least resistance: a domino effect, a chain reaction. Consider the vowel shifts. How can that possibly happen? One wonders. I don't know, but they did happen. One would think this is something that happens step by step. Certainly words with a dominant meaning resist changes that would make them conform with the grammar, until perhaps that word is replaced with another word, or the use of that term falls into disuse, allowing us to assign a new meaning or to reduce the variation. In Hebrew, something even more drastic has happened: over time certain phonemes have merged. Where Arabic has three different sounds, Hebrew has only one, and this has caused a number of verbs to become conflated. You can only imagine the chaos this has created in terms of meanings. If only we could take a step backwards, teach everybody how to pronounce these sounds in three distinct ways, and then teach everybody which verb fits with which sound, we could make the language much less ambiguous. But at the same time it would probably be harder to learn. So if there is one conclusion from this rather long story, it is that all these things represent trade-offs, and every language is an equilibrium corresponding to these trade-offs, one that appears stable.
It may not change so much, but you can see from the research by Skyrms and others that most of the equilibria, at least in the simple models, are unstable or semi-stable, so the language will shift over time. All you need is the right kind of pressure, I suppose.

Enough said. I've covered a lot about aspects of grammars; now let's get back to signaling systems. In the simplest games there is a bunch of very specific equilibria possible: essentially infinitely many, and yet very specific. What do I mean? There are three types of pure equilibria: pooling equilibria, which are completely useless; partial pooling equilibria, which are imperfect; and separating equilibria, the so-called signaling systems. That holds at least for the basic games with simple states. If we want something more sophisticated, we need to look for some other game; we usually call this a modification. What we should ask is: does our modification allow us to have new types of equilibria?

So, the big question again. We do see compositional languages using complex signals, and not just by coincidence. If we make some change to the semantics of the game, do we get new kinds of equilibria? Furthermore, do these equilibria follow, at least approximately, some grammar? We would really like states that are close together to be expressed by messages that conform, at least approximately, to some grammar. Are some grammars more likely to emerge than others? Why do we ask this? Because we don't know a nice and simple way to extend the game to get this.

I think you would apply this to the agents created by such an algorithm. These agents could be an order of magnitude better at transfer: their policies, values and action values would be expressed in terms of a language designed around really interesting objects, and they would be able to transfer, to have a translation between languages. That is just one problem. Another is that we've seen in reinforcement learning that when you run the same algorithm with different seeds, just by chance we might get low performance on one run and high performance on another. When we talk about language, we talk about states that are unstable equilibria. If we understand these complex signaling systems, we should also be able to figure out how to create very many designs, including ones with greater stability. If we are able to bring this stability to our policy, value function and action-value function, because we now have more abstract representations, they are probably going to be more powerful.

So, given all this, what did these research papers change to get composable, complex languages to work? We can easily show that languages with these properties, the ability to capture very many concepts using a relatively small alphabet, are very easy to learn and very easy to teach. So with all these benefits, maybe some of them also preserve distance and structure, and maybe they are also easier to translate. They would then be the best facilitators of transfer learning. Maybe they provide groundings for many things that might not be learnable in one setting: if you are working with Space Invaders you might have one kind of language, and if you are working with soccer you might have other kinds of concepts that are useful. We would like to find some kind of core, like a human language, which describes states well enough and also has properties such as learnability and stability. And all of this might follow from one, two, maybe just one geometrical rule.
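The three kinds of pure strategy profiles distinguished above can be told apart by looking at the sender alone. A sketch, assuming a pure sender given as a dict from states to signals: if every state gets the same signal the sender pools, if every state gets its own signal it separates, and anything in between is partial pooling. Whether the profile is an equilibrium also depends on the receiver best-responding, which this check does not test.

```python
def classify_sender(sender):
    """Classify a pure sender strategy (dict: state -> signal)."""
    n_states = len(sender)
    n_used = len(set(sender.values()))
    if n_used == 1:
        return 'pooling'
    if n_used == n_states:
        return 'separating'
    return 'partial pooling'

print(classify_sender({0: 'a', 1: 'a', 2: 'a'}))  # pooling
print(classify_sender({0: 'a', 1: 'a', 2: 'b'}))  # partial pooling
print(classify_sender({0: 'c', 1: 'a', 2: 'b'}))  # separating
```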

The second question seems to be: in what way is the referential game different from the signaling game?


I postulate that this is due to two changes and, more significantly, to the way these two things come together. The alternative hypothesis is that just one of them suffices for the emergence of a signaling system.

- The classifier.

- The message generator, which, as described in (Lazaridou et al. 2018), can generate any sequence from a given alphabet of symbols.
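One round of a referential game built from these two parts can be sketched as follows. This assumes a fixed, hypothetical lexicon in place of learned agents (in Lazaridou et al. 2018 both agents are neural networks): the message generator turns the target's attributes into a sequence, and the receiver acts as a classifier that scores each candidate against the decoded message and picks the best one.

```python
import random

# Hypothetical objects described by (color, shape) and a fixed lexicon.
LEXICON = {'red': 'r', 'blue': 'b', 'circle': 'c', 'square': 's'}
DECODE = {sym: word for word, sym in LEXICON.items()}

def sender(target):
    """Message generator: one symbol per attribute of the target."""
    return ''.join(LEXICON[attr] for attr in target)

def receiver(message, candidates):
    """Classifier: pick the candidate sharing the most attributes with the message."""
    meaning = {DECODE[sym] for sym in message}
    scores = [len(meaning & set(c)) for c in candidates]
    return scores.index(max(scores))

rng = random.Random(0)
objects = [(c, s) for c in ('red', 'blue') for s in ('circle', 'square')]
candidates = rng.sample(objects, 3)  # the target plus two distractors
target_idx = rng.randrange(3)
guess = receiver(sender(candidates[target_idx]), candidates)
print(candidates, target_idx, guess, guess == target_idx)  # a correct guess earns reward 1
```

The point of separating the two parts is that the classifier only has to rank a few candidates, while the generator is free to produce any sequence over its alphabet.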

If these symbols are already imbued with semantics from a prior Lewis game, then what we now have is a complex signaling system. If the symbols are not imbued with semantics, we can still use the Lewis game to imbue them with semantics. It is uncertain, though, whether these semantics will differ from those produced by a simple Lewis game.

Initially I liked the first hypothesis, as it mirrored my thinking of using an extensive game with two steps as my modified Lewis game. Soon, though, I had a small epiphany and decided to test the second hypothesis, which is more in line with the reductionist approach I have espoused all along, particularly as it is a smaller and less powerful extension than the first.

Now we can think of the Lewis signaling game as using a generator with some set of symbols and sequences of length 1.

Moreover, we might want to shuffle the sequences so they come out in arbitrary orders, allowing the Lewis game to unfold in all possible ways, i.e. all forms of symmetry breaking. Alternatively, we might want to enumerate the different equilibria and use only one canonical order.
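A sketch of the two options above, assuming the length-1 case (the plain Lewis game) and a seeded `random.Random` for reproducibility. Keeping the order produced by `itertools.product` picks one canonical signaling system; shuffling gives another, equally good assignment of signals to states, i.e. a different symmetry breaking.

```python
import random
from itertools import product

def sequences(alphabet, n):
    """All sequences of length n over the alphabet, in itertools.product order."""
    return [''.join(p) for p in product(alphabet, repeat=n)]

states = ['s0', 's1', 's2']
signals = sequences(['A', 'B', 'C'], 1)  # the plain Lewis game: length-1 messages
print(signals)                           # ['A', 'B', 'C']

canonical = dict(zip(states, signals))   # one canonical signaling system

rng = random.Random(42)
shuffled = signals[:]
rng.shuffle(shuffled)
random_system = dict(zip(states, shuffled))  # another signaling system
print(canonical)
print(random_system)
```

With |S| states there are |S|! such bijections, so the canonical order is a way of naming one representative instead of exploring all of them.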

Some sequences and their interpretations

Here is some code that takes an alphabet \mathcal{A} and generates all the sequences \mathcal{L} of length exactly N.

Ternary Sequences

```python
# Collect all length-3 sequences over the ternary alphabet {A, B, C};
# generate_sequences_generator is defined earlier in this post.
lang = []
print("Generated sequences:")
for sequence in generate_sequences_generator(['A', 'B', 'C'], 3):
    lang.append(sequence)

# print lang 9 sequences per line (27 sequences -> 3 lines)
print("\n".join([",".join(lang[i:i+9]) for i in range(0, len(lang), 9)]))
```

```
Generated sequences:
AAA,AAB,AAC,ABA,ABB,ABC,ACA,ACB,ACC
BAA,BAB,BAC,BBA,BBB,BBC,BCA,BCB,BCC
CAA,CAB,CAC,CBA,CBB,CBC,CCA,CCB,CCC
```

Interpreting the sequences.

In this case we might interpret each sequence as a message indicating that sub-state A, B, or C took place. If this is their meaning, we need to remove duplicates and sort the symbols to decode it. We also have many alternative messages for equivalent states, which would slow down the learning process.
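The redundancy of this "set of sub-states" reading can be counted directly. A sketch: decode each of the 27 ternary sequences by discarding order and repeats, then group the sequences by meaning.

```python
from collections import Counter
from itertools import product

messages = [''.join(p) for p in product('ABC', repeat=3)]

def decode_as_set(message):
    """Read a message as the set of sub-states it mentions (order and repeats ignored)."""
    return frozenset(message)

synonyms = Counter(decode_as_set(m) for m in messages)
print(len(messages), len(synonyms))  # 27 7
for meaning, count in sorted(synonyms.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(''.join(sorted(meaning)), count)
```

Each single sub-state has exactly one message, while each pair and the full triple have six synonymous messages apiece; that is the redundancy the learners would have to coordinate over.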

Another way to go is to treat A, B, C as 0, 1, 2 and interpret each sequence as a ternary number. Now each sequence is unique and can be interpreted as corresponding to some state. If we again had three binary sub-states, we could use this system of 3^3 = 27 messages to encode the 2^3 = 8 states as follows:

A in the first position indicates True for the first sub-state and B indicates False, and likewise for the second and third sub-states. We don't need messages containing C. This is a more efficient encoding and should speed up the learning process.
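Both readings can be sketched in a few lines, assuming the three binary sub-states are given as a tuple of booleans: the positional code writes A for True and B for False in each slot, and the ternary reading treats A, B, C as the digits 0, 1, 2.

```python
from itertools import product

def encode_positional(substates):
    """One slot per binary sub-state: 'A' for True, 'B' for False."""
    return ''.join('A' if value else 'B' for value in substates)

def decode_positional(message):
    return tuple(symbol == 'A' for symbol in message)

def ternary_value(message, digits='ABC'):
    """Read a message as a base-3 number with A=0, B=1, C=2."""
    value = 0
    for symbol in message:
        value = value * 3 + digits.index(symbol)
    return value

states = list(product([True, False], repeat=3))  # the 2^3 = 8 states
codes = [encode_positional(s) for s in states]
print(codes)  # 8 of the 27 messages; none contains 'C'
assert all(decode_positional(encode_positional(s)) == s for s in states)
print(ternary_value('AAA'), ternary_value('ABC'), ternary_value('CCC'))  # 0 5 26
```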

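Let's say we start with this restricted system, naming the three sub-states A, B and C (not to be confused with the message symbols) and writing T and F (i.e. 1 and 0) for True and False: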

\begin{align*} AAA &\to [A{:}T,\ B{:}T,\ C{:}T] \\ AAB &\to [A{:}T,\ B{:}T,\ C{:}F] \\ ABA &\to [A{:}T,\ B{:}F,\ C{:}T] \\ &\ \vdots \\ BBB &\to [A{:}F,\ B{:}F,\ C{:}F] \end{align*}

Binary Strings

```python
def gen_all_sequences(alphabet=['0', '1'], min_length=1, max_length=4, col_size=4):
    """Print and return all sequences over `alphabet` with lengths min_length..max_length.

    Uses generate_sequences_generator (defined earlier). The list default for
    `alphabet` is never mutated, so it is safe here.
    """
    lang = []
    print("Generated sequences:")
    print(f"From alphabet: {alphabet}")
    for length in range(min_length, max_length + 1):
        seq = list(generate_sequences_generator(alphabet, length))
        # print the sequences of this length, col_size per line
        print(f'Sequences of length {length}:')
        print("\n".join(",".join(seq[j:j + col_size]) for j in range(0, len(seq), col_size)))
        print()
        lang.extend(seq)
    print(lang)
    return lang

bin_lan_1_4 = gen_all_sequences()
```

```
Generated sequences:
From alphabet: ['0', '1']
Sequences of length 1:
0,1

Sequences of length 2:
00,01,10,11

Sequences of length 3:
000,001,010,011
100,101,110,111

Sequences of length 4:
0000,0001,0010,0011
0100,0101,0110,0111
1000,1001,1010,1011
1100,1101,1110,1111

['0', '1', '00', '01', '10', '11', '000', '001', '010', '011', '100', '101', '110', '111', '0000', '0001', '0010', '0011', '0100', '0101', '0110', '0111', '1000', '1001', '1010', '1011', '1100', '1101', '1110', '1111']
```

Notes:

- Note that the above system might be learned if the Lewis signaling game took place and the sequences were generated in an order corresponding to these states. Otherwise we would get a system equivalent to the first one.

- When we generate all sequences of length up to 4, the first row contains the two atomic symbols.

- Since these are binary sequences of digits, I naturally interpret each one as a more general version of the previous one.

- In one sense ‘1’, ‘01’, and ‘001’ mean the same thing to me: behind them is an aggregation of the digits weighted by powers of 2. This leads to a semantics in which leading zeros carry no meaning.

- However, this interpretation is arbitrary. Binary sequences can encode pretty much anything; we use them for general-purpose communication.
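The "digits weighted by powers of 2" reading can be checked with Python's built-in `int(s, 2)`. Under this semantics, strings that differ only in leading zeros decode to the same value, so the 30 generated strings collapse into 16 meanings.

```python
from collections import defaultdict
from itertools import product

strings = [''.join(p) for n in range(1, 5) for p in product('01', repeat=n)]

by_value = defaultdict(list)
for s in strings:
    by_value[int(s, 2)].append(s)  # sum of digits weighted by powers of 2

print(len(strings), len(by_value))  # 30 16
print(by_value[1])                  # ['1', '01', '001', '0001']
```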

```python
DNA = gen_all_sequences('ACGT', 3, 3)
```

```
Generated sequences:
From alphabet: ACGT
Sequences of length 3:
AAA,AAC,AAG,AAT
ACA,ACC,ACG,ACT
AGA,AGC,AGG,AGT
ATA,ATC,ATG,ATT
CAA,CAC,CAG,CAT
CCA,CCC,CCG,CCT
CGA,CGC,CGG,CGT
CTA,CTC,CTG,CTT
GAA,GAC,GAG,GAT
GCA,GCC,GCG,GCT
GGA,GGC,GGG,GGT
GTA,GTC,GTG,GTT
TAA,TAC,TAG,TAT
TCA,TCC,TCG,TCT
TGA,TGC,TGG,TGT
TTA,TTC,TTG,TTT

['AAA', 'AAC', 'AAG', 'AAT', 'ACA', 'ACC', 'ACG', 'ACT', 'AGA', 'AGC', 'AGG', 'AGT', 'ATA', 'ATC', 'ATG', 'ATT', 'CAA', 'CAC', 'CAG', 'CAT', 'CCA', 'CCC', 'CCG', 'CCT', 'CGA', 'CGC', 'CGG', 'CGT', 'CTA', 'CTC', 'CTG', 'CTT', 'GAA', 'GAC', 'GAG', 'GAT', 'GCA', 'GCC', 'GCG', 'GCT', 'GGA', 'GGC', 'GGG', 'GGT', 'GTA', 'GTC', 'GTG', 'GTT', 'TAA', 'TAC', 'TAG', 'TAT', 'TCA', 'TCC', 'TCG', 'TCT', 'TGA', 'TGC', 'TGG', 'TGT', 'TTA', 'TTC', 'TTG', 'TTT']
```

- If we use a four-symbol alphabet, we can encode the four nucleotides of DNA.

- Sequences of length 3 over this alphabet are the 4^3 = 64 codons, the basis of the language of DNA (the genetic code)!
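As a small illustration, here are the 64 codons with the standard start codon (ATG) and the three standard stop codons (TAA, TAG, TGA) marked. The rest of the genetic code table is deliberately left out of this sketch.

```python
from itertools import product

codons = [''.join(p) for p in product('ACGT', repeat=3)]
START = {'ATG'}                # also codes for methionine
STOPS = {'TAA', 'TAG', 'TGA'}  # standard stop codons

print(len(codons))                                 # 64
print(len([c for c in codons if c not in STOPS]))  # 61 codons that encode an amino acid
print([c for c in codons if c in START | STOPS])   # ['ATG', 'TAA', 'TAG', 'TGA']
```

So even this "natural" code is highly redundant: 61 sense codons for 20 amino acids, plus three codons that act as punctuation.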

Base 4 Sequences

The following generates all the sequences of length 3 made up of 0, 1 and a space symbol.
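A minimal sketch of such a generator, assuming the space symbol is a literal `' '` character and using `itertools.product` as the generator at the top of the post does. Reading the space as a word separator is a further assumption, shown only to illustrate how a delimiter changes the interpretation.

```python
from itertools import product

alphabet = ['0', '1', ' ']  # assumption: the space symbol is a literal ' '
seqs = [''.join(p) for p in product(alphabet, repeat=3)]
print(len(seqs))            # 27

# Hypothetical reading: the space separates words, so '1 0' is two one-digit words
two_words = [s for s in seqs if len(s.split()) == 2]
print(two_words)            # ['0 0', '0 1', '1 0', '1 1']
```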

Propositional Logic

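The following generates all the logical propositions of length 4, using an alphabet with three propositions (A, B, C), the constants ⊤ and ⊥, brackets, and symbols for negation, conjunction, disjunction, implication and equivalence, plus the modal operators ◻ and ◊.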

```python
brackets = '()'
modal = '◻◊'
std_connectives = '∧∨⟹⟺¬'
connectives = std_connectives + modal
props = '⊤⊥ABC'
logic_alphabet = brackets + connectives + props
```

In this case we can see that almost all of the sequences are not well-formed formulas (WFFs). Clearly, if we wanted to use a language of propositional logic, we would need to reject the sequences that are not WFFs.

Secondly, this alphabet, which has only three atomic propositions, is much bigger: it has 14 symbols. To express ((A∧B)∨C) we need a sequence of length 9, and if we also want to negate the whole formula, ¬((A∧B)∨C), we are at length 10. That is 14^10 ≈ 2.9 × 10^11 sequences to go through for length 10 alone, and storing them all would exceed the memory of most computers. We need a smarter way to generate the sequences.
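The growth is easy to quantify: an alphabet of k symbols yields k^n sequences of length n. This sketch rebuilds the 14-symbol alphabet defined above and tabulates the counts. The storage estimate assumes a deliberately optimistic one byte per symbol (`BYTES_PER_SEQ` is my assumption); real Python string objects take considerably more.

```python
brackets = '()'
modal = '◻◊'
std_connectives = '∧∨⟹⟺¬'
connectives = std_connectives + modal
props = '⊤⊥ABC'
logic_alphabet = brackets + connectives + props

k = len(logic_alphabet)  # 14 symbols
BYTES_PER_SEQ = 10       # assumption: one byte per symbol for a length-10 sequence

total = 0
for n in range(1, 11):
    count = k ** n
    total += count
    print(f"length {n:2d}: {count:>16,d} sequences")

print(f"total up to length 10: {total:,d}")
print(f"~{k ** 10 * BYTES_PER_SEQ / 1e12:.1f} TB just for length 10 at {BYTES_PER_SEQ} bytes each")
```

For comparison, the WFF listing later in this section contains only 160 WFFs among the 579,194 sequences of length 1 to 5, so nearly all of the work in a brute-force search is spent on rejected candidates.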

Also, this language is not very efficient: we can encode the same information in a more compact way. For example, we can use the following encoding:

The following function checks a string for well-formedness.

```python
def is_well_formed(brackets, connectives, props, s):
    """
    Check whether the string `s` is a well-formed formula (WFF) of propositional calculus.

    Args:
        brackets: A string containing the opening and closing bracket characters (e.g., '()').
        connectives: A string containing valid connective symbols (e.g., '∧∨⟹⟺¬').
        props: A string containing valid propositional symbols (e.g., 'ABC⊤⊥').
        s: The string to be checked for WFF status.

    Returns:
        True if `s` is a WFF, False otherwise.
    """
    # 1. Reject consecutive negation
    if "¬¬" in s:
        return False

    # 2. Quick check for invalid characters or unbalanced brackets
    logic_alphabet = brackets + connectives + props
    if not all(ch in logic_alphabet for ch in s):
        return False
    if not are_brackets_balanced(s, brackets[0], brackets[1]):
        return False

    # 3. Recursive structural check
    return check_subformula(s, brackets, connectives, props)


def are_brackets_balanced(s, open_br, close_br):
    """Return True if brackets are balanced in s, otherwise False."""
    stack = []
    for ch in s:
        if ch == open_br:
            stack.append(ch)
        elif ch == close_br:
            if not stack:
                return False
            stack.pop()
    return len(stack) == 0


def check_subformula(s, brackets, connectives, props):
    """
    Recursively check if `s` is a well-formed formula under the rules:
    1. A single proposition in `props` is a WFF.
    2. ⊤ or ⊥ is a WFF (they are included in `props`).
    3. If φ is a WFF, then ¬φ is a WFF.
    4. If φ and ψ are WFFs and ° is a binary connective, then (φ°ψ) is a WFF.

    Note: every connective other than '¬' is treated as binary,
    including the modal symbols ◻ and ◊ when they are passed in `connectives`.
    """
    # Base case: a single proposition or constant
    if len(s) == 1 and s in props:
        return True

    # Negation: '¬' must be followed by a WFF
    if s.startswith('¬'):
        if len(s) == 1:
            return False
        return check_subformula(s[1:], brackets, connectives, props)

    # Parenthesized binary formula: must be of the form (φ°ψ)
    open_br, close_br = brackets[0], brackets[1]
    if s.startswith(open_br) and s.endswith(close_br):
        inside = s[1:-1]  # strip the outer brackets

        # The main connective is the first binary connective at depth 0
        depth = 0
        main_connective_pos = -1
        for i, ch in enumerate(inside):
            if ch == open_br:
                depth += 1
            elif ch == close_br:
                depth -= 1
            elif ch in connectives and ch != '¬' and depth == 0:
                main_connective_pos = i
                break

        # No top-level binary connective: not of the form (φ°ψ)
        if main_connective_pos == -1:
            return False

        # Split around the main connective; both operands must be non-empty
        left_part = inside[:main_connective_pos]
        right_part = inside[main_connective_pos + 1:]
        if not left_part or not right_part:
            return False

        # Check each side recursively
        return (check_subformula(left_part, brackets, connectives, props) and
                check_subformula(right_part, brackets, connectives, props))

    # Anything that matches none of the rules above is not a WFF
    return False
```

Test Cases for the WFF checker

```python
# ====================
# Test Cases
# ====================
test_cases = [
    ("A ∧ B", False),         # Reject spaces around binary connective
    ("(A ∧ B)", False),       # Reject spaces
    ("(A ∧ B) ⟹ C", False),   # Reject spaces
    ("A ∧", False),           # Reject spaces and single operand
    ("A∧", False),            # Reject binary op with one prop
    ("A∧B", False),           # Reject binary op without brackets
    ("(A∧B)", True),
    ("(A∧B)⟹C", False),       # Missing outer brackets
    ("((A∧B)⟹C)", True),
    ("¬¬A", False),           # Reject multiple consecutive nots
    ("⊤", True),
    ("⊥", True),
    ("∨", False),             # Reject a lone connective
    ("()", False),            # Empty brackets
    ("⊤⊤", False),            # Reject multiple constants
    ("⊤⊥⊤", False),           # Multiple constants
    ("A", True),              # Accept single propositions
    ("AB", False),            # Reject adjacent propositions
    ("ABC", False),           # Reject adjacent propositions
    ("A¬B", False),           # Reject adjacency
    ("¬AB", False),           # Reject adjacency
    ("¬A¬B", False),          # Reject adjacency
    ("⊥¬⊤", False),           # Reject adjacency
    ("⊥A", False),            # Reject adjacency
    ("⊥¬A", False),           # Reject adjacency
    ("(B)C", False),          # Reject adjacency
    ("(A) ∧ (B)", False),     # Reject spaces
    ("A∧(B)", False),         # Brackets must enclose the entire binary expression
    ("(A)∧B", False),
    ("A∧(B)⟹C", False),
    ("(A)∧(B)⟹(C)", False),
    ("((A))", False),         # Reject double brackets around a single prop
]

for i, (formula, expected_result) in enumerate(test_cases):
    result = is_well_formed(brackets, connectives, props, formula)
    print(f"{i}: {formula}: {result} (Expected: {expected_result})")
    assert result == expected_result
```

0: A ∧ B: False (Expected: False)

1: (A ∧ B): False (Expected: False)

2: (A ∧ B) ⟹ C: False (Expected: False)

3: A ∧: False (Expected: False)

4: A∧: False (Expected: False)

5: A∧B: False (Expected: False)

6: (A∧B): True (Expected: True)

7: (A∧B)⟹C: False (Expected: False)

8: ((A∧B)⟹C): True (Expected: True)

9: ¬¬A: False (Expected: False)

10: ⊤: True (Expected: True)

11: ⊥: True (Expected: True)

12: ∨: False (Expected: False)

13: (): False (Expected: False)

14: ⊤⊤: False (Expected: False)

15: ⊤⊥⊤: False (Expected: False)

16: A: True (Expected: True)

17: AB: False (Expected: False)

18: ABC: False (Expected: False)

19: A¬B: False (Expected: False)

20: ¬AB: False (Expected: False)

21: ¬A¬B: False (Expected: False)

22: ⊥¬⊤: False (Expected: False)

23: ⊥A: False (Expected: False)

24: ⊥¬A: False (Expected: False)

25: (B)C: False (Expected: False)

26: (A) ∧ (B): False (Expected: False)

27: A∧(B): False (Expected: False)

28: (A)∧B: False (Expected: False)

29: A∧(B)⟹C: False (Expected: False)

30: (A)∧(B)⟹(C): False (Expected: False)

31: ((A)): False (Expected: False)

```python
# Example of generating all sequences that are WFFs, up to length 5
from itertools import product

def generate_sequences_generator(brackets, connectives, props, n, is_wwf=None):
    """Yield every length-n sequence over the alphabet, keeping only those accepted by `is_wwf` (if given)."""
    alphabet = brackets + connectives + props
    for seq in product(alphabet, repeat=n):
        candidate = ''.join(seq)
        if is_wwf is None or is_wwf(brackets, connectives, props, candidate):
            yield candidate


def gen_all_sequences(brackets, connectives, props,
                      min_length=1, max_length=4, col_size=4, is_wwf=None):
    """Collect all accepted sequences from min_length to max_length, printing col_size per row."""
    alphabet = brackets + connectives + props
    lang = []
    print("Generated sequences:")
    print(f"From alphabet: {alphabet}\n")
    for length in range(min_length, max_length + 1):
        seq_list = list(generate_sequences_generator(brackets, connectives, props, length, is_wwf))
        print(f"Sequences of length {length}:")
        for i in range(0, len(seq_list), col_size):
            print(",".join(seq_list[i : i + col_size]))
        print()
        lang.extend(seq_list)
    return lang


print("\nGenerating WFF (is_well_formed) up to length 5:\n")
wff = gen_all_sequences(brackets, connectives, props, 1, 5, 4, is_wwf=is_well_formed)
print("\nAll WFFs up to length 5:")
print(wff)
```

Generating WFF (is_well_formed) up to length 5:

Generated sequences:

From alphabet: ()∧∨⟹⟺¬◻◊⊤⊥ABC

Sequences of length 1:

⊤,⊥,A,B

C

Sequences of length 2:

¬⊤,¬⊥,¬A,¬B

¬C

Sequences of length 3:

Sequences of length 4:

Sequences of length 5:

(⊤∧⊤),(⊤∧⊥),(⊤∧A),(⊤∧B)

(⊤∧C),(⊤∨⊤),(⊤∨⊥),(⊤∨A)

(⊤∨B),(⊤∨C),(⊤⟹⊤),(⊤⟹⊥)

(⊤⟹A),(⊤⟹B),(⊤⟹C),(⊤⟺⊤)

(⊤⟺⊥),(⊤⟺A),(⊤⟺B),(⊤⟺C)

(⊤◻⊤),(⊤◻⊥),(⊤◻A),(⊤◻B)

(⊤◻C),(⊤◊⊤),(⊤◊⊥),(⊤◊A)

(⊤◊B),(⊤◊C),(⊥∧⊤),(⊥∧⊥)

(⊥∧A),(⊥∧B),(⊥∧C),(⊥∨⊤)

(⊥∨⊥),(⊥∨A),(⊥∨B),(⊥∨C)

(⊥⟹⊤),(⊥⟹⊥),(⊥⟹A),(⊥⟹B)

(⊥⟹C),(⊥⟺⊤),(⊥⟺⊥),(⊥⟺A)

(⊥⟺B),(⊥⟺C),(⊥◻⊤),(⊥◻⊥)

(⊥◻A),(⊥◻B),(⊥◻C),(⊥◊⊤)

(⊥◊⊥),(⊥◊A),(⊥◊B),(⊥◊C)

(A∧⊤),(A∧⊥),(A∧A),(A∧B)

(A∧C),(A∨⊤),(A∨⊥),(A∨A)

(A∨B),(A∨C),(A⟹⊤),(A⟹⊥)

(A⟹A),(A⟹B),(A⟹C),(A⟺⊤)

(A⟺⊥),(A⟺A),(A⟺B),(A⟺C)

(A◻⊤),(A◻⊥),(A◻A),(A◻B)

(A◻C),(A◊⊤),(A◊⊥),(A◊A)

(A◊B),(A◊C),(B∧⊤),(B∧⊥)

(B∧A),(B∧B),(B∧C),(B∨⊤)

(B∨⊥),(B∨A),(B∨B),(B∨C)

(B⟹⊤),(B⟹⊥),(B⟹A),(B⟹B)

(B⟹C),(B⟺⊤),(B⟺⊥),(B⟺A)

(B⟺B),(B⟺C),(B◻⊤),(B◻⊥)

(B◻A),(B◻B),(B◻C),(B◊⊤)

(B◊⊥),(B◊A),(B◊B),(B◊C)

(C∧⊤),(C∧⊥),(C∧A),(C∧B)

(C∧C),(C∨⊤),(C∨⊥),(C∨A)

(C∨B),(C∨C),(C⟹⊤),(C⟹⊥)

(C⟹A),(C⟹B),(C⟹C),(C⟺⊤)

(C⟺⊥),(C⟺A),(C⟺B),(C⟺C)

(C◻⊤),(C◻⊥),(C◻A),(C◻B)

(C◻C),(C◊⊤),(C◊⊥),(C◊A)

(C◊B),(C◊C)

All WFFs up to length 5:

['⊤', '⊥', 'A', 'B', 'C', '¬⊤', '¬⊥', '¬A', '¬B', '¬C', '(⊤∧⊤)', '(⊤∧⊥)', '(⊤∧A)', '(⊤∧B)', '(⊤∧C)', '(⊤∨⊤)', '(⊤∨⊥)', '(⊤∨A)', '(⊤∨B)', '(⊤∨C)', '(⊤⟹⊤)', '(⊤⟹⊥)', '(⊤⟹A)', '(⊤⟹B)', '(⊤⟹C)', '(⊤⟺⊤)', '(⊤⟺⊥)', '(⊤⟺A)', '(⊤⟺B)', '(⊤⟺C)', '(⊤◻⊤)', '(⊤◻⊥)', '(⊤◻A)', '(⊤◻B)', '(⊤◻C)', '(⊤◊⊤)', '(⊤◊⊥)', '(⊤◊A)', '(⊤◊B)', '(⊤◊C)', '(⊥∧⊤)', '(⊥∧⊥)', '(⊥∧A)', '(⊥∧B)', '(⊥∧C)', '(⊥∨⊤)', '(⊥∨⊥)', '(⊥∨A)', '(⊥∨B)', '(⊥∨C)', '(⊥⟹⊤)', '(⊥⟹⊥)', '(⊥⟹A)', '(⊥⟹B)', '(⊥⟹C)', '(⊥⟺⊤)', '(⊥⟺⊥)', '(⊥⟺A)', '(⊥⟺B)', '(⊥⟺C)', '(⊥◻⊤)', '(⊥◻⊥)', '(⊥◻A)', '(⊥◻B)', '(⊥◻C)', '(⊥◊⊤)', '(⊥◊⊥)', '(⊥◊A)', '(⊥◊B)', '(⊥◊C)', '(A∧⊤)', '(A∧⊥)', '(A∧A)', '(A∧B)', '(A∧C)', '(A∨⊤)', '(A∨⊥)', '(A∨A)', '(A∨B)', '(A∨C)', '(A⟹⊤)', '(A⟹⊥)', '(A⟹A)', '(A⟹B)', '(A⟹C)', '(A⟺⊤)', '(A⟺⊥)', '(A⟺A)', '(A⟺B)', '(A⟺C)', '(A◻⊤)', '(A◻⊥)', '(A◻A)', '(A◻B)', '(A◻C)', '(A◊⊤)', '(A◊⊥)', '(A◊A)', '(A◊B)', '(A◊C)', '(B∧⊤)', '(B∧⊥)', '(B∧A)', '(B∧B)', '(B∧C)', '(B∨⊤)', '(B∨⊥)', '(B∨A)', '(B∨B)', '(B∨C)', '(B⟹⊤)', '(B⟹⊥)', '(B⟹A)', '(B⟹B)', '(B⟹C)', '(B⟺⊤)', '(B⟺⊥)', '(B⟺A)', '(B⟺B)', '(B⟺C)', '(B◻⊤)', '(B◻⊥)', '(B◻A)', '(B◻B)', '(B◻C)', '(B◊⊤)', '(B◊⊥)', '(B◊A)', '(B◊B)', '(B◊C)', '(C∧⊤)', '(C∧⊥)', '(C∧A)', '(C∧B)', '(C∧C)', '(C∨⊤)', '(C∨⊥)', '(C∨A)', '(C∨B)', '(C∨C)', '(C⟹⊤)', '(C⟹⊥)', '(C⟹A)', '(C⟹B)', '(C⟹C)', '(C⟺⊤)', '(C⟺⊥)', '(C⟺A)', '(C⟺B)', '(C⟺C)', '(C◻⊤)', '(C◻⊥)', '(C◻A)', '(C◻B)', '(C◻C)', '(C◊⊤)', '(C◊⊥)', '(C◊A)', '(C◊B)', '(C◊C)']

OK, let's also consider the following minimal-connectives example:

```python
max_length = 5
print(f"\nGenerating WFF (is_well_formed) up to length {max_length}:\n")
wff = gen_all_sequences(brackets='()', connectives='∨¬', props='1ABC',
                        min_length=1, max_length=max_length, col_size=4,
                        is_wwf=is_well_formed)
print(f"\nAll WFFs up to length {max_length}:")
print(wff)
```

Generating WFF (is_well_formed) up to length 5:

Generated sequences:

From alphabet: ()∨¬1ABC

Sequences of length 1:

1,A,B,C

Sequences of length 2:

¬1,¬A,¬B,¬C

Sequences of length 3:

Sequences of length 4:

Sequences of length 5:

(1∨1),(1∨A),(1∨B),(1∨C)

(A∨1),(A∨A),(A∨B),(A∨C)

(B∨1),(B∨A),(B∨B),(B∨C)

(C∨1),(C∨A),(C∨B),(C∨C)

All WFFs up to length 5:

['1', 'A', 'B', 'C', '¬1', '¬A', '¬B', '¬C', '(1∨1)', '(1∨A)', '(1∨B)', '(1∨C)', '(A∨1)', '(A∨A)', '(A∨B)', '(A∨C)', '(B∨1)', '(B∨A)', '(B∨B)', '(B∨C)', '(C∨1)', '(C∨A)', '(C∨B)', '(C∨C)']

As max_length increases, the number of sequences we need to generate and test grows as |alphabet|^n, so brute-force enumeration quickly becomes prohibitive. Even with this 8-symbol alphabet, length 10 alone means 8^10 = 1,073,741,824 candidates to test.
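One smarter approach, sketched here as an alternative to the brute-force filter rather than as part of the original code, is to build WFFs directly from the grammar rules that `check_subformula` enforces. A WFF of length n is a proposition (n = 1), a negation ¬φ where φ has length n − 1 and does not itself start with ¬ (mirroring the checker's ¬¬ rule), or (φ°ψ) where the lengths of φ and ψ sum to n − 3. Memoizing by length means each length is built once from shorter ones, and no ill-formed candidate is ever produced. The function name `make_wff_enumerator` is hypothetical.

```python
from functools import lru_cache

def make_wff_enumerator(connectives, props, neg='¬', open_br='(', close_br=')'):
    """Return a memoized function mapping a length n to the tuple of all WFFs of that length."""
    binary_ops = [c for c in connectives if c != neg]  # as in the checker, all but ¬ are binary

    @lru_cache(maxsize=None)
    def wffs_of_length(n):
        if n < 1:
            return ()
        out = []
        # Rule 1: single propositions (including ⊤ and ⊥ if present in props)
        if n == 1:
            out.extend(props)
        # Rule 2: negation ¬φ, skipping any φ that already starts with ¬ (no '¬¬')
        for phi in wffs_of_length(n - 1):
            if not phi.startswith(neg):
                out.append(neg + phi)
        # Rule 3: (φ°ψ); the two brackets and the connective use 3 characters
        for left_len in range(1, n - 3):
            right_len = n - 3 - left_len
            for phi in wffs_of_length(left_len):
                for op in binary_ops:
                    for psi in wffs_of_length(right_len):
                        out.append(open_br + phi + op + psi + close_br)
        return tuple(out)

    return wffs_of_length


# Minimal alphabet from the example above
wffs = make_wff_enumerator(connectives='∨¬', props='1ABC')
print([len(wffs(n)) for n in range(1, 6)])  # [4, 4, 0, 0, 16]

# Full alphabet (modal symbols treated as binary, as in the checker)
full = make_wff_enumerator(connectives='∧∨⟹⟺¬◻◊', props='⊤⊥ABC')
print(sum(len(full(n)) for n in range(1, 6)))  # 160
print(len(full(10)))  # length-10 WFFs, without testing 14**10 candidates
```

The per-length counts match the brute-force listings above, but the work now scales with the number of WFFs rather than with |alphabet|^n.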