TL;DR

This NIPS 1988 paper is about simplifying neural networks: the authors systematically identify the least relevant units and remove them without losing functionality.

Summary

In (Mozer and Smolensky 1988) the authors present an approach to simplifying neural networks by systematically identifying and removing redundant or less relevant units. They address a key challenge in connectionist models: understanding and optimizing a network’s behavior by trimming unnecessary components without losing functionality.

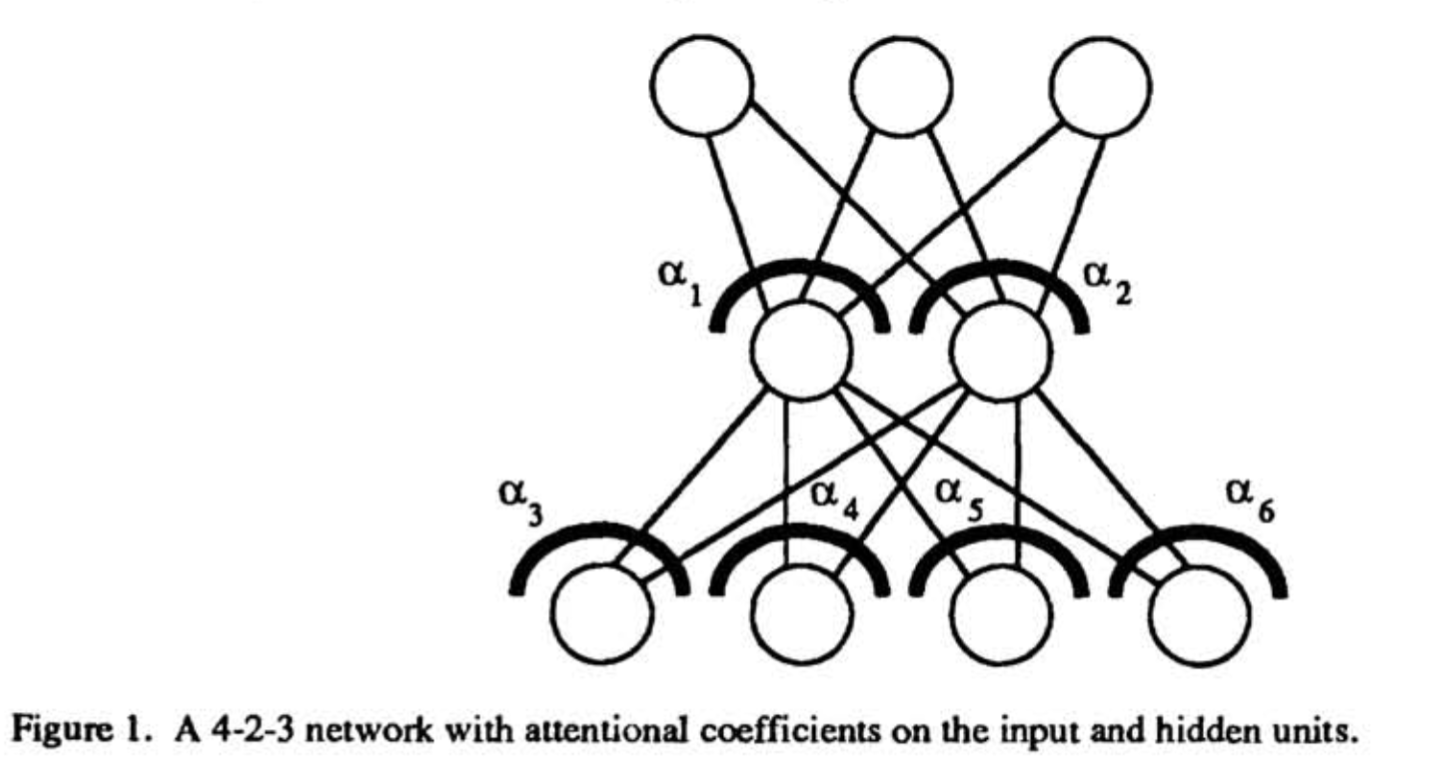

The core idea of the paper is to iteratively train a network, compute a relevance metric for each input or hidden unit, and eliminate the units that are least relevant to the network’s performance. The skeletonization process offers several practical benefits:

- Constraining Generalization: By reducing redundant units, skeletonization effectively limits the network’s complexity, which can enhance its generalization capability.

- Improving Learning Performance: Networks often require many hidden units to quickly learn a dataset. Skeletonization accelerates initial learning with excess units and trims unnecessary ones later, leading to better generalization without sacrificing learning speed.

- Simplifying Network Behavior: The authors argue that skeletonization can transform complex networks into simpler models, effectively capturing core decision-making rules.

Unlike weight decay methods, which shrink weights gradually, skeletonization removes units in an all-or-none fashion, motivated by the desire to identify the most critical components through an explicit relevance metric. A unit’s relevance is defined as the difference between the network’s error without that unit and its error with the unit in place. In practice this quantity is estimated rather than measured directly, and the estimate is averaged over time.
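The ablation definition of relevance and the trimming loop can be sketched in a few lines of plain Python. This is a minimal illustration under assumptions, not the authors' code: the tiny network, its hand-picked weights, the toy dataset, and the names `relevance_by_ablation` and `skeletonize` are all hypothetical, and the loop omits the retraining that the iterative procedure performs between removals.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out, b_out, active):
    """One-hidden-layer net; hidden unit i contributes only if active[i] is True."""
    net = b_out
    for i, row in enumerate(w_hidden):
        if active[i]:
            h = sigmoid(sum(w * xi for w, xi in zip(row, x)))
            net += w_out[i] * h
    return sigmoid(net)

def total_error(data, w_hidden, w_out, b_out, active):
    """Sum of absolute differences between target and output over all patterns."""
    return sum(abs(t - forward(x, w_hidden, w_out, b_out, active)) for x, t in data)

def relevance_by_ablation(data, w_hidden, w_out, b_out, active):
    """relevance[i] = (error without unit i) - (error with unit i)."""
    base = total_error(data, w_hidden, w_out, b_out, active)
    relevance = {}
    for i in range(len(w_out)):
        if not active[i]:
            continue  # already removed
        trial = list(active)
        trial[i] = False
        relevance[i] = total_error(data, w_hidden, w_out, b_out, trial) - base
    return relevance

def skeletonize(data, w_hidden, w_out, b_out, n_keep):
    """Repeatedly drop the least relevant hidden unit until n_keep remain."""
    active = [True] * len(w_out)
    while sum(active) > n_keep:
        relevance = relevance_by_ablation(data, w_hidden, w_out, b_out, active)
        least = min(relevance, key=relevance.get)
        active[least] = False
    return active

# Toy setup (assumed): the target depends only on the first input.
DATA = [((1, 1), 1), ((1, -1), 1), ((-1, 1), 0), ((-1, -1), 0)]
W_HIDDEN = [[4.0, 0.0], [0.0, 4.0], [0.0, -4.0]]  # unit 0 tracks input 0
W_OUT = [8.0, 0.1, 0.1]
B_OUT = -4.0

relevance = relevance_by_ablation(DATA, W_HIDDEN, W_OUT, B_OUT, [True] * 3)
kept = skeletonize(DATA, W_HIDDEN, W_OUT, B_OUT, n_keep=1)
print(relevance)
print(kept)
```

Measuring relevance this way costs one full pass over the data per unit, which is why a cheaper estimate becomes attractive as networks grow.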

Key Contributions

Relevance Metric and Error Propagation: Mozer and Smolensky introduce a relevance measure derived from the network’s error response to the removal of a unit. The metric can be computed via an error propagation procedure similar to backpropagation, making the approach computationally feasible for larger networks.
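The derivative-based estimate can be illustrated on a small example. The sketch below assumes each hidden unit's output is scaled by a gating coefficient `alpha[i]` (1 means the unit is fully present), uses the linear error discussed later in this review, and estimates relevance as the negative derivative of the error with respect to `alpha[i]` at `alpha[i] = 1`. The network, weights, function names, and the 0.8 smoothing constant are illustrative assumptions, not details confirmed by this review.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_activations(x, w_hidden):
    return [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]

def output(x, w_hidden, w_out, b_out, alpha):
    """Network output with hidden unit i scaled by alpha[i]."""
    h = hidden_activations(x, w_hidden)
    return sigmoid(b_out + sum(w * a * hi for w, a, hi in zip(w_out, alpha, h)))

def linear_error(data, w_hidden, w_out, b_out, alpha):
    return sum(abs(t - output(x, w_hidden, w_out, b_out, alpha)) for x, t in data)

def relevance_estimate(data, w_hidden, w_out, b_out):
    """rho_hat[i] = -dE/d(alpha_i) at alpha = 1, computed with the chain rule."""
    n = len(w_out)
    alpha = [1.0] * n
    rho_hat = [0.0] * n
    for x, t in data:
        h = hidden_activations(x, w_hidden)
        o = output(x, w_hidden, w_out, b_out, alpha)
        # dE/do for |t - o| is -sign(t - o); do/dnet for a sigmoid is o * (1 - o).
        delta = -math.copysign(1.0, t - o) * o * (1.0 - o)
        for i in range(n):
            # dnet/d(alpha_i) = w_out[i] * h[i]; subtract to negate the derivative.
            rho_hat[i] -= delta * w_out[i] * h[i]
    return rho_hat

def smooth(previous, current, decay=0.8):
    """Exponentially decaying average of successive relevance estimates."""
    return [decay * p + (1.0 - decay) * c for p, c in zip(previous, current)]

# Toy setup (assumed): the target depends only on the first input.
DATA = [((1, 1), 1), ((1, -1), 1), ((-1, 1), 0), ((-1, -1), 0)]
W_HIDDEN = [[4.0, 0.0], [0.0, 4.0]]
W_OUT = [8.0, 0.1]
B_OUT = -4.0

rho_hat = relevance_estimate(DATA, W_HIDDEN, W_OUT, B_OUT)
averaged = smooth([0.0, 0.0], rho_hat)
print(rho_hat)
print(averaged)
```

Because the derivative is taken at `alpha[i] = 1`, it is only a local approximation of what happens when a unit is fully removed, so it is best read as a ranking of units and not as an exact error difference.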

Practical Application in Simple Examples:

- In the cue salience problem, the relevance metric highlights the most critical input unit, effectively eliminating irrelevant units.

- In the rule-plus-exception problem, the method identifies the hidden unit responsible for most general cases, while distinguishing another unit that handles exceptions, reflecting the nuanced task structure.

- The train problem demonstrates the technique’s ability to reduce inputs to a minimal set of features needed to differentiate categories.

Skeletonization in More Complex Tasks: Mozer and Smolensky apply skeletonization to more sophisticated problems like the four-bit multiplexor and the random mapping problem, showing that skeletonized networks can outperform standard ones in terms of both failure rate and learning speed.

Strengths

Effective Reduction of Network Size: One of the most impressive outcomes is the ability of skeletonized networks to match or exceed the performance of larger networks with fewer units. The networks show resilience in maintaining their functionality even as units are removed.

Improvement in Learning Time: The authors provide evidence that learning with an initially large network and then trimming it can lead to faster convergence than training a smaller network from the start. This result challenges conventional thinking that fewer hidden units should always be preferable from the outset.

Rule Abstraction: The skeletonization process successfully identifies essential “rules” that govern network behavior, making it easier to interpret a network’s decisions in a simplified and concise manner.

Limitations and Open Questions

Predefined Trimming Limit: One limitation of the paper’s approach is the need to predefine how much of the network to trim. While the authors acknowledge that magnitudes of relevance values may offer insights, an automatic stopping criterion based on these values is not fully explored.

Error Function Sensitivity: The paper highlights an issue with using quadratic error functions to compute relevance. The authors propose an alternative linear error function, but the sensitivity of results to different error metrics could benefit from further investigation.
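The issue becomes visible when both error functions are differentiated. The derivation below is a sketch under assumed notation that is not defined elsewhere in this review: $t_{pj}$ and $o_{pj}$ are the target and actual output of output unit $j$ on pattern $p$, and $\alpha_i$ is a coefficient that scales the output of unit $i$.

$$
E^{q} = \sum_{p}\sum_{j} \left(t_{pj} - o_{pj}\right)^{2},
\qquad
\frac{\partial E^{q}}{\partial \alpha_i} = -2 \sum_{p}\sum_{j} \left(t_{pj} - o_{pj}\right) \frac{\partial o_{pj}}{\partial \alpha_i}
$$

$$
E^{l} = \sum_{p}\sum_{j} \left|t_{pj} - o_{pj}\right|,
\qquad
\frac{\partial E^{l}}{\partial \alpha_i} = -\sum_{p}\sum_{j} \operatorname{sgn}\left(t_{pj} - o_{pj}\right) \frac{\partial o_{pj}}{\partial \alpha_i}
$$

With the quadratic error, every term is scaled by the residual $(t_{pj} - o_{pj})$, so once the network fits the data well the derivative shrinks toward zero for all units, relevant or not. The linear error replaces that factor with a sign, so the derivative still reflects how strongly the outputs depend on the unit.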

Conclusion

Mozer and Smolensky’s skeletonization technique represents a significant step toward optimizing neural networks by removing redundant units without losing core functionality. The method not only improves learning performance but also offers valuable insights into the internal workings of neural networks. By focusing on relevance metrics, the authors pave the way for neural models that are more interpretable, more efficient, and generalize better.

Overall, skeletonization remains an influential contribution to the study of neural network optimization, providing both theoretical insights and practical improvements in learning systems.

Citation

@online{bochman2022,
  author = {Bochman, Oren},
  title = {Skeletonization: {A} {Technique} for {Trimming} the {Fat} from a {Network} via {Relevance} {Assessment}},
  date = {2022-06-22},
  url = {https://orenbochman.github.io/reviews/1989/skeletonization/},
  langid = {en}
}