Lecture 5a: Why object recognition is difficult

We’re switching to a different application of neural networks: computer vision, i.e. having a computer really understand what an image is showing.

This video explains why it is difficult for a computer to go from an image (i.e. the color and intensity for each pixel in the image) to an understanding of what it’s an image of.

Some of this discussion is about images of 2-dimensional objects (writing on paper), but most of it is about photographs of 3-D real-world scenes.

Make sure that you understand the last slide:

It explains how switching age and weight is like an object moving over to a different part of the image (to different pixels).

These two might sound like very different situations, but the analogy is in fact quite good: they’re not really very different.

Understanding this is a prerequisite, especially for the next video.

Things that make it hard to recognize objects

- Segmentation: Real scenes are cluttered with other objects:

  - It's hard to tell which pieces go together as parts of the same object.

  - Parts of an object can be hidden behind other objects.

- Lighting: The intensities of the pixels are determined as much by the lighting as by the objects.

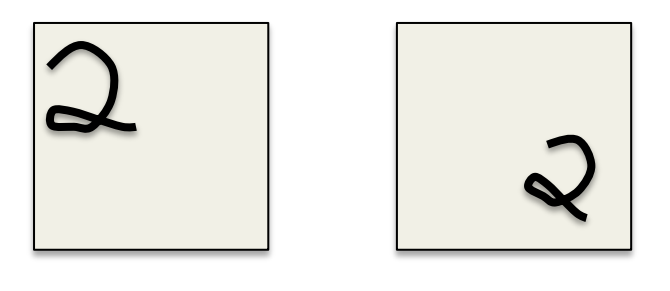

- Deformation: Objects can deform in a variety of non-affine ways:

  - e.g. a hand-written 2 can have a large loop or just a cusp.

- Affordances: Object classes are often defined by how they are used:

  - Chairs are things designed for sitting on, so they have a wide variety of physical shapes.
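To make the lighting point in the list above concrete, here is a minimal sketch. It assumes a deliberately simplified model in which a pixel's intensity is the product of the surface's reflectance and the amount of light falling on it; the function name and the numbers are illustrative and do not come from the lecture.

```python
def pixel_intensity(reflectance, illumination):
    """Simplified assumption: intensity = surface reflectance * incoming light."""
    return reflectance * illumination

# A dark surface in strong light ...
dark_surface_bright_light = pixel_intensity(reflectance=0.25, illumination=800)

# ... and a lighter surface in weak light.
light_surface_dim_light = pixel_intensity(reflectance=0.5, illumination=400)

# Two different surfaces produce the same pixel value, so the pixel alone
# cannot tell us which surface we are looking at.
same_pixel = dark_surface_bright_light == light_surface_dim_light
```

Under this assumed model, the pixel value constrains only the product of the two factors, which is one way to read the claim that intensities depend as much on the lighting as on the object.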

More things that make it hard to recognize objects

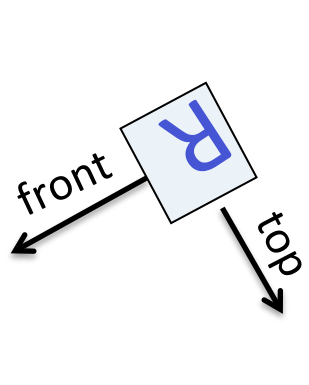

- Viewpoint: Changes in viewpoint cause changes in images that standard learning methods cannot cope with.

  - Information hops between input dimensions (i.e. pixels).

- Imagine a medical database in which the age of a patient sometimes hops to the input dimension that normally codes for weight!

  - To apply machine learning we would first want to eliminate this dimension-hopping.
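The analogy above can be sketched in a few lines of Python. This is only an illustration: the tiny one-dimensional "image", the detector weights, and the risk-score weights are all hypothetical, chosen to show how a model tied to fixed input positions breaks when information moves to other positions.

```python
def shift(image, k):
    """Move the image content k pixels to the right, padding with zeros."""
    n = len(image)
    return [image[i - k] if 0 <= i - k < n else 0 for i in range(n)]

def dot(weights, inputs):
    """Weighted sum, as computed by a single linear unit."""
    return sum(w * x for w, x in zip(weights, inputs))

# A 1-D "image" of 8 pixels with a small bright object at pixels 1 and 2.
image = [0, 9, 9, 0, 0, 0, 0, 0]
moved = shift(image, 4)  # same object, now at pixels 5 and 6

# Hypothetical detector whose weights expect the object at pixels 1 and 2.
detector = [0, 1, 1, 0, 0, 0, 0, 0]
score_original = dot(detector, image)  # object where the weights expect it
score_moved = dot(detector, moved)     # object has hopped to other pixels

# Medical-database analogue: input order is [age, weight].
risk_weights = [2, 1]  # hypothetical model: age counts double
patient = [60, 80]     # age 60, weight 80
hopped = [80, 60]      # age and weight have swapped dimensions
risk_correct = dot(risk_weights, patient)
risk_hopped = dot(risk_weights, hopped)
```

Here `score_original` is 18 while `score_moved` is 0, even though the object itself has not changed, and the same patient gets a risk of 200 or 220 depending on which dimension carries which value. In both cases the fixed weights are applied to the wrong information.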

Citation

@online{bochman2017,

author = {Bochman, Oren},

title = {Deep {Neural} {Networks} - {Notes} for Lecture 5a},

date = {2017-08-18},

url = {https://orenbochman.github.io/notes/dnn/dnn-05/l05a.html},

langid = {en}

}