In this lecture we covered the main types of networks studied in the course.

Lecture 2a: Types of neural network architectures

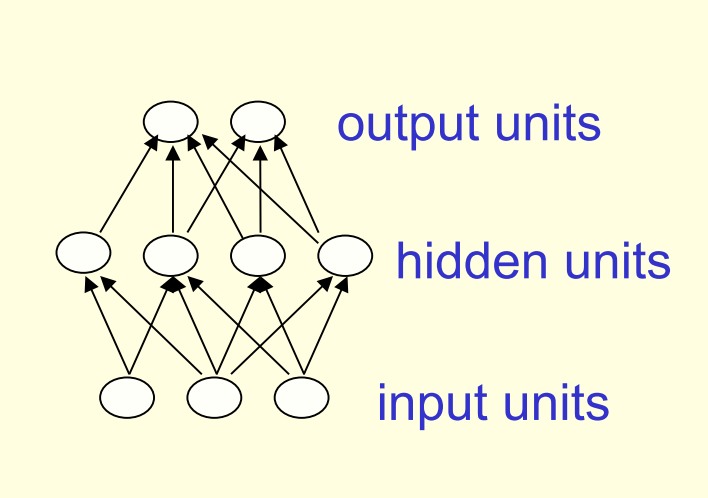

Feed-forward neural networks

- Feed-forward networks are the subject of the first half of the course.

- These are the most common type of neural network.

- The first layer is the input and the last layer is the output.

- If there is more than one hidden layer, we call them “deep” neural networks.

- They compute a series of transformations that change the similarities between cases.

- The activities of the neurons in each layer are a non-linear function of the activities in the layer below.
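The last bullet can be sketched in a few lines of plain Python. This is only an illustrative sketch: the `tanh` non-linearity, the layer sizes, and the hand-picked weights are assumptions, not something specified in the lecture.

```python
import math

def layer(activities, weights, biases):
    """One layer: each unit applies tanh to a weighted sum of the layer below."""
    return [
        math.tanh(sum(w * a for w, a in zip(row, activities)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass the input through each (weights, biases) pair, bottom to top."""
    activities = x
    for weights, biases in layers:
        activities = layer(activities, weights, biases)
    return activities

# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output unit.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.1, -0.1]),
    ([[1.0, -2.0, 0.5]], [0.0]),
]
output = forward([0.2, 0.7], layers)
print(output)
```

Adding more `(weights, biases)` pairs to `layers` gives more hidden layers, which is what makes the network "deep" in the sense used above.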

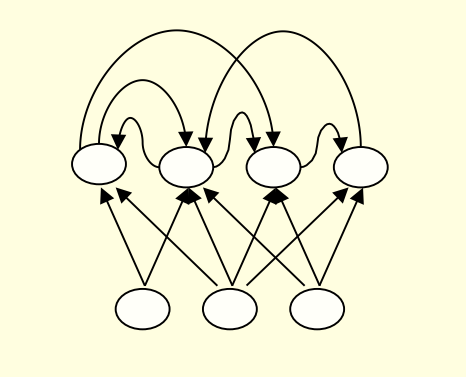

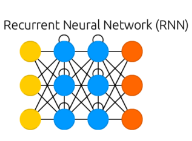

Recurrent networks

- These have directed cycles in their connection graph.

- That means you can sometimes get back to where you started by following the arrows.

- They can have complicated dynamics, and this can make them very difficult to train.

- There is a lot of interest at present in finding efficient ways of training recurrent nets.

- They are more biologically realistic.
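To make "directed cycles in the connection graph" concrete, here is a small sketch that checks whether following the arrows can lead back to a starting unit. The dictionary representation of the graph and the unit names are hypothetical, chosen only for illustration.

```python
def has_directed_cycle(graph):
    """Return True if following arrows can lead back to a unit already on the path."""
    UNSEEN, ON_PATH, DONE = 0, 1, 2
    state = {node: UNSEEN for node in graph}

    def visit(node):
        state[node] = ON_PATH
        for nxt in graph.get(node, []):
            if state.get(nxt, UNSEEN) == ON_PATH:
                return True  # we got back to a unit on the current path
            if state.get(nxt, UNSEEN) == UNSEEN and visit(nxt):
                return True
        state[node] = DONE
        return False

    return any(state[node] == UNSEEN and visit(node) for node in graph)

# Each key is a unit; each value lists the units its outgoing arrows point to.
feed_forward = {"in": ["h"], "h": ["out"], "out": []}
recurrent = {"in": ["h"], "h": ["out", "h2"], "h2": ["h"], "out": []}

print(has_directed_cycle(feed_forward))
print(has_directed_cycle(recurrent))
```

By this definition a feed-forward net is a connection graph with no directed cycle, while the second graph is recurrent because `h` and `h2` feed each other.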

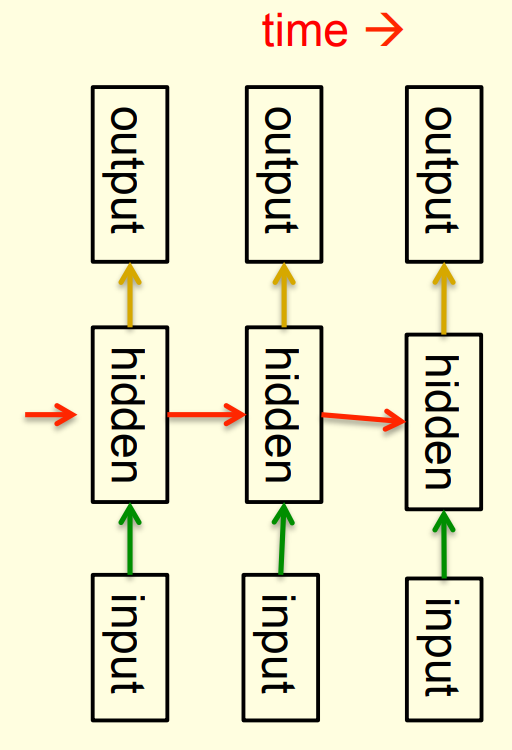

Recurrent neural networks for modeling sequences

- Recurrent neural networks are a very natural way to model sequential data:

- They are equivalent to very deep nets with one hidden layer per time slice, except that they use the same weights at every time slice and they get input at every time slice.

- They have the ability to remember information in their hidden state for a long time.

- But it's very hard to train them to use this potential.
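The "one hidden layer per time slice, same weights at every slice" view can be sketched as a loop. The `tanh` non-linearity, the names `w_hh` and `w_xh`, and the example weights are assumptions made for this sketch.

```python
import math

def rnn_step(h_prev, x, w_hh, w_xh):
    """One time slice: new hidden state from the previous state and the current input."""
    return [
        math.tanh(
            sum(w * h for w, h in zip(w_hh[i], h_prev))
            + sum(w * xi for w, xi in zip(w_xh[i], x))
        )
        for i in range(len(h_prev))
    ]

def run_rnn(inputs, h0, w_hh, w_xh):
    """Unroll over time; the SAME w_hh and w_xh are reused at every slice."""
    states = []
    h = h0
    for x in inputs:  # the net receives an input at every time slice
        h = rnn_step(h, x, w_hh, w_xh)
        states.append(h)
    return states

# Hypothetical weights: 2 hidden units, 1 input unit.
w_hh = [[0.5, 0.0], [0.0, 0.5]]
w_xh = [[1.0], [-1.0]]
states = run_rnn([[1.0], [0.0], [0.0]], [0.0, 0.0], w_hh, w_xh)
print(states)
```

Only the first input is non-zero here, yet the final hidden state is still non-zero: the hidden state carries information forward in time, although in this toy setting it shrinks at every step.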

An example of what RNNs can now do

- In (Sutskever, Martens, and Hinton 2011) the authors trained a special type of RNN to predict the next character in a sequence.

- After training for a long time on a string of half a billion characters from English Wikipedia, they got it to generate new text.

- It generates by predicting the probability distribution for the next character and then sampling a character from this distribution.

- The next slide shows an example of the kind of text it generates. Notice how much it knows!
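The generation loop described above (predict a distribution over the next character, then sample from it) might look roughly like this. The `toy_model` function is a hypothetical stand-in with a fixed lookup table; it is not the RNN from the paper.

```python
import random

def sample_char(probs, rng):
    """Draw one character from a {character: probability} distribution."""
    chars = list(probs)
    return rng.choices(chars, weights=[probs[c] for c in chars], k=1)[0]

def generate(next_char_probs, seed_text, length, rng):
    """Repeatedly predict the next-character distribution and sample from it."""
    text = seed_text
    for _ in range(length):
        text += sample_char(next_char_probs(text), rng)
    return text

def toy_model(text):
    """Hypothetical stand-in for a trained model: looks only at the last character."""
    table = {"a": {"a": 0.1, "b": 0.9}, "b": {"a": 1.0}}
    return table[text[-1]]

rng = random.Random(0)
print(generate(toy_model, "a", 10, rng))
```

Sampling, rather than always taking the most probable character, is what lets the same model produce different text on different runs.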

Sample text generated one character at a time by Ilya Sutskever’s RNN

In 1974 Northern Denver had been overshadowed by CNL, and several Irish intelligence agencies in the Mediterranean region. However, on the Victoria, Kings Hebrew stated that Charles decided to escape during an alliance. The mansion house was completed in 1882, the second in its bridge are omitted, while closing is the proton reticulum composed below it aims, such that it is the blurring of appearing on any well-paid type of box printer.

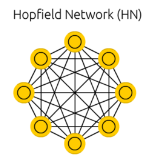

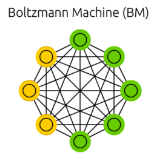

Symmetrically connected networks

- These are like recurrent networks, but the connections between units are symmetrical (they have the same weight in both directions).

- John Hopfield (and others) realized that symmetric networks are much easier to analyze than recurrent networks.

- They are also more restricted in what they can do, because they obey an energy function.

- For example, they cannot model cycles.

- In (Hopfield 1982), the author introduced symmetrically connected nets without hidden units that are now called Hopfield networks.
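As a rough illustration of what "obeying an energy function" means, the sketch below uses the standard Hopfield-style energy $E = -\tfrac{1}{2}\sum_{i,j} w_{ij} s_i s_j$ with symmetric weights and a zero diagonal. The ±1 states, the absence of biases, the threshold update rule, and the example weights are assumptions of this sketch, not details taken from the lecture.

```python
def energy(state, weights):
    """E = -1/2 * sum over i, j of w_ij * s_i * s_j (no bias terms in this sketch)."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j] for i in range(n) for j in range(n)
    )

def update_unit(state, weights, i):
    """Set unit i to +1 or -1 according to the sign of its total input."""
    total = sum(weights[i][j] * state[j] for j in range(len(state)))
    new_state = list(state)
    new_state[i] = 1 if total >= 0 else -1
    return new_state

def settle(state, weights, sweeps=5):
    """Update units one at a time and record the energy after every update."""
    energies = [energy(state, weights)]
    for _ in range(sweeps):
        for i in range(len(state)):
            state = update_unit(state, weights, i)
            energies.append(energy(state, weights))
    return state, energies

# Hypothetical symmetric weights (w_ij == w_ji) with a zero diagonal.
weights = [[0, 1, -1], [1, 0, -1], [-1, -1, 0]]
final_state, energies = settle([1, -1, -1], weights)
print(final_state, energies[0], energies[-1])
```

With symmetric weights, each single-unit update can only lower the energy or leave it unchanged, so the network settles into a stable state instead of cycling. That is one way to see why such networks cannot model cycles.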

References

Hopfield, J J. 1982. “Neural Networks and Physical Systems with Emergent Collective Computational Abilities.” Proceedings of the National Academy of Sciences 79 (8): 2554–58. https://doi.org/10.1073/pnas.79.8.2554.

Sutskever, Ilya, James Martens, and Geoffrey E Hinton. 2011. “Generating Text with Recurrent Neural Networks.” In Proceedings of the 28th International Conference on Machine Learning (ICML-11), 1017–24. https://www.cs.toronto.edu/~jmartens/docs/RNN_Language.pdf.

Reuse

CC SA BY-NC-ND

Citation

BibTeX citation:

@online{bochman2017,

author = {Bochman, Oren},

title = {Deep {Neural} {Networks} - {Notes} for Lecture 2a},

date = {2017-07-17},

url = {https://orenbochman.github.io/notes/dnn/dnn-02/l02a.html},

langid = {en}

}

For attribution, please cite this work as:

Bochman, Oren. 2017. “Deep Neural Networks - Notes for Lecture 2a.” July 17, 2017. https://orenbochman.github.io/notes/dnn/dnn-02/l02a.html.