This is the first installment of my notes for the course “Deep Neural Networks” by Geoffrey Hinton, which I took on Coursera.

This was one of the first online courses on the subject.

Hinton was one of the leading researchers in deep learning, and his students are some of the most important researchers today. In this course he introduced some algorithms and methods that had not been published.

This course is dated: the SOTA results have improved, it does not cover transformers, and, since this is a fast-moving field, probably all of its results have been beaten.

Still, this is an interesting, if mathematically sophisticated, introduction to deep learning.

Lecture 1a: Why do we need ML?

What is ML?

- It is very hard 😱 to write programs that solve problems like recognizing a 3d object from a novel viewpoint in new lighting conditions in a cluttered scene.

- We don’t know what program to write because we don’t know 😕 how it’s done in our brain. 🧠

- Even if we had a clue 💡 how to do it, the program would be 😱 horrendously complicated.

- It is hard to write a program to compute the probability that a credit card transaction is fraudulent.

- There may not be any rules that are both simple and reliable. We need to combine a very large number of weak rules.

- Fraud is a moving target. The program needs to keep changing.
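
The idea of combining many weak rules can be sketched as a weighted vote. The rules, weights, bias, and transaction fields below are all hypothetical and chosen only for illustration; in a real system the weights would be learned from data rather than set by hand.

```python
import math

# Hypothetical weak rules: each looks at one aspect of a transaction and
# returns True when that aspect looks suspicious. None is reliable alone.
WEAK_RULES = [
    (lambda t: t["amount"] > 1000, 1.2),                  # unusually large amount
    (lambda t: t["country"] != t["home_country"], 0.9),   # foreign purchase
    (lambda t: t["hour"] < 5, 0.6),                       # made in the small hours
    (lambda t: t["new_merchant"], 0.4),                   # merchant never seen before
]
BIAS = -2.0  # assumed prior: most transactions are legitimate


def fraud_probability(transaction):
    """Combine the weighted votes of all weak rules with a logistic function."""
    score = BIAS + sum(w for rule, w in WEAK_RULES if rule(transaction))
    return 1.0 / (1.0 + math.exp(-score))


ordinary = {"amount": 40, "country": "US", "home_country": "US",
            "hour": 14, "new_merchant": False}
suspicious = {"amount": 2500, "country": "FR", "home_country": "US",
              "hour": 3, "new_merchant": True}

print(f"ordinary:   {fraud_probability(ordinary):.2f}")
print(f"suspicious: {fraud_probability(suspicious):.2f}")
```

No single rule decides the outcome; the score only becomes high when several weak signals agree. Because fraud is a moving target, the weights would need to be re-estimated as new data arrives.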

The ML approach

- Instead of writing a program by hand for each specific task, we collect lots of examples that specify the correct output for a given input.

- An ML algorithm 🤖 then takes these examples and produces a program that does the job.

- The program produced by the ML algorithm 🤖 may look very different from a typical hand-written program. It may contain millions of numbers 🙀 …

- If we do it right, the program works for new cases as well as the ones we trained it on.

- If the data changes, the program can change too by training on the new data.

- Massive amounts of computation are now cheaper than paying someone to write a task-specific program.
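
As a minimal sketch of this approach, the perceptron below is never told the rule; it is given labeled examples and adjusts a few numbers until its outputs match. The toy task (is x + y greater than 1?), the data, and all names are assumptions made for illustration, and the perceptron is just one simple learning algorithm picked for brevity.

```python
# Labeled examples: (input, correct output). The hidden rule is x + y > 1,
# but the learning algorithm only ever sees the examples.
EXAMPLES = [
    ((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.5, 0.1), 0), ((0.1, 0.6), 0),
    ((1.0, 1.0), 1), ((0.9, 0.6), 1), ((0.4, 1.2), 1), ((1.5, 0.2), 1),
]


def predict(weights, bias, x):
    """The learned 'program': a weighted sum followed by a threshold."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(examples, epochs=100, lr=0.1):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in examples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


weights, bias = train_perceptron(EXAMPLES)
print("learned numbers:", weights, bias)
print("new case (0.8, 0.9):", predict(weights, bias, (0.8, 0.9)))
print("new case (0.1, 0.2):", predict(weights, bias, (0.1, 0.2)))
```

The "program" that comes out is just three numbers, which is why it looks nothing like hand-written code. Retraining on fresh examples changes those numbers without anyone rewriting the logic.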

Some examples of tasks best solved by ML

- Recognizing patterns:

- Objects in real scenes

- Facial identities or facial expressions

- Spoken words

- Recognizing anomalies:

- Unusual sequences of credit card transactions

- Unusual patterns of sensor readings in a nuclear power plant[^1]

- Prediction:

The standard example of ML

- A lot of genetics is done on fruit flies.

- They are convenient because they breed fast.

- We already know a lot about them.

- The MNIST database of hand-written digits is the ML equivalent of fruit flies. 🦋

- They are publicly available and we can learn them quite fast in a moderate-sized neural net.

- We know a huge amount about how well various ML methods do on MNIST.

- We will use MNIST as our standard task.

Beyond MNIST — The ImageNet task

- 1000 different object classes in 1.3 million high-resolution training images from the web.

- The best system in the 2010 competition got 47% error for its first choice and 25% error for its top 5 choices.

- Jitendra Malik, an eminent neural net sceptic 💀, said that this competition is a good test of whether DNNs 🧠 work well for object recognition.

- A very deep neural net (Krizhevsky, Sutskever, and Hinton 2012) gets less than 40% error for its first choice and less than 20% for its top 5 choices.
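
The two error rates quoted above count different things: a first-choice (top-1) error occurs when the highest-scoring class is wrong, while a top-5 error occurs only when the correct class is missing from the five highest-scoring classes. A small sketch, using made-up scores over six hypothetical classes rather than real ImageNet outputs:

```python
def top_k_error(scores, labels, k):
    """Fraction of examples whose true label is not among the k best guesses."""
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Made-up classifier scores for 4 images over 6 classes (one row per image).
SCORES = [
    [0.50, 0.20, 0.10, 0.10, 0.05, 0.05],  # true class 0: first choice correct
    [0.30, 0.40, 0.10, 0.10, 0.05, 0.05],  # true class 0: second choice
    [0.05, 0.10, 0.40, 0.25, 0.15, 0.05],  # true class 4: third choice
    [0.30, 0.25, 0.20, 0.15, 0.08, 0.02],  # true class 5: ranked last
]
LABELS = [0, 0, 4, 5]

print("top-1 error:", top_k_error(SCORES, LABELS, k=1))
print("top-5 error:", top_k_error(SCORES, LABELS, k=5))
```

Top-5 error is always less than or equal to top-1 error, which is why both numbers are reported side by side.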

The Speech recognition task

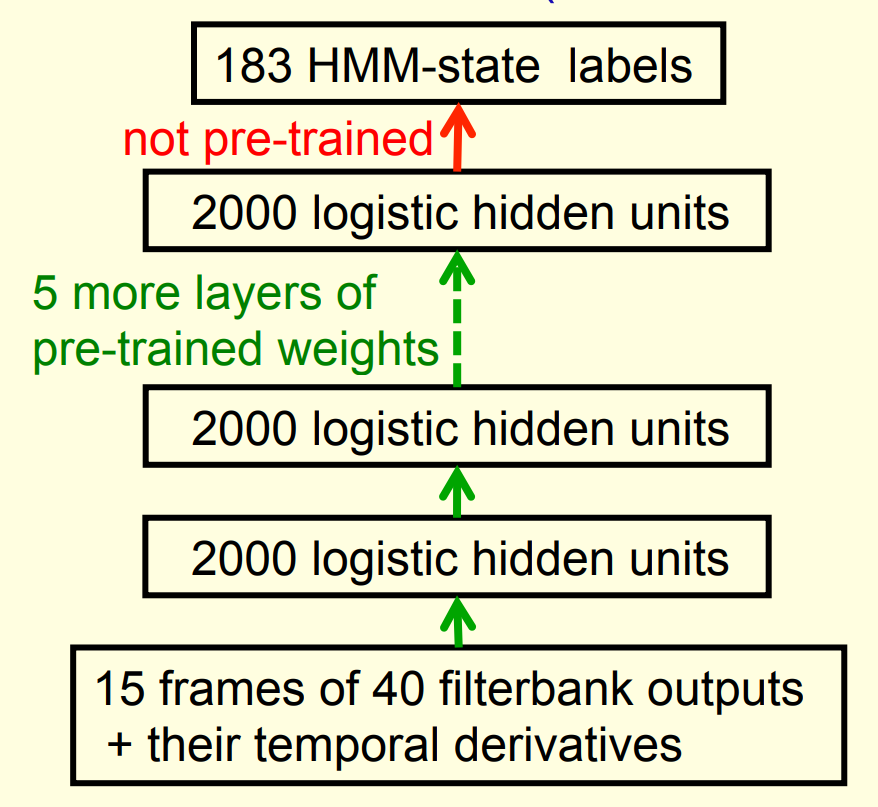

- A speech recognition system has several stages:

- Pre-processing: Convert the sound wave into a vector of acoustic coefficients. Extract a new vector about every 10 milliseconds.

- The acoustic model: Use a few adjacent vectors of acoustic coefficients to place bets on which part of which phoneme is being spoken.

- Decoding: Find the sequence of bets that does the best job of fitting the acoustic data and also fitting a model of the kinds of things people say.

- Deep neural networks pioneered by George Dahl and Abdel-rahman Mohamed are now replacing the previous ML method for the acoustic model.
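
The pre-processing stage and the "few adjacent vectors" fed to the acoustic model can be sketched as follows. This is a simplified illustration: the 16 kHz sample rate, the 25 ms window, the context size, and the use of a single log-energy value in place of a real vector of acoustic coefficients are all assumptions, not details from the lecture.

```python
import math

SAMPLE_RATE = 16000   # samples per second (assumed)
FRAME_STEP_MS = 10    # a new frame every 10 ms, as in the notes
FRAME_LEN_MS = 25     # each frame spans 25 ms (assumed; frames overlap)


def frame_features(wave):
    """Slide a window over the wave and emit one feature per 10 ms step.

    A real system would compute a vector of acoustic coefficients per frame;
    log energy stands in for that vector here.
    """
    step = SAMPLE_RATE * FRAME_STEP_MS // 1000
    length = SAMPLE_RATE * FRAME_LEN_MS // 1000
    features = []
    for start in range(0, len(wave) - length + 1, step):
        frame = wave[start:start + length]
        energy = sum(s * s for s in frame) / length
        features.append(math.log(energy + 1e-10))
    return features


def context_windows(features, left=2, right=2):
    """Group each frame with its neighbours, the input to an acoustic model."""
    return [features[i - left:i + right + 1]
            for i in range(left, len(features) - right)]


# One second of a synthetic 440 Hz tone stands in for recorded speech.
wave = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
features = frame_features(wave)
windows = context_windows(features)
print(len(features), "frames,", len(windows), "context windows of", len(windows[0]))
```

Each context window is what the acoustic model would look at when placing its bets on the phoneme being spoken; the decoding stage, which searches over sequences of those bets, is not shown here.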

Phone recognition on the TIMIT benchmark

Hinton discusses work from Mohamed, Dahl, and Hinton (2012).

- After standard post-processing using a bi-phone model, a deep net with 8 layers gets 20.7% error rate.

- The best previous speaker-independent result on TIMIT[^4] was 24.4%, and this required averaging several models.[^5]

- NLP researcher Li Deng at Microsoft Research realized that this result could change the way speech recognition was done.
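
To put the two TIMIT numbers in perspective, it helps to compute the relative reduction in error rather than only the absolute difference. The calculation below uses just the two figures quoted above; the variable names are mine.

```python
previous_best = 24.4  # % error, best earlier speaker-independent result
deep_net = 20.7       # % error, 8-layer deep net

absolute_drop = previous_best - deep_net
relative_drop = absolute_drop / previous_best

print(f"absolute reduction: {absolute_drop:.1f} percentage points")
print(f"relative reduction: {relative_drop:.1%}")
```

A drop of 3.7 percentage points corresponds to roughly 15% fewer errors, achieved by a single model where the earlier result needed an average of several.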

References

Footnotes

[^1]: use a program no one can interpret to control a nuclear reactor 😒

[^2]: 😕 if these can be predicted at all

[^3]: a recommendation system is probably best for this 😎

[^4]: TIMIT is a corpus of phonemically and lexically transcribed speech of American English speakers of different sexes and dialects. Each transcribed element has been delineated in time. TIMIT was designed to further acoustic-phonetic knowledge and automatic speech recognition systems.

[^5]: this is a massive jump in SOTA performance

Citation

@online{bochman2017,

author = {Bochman, Oren},

title = {Deep {Neural} {Networks} - {Notes} for Lecture 1a},

date = {2017-07-02},

url = {https://orenbochman.github.io/notes/dnn/dnn-01/l01a.html},

langid = {en}

}