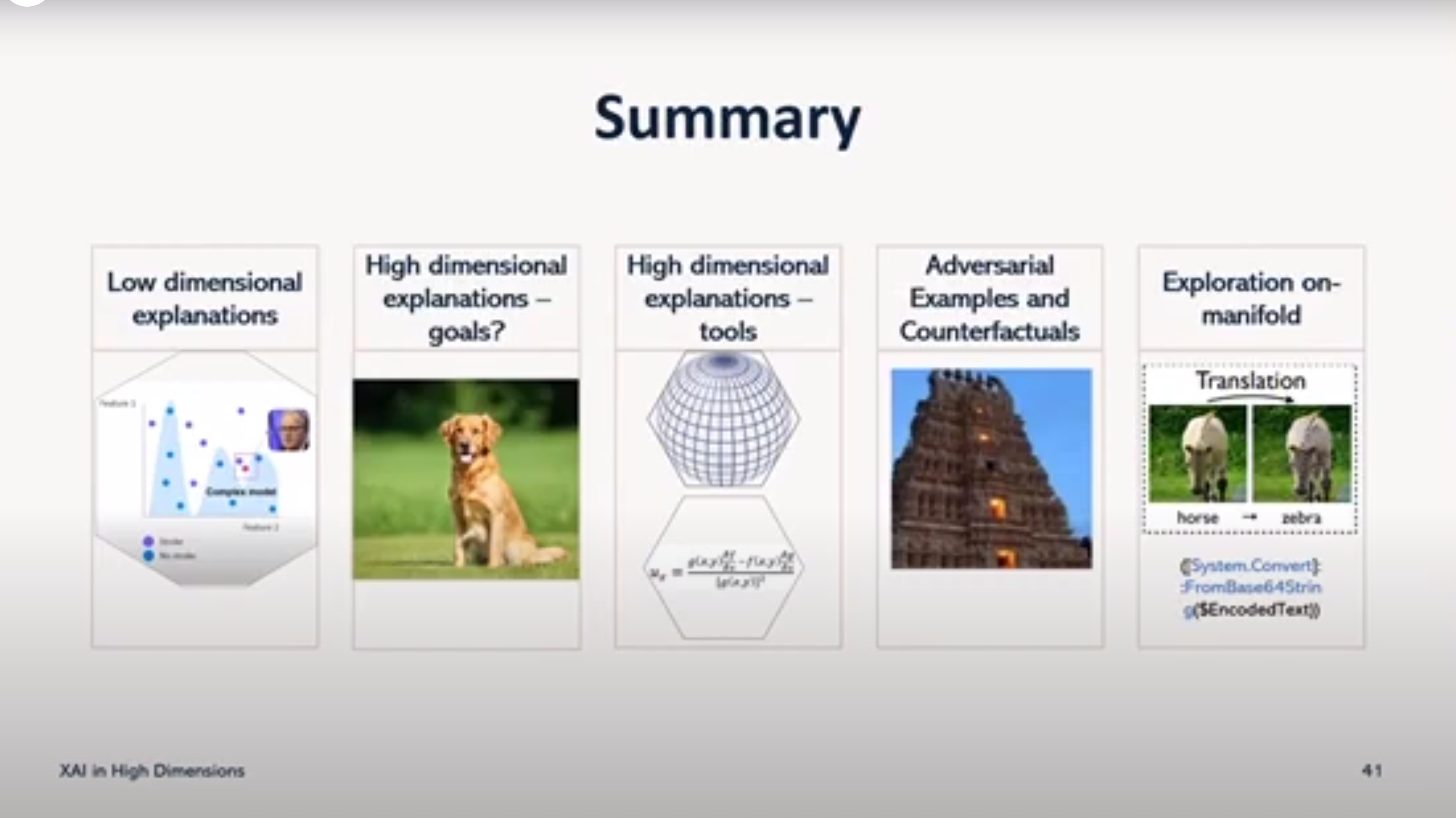

The XAI course provides a comprehensive overview of explainable AI, covering both theory and practice, and exploring various use cases for explainability.

Participants will learn not only how to generate explanations, but also how to evaluate and effectively communicate these explanations to diverse stakeholders.

Series Poster

Session Description

How do you properly incorporate explanations into machine learning projects, and what aspects should you keep in mind?

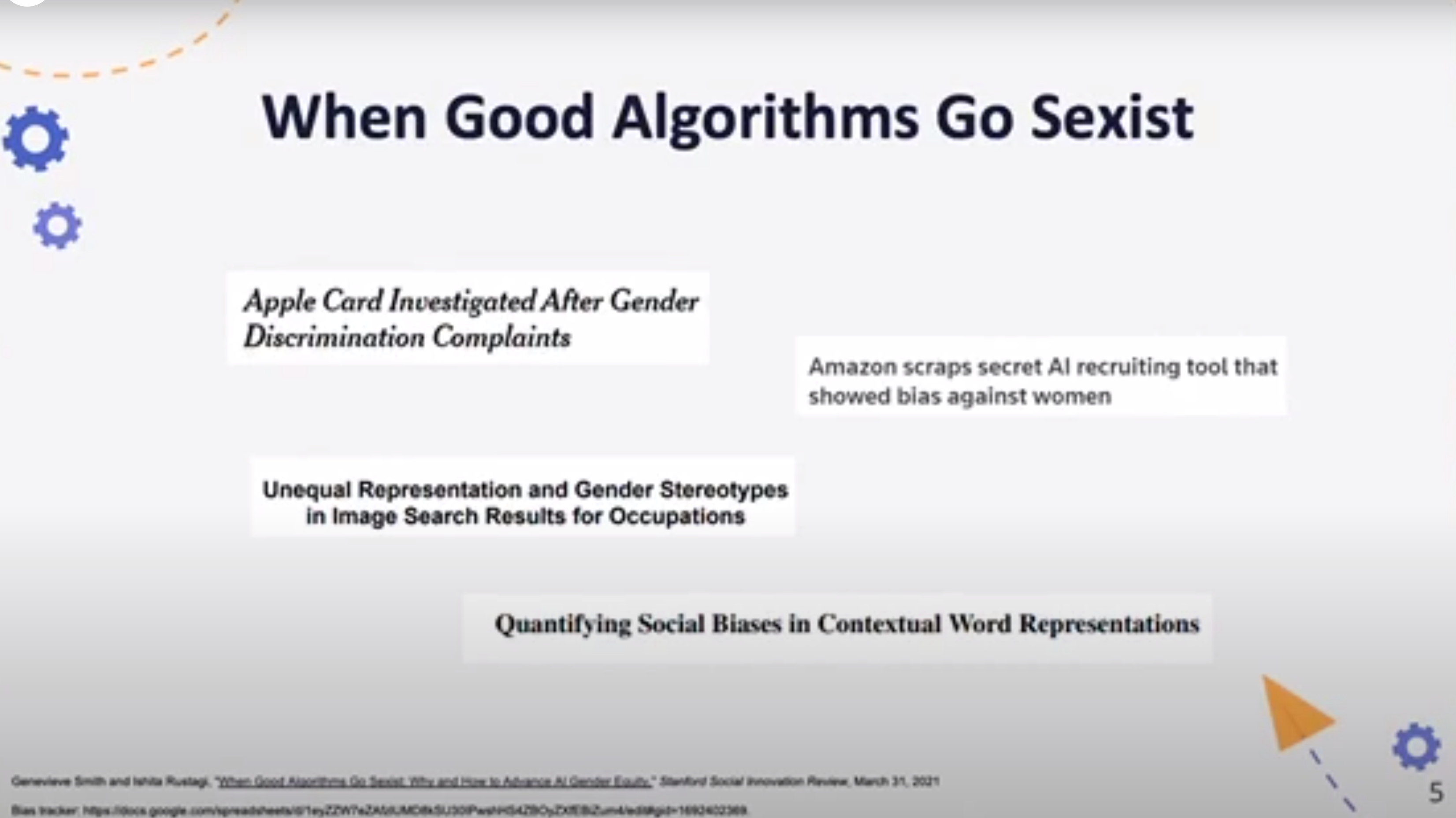

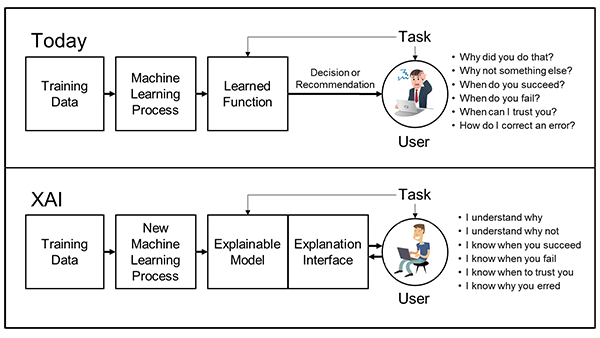

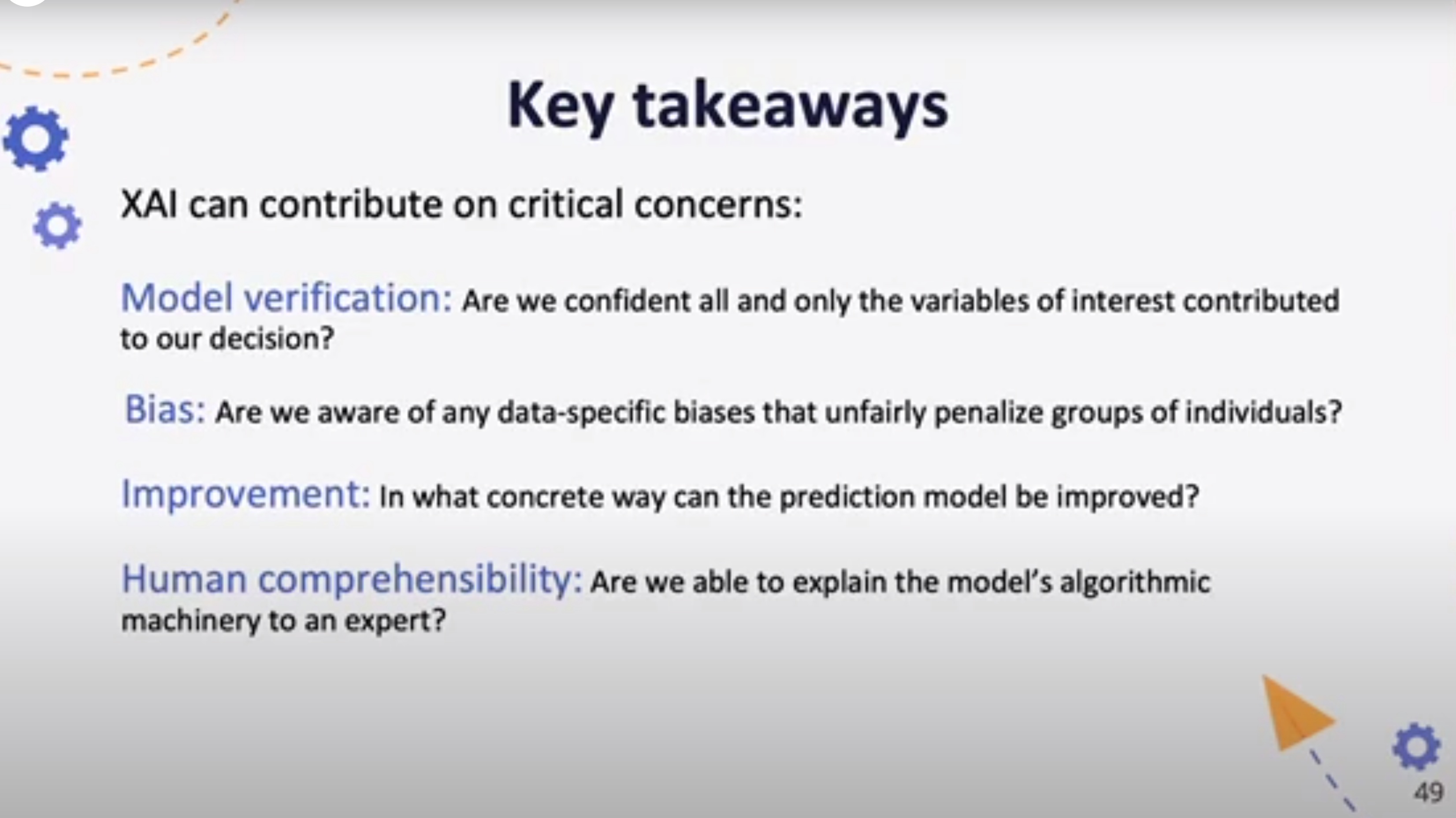

Over the past few years, the need to explain the output of machine learning models has received growing attention.

Explanations not only reveal the reasons behind a model’s predictions and increase users’ trust in the model, but can also serve other purposes.

To fully utilize explanations and incorporate them into machine learning projects, the following aspects should be taken into consideration: the explanation goals, the explanation method, and the explanations’ quality.

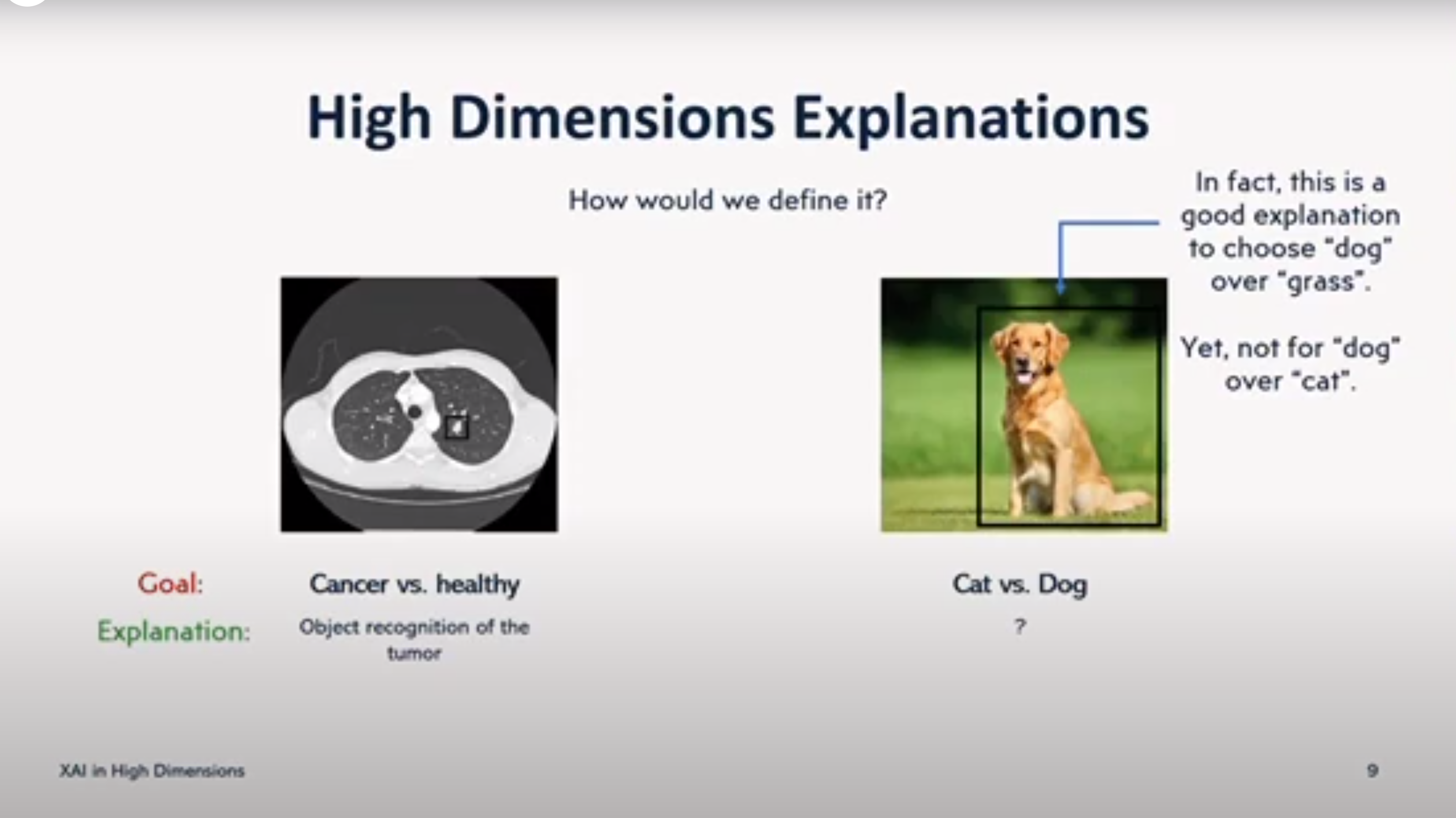

In this talk, we will discuss how to select the appropriate explanation method based on the intended purpose of the explanation.

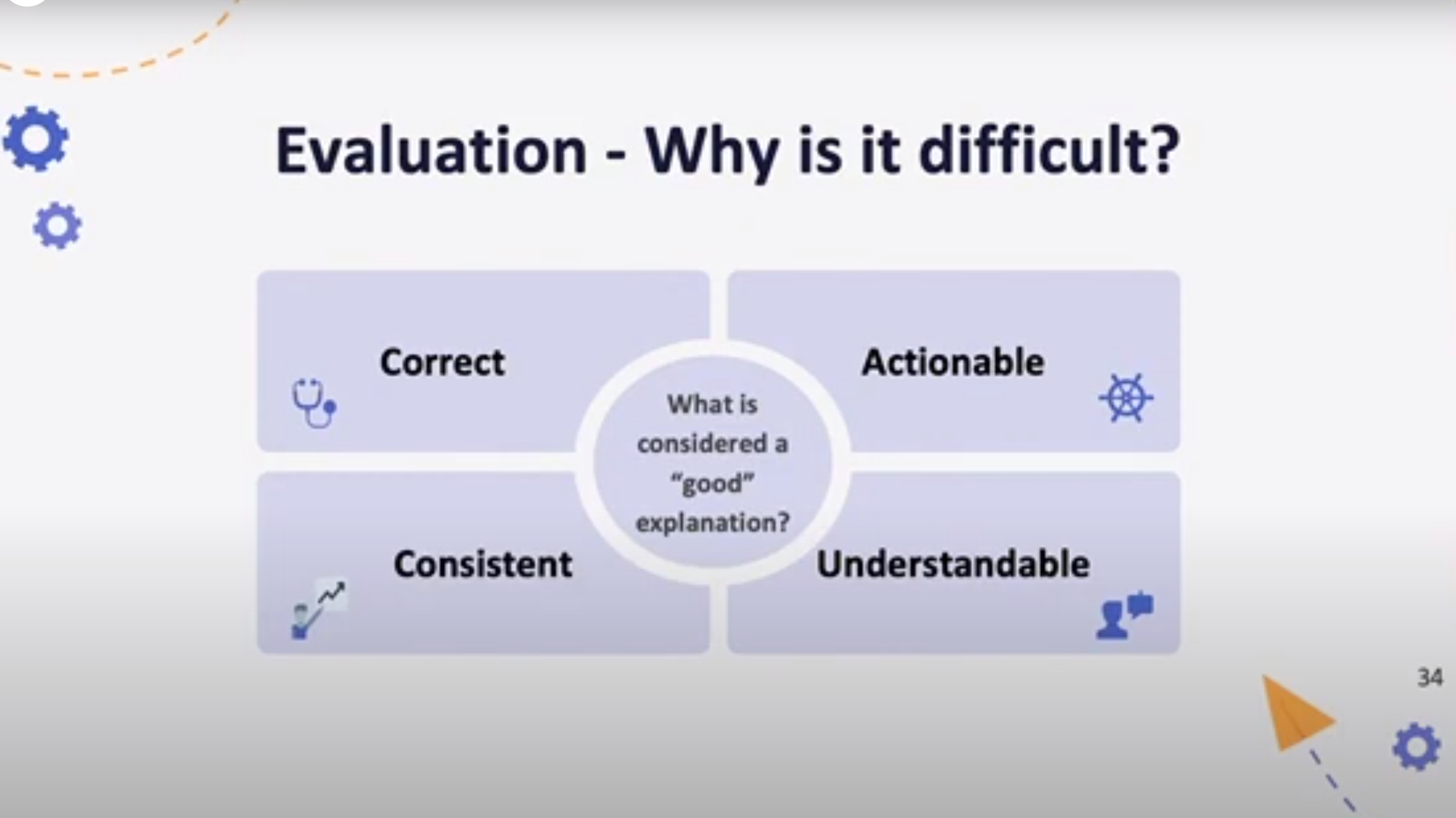

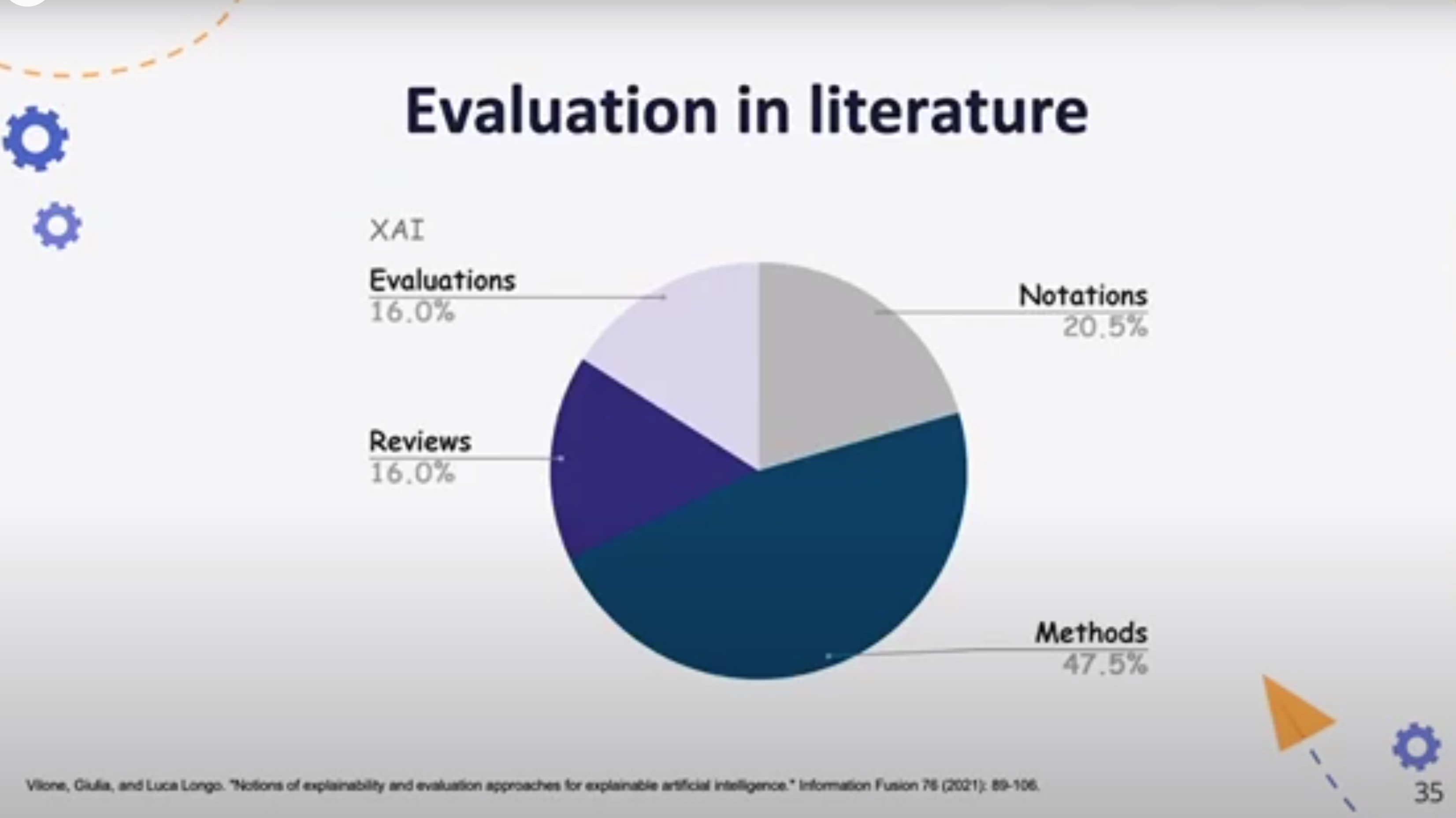

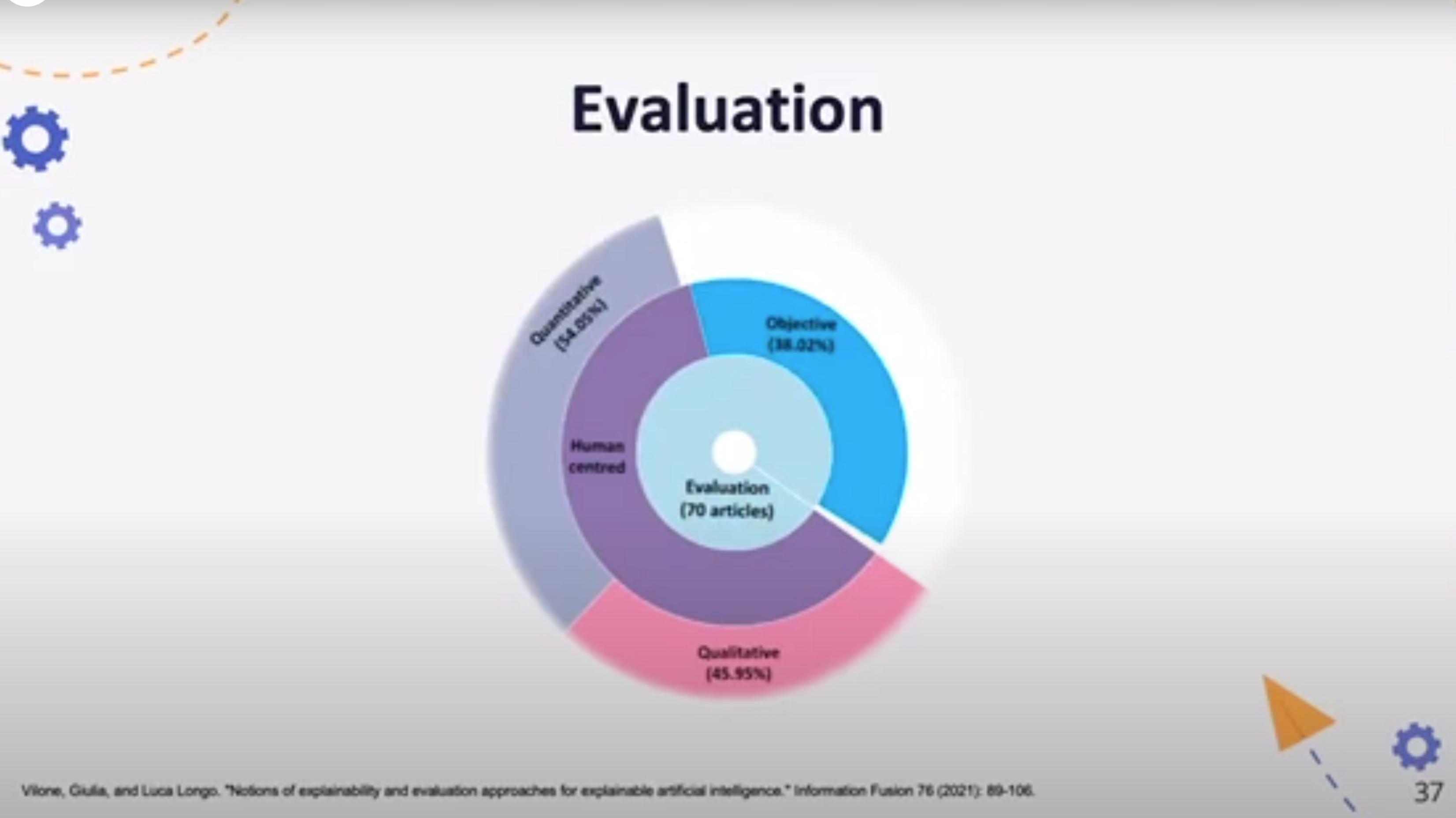

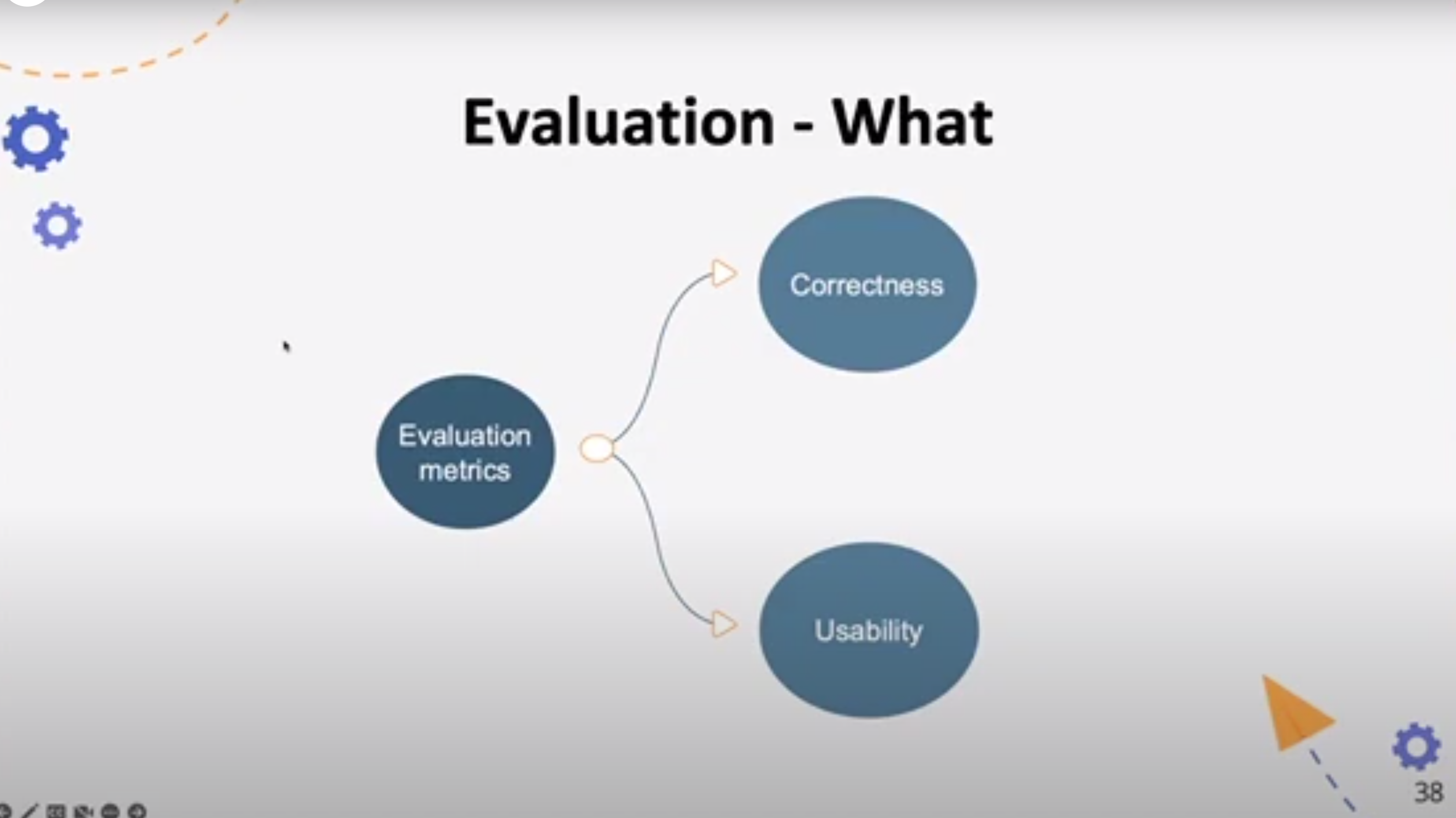

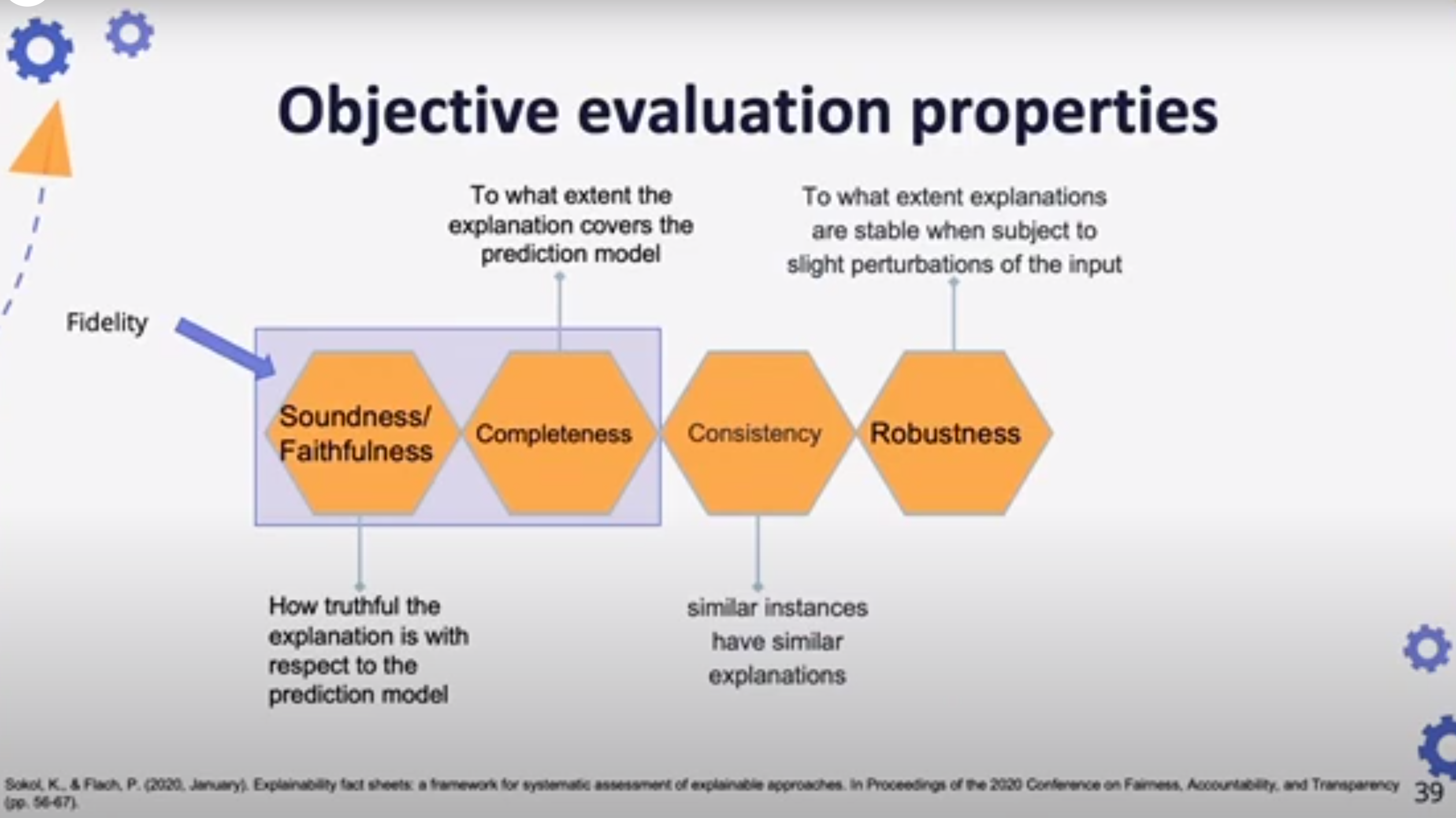

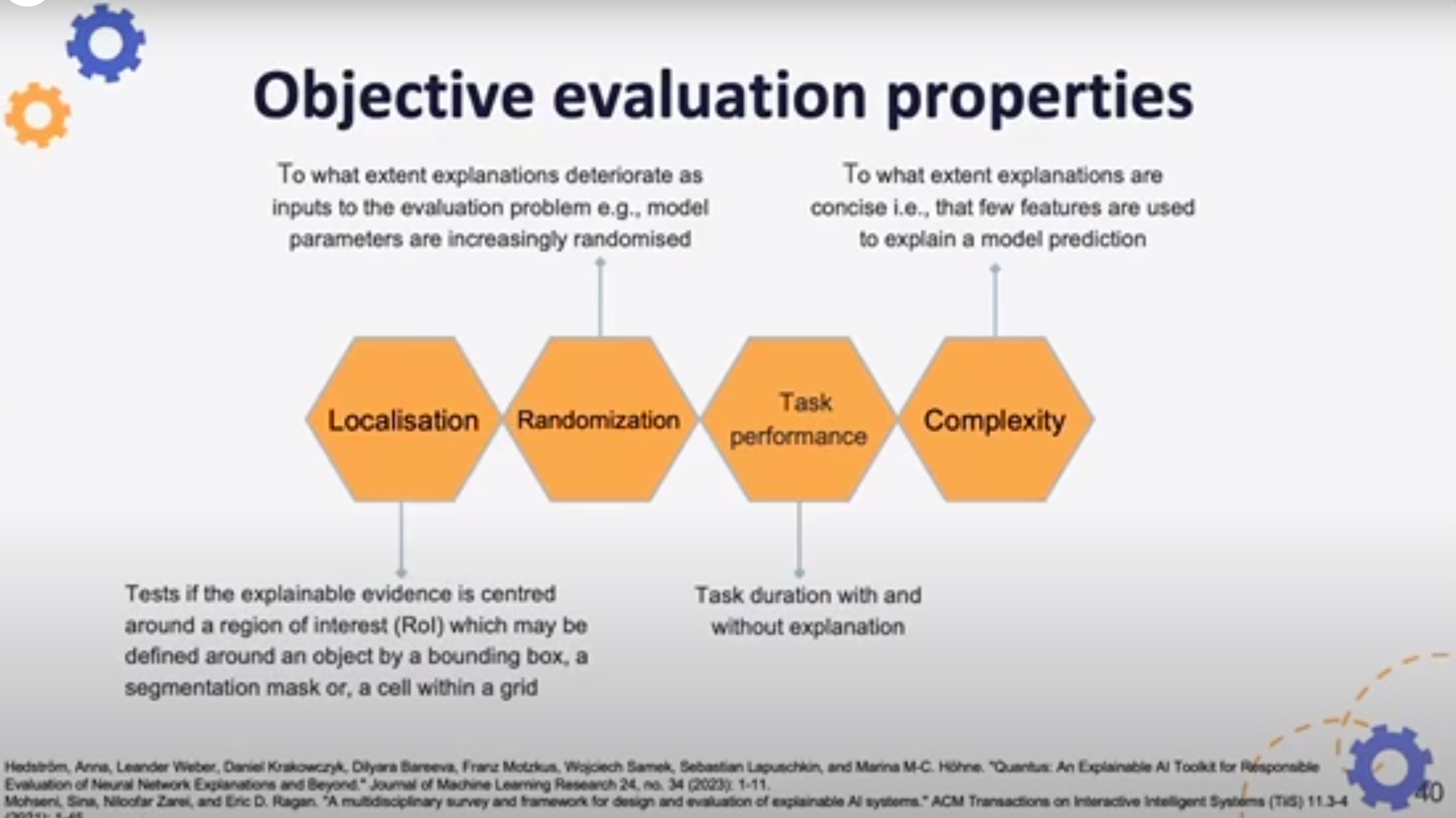

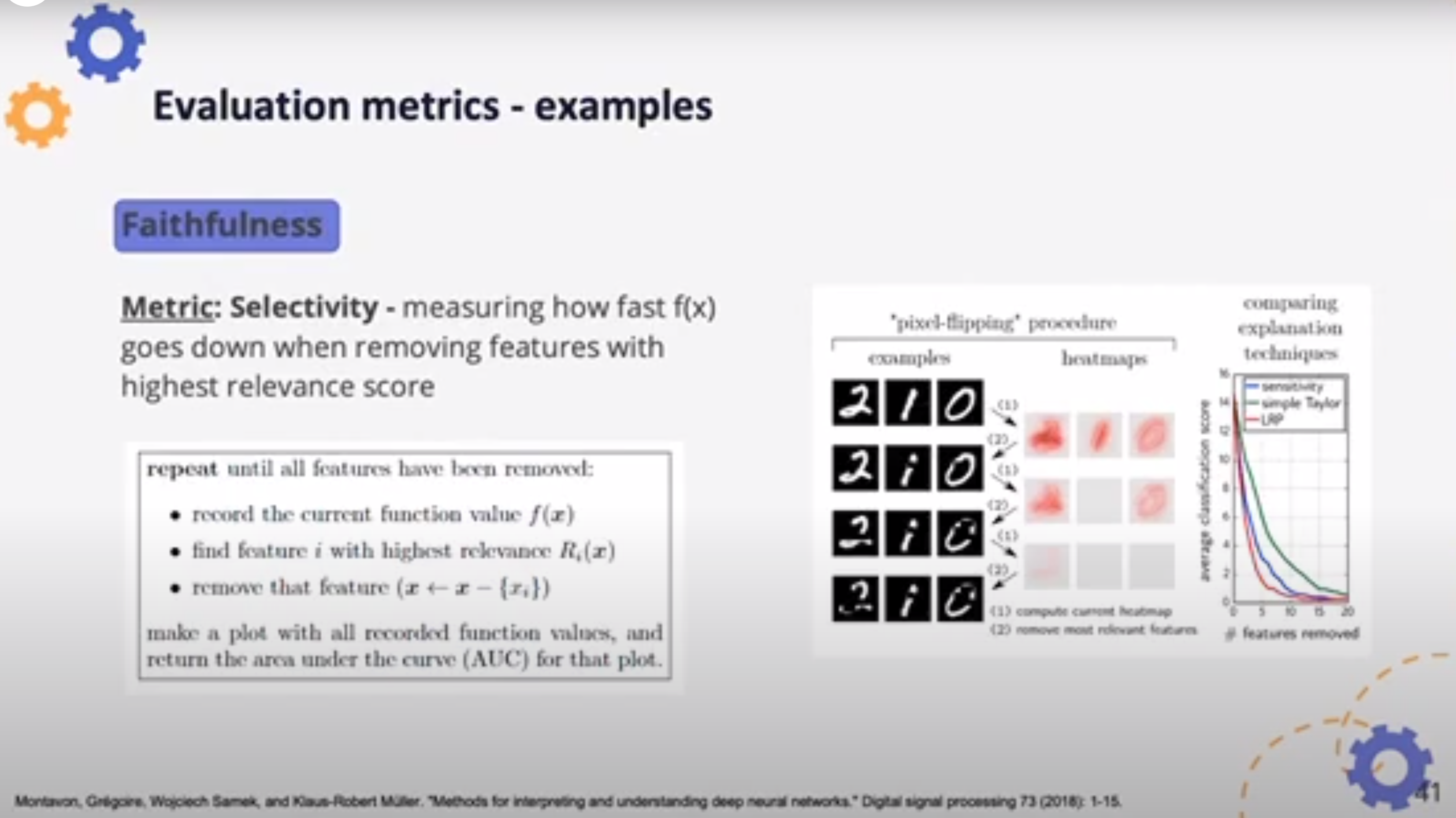

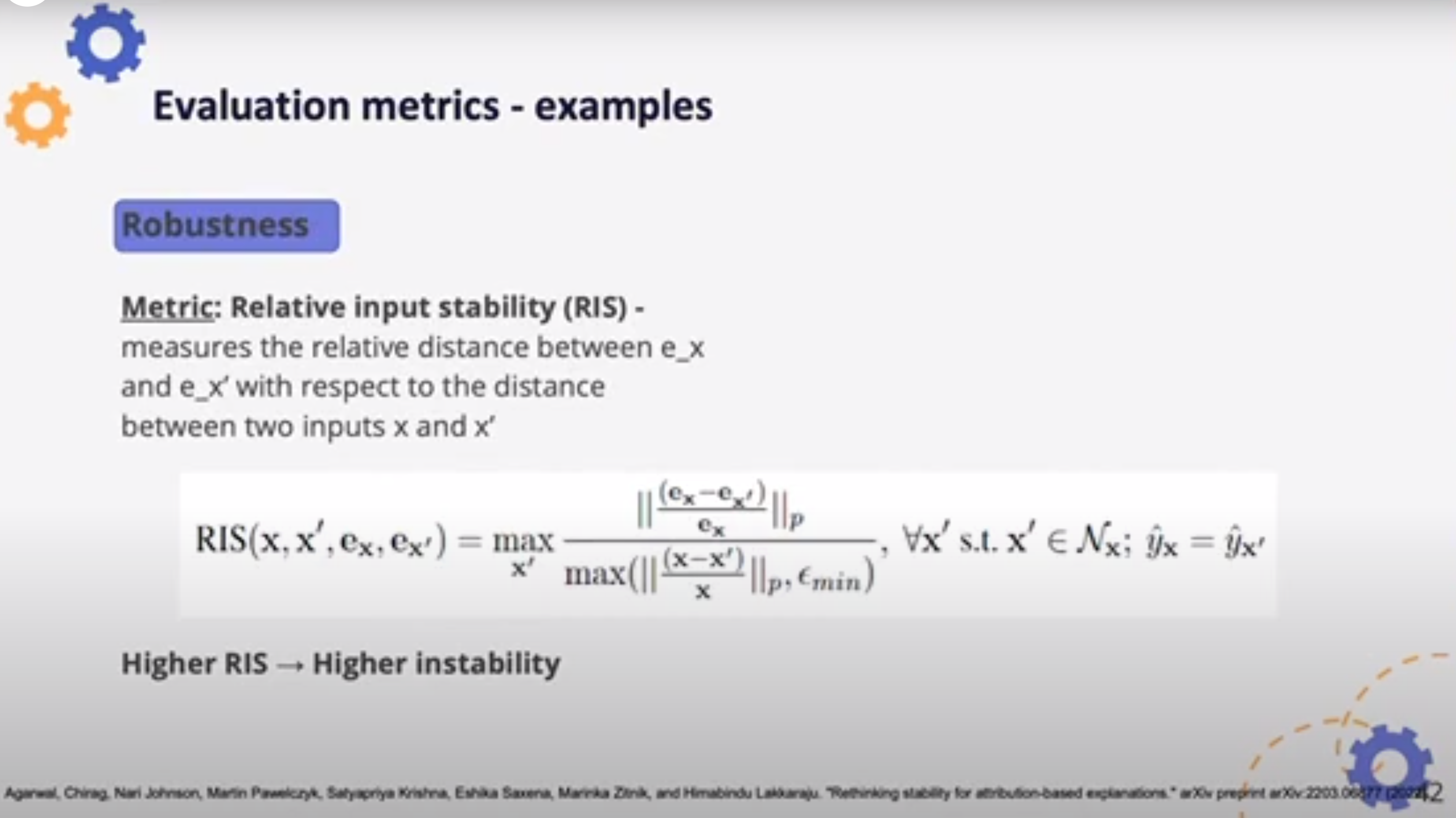

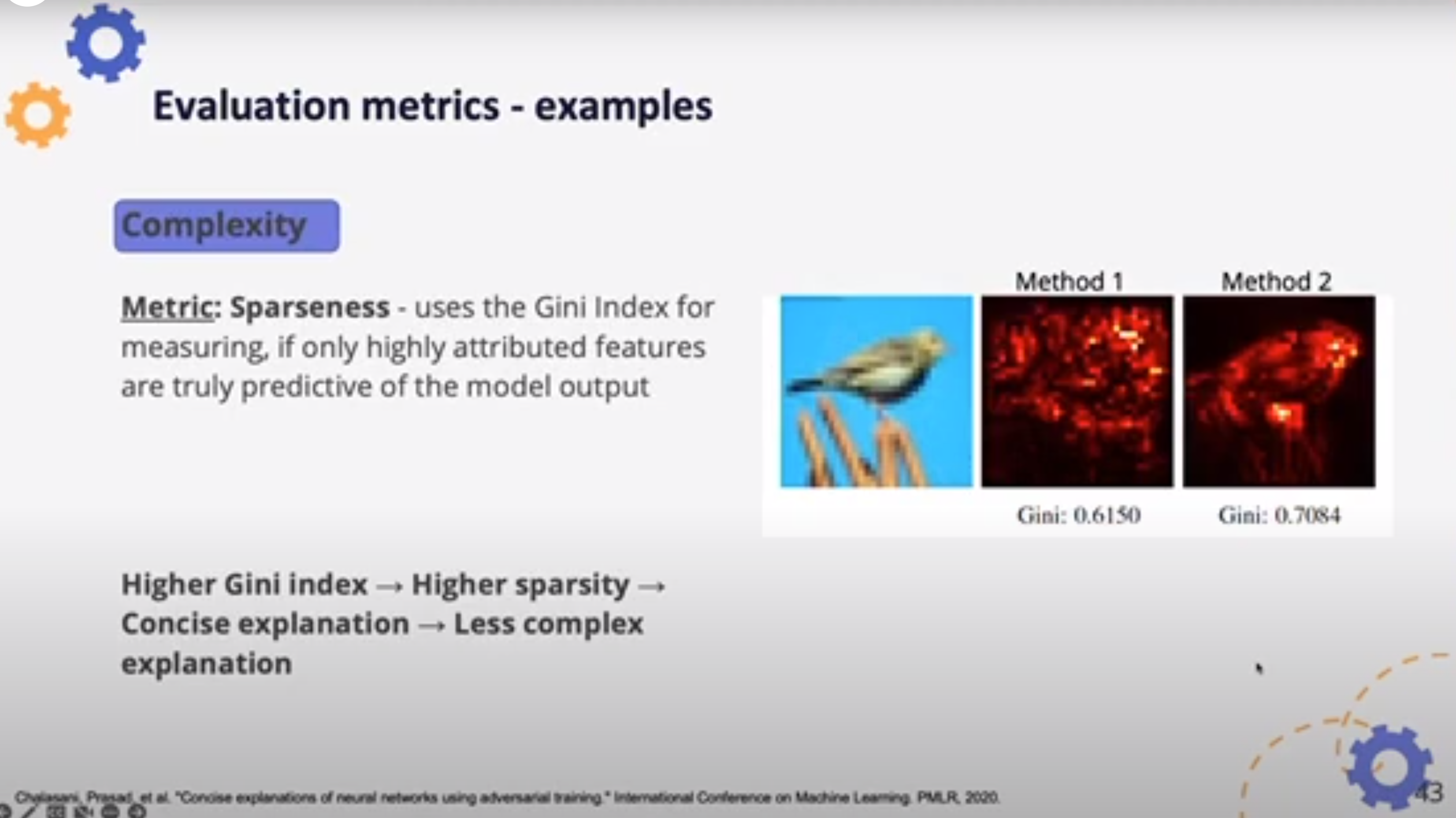

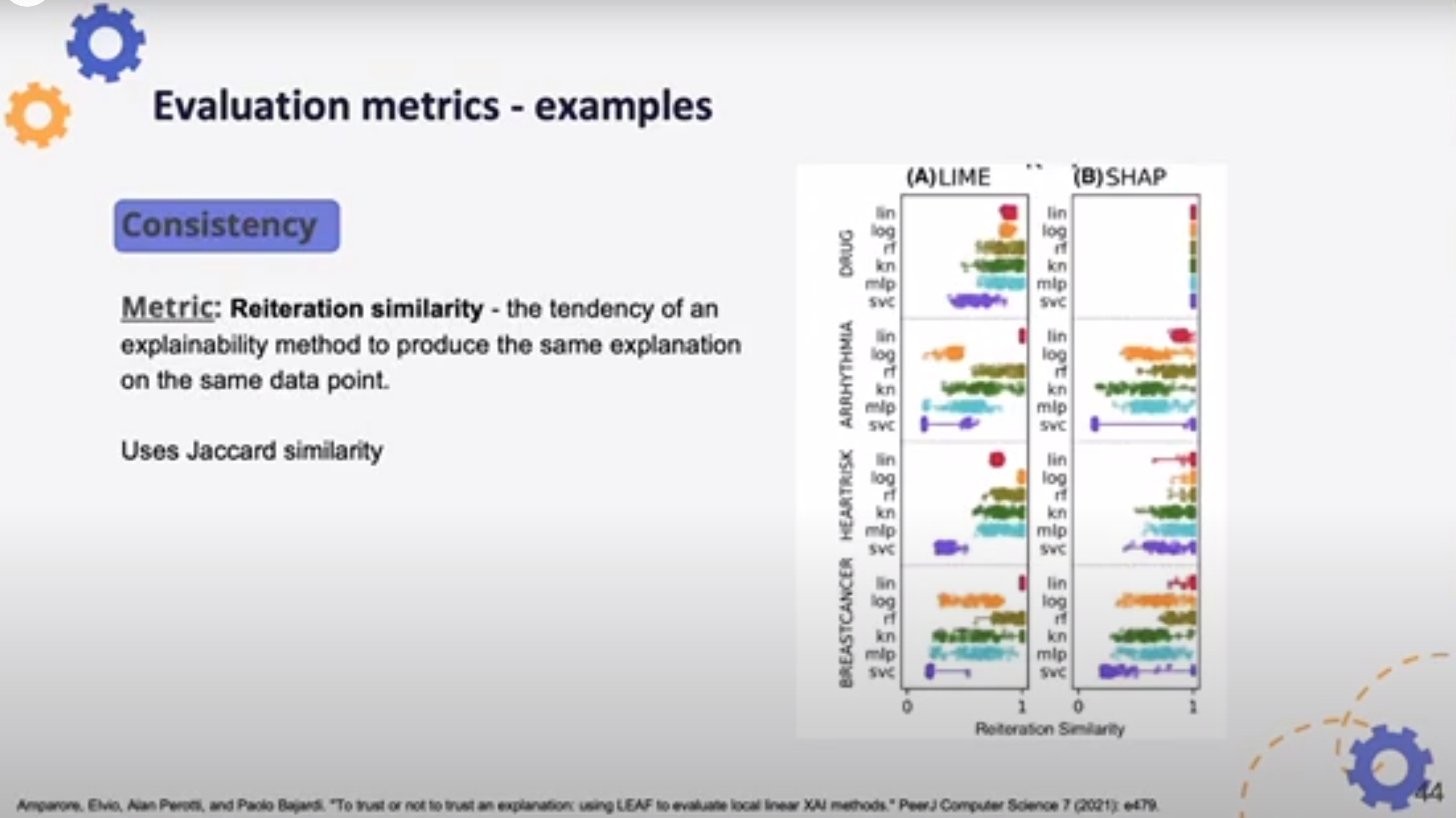

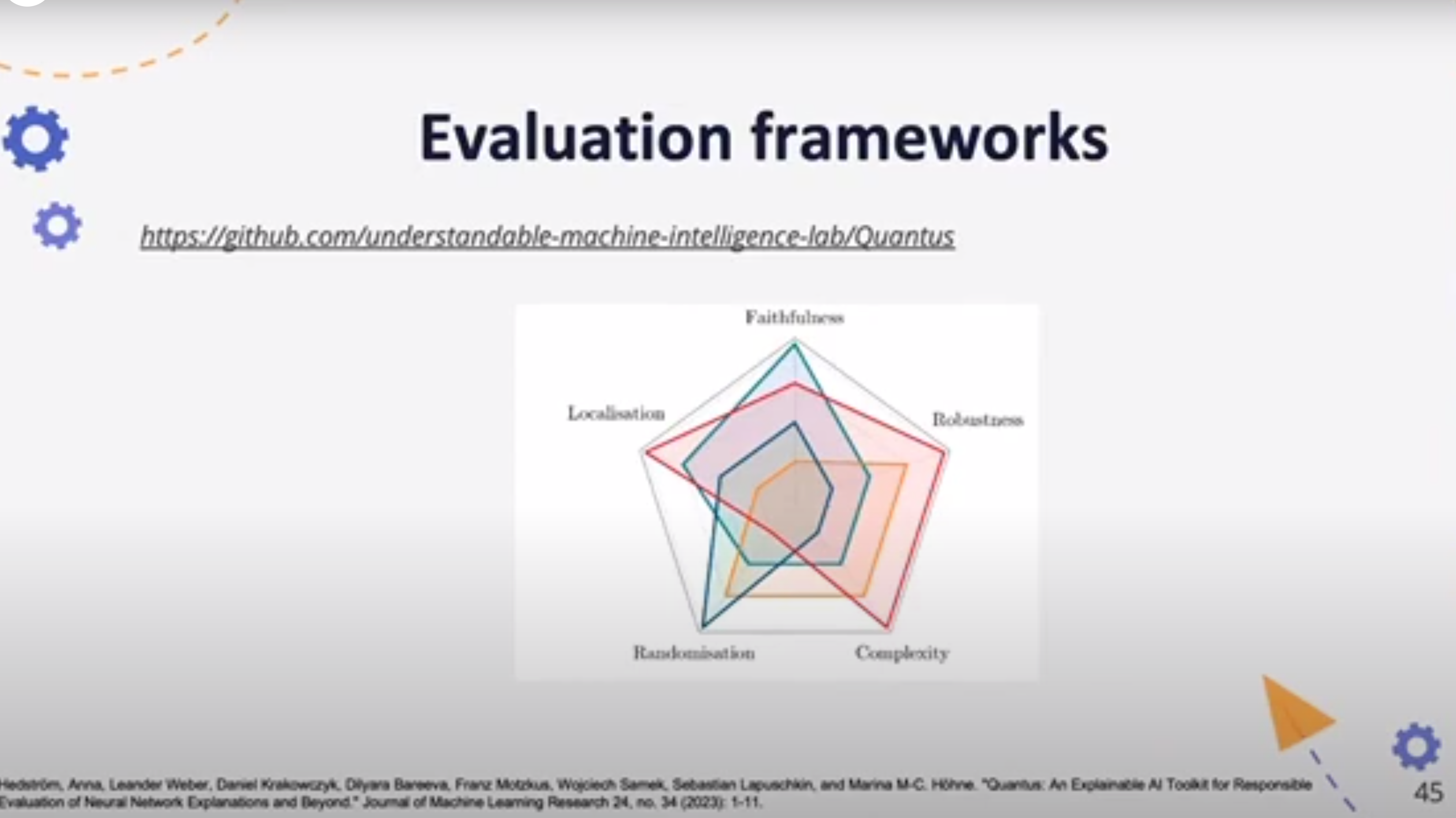

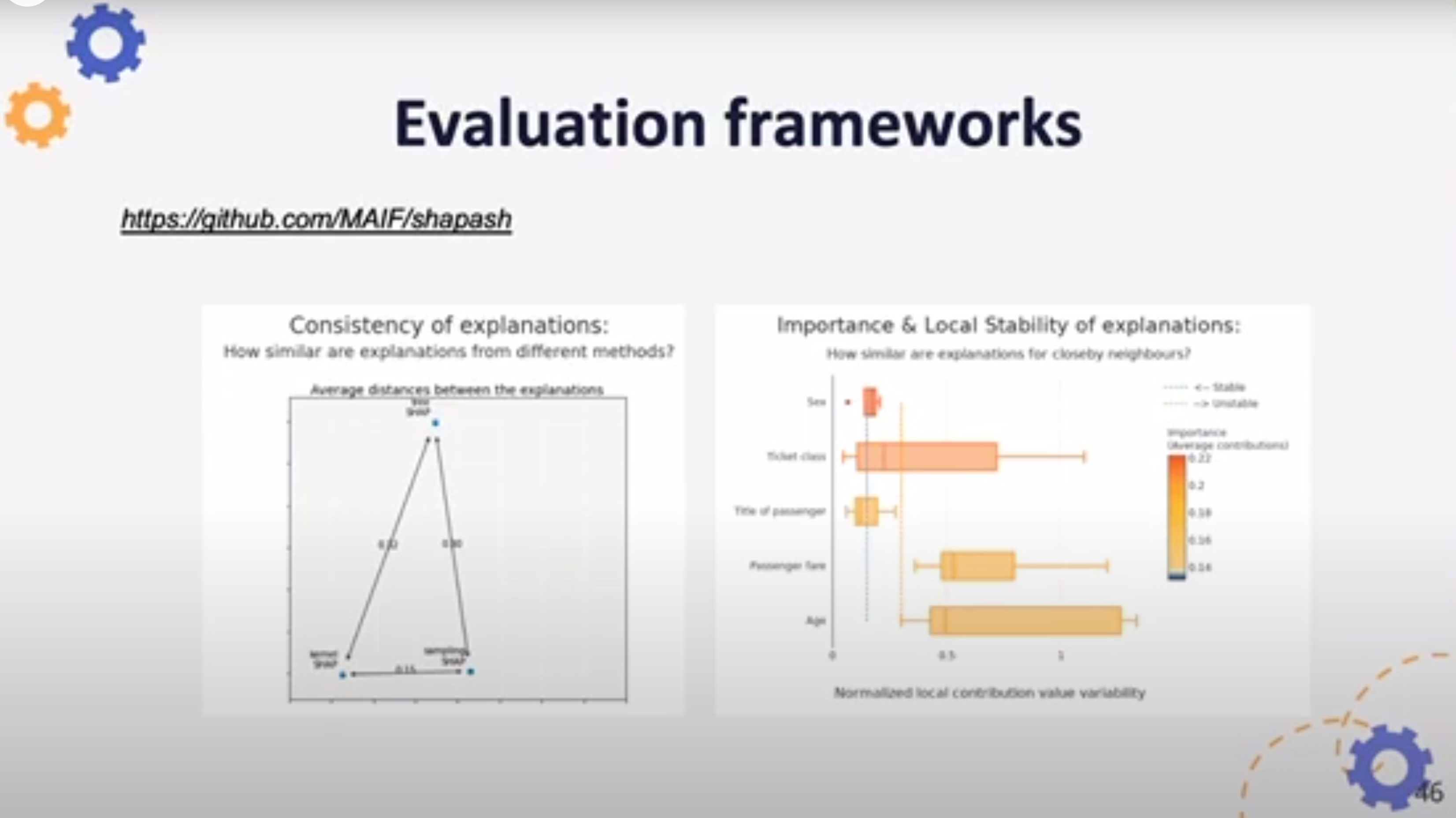

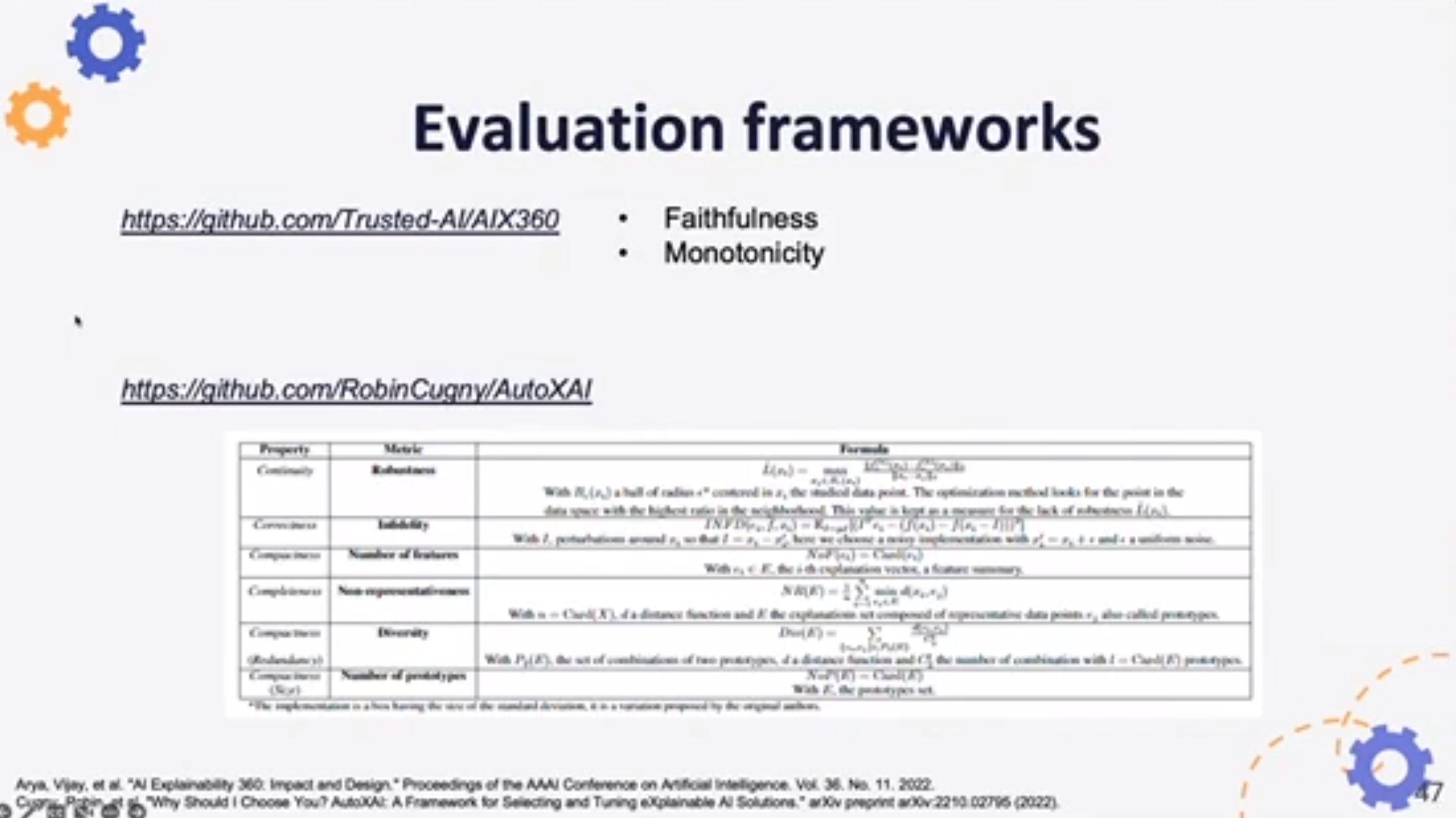

Then, we will present two approaches for evaluating explanations, including practical examples of evaluation metrics, while highlighting the importance of assessing explanation quality.

Next, we will examine the various purposes explanations can serve, along with the stage of the machine learning pipeline at which the explanation should be incorporated.

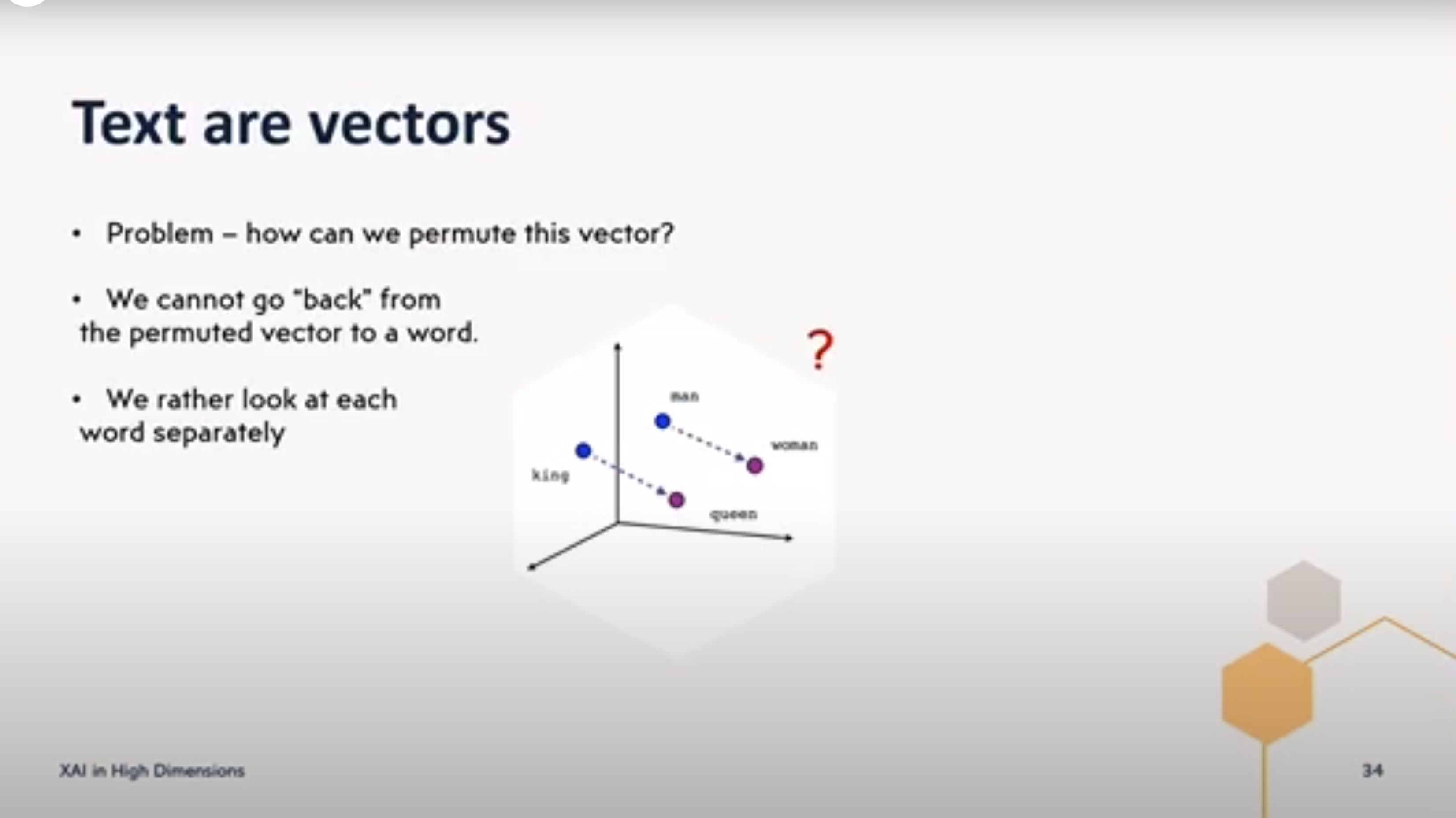

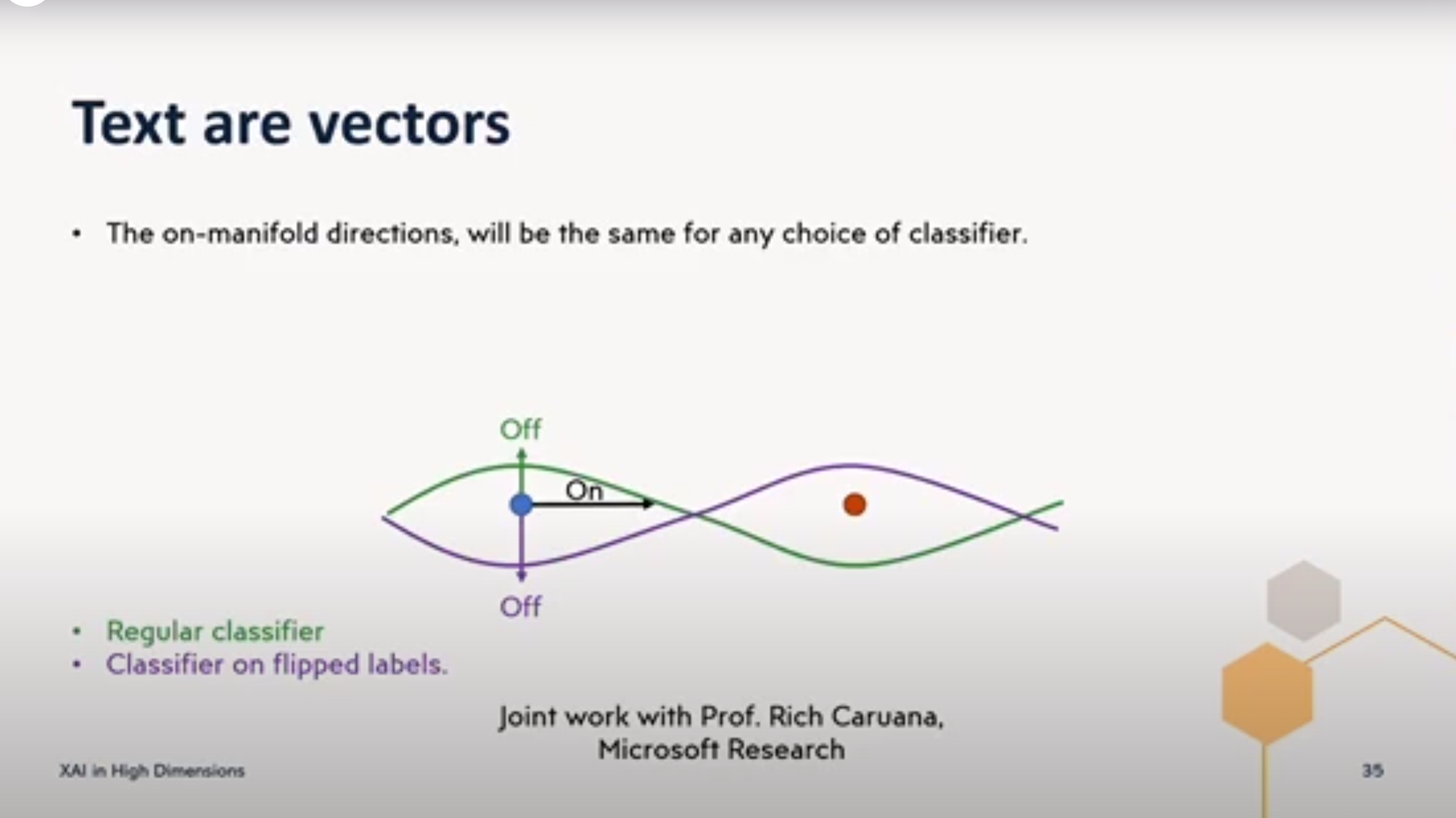

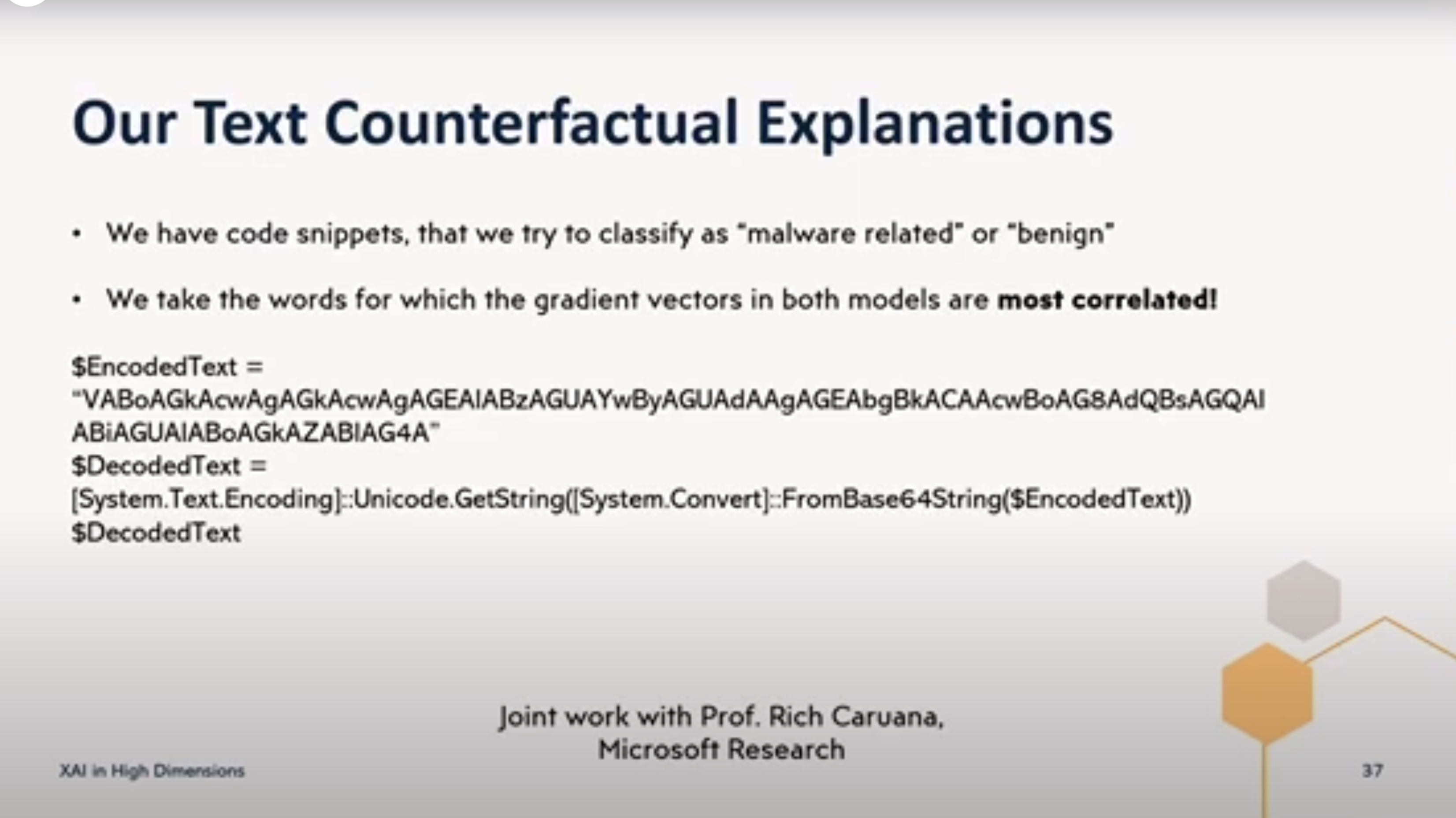

Finally, we will present a real use case at Microsoft, classifying scripts as malware-related, and show how we can benefit from high-dimensional explanations in this context.

Session Video

The task here was to identify bad insurance claims, e.g. when:

- the product was out of warranty
- the item was not insured
- the damage was not covered

The model flagged many claims that the insurance staff had not, and they were skeptical.

The data scientist was put on the spot and needed local explanations.

So we have some use cases. How do we do it?

Adding post-hoc XAI to an ML project

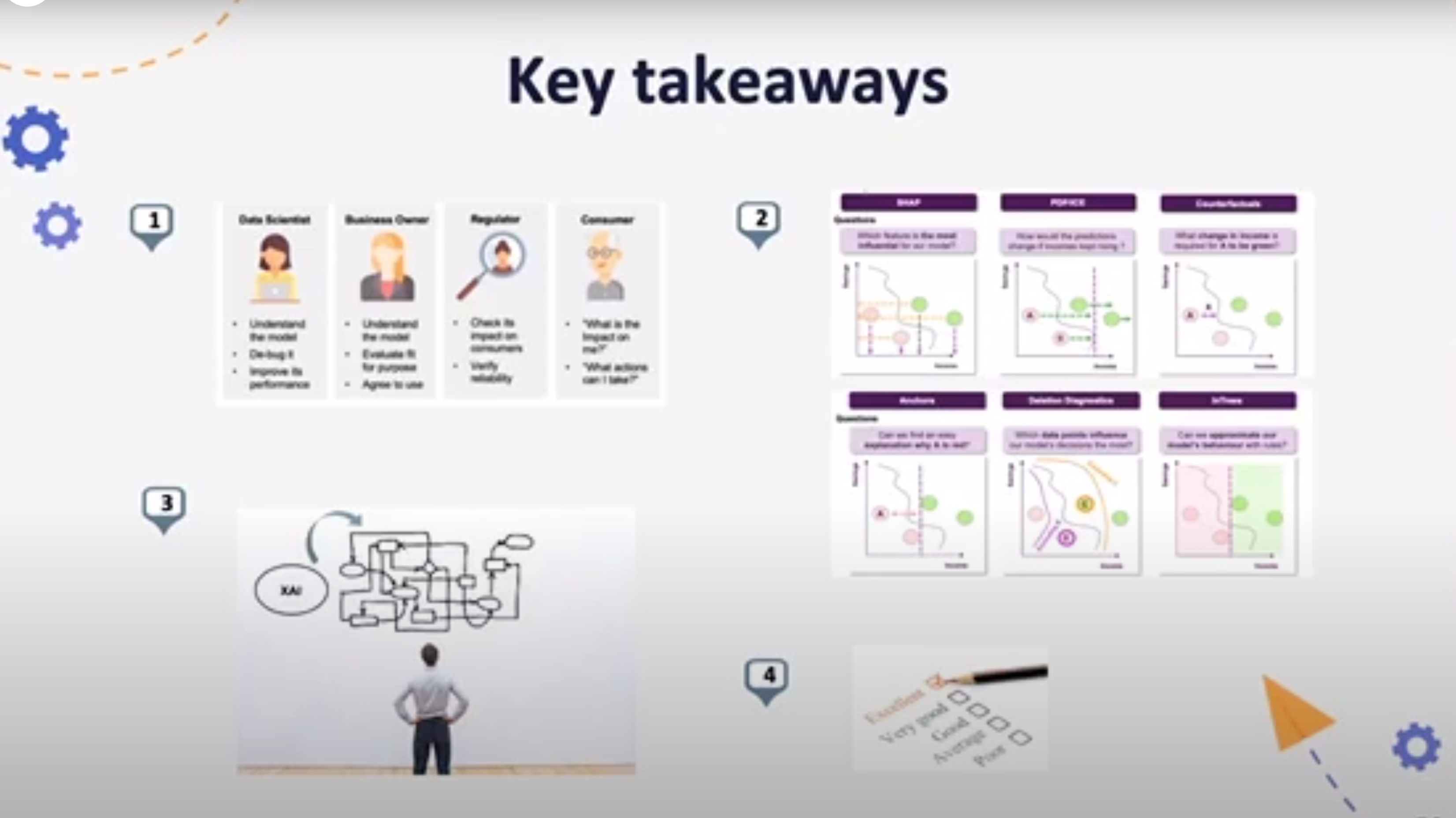

the idea is a process with 5 steps:

- understand stakeholder - i.e. the end users of the project

- identify the goals of using explanations

- chose XAI method that fits with the project properties

- implication - taking the goal one step further

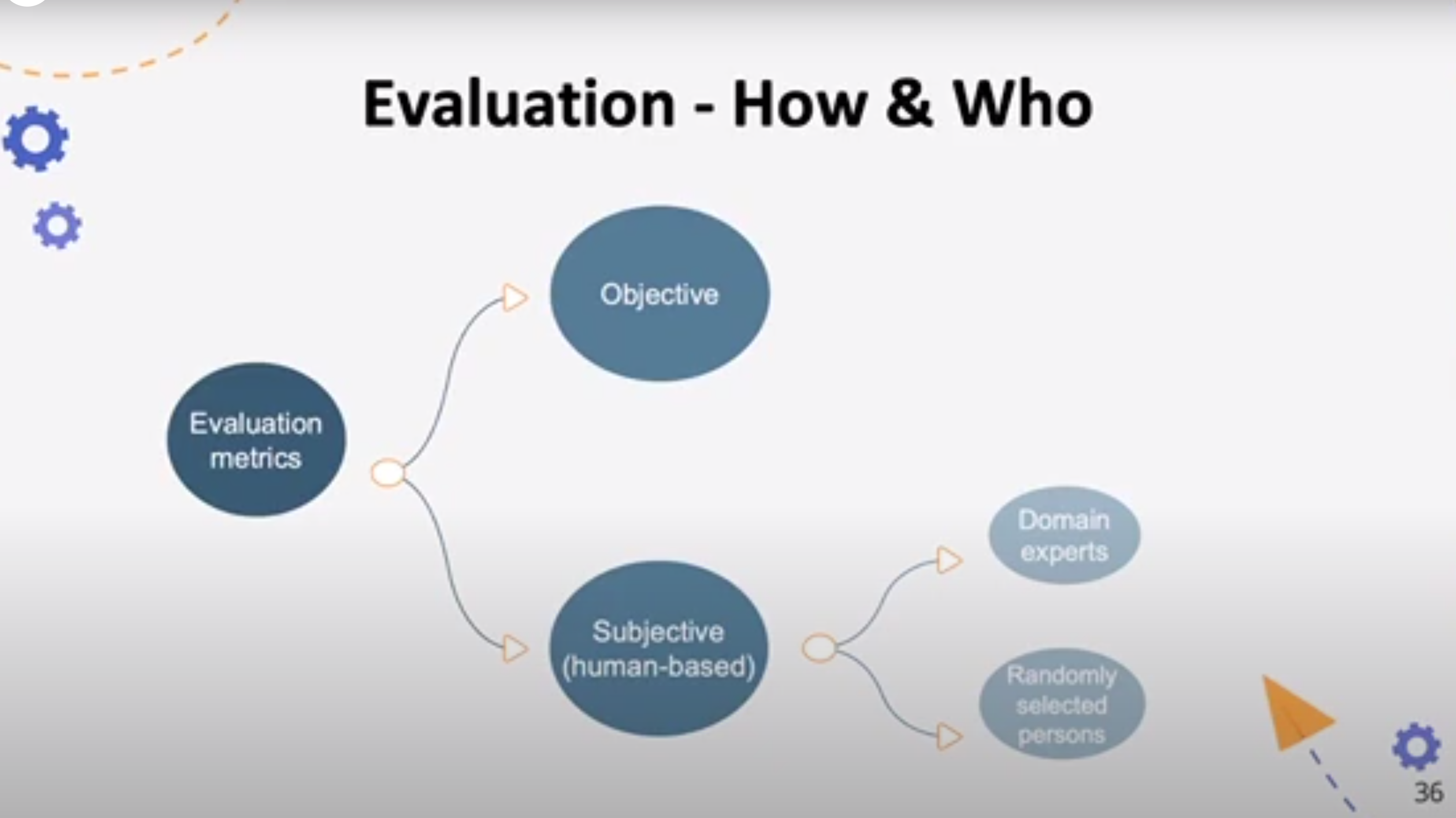

- decide on an evaluation metrics

1. Who are the explanations for?

Different stakeholders have different needs from an ML model. Once we have captured these needs, we can decide on a suitable strategy for providing an explanation. Understanding these needs also helps in selecting the metrics used to determine how effective the explanation is.

| Stakeholder | Their Goal |
|---|---|
| Data scientists | Debug and refine the model |
| Decision makers | Assess model fit to a business strategy |
| Model risk analysts | Assess the model’s robustness |
| Regulators | Inspect the model’s reliability and impact on customers |
| Consumers | Transparency into decisions that affect them |

Explanation goals

understanding predictions

model debugging and verification

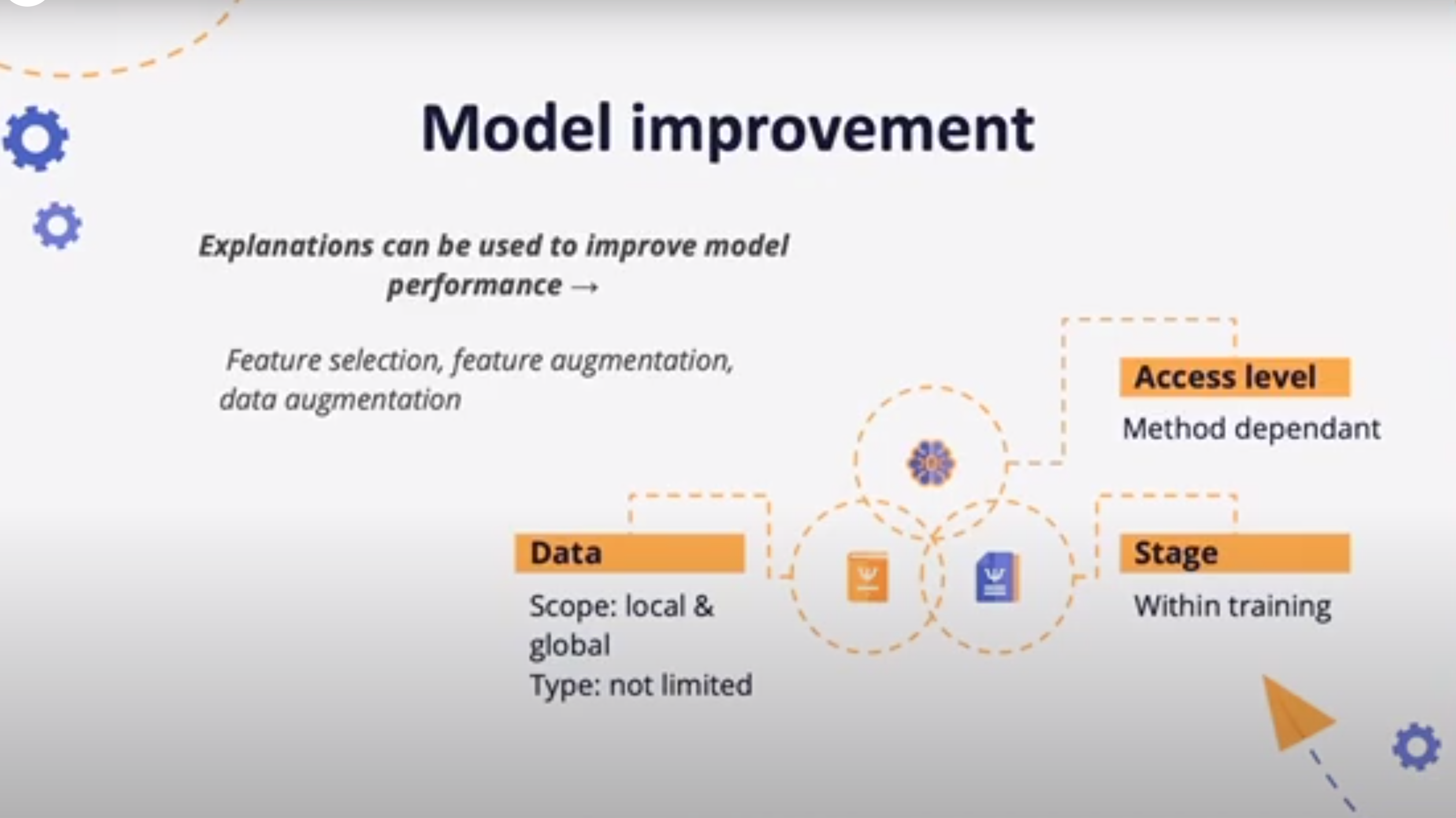

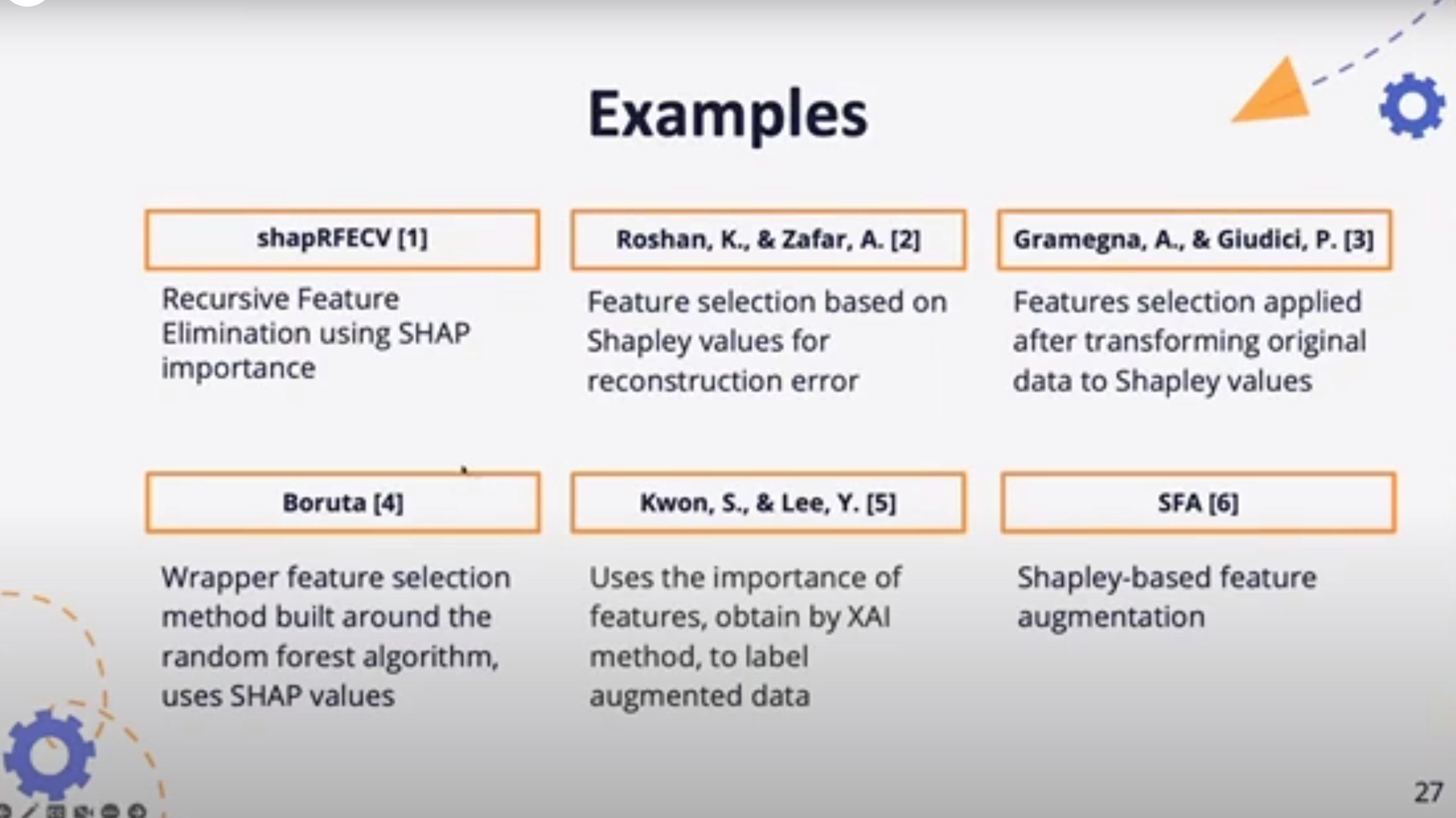

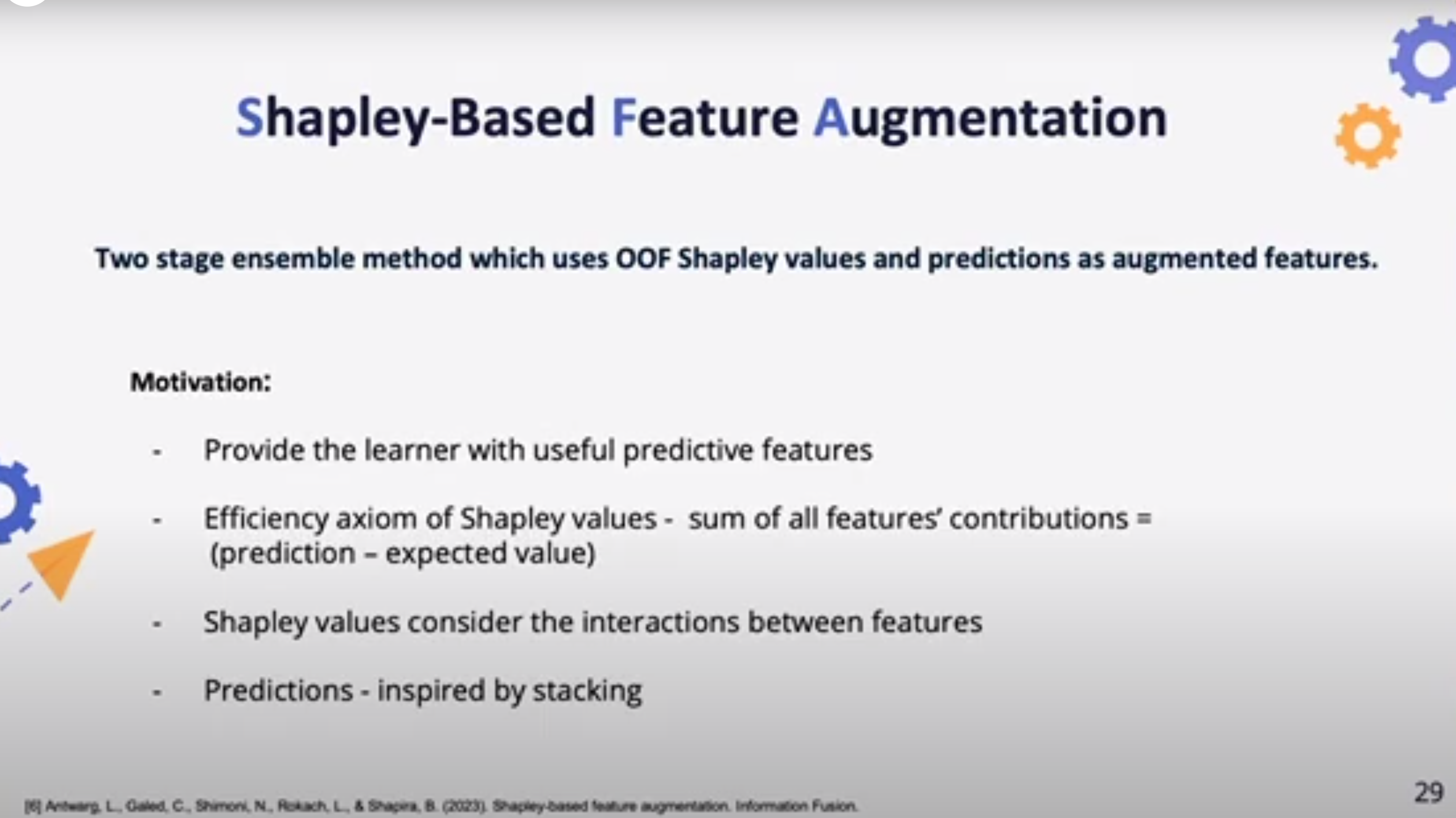

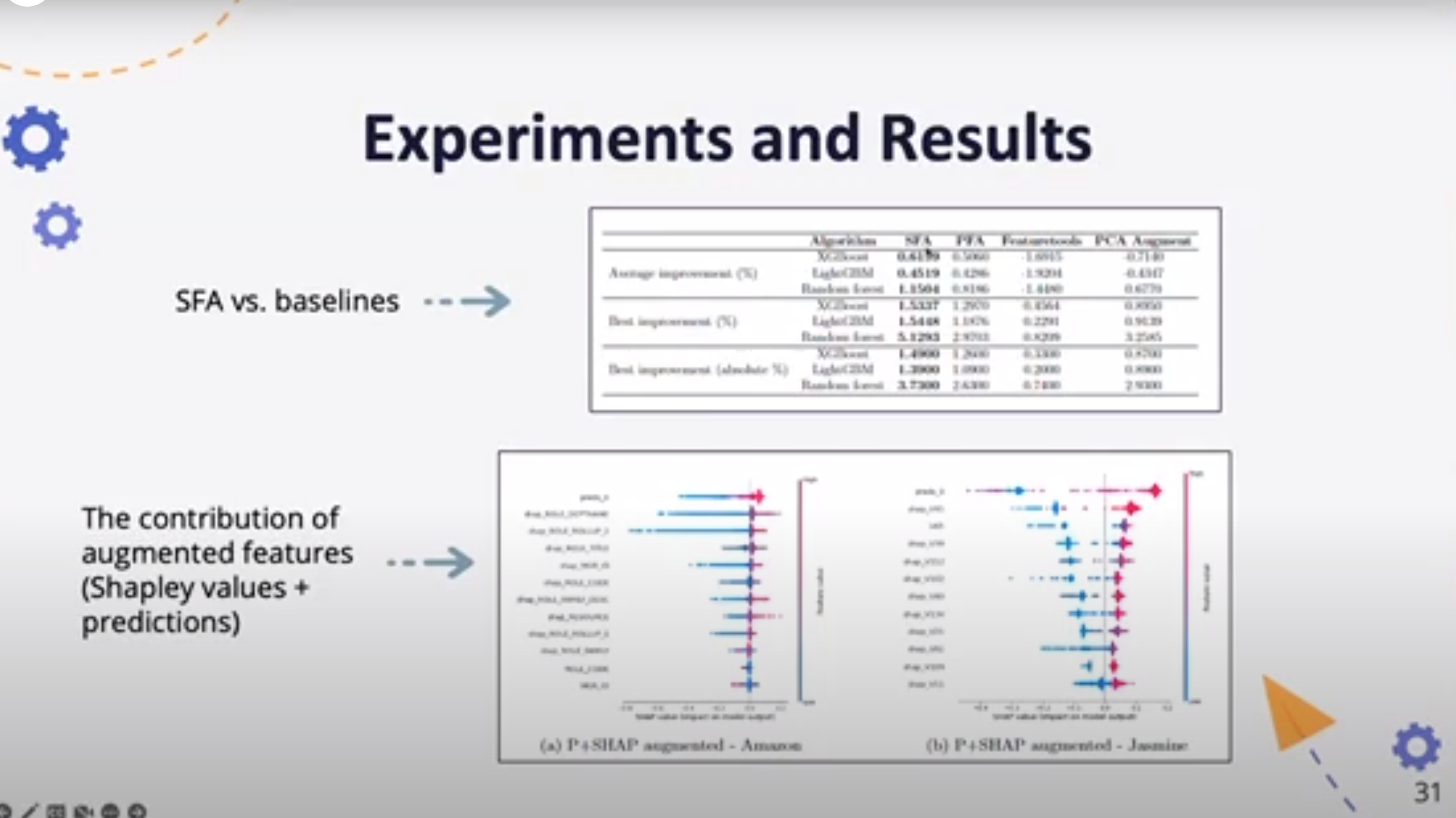

improving performance

increasing trust

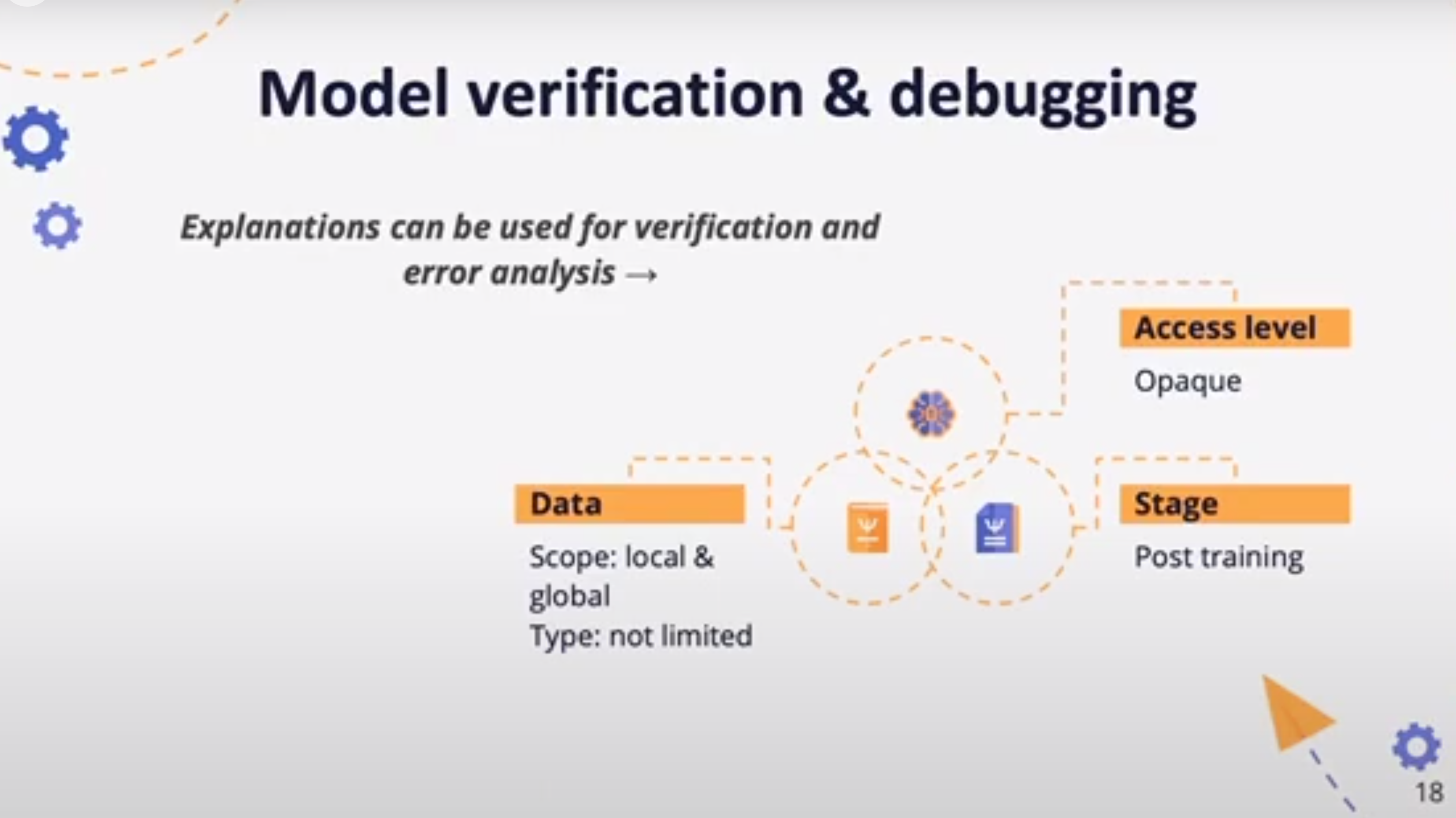

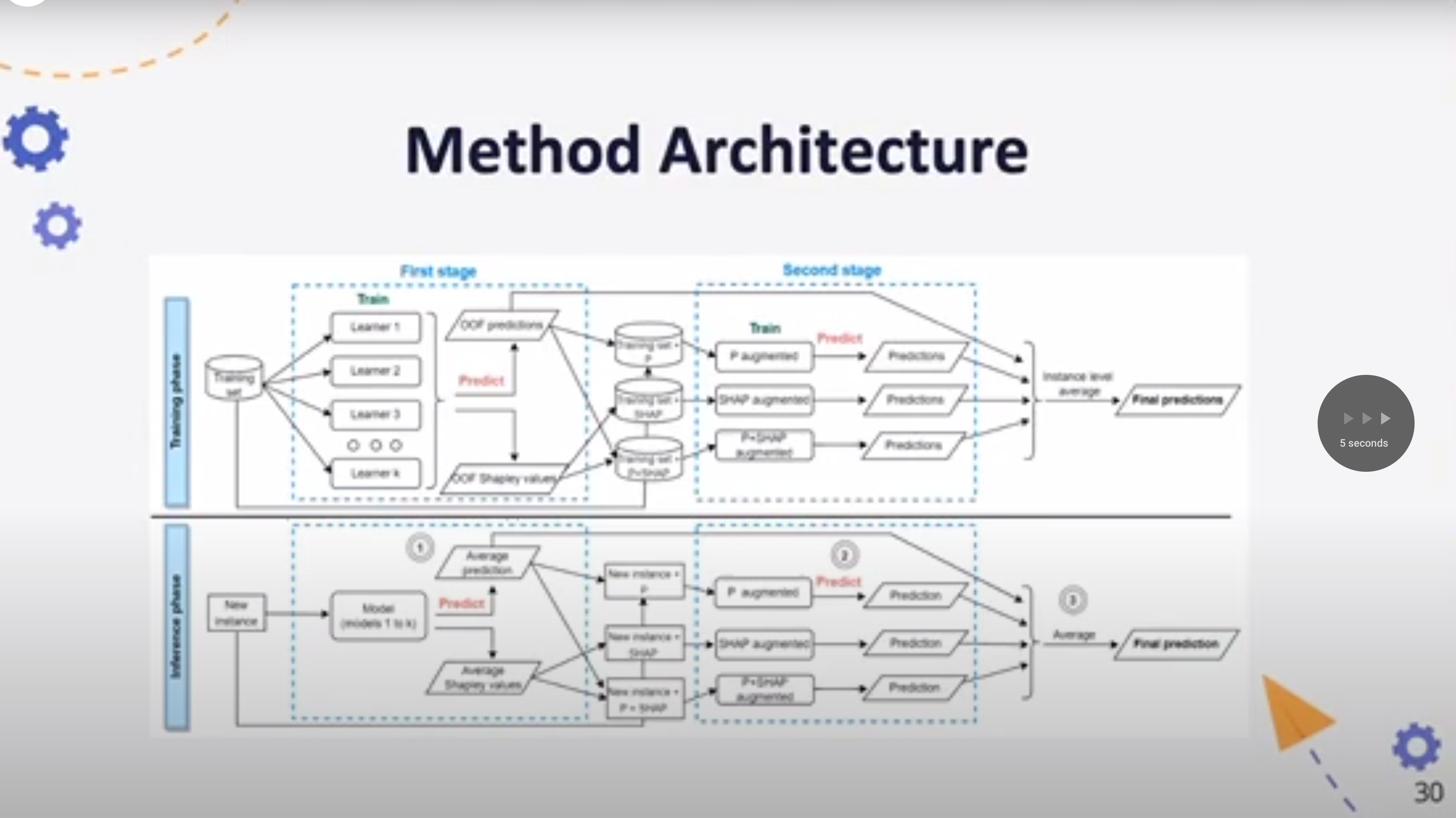

XAI method selection

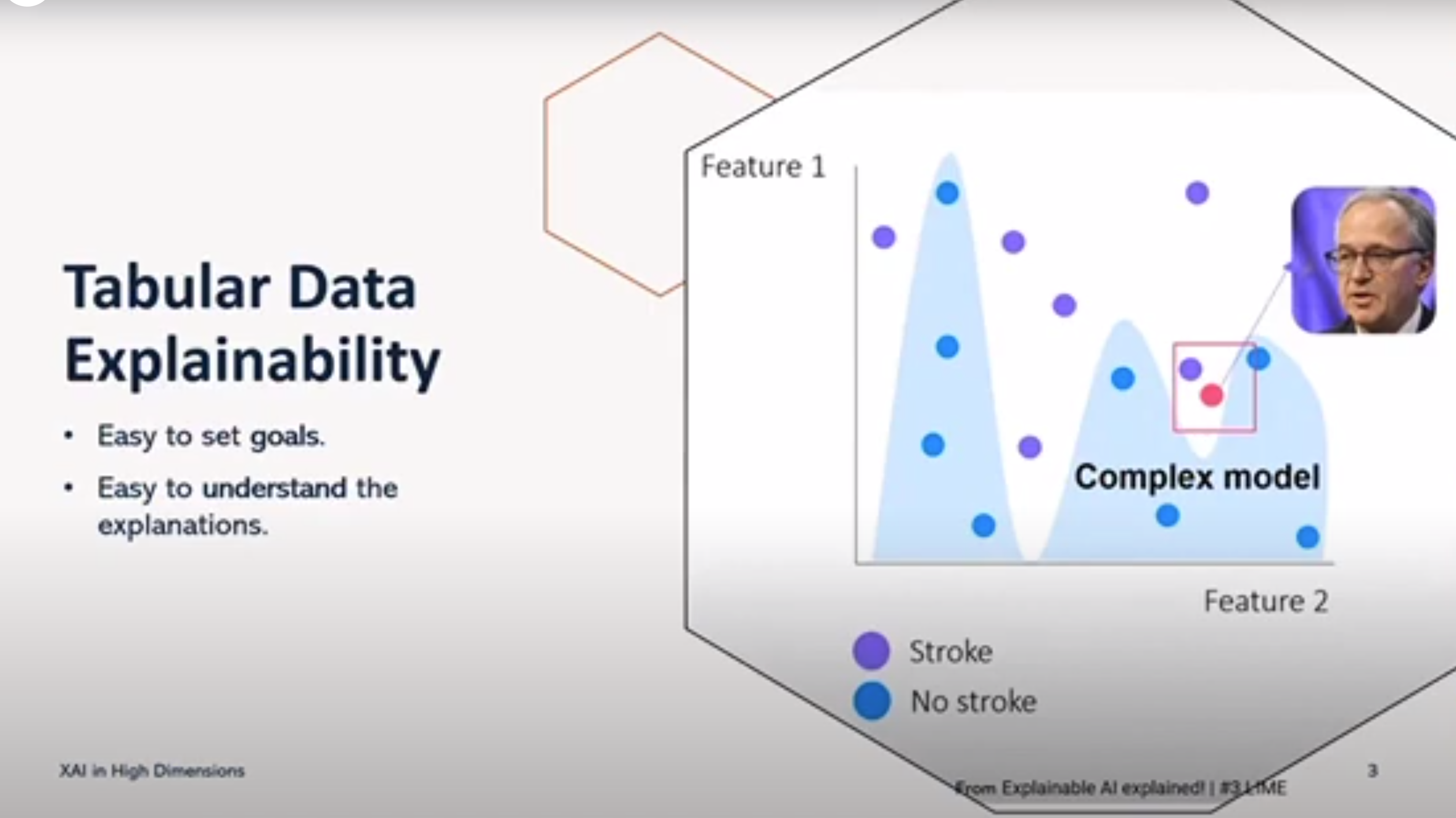

- Data
  - What is the scope of the explanation?
  - What is the data type?
  - What output are we trying to explain?
- Access level
  - Can the explainer access the model?
  - Can the explainer access the data?
- Stage
  - At what stage does the model require the explanation?

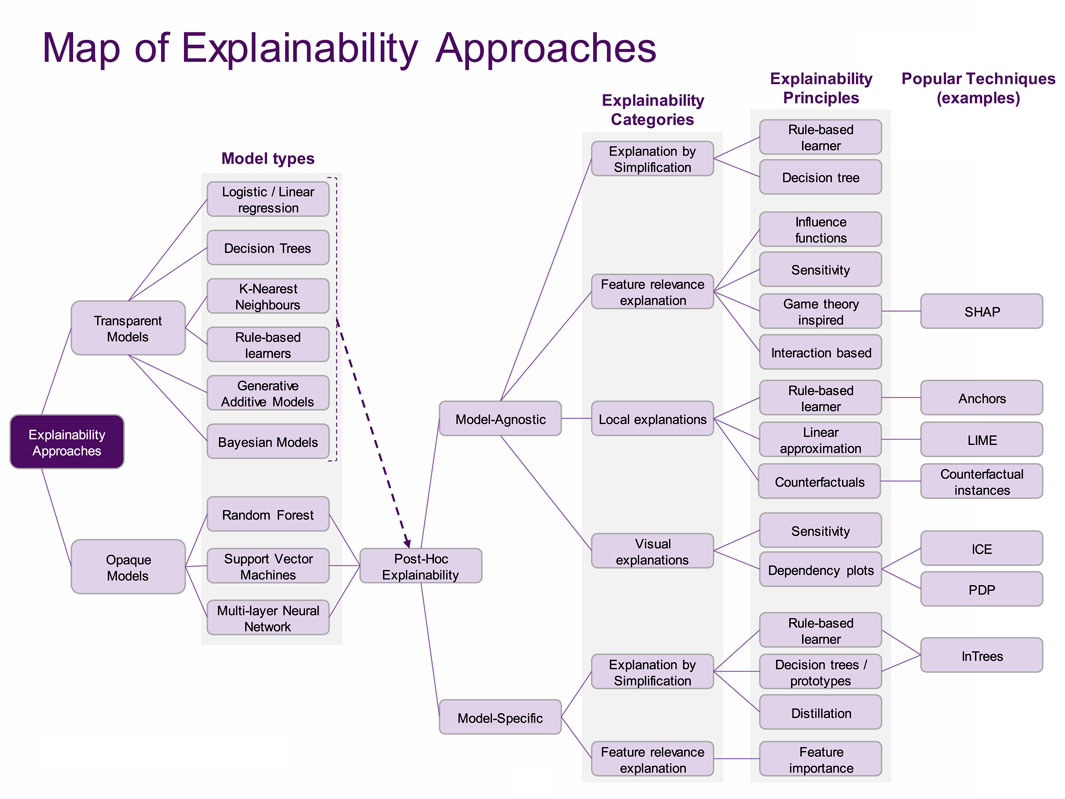

Explanation methods map

How do we pick the correct approach to explaining the model?

The following figure, from (Belle and Papantonis 2021), can help us pick one:

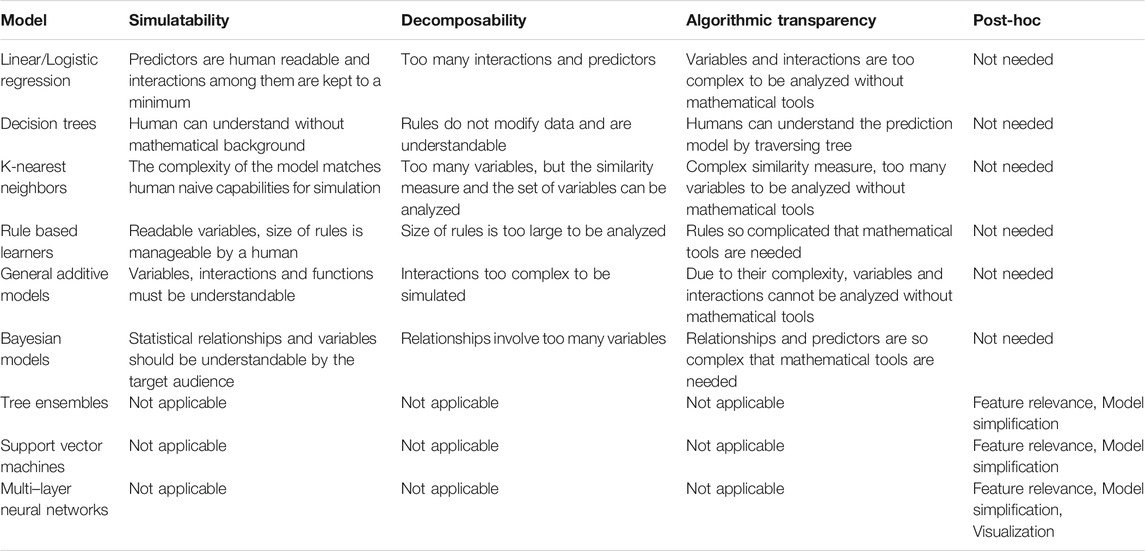

Anchors and InTrees were not discussed earlier in the course.

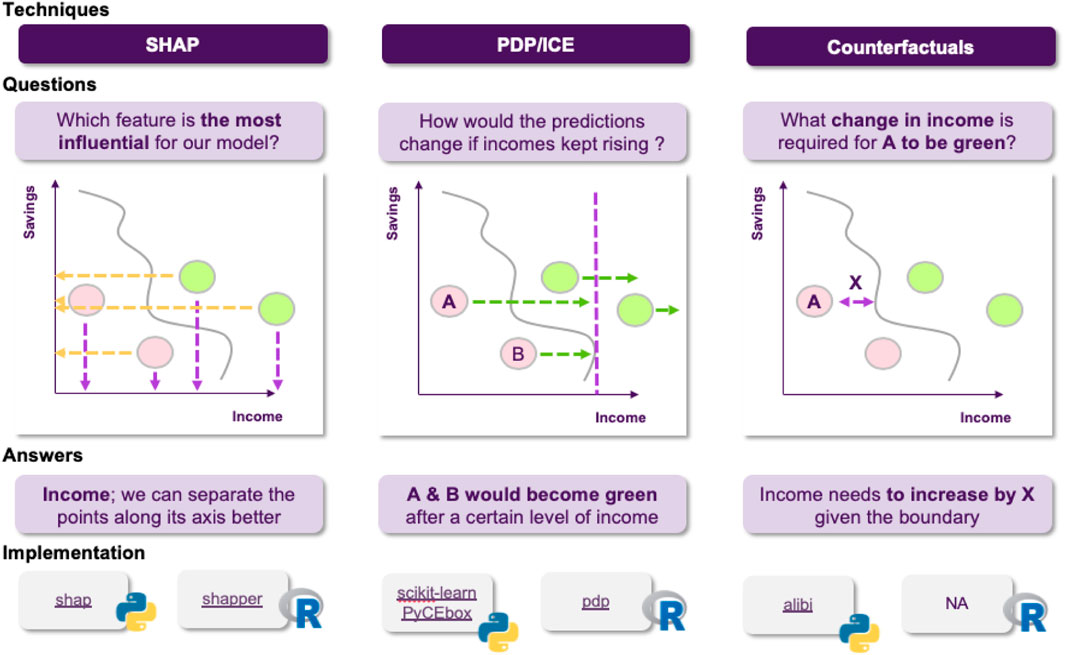

We covered SHAP, which treats a prediction as a cooperative game among the features and attributes to each feature its contribution to that prediction.
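To make the cooperative-game idea concrete, here is a minimal sketch of exact Shapley values for a single instance. It is not the SHAP library: `toy_model`, the all-zeros `baseline`, and the convention of filling "absent" features from the baseline are assumptions chosen for illustration, and the enumeration over all feature orderings is only feasible for a handful of features.

```python
from itertools import permutations

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance by enumerating all feature orderings.

    Features that have not yet joined the coalition take their value from
    `baseline`. Cost grows factorially with the number of features.
    """
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = x[i]              # feature i joins the coalition
            value = predict(current)
            phi[i] += value - previous     # marginal contribution of feature i
            previous = value
    return [p / len(orderings) for p in phi]

def toy_model(v):
    # Hypothetical scoring function with an interaction between features 0 and 1.
    return 2.0 * v[0] + 1.0 * v[1] + 3.0 * v[0] * v[1] - 0.5 * v[2]

x = [1.0, 1.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)  # the contributions sum to toy_model(x) - toy_model(baseline)
```

The interaction term is split evenly between features 0 and 1, and the contributions add up to the difference between the prediction and the baseline prediction.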

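PDP / ICE: show how the model’s prediction changes as one feature is varied (averaged over the data for PDP, per instance for ICE), which lets us identify the point at which the predicted class switches.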
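As a rough illustration of PDP and ICE, the sketch below sweeps one feature over a grid while holding the others fixed. The threshold model `toy_classifier`, the three rows, and the grid are hypothetical; real projects would typically use a library implementation instead.

```python
def ice_curves(predict, rows, feature, grid):
    """One curve per row: predictions as `feature` sweeps over `grid`, other features fixed."""
    curves = []
    for row in rows:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves

def partial_dependence(curves):
    """The PDP is the pointwise average of the ICE curves."""
    return [sum(column) / len(curves) for column in zip(*curves)]

def toy_classifier(v):
    # Hypothetical model: predicts class 1 when a weighted sum exceeds a threshold.
    return 1.0 if v[0] + 0.5 * v[1] > 2.0 else 0.0

rows = [[0.0, 0.0], [0.0, 2.0], [0.0, 4.0]]
grid = [0.0, 1.0, 2.0, 3.0]
curves = ice_curves(toy_classifier, rows, feature=0, grid=grid)
pdp = partial_dependence(curves)
for row, curve in zip(rows, curves):
    print(row, curve)   # each ICE curve switches class at a different grid point
print(pdp)
```

Each ICE curve shows where that particular instance switches class, while the PDP averages those switches into a single, smoother trend.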

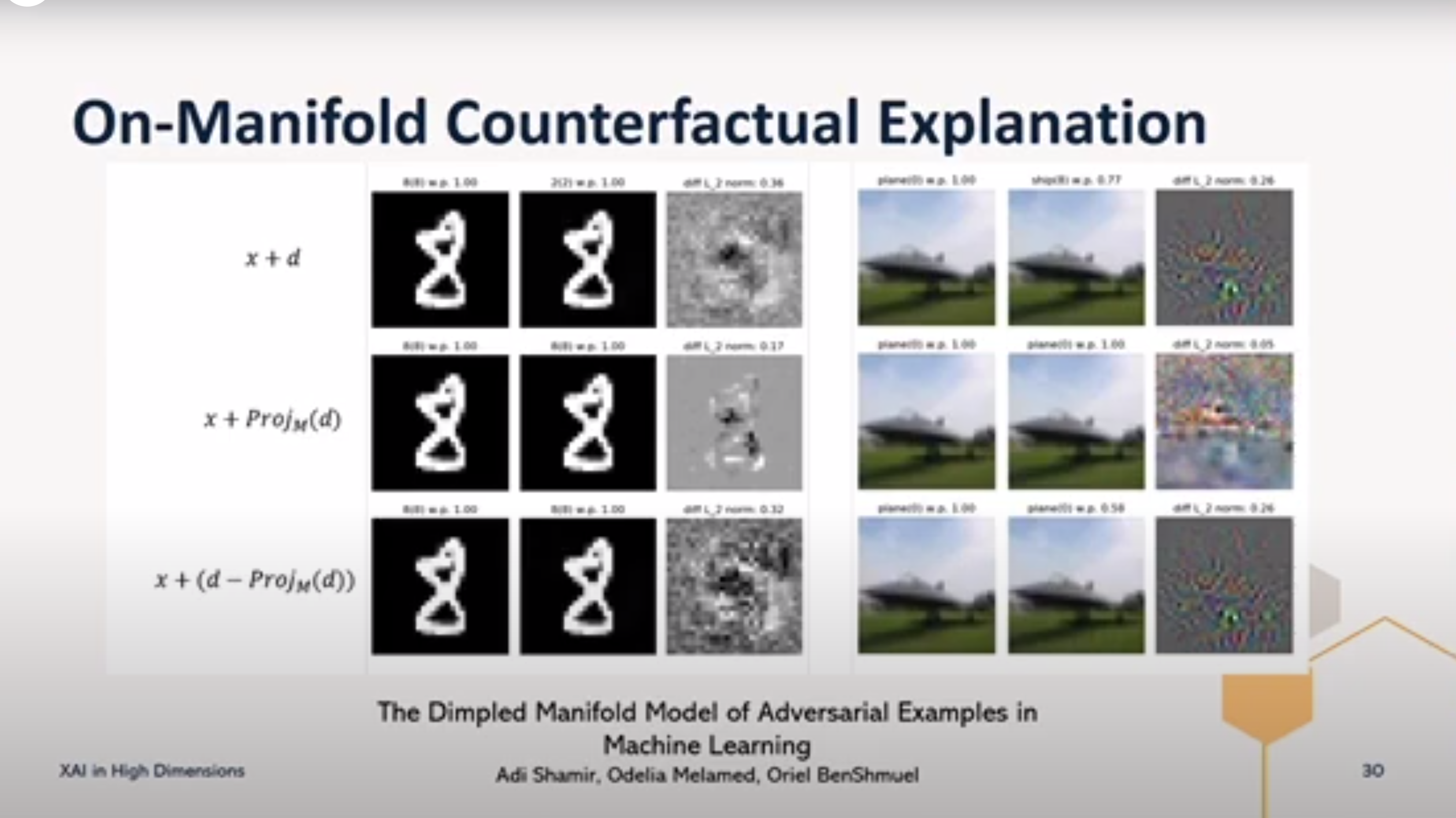

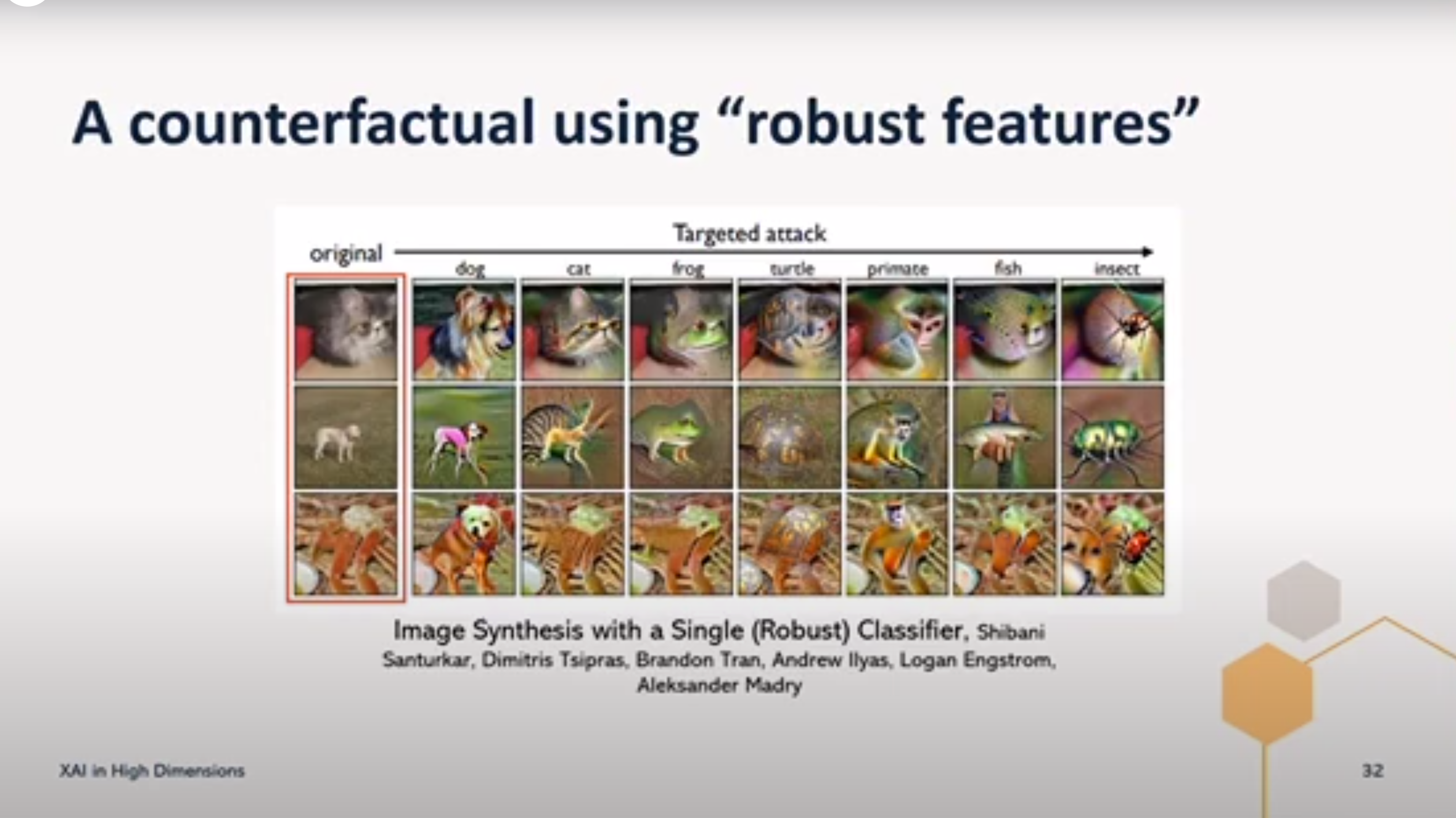

Counterfactuals use the original model’s decision boundary to identify the intervention that would switch the predicted class.
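A minimal sketch of the counterfactual idea, assuming a hypothetical linear classifier and restricting the search to changes in a single feature on a fixed step grid. Real counterfactual methods search over many features and add plausibility constraints; this only shows the "smallest change that flips the class" logic.

```python
def predict_class(x, weights, bias):
    """Hypothetical linear classifier: class 1 when the score is positive."""
    score = sum(w * v for w, v in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def single_feature_counterfactual(x, weights, bias, step=0.25, max_steps=40):
    """Find the smallest change to ONE feature that flips the predicted class.

    Returns (feature index, new value, size of change), or None if no flip
    is found within `max_steps` steps.
    """
    original = predict_class(x, weights, bias)
    best = None
    for i in range(len(x)):
        for k in range(1, max_steps + 1):
            change = k * step
            flipped = [
                x[i] + sign * change
                for sign in (1, -1)
                if predict_class(x[:i] + [x[i] + sign * change] + x[i + 1:],
                                 weights, bias) != original
            ]
            if flipped:
                if best is None or change < best[2]:
                    best = (i, flipped[0], change)
                break  # smallest flipping change for this feature found
    return best

weights, bias = [1.0, -2.0], -1.0
x = [0.5, 0.0]
best = single_feature_counterfactual(x, weights, bias)
print(predict_class(x, weights, bias), best)
```

Here the cheapest intervention is on the second feature, because its larger weight means a smaller change is enough to cross the decision boundary.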

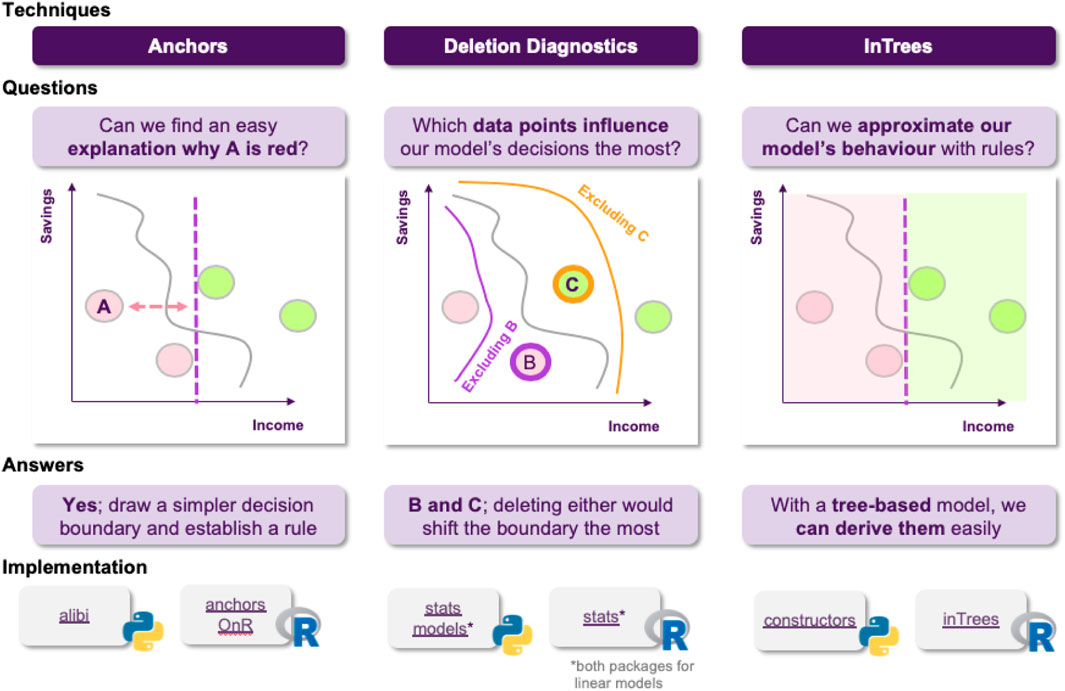

Anchors were introduced in (Ribeiro, Singh, and Guestrin 2018) and are called Scoped Rules in (Molnar 2022, §9.4).
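An anchor is usually judged by its precision (how often the prediction stays the same when the rule holds) and its coverage (how often the rule applies). The sketch below estimates both over a small fixed sample, which is a simplification: the original method estimates them over perturbations of the instance. The model, the rules, and the `(age, income)` data are all hypothetical.

```python
def rule_precision_coverage(rule, predict, instance, samples):
    """Estimate precision and coverage of a candidate anchor rule on `samples`."""
    target = predict(instance)
    covered = [s for s in samples if rule(s)]
    coverage = len(covered) / len(samples)
    if not covered:
        return 0.0, coverage
    precision = sum(predict(s) == target for s in covered) / len(covered)
    return precision, coverage

def toy_model(s):
    # Hypothetical model over (age, income) pairs.
    age, income = s
    return 1 if age > 30 and income > 50 else 0

samples = [(25, 40), (35, 60), (40, 80), (45, 30), (50, 70), (20, 90)]
instance = (40, 80)

loose_rule = lambda s: s[0] > 30                  # "age > 30"
tight_rule = lambda s: s[0] > 30 and s[1] > 50    # "age > 30 AND income > 50"

loose = rule_precision_coverage(loose_rule, toy_model, instance, samples)
tight = rule_precision_coverage(tight_rule, toy_model, instance, samples)
print(loose, tight)
```

The tighter rule reaches full precision at the cost of lower coverage, which is the trade-off an anchor search has to balance.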

Deletion Diagnostics: How would removing the highest-leverage points change the model’s decision boundary?
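The deletion idea can be sketched with a leave-one-out refit. For simplicity this example uses a one-dimensional least-squares line rather than a classifier's decision boundary, and the five data points (including one deliberately extreme point) are made up for illustration.

```python
def fit_line(points):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def deletion_influence(points):
    """Change in slope when each point is left out and the line is refit."""
    _, full_slope = fit_line(points)
    return [
        fit_line(points[:i] + points[i + 1:])[1] - full_slope
        for i in range(len(points))
    ]

# Four points on the line y = x plus one high-leverage outlier.
points = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (10.0, 0.0)]
influence = deletion_influence(points)
most_influential = max(range(len(points)), key=lambda i: abs(influence[i]))
print(influence, most_influential)
```

Removing the outlier restores the slope of the remaining points, so it shows up as by far the most influential observation.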

InTrees: What rules approximate our decision?

XAI Implications

from (DARPA, n.d.)

cf. https://www.darpa.mil/program/explainable-artificial-intelligence

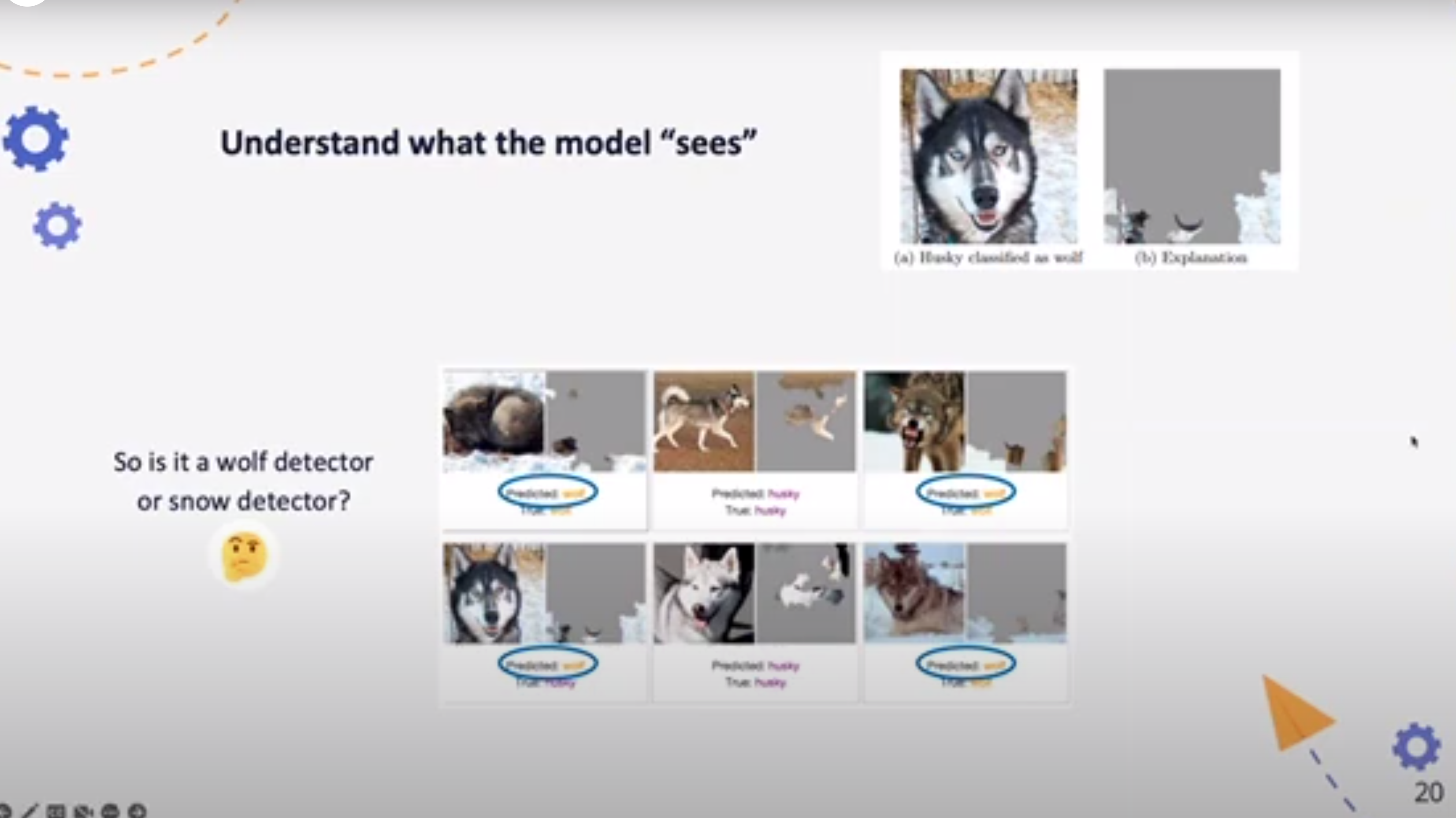

Model Verification

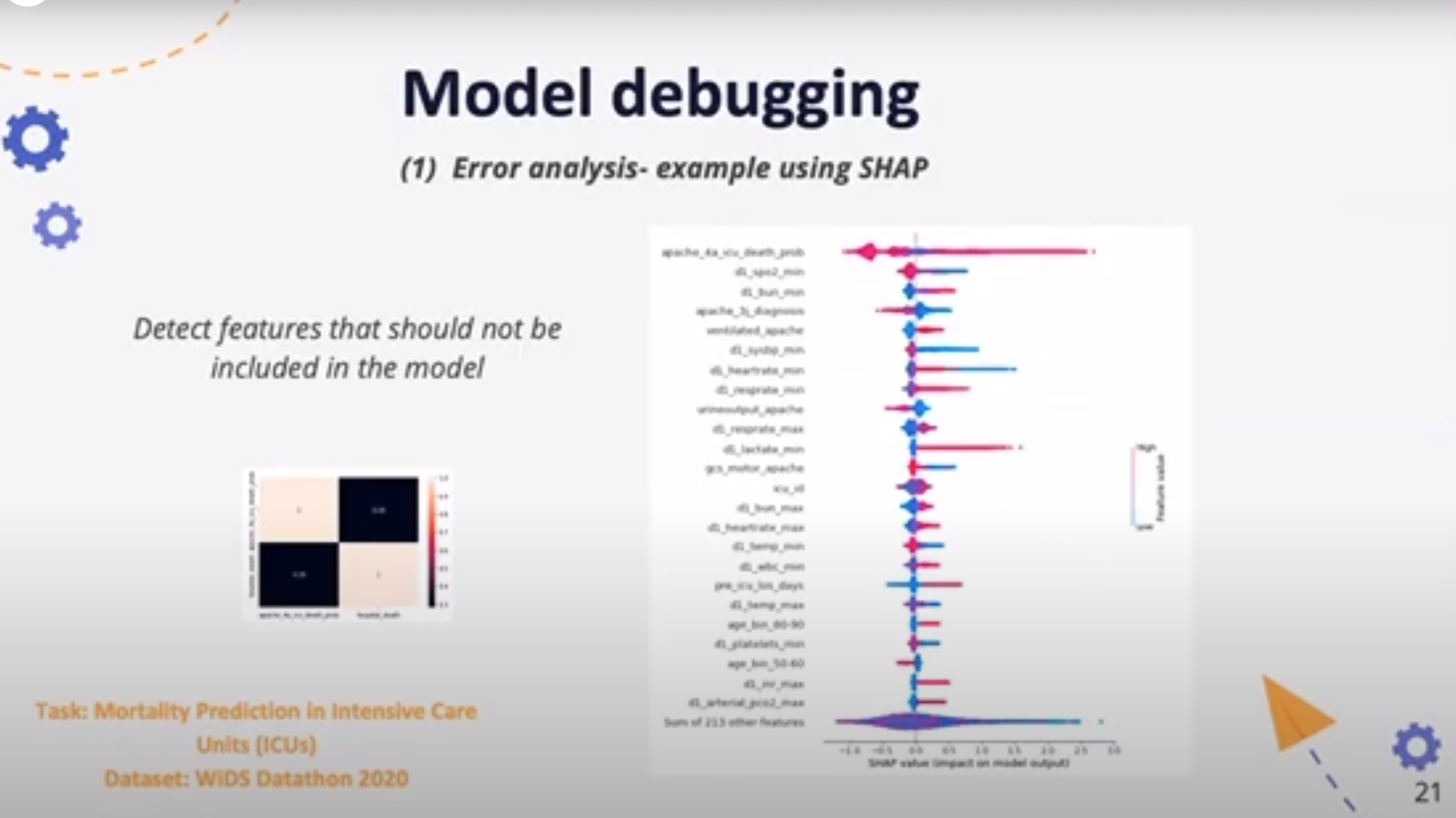

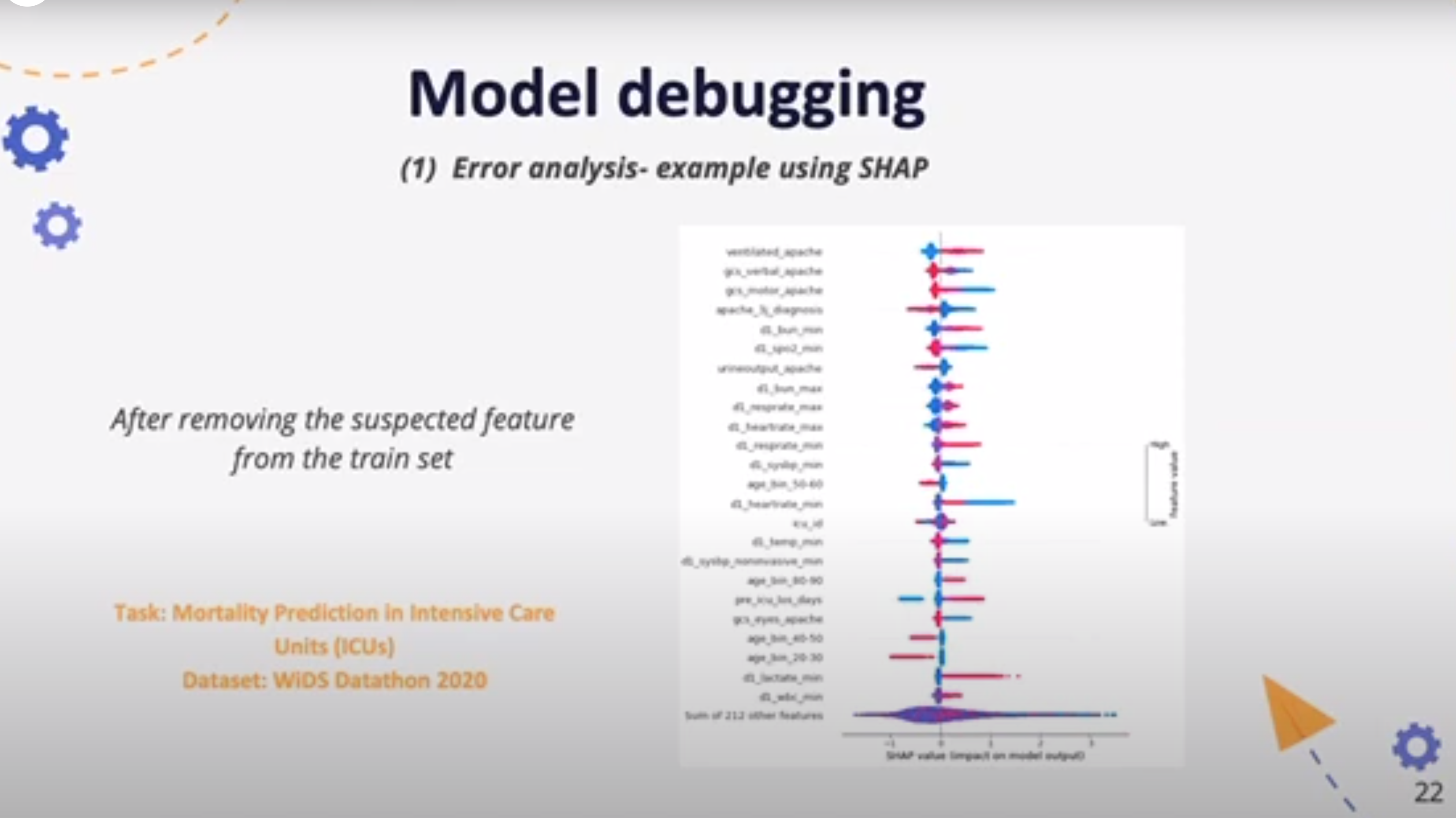

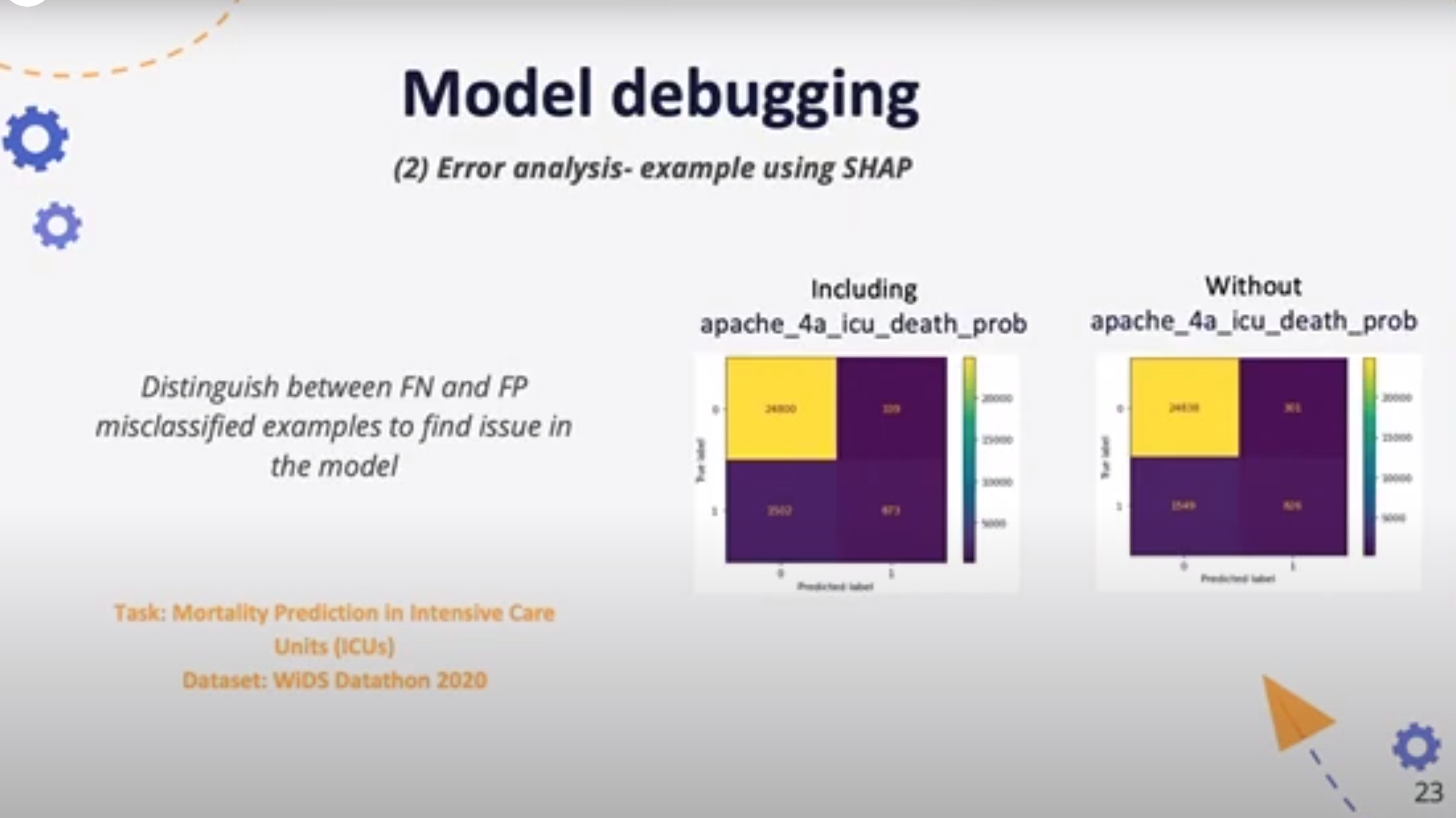

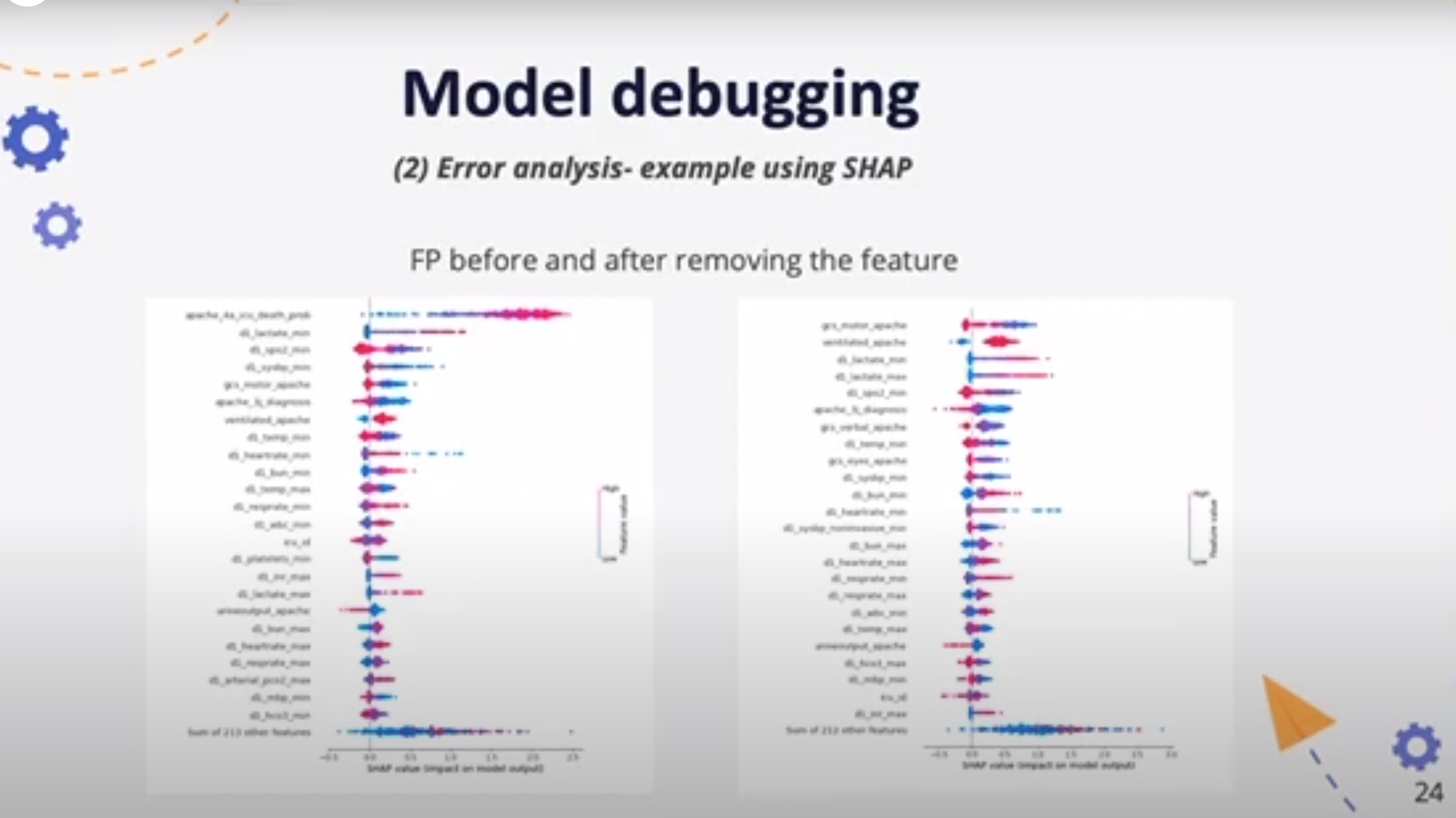

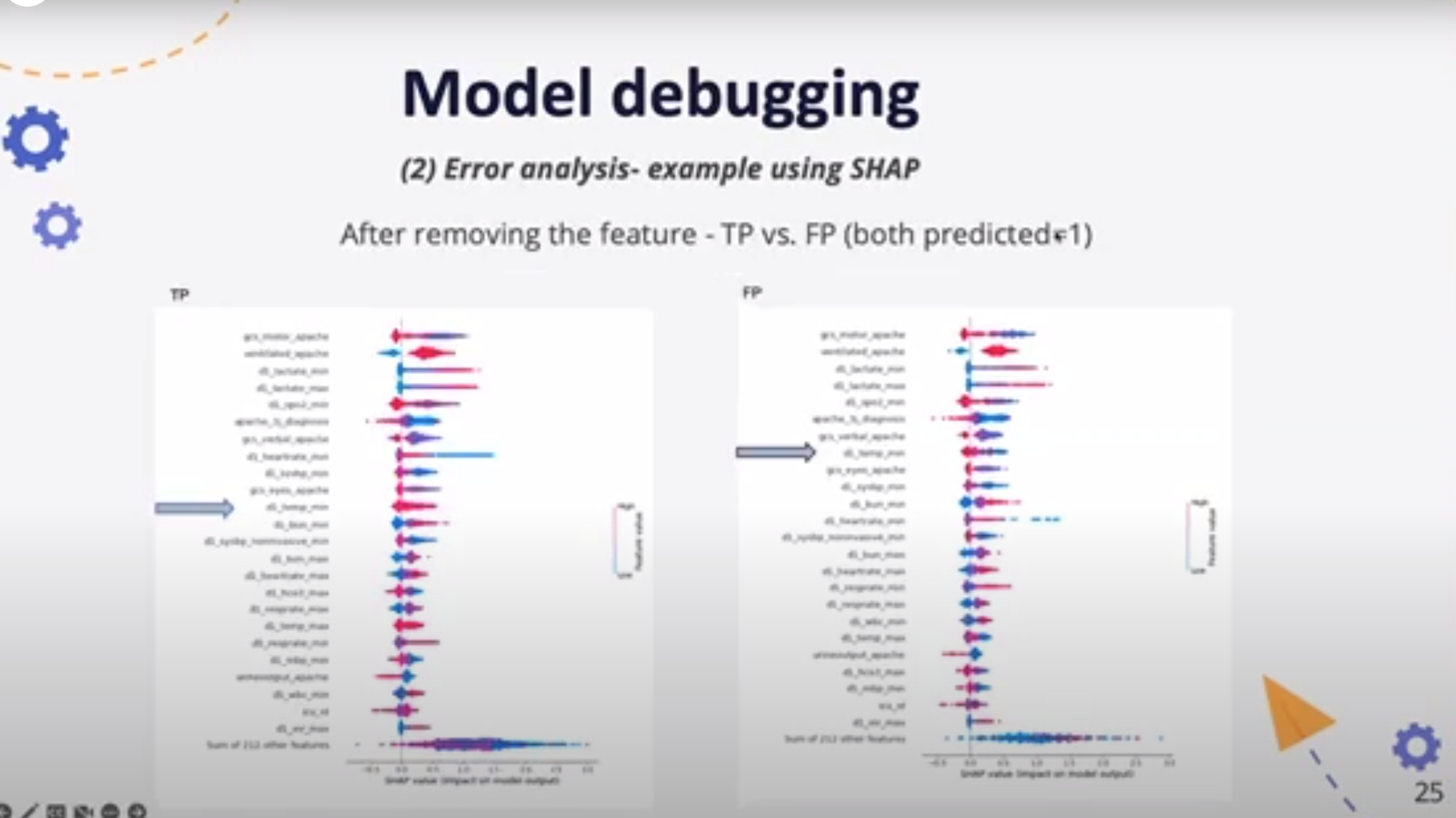

Model Debugging

Look at the model’s errors: false positives and false negatives.

Use SHAP to identify features that should be excluded (see the footnote below).
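The two debugging steps above can be sketched as follows. The labels, predictions, and per-row attribution values are hypothetical; in practice the attributions would come from an explainer such as SHAP, computed elsewhere.

```python
def split_errors(y_true, y_pred):
    """Return row indices of false positives and false negatives (binary labels 0/1)."""
    pairs = list(enumerate(zip(y_true, y_pred)))
    false_pos = [i for i, (t, p) in pairs if t == 0 and p == 1]
    false_neg = [i for i, (t, p) in pairs if t == 1 and p == 0]
    return false_pos, false_neg

def mean_abs_attribution(attributions, rows):
    """Average absolute attribution per feature over the selected rows."""
    n_features = len(attributions[0])
    if not rows:
        return [0.0] * n_features
    return [
        sum(abs(attributions[r][j]) for r in rows) / len(rows)
        for j in range(n_features)
    ]

y_true = [0, 1, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1]
# Hypothetical per-row, per-feature attributions (e.g. SHAP values).
attributions = [
    [0.1, 0.8],
    [0.3, 0.1],
    [0.0, -0.1],
    [-0.4, 0.1],
    [0.2, 0.6],
]

false_pos, false_neg = split_errors(y_true, y_pred)
fp_importance = mean_abs_attribution(attributions, false_pos)
print(false_pos, false_neg, fp_importance)
```

A feature that dominates the attributions on the false positives but not on the correct predictions is a candidate for closer inspection, not an automatic candidate for removal.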

Footnotes

But we should be using causal inference for this, since a fix based on a local explanation will surely affect the whole data set. If we have a causal DAG, we can probably use it to identify nodes that act as confounders on some causal path.

Citation

@online{bochman2023,
  author = {Bochman, Oren},
  title = {Lecture 5 --- {Explainable} {AI} in Practice},
  date = {2023-03-28},
  url = {https://orenbochman.github.io/notes/XAI/l05/},
  langid = {en}
}