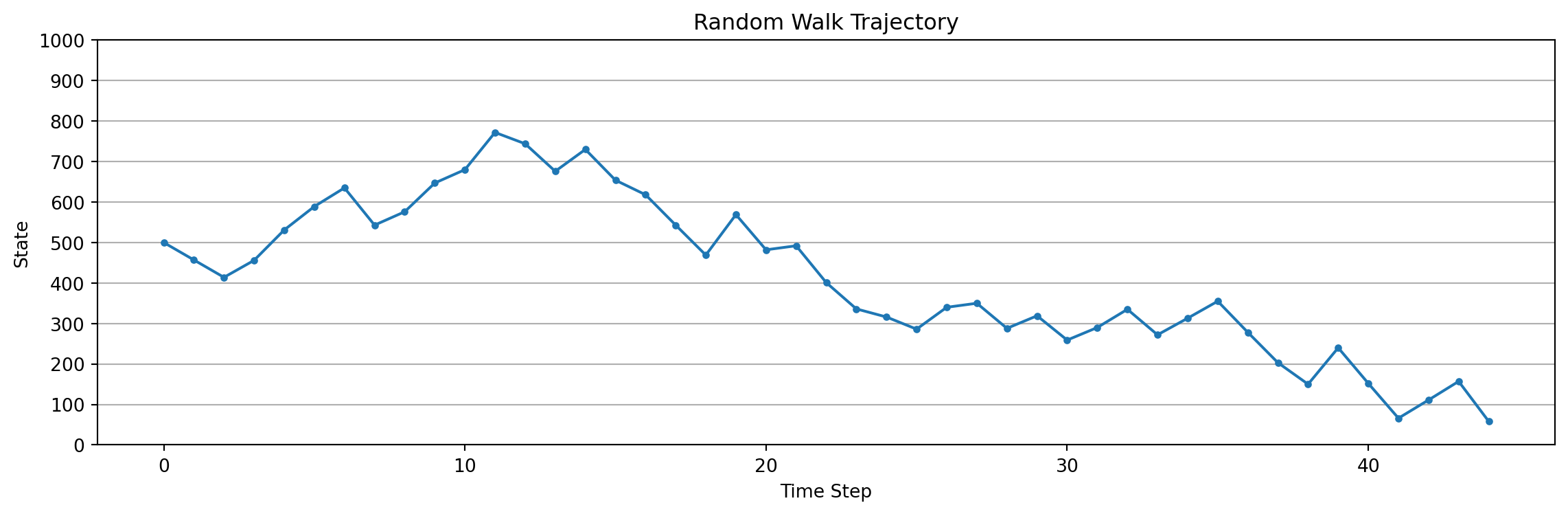

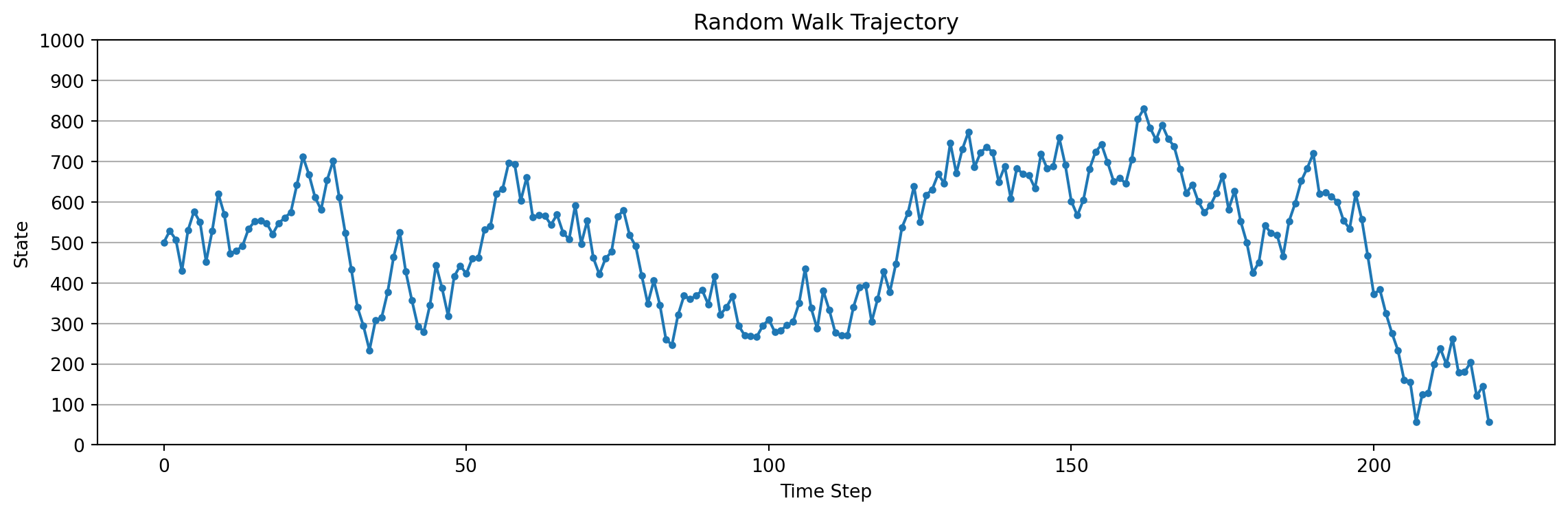

obs=457, action=0, reward=0, terminated=False

obs=414, action=0, reward=0, terminated=False

obs=456, action=1, reward=0, terminated=False

obs=531, action=1, reward=0, terminated=False

obs=589, action=1, reward=0, terminated=False

obs=635, action=1, reward=0, terminated=False

obs=543, action=0, reward=0, terminated=False

obs=576, action=1, reward=0, terminated=False

obs=647, action=1, reward=0, terminated=False

obs=680, action=1, reward=0, terminated=False

obs=772, action=1, reward=0, terminated=False

obs=744, action=0, reward=0, terminated=False

obs=676, action=0, reward=0, terminated=False

obs=730, action=1, reward=0, terminated=False

obs=654, action=0, reward=0, terminated=False

obs=618, action=0, reward=0, terminated=False

obs=543, action=0, reward=0, terminated=False

obs=469, action=0, reward=0, terminated=False

obs=569, action=1, reward=0, terminated=False

obs=482, action=0, reward=0, terminated=False

obs=492, action=1, reward=0, terminated=False

obs=401, action=0, reward=0, terminated=False

obs=336, action=0, reward=0, terminated=False

obs=316, action=0, reward=0, terminated=False

obs=286, action=0, reward=0, terminated=False

obs=340, action=1, reward=0, terminated=False

obs=350, action=1, reward=0, terminated=False

obs=288, action=0, reward=0, terminated=False

obs=319, action=1, reward=0, terminated=False

obs=259, action=0, reward=0, terminated=False

obs=290, action=1, reward=0, terminated=False

obs=335, action=1, reward=0, terminated=False

obs=272, action=0, reward=0, terminated=False

obs=313, action=1, reward=0, terminated=False

obs=355, action=1, reward=0, terminated=False

obs=278, action=0, reward=0, terminated=False

obs=203, action=0, reward=0, terminated=False

obs=150, action=0, reward=0, terminated=False

obs=240, action=1, reward=0, terminated=False

obs=152, action=0, reward=0, terminated=False

obs=66, action=0, reward=0, terminated=False

obs=111, action=1, reward=0, terminated=False

obs=157, action=1, reward=0, terminated=False

obs=58, action=0, reward=0, terminated=False

obs=0, action=0, reward=-1, terminated=True
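A log in this format can be summarized programmatically, for example to check episode length and return. The sketch below assumes every step line follows the `obs=…, action=…, reward=…, terminated=…` pattern shown above. The names `parse_log`, `summarize_episode` and `Step` are hypothetical helpers written for illustration. They are not part of any environment library.

```python
import re
from dataclasses import dataclass

# Matches one log line such as "obs=457, action=0, reward=0, terminated=False".
# Assumption: obs and action are integers; reward may be an int or a float.
LINE_RE = re.compile(
    r"obs=(-?\d+),\s*action=(-?\d+),\s*reward=(-?\d+(?:\.\d+)?),\s*terminated=(True|False)"
)


@dataclass
class Step:
    obs: int
    action: int
    reward: float
    terminated: bool


def parse_log(text: str) -> list[Step]:
    """Extract every step from a log, skipping blank or non-matching lines."""
    steps = []
    for line in text.splitlines():
        m = LINE_RE.search(line)
        if m:
            obs, action, reward, term = m.groups()
            steps.append(Step(int(obs), int(action), float(reward), term == "True"))
    return steps


def summarize_episode(steps: list[Step]) -> dict:
    """Hypothetical helper: episode length, undiscounted return, action counts."""
    counts: dict[int, int] = {}
    for s in steps:
        counts[s.action] = counts.get(s.action, 0) + 1
    return {
        "length": len(steps),
        "return": sum(s.reward for s in steps),
        "action_counts": counts,
        # An empty log counts as "not terminated".
        "terminated": bool(steps) and steps[-1].terminated,
        "final_obs": steps[-1].obs if steps else None,
    }


if __name__ == "__main__":
    # The last three entries of the log above.
    sample = """
obs=157, action=1, reward=0, terminated=False

obs=58, action=0, reward=0, terminated=False

obs=0, action=0, reward=-1, terminated=True
"""
    print(summarize_episode(parse_log(sample)))
```

Applied to the full log above, this would report 45 steps and a return of -1.0. The only nonzero reward is the -1 received on the final, terminating step at `obs=0`.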